The State of HTML 2023 survey results are now live!

Believe it or not, I've been around in web development long enough to remember a time when HTML was exciting.

I still recall my excitement the first time I coded something up and FTP'd it to a server. Knowing that people throughout the world could see my Fallout fan page (complete with visitor counter) was just magical!

But let's be honest: since then, HTML has often felt like an afterthought compared to its cooler siblings, CSS and JavaScript.

Why HTML?

So why care about an HTML survey, then? Well, first of all, I have to come clean about something: the survey wasn't just about HTML. Instead, you can think of it as covering everything that the existing State of JavaScript and State of CSS surveys didn't.

But as it turns out, between the new Popover API, selectlist, and many more features, even HTML itself is now seeing the same kinds of exciting evolutions that JavaScript and CSS have been experiencing recently.

What Took So Long?

This is why it made sense to publish an HTML survey in 2023. And this is why… Hmm? What's that? It's not 2023 anymore, you say? Hasn't been for over five months now?

Well, it's true the survey results took a tiny bit longer to compile than I had anticipated. But without trying to make excuses, there are a couple of good reasons behind that delay.

New Survey

This was the first time we ever ran this survey, and that meant a lot of work to come up with the right questions. In fact, you can go all the way back to 2022 to see our first discussions about the topic with the community (even though the surveys are ultimately run by a pretty small team, we do try to involve as many external people as possible throughout the whole process).

Luckily, thanks to financial support from the Google Chrome team, Lea Verou was able to leverage her considerable experience with the web platform and take the lead on designing the survey.

New UI

As we were gathering feedback from various stakeholders, there was also a strong push to improve our main question-asking format.

We previously used a branching format disguised as a multiple-choice question in order to collect finer-grained responses:

But this required parsing five relatively complex sentences. So instead, Lea invented a brand-new component (one that, as far as I know, hasn't been used anywhere before) that lets respondents input two-dimensional data, experience and sentiment, with a single click by leveraging hover states:

I was skeptical about this new control at first, but user testing showed that the majority of testers found it fairly intuitive and even enjoyable to use.

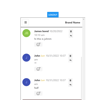
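To make the idea of "two-dimensional data in a single click" a bit more concrete, here is a minimal TypeScript sketch of the kind of answer such a control could record. The level names (`never_heard`, `heard`, `used`, and `positive`/`neutral`/`negative`) and the helpers `allChoices` and `recordClick` are hypothetical, not the survey's actual schema or code; the point is only that each click captures both dimensions at once.

```typescript
// Hypothetical first dimension: how familiar the respondent is with a feature.
type Experience = "never_heard" | "heard" | "used";
// Hypothetical second dimension: how they feel about it, picked via hover.
type Sentiment = "positive" | "neutral" | "negative";

interface FeatureAnswer {
  feature: string;
  experience: Experience;
  sentiment: Sentiment;
}

const EXPERIENCES: Experience[] = ["never_heard", "heard", "used"];
const SENTIMENTS: Sentiment[] = ["positive", "neutral", "negative"];

// Enumerate every (experience, sentiment) pair the control can produce.
function allChoices(feature: string): FeatureAnswer[] {
  const choices: FeatureAnswer[] = [];
  for (const experience of EXPERIENCES) {
    for (const sentiment of SENTIMENTS) {
      choices.push({ feature, experience, sentiment });
    }
  }
  return choices;
}

// One click on a hover-revealed option records both values together.
// Clicking the experience option without a hover choice defaults to neutral.
function recordClick(
  feature: string,
  experience: Experience,
  sentiment: Sentiment = "neutral",
): FeatureAnswer {
  return { feature, experience, sentiment };
}

console.log(allChoices("popover").length); // 9
console.log(recordClick("popover", "used", "positive"));
```

Under these assumptions, three experience levels times three sentiments gives nine possible answers per feature, each reachable with one click instead of by reading and comparing several long sentences.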

Lea also came up with a clever way to let respondents split up freeform answers instead of entering multiple thoughts in a single text field:

New Data

All these thoughtful improvements delayed the survey, which ended up running from September 19th to October 19th, 2023, instead of during the summer as planned.

The upside, though, was that the survey was very successful: with over 20,000 responses, it beat out the long-running State of CSS survey and even came close to equalling the State of JS.

But we now had a problem (and by "we" I mean I had a problem). I was now face to face with 20,000 responses, each containing dozens of answers, including many freeform questions that supported their own sub-answers as outlined above.

As an example, that “forms pain point” question got 11,357 answers. And that's not 11,357 people checking box A or box B, that's 11,357 distinct freeform comments covering all the things people hate about HTML forms.

In total, the survey collected 69,053 freeform answers across all questions.

I ended up building a dedicated dashboard just to make parsing all this data slightly easier, but it still took months:

New Visualizations

It was only after all this was done that I could finally start the process of working on the survey results.

But once again, I hit a wall. Now that we were collecting experience ("I've used the Popover API") and sentiment ("I would use it again") data together, I needed a way to display them together as well.
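As a rough illustration of what "displaying them together" involves on the data side, here is a minimal sketch that cross-tabulates answers by experience and then by sentiment, so that a chart can show, say, how many people who used a feature would use it again. The `FeatureAnswer` shape, the helper names, and the sample records are hypothetical and made up for the example; this is not the survey's actual pipeline.

```typescript
// Hypothetical answer shape: both dimensions are stored on each response.
type Experience = "never_heard" | "heard" | "used";
type Sentiment = "positive" | "neutral" | "negative";

interface FeatureAnswer {
  experience: Experience;
  sentiment: Sentiment;
}

// Nested counts: experience level -> sentiment -> number of respondents.
type CrossTab = Record<Experience, Record<Sentiment, number>>;

function crossTabulate(answers: FeatureAnswer[]): CrossTab {
  const emptyRow = (): Record<Sentiment, number> => ({ positive: 0, neutral: 0, negative: 0 });
  const table: CrossTab = { never_heard: emptyRow(), heard: emptyRow(), used: emptyRow() };
  for (const { experience, sentiment } of answers) {
    table[experience][sentiment] += 1;
  }
  return table;
}

// Share of one experience bucket that is positive (0 when the bucket is empty).
function positiveShare(table: CrossTab, experience: Experience): number {
  const row = table[experience];
  const total = row.positive + row.neutral + row.negative;
  return total === 0 ? 0 : row.positive / total;
}

// Made-up sample records, for illustration only.
const sample: FeatureAnswer[] = [
  { experience: "used", sentiment: "positive" },
  { experience: "used", sentiment: "negative" },
  { experience: "heard", sentiment: "positive" },
  { experience: "used", sentiment: "positive" },
];
const table = crossTabulate(sample);
console.log(table.used); // { positive: 2, neutral: 0, negative: 1 }
console.log(positiveShare(table, "used"));
```

Keeping the counts nested (experience first, sentiment second) maps naturally onto a bar per experience level that is itself split into sentiment segments.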

Up to now we had been using the excellent Nivo dataviz library for React, but I wasn't sure how to customize it to support such a specific use case, or even if it was possible at all:

So I did the only sensible thing when you're already overworked and months behind schedule: I decided to rewrite everything from scratch.

This time, though, I didn't use SVG, Canvas, or some other fancy rendering technique. Instead, fittingly enough, I built the charts with plain old HTML/CSS!

The main reason is that HTML/CSS is still unequalled when it comes to building responsive UIs. By using grid/subgrid I was able to create charts that can adapt to the viewport width to stay legible even on smaller screens, instead of simply shrinking down or relying on scrollbars.
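Here is a small sketch of the general technique: a horizontal bar chart built from plain HTML and CSS grid, where each row uses `grid-template-columns: subgrid` so all labels share one column track, and a media query collapses the layout to a single column on narrow screens. It is written as a self-contained TypeScript function that returns an HTML string rather than as React components like the survey's real charts; the class names, the 480px breakpoint, and the sample values are all assumptions made for illustration.

```typescript
interface Bar {
  label: string;
  value: number; // percentage, expected in the 0-100 range
}

// Illustrative stylesheet; class names and breakpoint are assumptions.
const CHART_CSS = `
.chart { display: grid; grid-template-columns: max-content 1fr; gap: 0.5rem; }
/* Each row spans the whole chart and reuses the parent's column tracks. */
.chart .row { grid-column: 1 / -1; display: grid; grid-template-columns: subgrid; align-items: center; }
.chart .bar { background: currentColor; min-height: 1em; }
/* On narrow viewports, use one column so each label sits above its bar. */
@media (max-width: 480px) {
  .chart { grid-template-columns: 1fr; }
}`;

// Escape label text so it cannot break the generated markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

function renderBarChart(bars: Bar[]): string {
  const rows = bars
    .map((bar) => {
      // Clamp so an out-of-range value cannot overflow the bar track.
      const width = Math.max(0, Math.min(100, bar.value));
      return (
        `<div class="row">` +
        `<span class="label">${escapeHtml(bar.label)}</span>` +
        `<div class="bar" style="width: ${width}%"></div>` +
        `</div>`
      );
    })
    .join("\n");
  return `<style>${CHART_CSS}</style>\n<div class="chart">\n${rows}\n</div>`;
}

console.log(
  renderBarChart([
    { label: "Feature A", value: 30 },
    { label: "<dialog>", value: 75 },
  ]),
);
```

Because the layout is handled by CSS grid rather than drawn into an SVG or canvas, the same markup rearranges itself at the breakpoint instead of shrinking as a whole; styles live in the stylesheet rather than inline so the media query can override the column template.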

Another cool bonus was the ability to inject React components anywhere in the chart, such as these info popovers that are available for some items:

But of course, the biggest perk of rewriting everything was that I could now create tailor-made charts for our new data model:

And there are many other cool new features, such as nested bar charts:

But I'll let you discover all of them in the actual survey.

Note: you can also learn more about the new features in this short YouTube video:

https://www.youtube.com/watch?v=G-rFwp2zUT4

What's Next

I'm very excited to see how the community uses all this data to find new insights (I haven't even mentioned that all the charts support dynamic filtering via our API!), as well as how browser vendors leverage the results to inform initiatives such as Interop 2024.

So whether you publish a blog post about the survey, upload a video reacting to the results, or even create new data visualizations based on this data, let us know by leaving a comment here or, even better, joining our Discord.

State of JS & State of React

Of course, you're probably wondering when the other two pending surveys (State of JavaScript and State of React 2023) are coming out.

The bad news is, I've yet to get started on processing and publishing that data.

But the good news is, with all the new infrastructure that was built to get the State of HTML out the door, it should hopefully not take too long!

So you can look forward to both surveys being released between now and the end of June. As they say, better six months late than never!

And once that's done, well… it'll be right about time for the 2024 surveys!

Top comments

Hey, awesome work!

But why didn't you open up the labeling of the responses to the open-source community? For example, you could have published the original dataset on a labeling platform, and people would have been able to label those freeform responses.

BTW, I've worked as a data engineer, and if you're interested, we can find you a suitable open-source, self-hosted data-labeling platform. There are quite a few, so you wouldn't have to spend as much time writing everything from scratch and doing all the work with one pair of hands.

We did try that, but simply put we didn't find enough people willing to put in the time required to learn the tool and go through the data.

It's true I didn't explore existing data-labeling solutions (happy to check out some suggestions if you have any), but I often worry that integrating a third-party solution into our workflow would end up taking almost as much time as building something from scratch, and would end up being less tailored to our use case (I might very well be wrong, though).

The gender distribution is a lot more unbalanced than I would have expected. It makes me wonder whether the real demographics actually look as sad as in the survey, or whether there's some bias at play, like women being less confident that their opinion is worth sharing.

Either way, barely 1/10 of respondents not being men is... worrying.

The same goes for race, although that might be due more to language barriers and other factors.

Thanks a lot for the detailed explanations! It was very useful and interesting!