Do you ever tackle a problem and know that you’ve just spent way too much time on it? But you also know that it was worth it? This post (which is also long!) sums up my recent experience with exactly that type of problem.

tl;dr

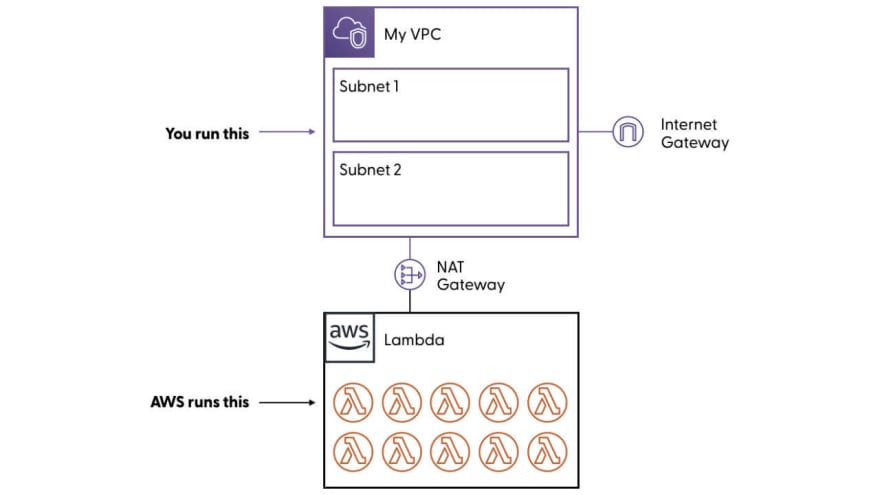

- AWS Lambda has a storage limit of 512MB for /tmp

- AWS Lambda functions need to be in a VPC to connect to an Amazon EFS filesystem

- AWS Lambda functions within a VPC require a NAT gateway to access the internet

- Amazon EC2 instances can use cloud-init to run a custom script on boot

- A solution needs to address all five pillars of the AWS Well-Architected Framework in order to be the “best”

Read on to find out the whole story…

The Problem

I love learning and want to keep tabs on several key areas. Ironically, tabs themselves are a massive issue for me.

Every morning, I get a couple of tailored emails from a service called Mailbrew. Each of these emails contains the latest results from Twitter queries, subreddits, and key websites (via RSS).

The problem is that I want to track a lot of websites and Mailbrew only supports adding sites one-by-one.

This leads to a problem statement of…

Combine N feeds into one super feed

Constraints

Ideally these super feeds would be published on my website. That site is built in Hugo and deployed to Amazon S3 with Cloudflare in front. This setup is ideal for me.

Measured against the AWS Well-Architected Framework, it’s highly performant, low cost, has minimal operational burden, a strong security posture, and is very reliable. It’s a win across all five pillars.

Adding the feeds to this setup shouldn’t compromise any of these attributes.

I think it’s important to point out that there are quite a few services out there that combine feeds for you. RSSUnify and RSSMix come to mind, but there are many, many others…

The nice thing about Hugo is that it uses text files to build out your site, including custom RSS feeds. The solution should write these feed items as unique posts (a/k/a text files) in my Hugo content directory structure.
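To make the “unique posts” idea concrete, here’s a minimal sketch of writing one feed item as a Hugo post. It assumes each item is a dict with hypothetical `title`, `link`, and `published` keys, and it names the file after a hash of the link so re-running the script overwrites an item instead of duplicating it. The function name and front matter fields are illustrative, not taken from the author’s script.

```python
import hashlib
import json
from pathlib import Path


def write_hugo_post(entry, content_dir):
    """Write one feed item as a Hugo Markdown post and return its path.

    `entry` is assumed to be a dict with "title", "link", and "published"
    (a timezone-aware datetime). These keys are illustrative, not a fixed schema.
    """
    # Hash the item's link so the same item always maps to the same file name;
    # re-running the script overwrites the post instead of duplicating it.
    slug = hashlib.sha256(entry["link"].encode("utf-8")).hexdigest()[:16]
    path = Path(content_dir) / f"{slug}.md"

    # JSON-encoded strings are valid YAML double-quoted scalars, so titles
    # containing quotes or colons don't break the front matter.
    front_matter = "\n".join([
        "---",
        f"title: {json.dumps(entry['title'])}",
        f"date: {entry['published'].isoformat()}",
        f"link: {json.dumps(entry['link'])}",
        "---",
        "",
    ])
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(front_matter, encoding="utf-8")
    return path
```

Custom keys like `link` end up in Hugo’s `.Params`, so a feed template could point each item back at the original article.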

🐍 Python To The Rescue

A quick little python script (available here) and I’ve got a tool that takes a list of feeds and writes them into unique Hugo posts.
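The heart of a script like this is a merge: read every feed, drop items that appear in more than one, and sort newest first. This sketch uses only the standard library (ElementTree for RSS 2.0, `email.utils` for RFC 822 dates) rather than feedparser, which the author’s setup installs. It assumes every item has a `pubDate` with an explicit offset; for feeds you don’t control, a hardened parser such as feedparser or defusedxml is the safer choice.

```python
import xml.etree.ElementTree as ET
from email.utils import parsedate_to_datetime


def parse_rss_items(xml_text):
    """Yield (published, title, link) tuples from an RSS 2.0 document."""
    root = ET.fromstring(xml_text)
    for item in root.iter("item"):
        yield (
            # Assumes pubDate is present and carries a timezone offset.
            parsedate_to_datetime(item.findtext("pubDate")),
            item.findtext("title", default=""),
            item.findtext("link", default=""),
        )


def combine_feeds(feed_documents):
    """Merge N RSS documents into one list, newest first, de-duplicated by link."""
    seen = {}
    for xml_text in feed_documents:
        for published, title, link in parse_rss_items(xml_text):
            # Keep the first copy of an item that shows up in several feeds.
            seen.setdefault(link, (published, title, link))
    return sorted(seen.values(), key=lambda item: item[0], reverse=True)
```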

Problem? Solved.

Hmmm…I forgot these feeds need to be kept up to date and my current build pipeline (a GitHub Action) doesn’t support running on a schedule.

Besides, trying to run that code in the action is going to require another event to hook into or it’ll get stuck in an update loop as the new feed items are committed to the repo.

New problem statement…

Run a python script on demand and on a set schedule

This feels like a good problem for a serverless solution.

AWS Lambda

I immediately thought of AWS Lambda. I run a crazy amount of little operational tasks just like this using AWS Lambda functions triggered by a scheduled Amazon CloudWatch Event. It’s a strong, simple pattern.

It turns out that getting a Lambda function to access a git repo isn’t very straightforward. I’ll save you the sob story, but here’s how I got it running using a Python 3.8 runtime:

- add the git binaries as a Lambda Layer, I used git-lambda-layer

- use the GitPython module

That allows a simple code setup like this:

```python
import git  # GitPython

# REPO_URL_WITH_AUTH and LOCAL_PATH are placeholders for your own values
repo = git.Repo.clone_from(REPO_URL_WITH_AUTH, LOCAL_PATH)

...
# do some other work, like collect RSS feeds
...

for fn in repo.untracked_files:
    print("File {} hasn't been added to the repo yet".format(fn))

# You can also use git commands directly-ish
repo.git.add('.')
repo.git.commit('-m', 'Updated from python')
```

This makes it easy enough to work with a repo. With a little bit of hand-wavy magic, I wired a Lambda function up to a scheduled CloudWatch Event and I was done.

…until I remembered (and by “remembered”, I mean the function threw an exception) the Lambda /tmp storage limit of 512MB. The repo for my website is around 800MB and growing.
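If the repo size is roughly known, a cheap guard is to check free space before cloning so the function fails fast with a readable message instead of partway through the clone. A minimal sketch; the 10% headroom and the size constant are assumptions, and the clone itself is elided.

```python
import shutil

REPO_SIZE_BYTES = 800 * 1024 * 1024  # roughly the repo size mentioned above


def has_room_for(path, needed_bytes, headroom=0.10):
    """True if `path` has `needed_bytes` free plus a safety margin."""
    return shutil.disk_usage(path).free >= needed_bytes * (1 + headroom)


def lambda_handler(event, context):
    # Fail fast with a readable error instead of an exception mid-clone.
    if not has_room_for("/tmp", REPO_SIZE_BYTES):
        raise RuntimeError("Not enough space in /tmp to clone the website repo")
    # ... clone, collect feeds, commit ...
```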

Amazon EFS

Thankfully, AWS just released a new integration between Amazon EFS and AWS Lambda. I followed the relatively simple process to get this set up.

I hit two big hiccups.

The first: for a Lambda function to connect to an EFS file system, both need to be “in” the same VPC. This is easy enough to do whether or not you already have a VPC set up. We’re going to come back to this one in a second.

The second issue was that I initially set the path for the EFS access point to /. There wasn’t a warning (that I saw) in the official documentation, but an offhand remark in a fantastic post by Peter Sbarski highlighted this problem.

That was a simple fix (I went with /data) but the VPC issue brought up a bigger challenge.

The simplest of VPCs that will solve this problem is one or two subnets with an internet gateway configured. This structure is free and only incurs charges for inbound/outbound data transfer.

Except that my Lambda function needs internet access and that requires one more piece.

That piece is a NAT gateway. No big deal, it’s a one-click deploy and a simple routing change. The new problem is cost.

The need for a NAT gateway makes complete sense. Lambda runs adjacent to your network structure. Routing those functions into your VPC requires an explicit structure. From a security perspective, we don’t want an implicit connection between our private network (VPC) and other random bits of AWS.

Well-Architected Principles

This is where things really start to go off the rails. As mentioned above, the Well-Architected Framework is built on five pillars:

- Operational excellence

- Security

- Reliability

- Performance efficiency

- Cost optimization

The AWS Lambda + Amazon EFS route continues to perform well in all of the pillars except for one; cost optimization.

Why? Well, I use accounts and VPCs as strong security barriers. So the VPC that this Lambda function and EFS filesystem are running in is only for this solution. The NAT gateway would only be used during the build of my site.

The cost of a NAT gateway per month? $32.94 + bandwidth consumed.

That’s not an unreasonable amount of money until you put that in the proper context of the project. The site costs less than $0.10 per month to host. If we add in the AWS Lambda function + EFS filesystem, that skyrockets to $0.50 per month 😉.

That NAT gateway is looking very unreasonable now
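The $32.94 figure is simply the hourly NAT gateway charge over a 732-hour month. A quick check, assuming the us-east-1 rate of $0.045/hour (per-GB data processing is billed on top and left out here):

```python
# Assumption: us-east-1 NAT gateway rate of $0.045/hour. Per-GB data
# processing charges are billed on top and are not included here.
NAT_HOURLY_USD = 0.045
HOURS_PER_MONTH = 24 * 30.5  # 732 hours, the month length used in the post


def nat_gateway_monthly_cost(hourly=NAT_HOURLY_USD, hours=HOURS_PER_MONTH):
    """Hourly charge for a NAT gateway that stays up all month."""
    return hourly * hours


print(f"${nat_gateway_monthly_cost():.2f}/month plus data processing")
```

Against a site that costs under $0.10/month to host, that one line item is more than 300x the hosting bill.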

Alternatives

Easy alternatives to AWS Lambda for computation are AWS Fargate and good old Amazon EC2. As much as everyone would probably lean towards containers and I’ve heard people say it’s the next logical choice…

💯 agree with @timbray’s prioritization...here’s a similar one for compute:

- don’t use instances

- use @awscloud Lambda if you can

- look hard at Fargate, it’s getting better & better 😉

- if you have to use instances, automate everything, including shutdown

#devops twitter.com/timbray/status… (11:17 AM - 18 Jun 2020)

Tim Bray (@timbray): Corey’s database roundup is good: https://t.co/cvEqIuZ2aL But I can make it simpler: 1. Don't use relational 2. Use Dynamo if you can 3. Look hard at QLDB. It’s excellent. 4. If you *have* to use relational, use Aurora Serverless. @QuinnyPig

…I went old school and started to explore what a solution in EC2 would look like.

For an Amazon EC2 instance to access the internet, it only needs to be in a public subnet of a VPC with an internet gateway. No NAT gateway needed. This removes the $32.94 each month but does put us into a more expensive compute range.

But can we automate this easily? Is this going to be a reliable solution? What about the security aspects?

Amazon EC2 Solution

The 🔑 key? Remembering the user-data feature of EC2 and that all AWS-managed AMIs support cloud-init.

This provides us with 16KB of space to configure an instance on the fly to accomplish our task. That should be plenty. If you haven't figured it out from my profile pic, I'm approaching the greybeard powers phase of my career 🧙‍♂️.

A quick bit of troubleshooting (lots of instances firing up and down), and I ended up with this bash script (yup, bash) to solve the problem:

```bash
#!/bin/bash
sleep 15

sudo yum -y install git
sudo yum -y install python3
sudo pip3 install boto3
sudo pip3 install dateparser
sudo pip3 install feedparser

# Quoted delimiter: write the file verbatim (no shell expansion, no tab stripping)
cat > /home/ec2-user/get_secret.py << 'PY_FILE'
# Standard libraries
import base64
import json
import sys

# 3rd party libraries
import boto3
from botocore.exceptions import ClientError


def get_secret(secret_name, region_name):
    session = boto3.session.Session()
    client = session.client(service_name='secretsmanager', region_name=region_name)
    try:
        get_secret_value_response = client.get_secret_value(SecretId=secret_name)
    except ClientError as e:
        # stdout becomes part of the clone URL, so report errors on stderr
        print(e, file=sys.stderr)
        sys.exit(1)

    if 'SecretString' in get_secret_value_response:
        secret = get_secret_value_response['SecretString']
    else:
        secret = base64.b64decode(get_secret_value_response['SecretBinary']).decode('utf-8')
    return json.loads(secret)


if __name__ == '__main__':
    github_token = get_secret(secret_name="GITHUB_TOKEN", region_name="us-east-1")['GITHUB_TOKEN']
    print(github_token)
PY_FILE

# user-data doesn't run from /home/ec2-user, so use the script's full path
git clone https://$(python3 /home/ec2-user/get_secret.py):x-oauth-basic@github.com/USERNAME/REPO /home/ec2-user/website

python3 RUN_MY_FEED_UPDATE_SCRIPT

cd /home/ec2-user/website

# Build my website
/home/ec2-user/website/bin/hugo -b https://markn.ca

# Update the repo
git add .
git config --system user.email MY_EMAIL
git config --system user.name "MY_NAME"
git commit -m "Updated website via AWS"
git push

# Sync to S3
aws s3 sync /home/ec2-user/website/public s3://markn.ca --acl public-read

# Handy URL to clear the cache
curl -X GET "https://CACHE_PURGING_URL"

# Clean up by terminating the EC2 instance this is running on
aws ec2 terminate-instances --instance-ids $(wget -q -O - http://169.254.169.254/latest/meta-data/instance-id) --region us-east-1
```

This entire run takes on average 4 minutes. Even at the higher on-demand cost (vs. spot), each run costs $0.000346667 on a t3.nano in us-east-1.

For the month, that’s $0.25376 (for 732 scheduled runs).

We’re well 😉 over the AWS Lambda compute price ($0.03/mth) but still below the Lambda + EFS cost ($0.43/mth) and certainly well below the total cost including a NAT gateway. It’s weird, but this is how cloud works.

Each of these runs is triggered by a CloudWatch Event that calls an AWS Lambda function to create the EC2 instance. That’s one extra step compared to the pure Lambda solution but it’s still reasonable.
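The launcher function itself isn’t shown in the post, so here’s a minimal sketch of what it could look like with boto3 (bundled in the Lambda Python runtimes). Every ID, ARN, the `user-data.sh` file name, and the tag value are hypothetical placeholders. It bakes in the 16KB user-data limit, the terminate-on-shutdown behavior, and the `Task` tag that the instance role’s termination permission is conditioned on.

```python
import os

# Hypothetical configuration: every value below is a placeholder, not from the post.
AMI_ID = os.environ.get("AMI_ID", "ami-PLACEHOLDER")
# Assumes a public subnet that auto-assigns public IPv4 addresses; otherwise
# the instance can't reach GitHub through the internet gateway.
SUBNET_ID = os.environ.get("SUBNET_ID", "subnet-PLACEHOLDER")
SECURITY_GROUP_ID = os.environ.get("SECURITY_GROUP_ID", "sg-PLACEHOLDER")
INSTANCE_PROFILE_ARN = os.environ.get(
    "INSTANCE_PROFILE_ARN", "arn:aws:iam::123456789012:instance-profile/PLACEHOLDER")
TASK_TAG_VALUE = os.environ.get("TASK_TAG_VALUE", "PLACEHOLDER")

USER_DATA_LIMIT_BYTES = 16 * 1024  # EC2 limit on raw user data, before base64


def build_run_instances_params(user_data):
    """Keyword arguments for EC2 RunInstances for one build run."""
    if len(user_data.encode("utf-8")) > USER_DATA_LIMIT_BYTES:
        raise ValueError("user-data script exceeds the 16KB limit")
    return {
        "ImageId": AMI_ID,
        "InstanceType": "t3.nano",
        "MinCount": 1,
        "MaxCount": 1,
        "SubnetId": SUBNET_ID,
        "SecurityGroupIds": [SECURITY_GROUP_ID],
        "IamInstanceProfile": {"Arn": INSTANCE_PROFILE_ARN},
        "UserData": user_data,  # boto3 base64-encodes this for you
        # An OS-level shutdown terminates the instance instead of stopping it.
        "InstanceInitiatedShutdownBehavior": "terminate",
        # The tag the instance role's TerminateInstances permission checks.
        "TagSpecifications": [{
            "ResourceType": "instance",
            "Tags": [{"Key": "Task", "Value": TASK_TAG_VALUE}],
        }],
    }


def lambda_handler(event, context):
    import boto3  # imported here so the parameter builder works without boto3

    script_path = os.path.join(os.path.dirname(__file__), "user-data.sh")
    with open(script_path, encoding="utf-8") as f:
        user_data = f.read()
    ec2 = boto3.client("ec2")
    response = ec2.run_instances(**build_run_instances_params(user_data))
    return {"InstanceId": response["Instances"][0]["InstanceId"]}
```

Keeping the parameters in a separate function makes it easy to check the safety settings without launching anything.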

Reliability

In practice, this solution has been working well. After 200+ runs, I have experienced zero failures. That’s a solid start. Additionally, the cost of failure is low. If this process fails to run, the site isn’t updated.

Looking at the overall blast radius, there are really only two issues that need to be considered:

- If the sync to S3 fails and leaves the site in an incomplete state

- If the instance fails to terminate

The likelihood of a sync failure is very low but if it does fail the damage would only be to one asset on the site. The AWS CLI command copies files over one-by-one if they are newer. If one fails, the command stops. This means that only one asset (page, image, etc.) would be in a damaged state.

As long as that’s not the main .css file for the site, we should be ok. Even then, clean HTML markup leaves the site still readable.

The second issue could have more of an impact.

The hourly cost of the t3.nano instance is $0.0052/hr. Every time this function runs, another instance is created. This means I could have a few dozen of these instances running in a failure event…running up a bill that would quickly top $100/month if left unchecked.
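To put “quickly” in numbers: a t3.nano that never terminates costs about $3.81 over a 732-hour month, and with an hourly schedule each failed termination leaks one more instance. This sketch, assuming every run fails to terminate, finds how many hours of failures it takes for the leaked fleet’s monthly run rate to pass a budget.

```python
T3_NANO_HOURLY_USD = 0.0052  # us-east-1 on-demand rate quoted above
HOURS_PER_MONTH = 24 * 30.5  # 732, the month length used throughout the post


def leaked_instance_monthly_cost(leaked_instances, hourly=T3_NANO_HOURLY_USD):
    """Monthly run rate of instances that never terminated and run 24/7."""
    return leaked_instances * hourly * HOURS_PER_MONTH


def hours_until_run_rate_exceeds(budget_usd, runs_per_hour=1):
    """Hours of consecutive failed terminations before the leaked fleet's
    monthly run rate passes `budget_usd` (one leaked instance per run)."""
    hours = 0
    while leaked_instance_monthly_cost(hours * runs_per_hour) <= budget_usd:
        hours += 1
    return hours


print(hours_until_run_rate_exceeds(100))
```

With these numbers it returns 27, so roughly a day of silent failures is enough to cross $100/month.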

In order to mitigate this risk, I added another command to the bash script: shutdown. I also set the --instance-initiated-shutdown-behavior parameter to terminate on instance creation. This means the instance calls the EC2 API to terminate itself and also shuts itself down, which terminates it…just in case.

Adding a billing alert rounds out the mitigations to significantly reduce the risk.

Security

The security aspects of this solution concerned me. AWS Lambda presents a much smaller set of responsibilities for the user. In fact, an instance puts the most responsibility on the builder within the Shared Responsibility Model. That’s the opposite of the direction we want to be moving in.

Given that this instance isn’t handling inbound requests, the security group is completely locked down. It only allows outbound traffic, nothing inbound.

Additionally, using an IAM Role, I’ve only provided the necessary permissions to accomplish the task at hand. This is called the principle of least privilege. It can be a pain to set up sometimes but does wonders to reduce the risk of any solution.

You may have noticed in the user-data script above that we’re actually writing a python script to the instance on boot. This script allows the instance to access AWS Secrets Manager to get a secret and print its value to stdout.

I’m using that to store the GitHub Personal Access Token required to clone and update the private repo that holds my website. This reduces the risk to my GitHub credentials which are the most important piece of data in this entire solution.

This means that the instance needs the following permissions:

- secretsmanager:GetSecretValue

- secretsmanager:DescribeSecret

- s3:ListBucket

- s3:*Object

- ec2:TerminateInstances

The permissions for secretsmanager are locked to the specific ARN of the secret for the GitHub token. The s3 permissions are restricted to read/write my website bucket.

ec2:TerminateInstances was a bit trickier as we don’t know the instance ID ahead of time. You could dynamically assign the permission but that adds needless complexity. Instead, this is a great use case for resource tags as a condition for the permission. If the instance isn’t tagged properly (in this case I use a “Task” key with a value set to a random, constant value), this role can’t terminate it.
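Here’s one way the instance role’s policy could look, written as a Python dict so it can be serialized with `json.dumps` and attached however you manage IAM. The account ID, secret ARN suffix, and tag value are hypothetical placeholders; the action list, the markn.ca bucket, and the `Task` tag condition come from the post.

```python
import json

# Hypothetical identifiers: swap in your own account, secret ARN, and tag value.
ACCOUNT_ID = "123456789012"
REGION = "us-east-1"
SECRET_ARN = f"arn:aws:secretsmanager:{REGION}:{ACCOUNT_ID}:secret:GITHUB_TOKEN-AbCdEf"
BUCKET = "markn.ca"
TASK_TAG_VALUE = "PLACEHOLDER-RANDOM-VALUE"


def instance_role_policy():
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Only the one secret holding the GitHub token
                "Effect": "Allow",
                "Action": ["secretsmanager:GetSecretValue", "secretsmanager:DescribeSecret"],
                "Resource": SECRET_ARN,
            },
            {   # Listing applies to the bucket itself...
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{BUCKET}",
            },
            {   # ...object reads and writes apply to the keys inside it
                "Effect": "Allow",
                "Action": "s3:*Object",
                "Resource": f"arn:aws:s3:::{BUCKET}/*",
            },
            {   # Terminate only instances carrying the expected Task tag
                "Effect": "Allow",
                "Action": "ec2:TerminateInstances",
                "Resource": f"arn:aws:ec2:{REGION}:{ACCOUNT_ID}:instance/*",
                "Condition": {"StringEquals": {"ec2:ResourceTag/Task": TASK_TAG_VALUE}},
            },
        ],
    }


print(json.dumps(instance_role_policy(), indent=2))
```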

Similarly, the AWS Lambda function has standard execution rights and:

- iam:PassRole

- ec2:CreateTags

- ec2:RunInstances
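A matching sketch for the launcher function’s extra permissions, on top of standard execution rights such as the AWSLambdaBasicExecutionRole managed policy. The ARNs are hypothetical, and the two conditions are an optional tightening rather than something the post describes: `iam:PassedToService` limits the role to being passed to EC2, and `ec2:CreateAction` only allows tagging as part of RunInstances.

```python
import json

# Hypothetical identifiers; replace with your own.
ACCOUNT_ID = "123456789012"
REGION = "us-east-1"
INSTANCE_ROLE_ARN = f"arn:aws:iam::{ACCOUNT_ID}:role/website-build-instance"


def launcher_policy():
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Only the build instance's role, and only to EC2
                "Effect": "Allow",
                "Action": "iam:PassRole",
                "Resource": INSTANCE_ROLE_ARN,
                "Condition": {"StringEquals": {"iam:PassedToService": "ec2.amazonaws.com"}},
            },
            {   # Left broad here for brevity; could be scoped to specific AMIs/subnets
                "Effect": "Allow",
                "Action": "ec2:RunInstances",
                "Resource": "*",
            },
            {   # Tagging is only allowed as part of the launch itself
                "Effect": "Allow",
                "Action": "ec2:CreateTags",
                "Resource": f"arn:aws:ec2:{REGION}:{ACCOUNT_ID}:instance/*",
                "Condition": {"StringEquals": {"ec2:CreateAction": "RunInstances"}},
            },
        ],
    }


print(json.dumps(launcher_policy(), indent=2))
```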

Cybersecurity is simply making sure that what you build does what you intend…and only what is intended.

If we run through the possibilities for this solution, there isn’t anything that an attacker could do without already having other rights and access within our account.

We’ve minimized the risk to a more than acceptable level, even though we’re using EC2. It appears that this solution can only do what is intended.

What Did I Learn?

The Well-Architected Framework isn’t just useful for big projects or for a point-in-time review. The principles promoted by the framework apply continuously to any project.

I thought I had a simple, slam dunk solution using a serverless design. In this case, a pricing challenge required me to change the approach. Sometimes it’s performance, sometimes it’s security, sometimes it’s another aspect.

Regardless, you need to be evaluating your solution across all five pillars to make sure you’re striking the right balance.

There’s something about the instance approach that still worries me a bit. I don’t have the same peace of mind that I do when I deploy Lambda but the data is showing this as reliable and meeting all of my needs.

This setup also leaves room for expansion. Adding additional tasks to the user-data script is straightforward and would not dramatically shift any of the concerns around the five pillars if done well. The risk here is expanding this into a custom CI/CD pipeline, which is something to avoid.

“I built my own…” generally means you’ve taken a wrong turn somewhere along the way. Be concerned when you find yourself echoing that sentiment.

This is also a reminder that there are a ton of features and functionality within the big three cloud platforms (AWS, Azure, Google Cloud), and they can be a challenge to stay on top of.

If I didn’t remember the cloud-init/user-data combo, I’m not sure I would’ve evaluated EC2 as a possible solution.

One more reason to keep on learning and sharing!

Btw, check out the results of this work at:

And if I'm missing a link with a feed that you think should be there, please let me know!

Total Cost

If you’re interested in the total cost breakdown for the solution at the end of all this, here it is:

```text
Per month
===========
24 * 30.5 = 732 scheduled runs
+ N manual runs
===========
750 runs per month

EC2 instance, t3.nano at $0.0052/hour
+ running for 4m on average
===========
(0.0052/60) * 4 = $0.000346667/run

AWS Lambda function, 128MB at $0.0000002083/100ms
+ running for 3500ms on average
============
$0.00000729/run

Per run cost
============
$0.000346667/run EC2
$0.00000729/run Lambda
============
$0.000353957/run

Monthly cost
=============
$0.26546775/mth to run the build 750 times
$0.00 for VPC with 2 public subnets and Internet Gateway
$0.00 for 2x IAM roles and 2x policies
$0.40 for 1x secret in AWS Secrets Manager
$0.00073 for 732 CloudWatch Events (billed eventually)
$0.00 for 750 GB inbound transfer to EC2 (from GitHub)
$0.09 for 2 GB outbound transfer (to GitHub)
=============
$0.75619775/mth
```

This means it'll take about 3.6 years before we've spent the same as one month of NAT gateway costs.

* Everything is listed in us-east-1 pricing
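The same arithmetic as a small script, handy for re-running with a different instance size or schedule. The inputs are the us-east-1 figures from the breakdown above; it uses the unrounded Lambda per-run cost, so the build line differs from the table in the last couple of decimal places.

```python
# Inputs from the breakdown above (us-east-1 pricing as quoted in the post).
RUNS_PER_MONTH = 750             # 732 scheduled + manual runs
EC2_HOURLY = 0.0052              # t3.nano on-demand
EC2_MINUTES_PER_RUN = 4
LAMBDA_PER_100MS = 0.0000002083  # 128MB function
LAMBDA_MS_PER_RUN = 3500
FIXED_MONTHLY = {
    "Secrets Manager secret": 0.40,
    "CloudWatch Events": 0.00073,
    "Outbound transfer to GitHub": 0.09,
}
NAT_GATEWAY_MONTHLY = 32.94


def monthly_cost():
    ec2_per_run = EC2_HOURLY / 60 * EC2_MINUTES_PER_RUN
    lambda_per_run = LAMBDA_PER_100MS * (LAMBDA_MS_PER_RUN / 100)
    builds = RUNS_PER_MONTH * (ec2_per_run + lambda_per_run)
    return builds + sum(FIXED_MONTHLY.values())


total = monthly_cost()
print(f"${total:.4f}/month")  # $0.7562/month
print(f"{NAT_GATEWAY_MONTHLY / total / 12:.1f} years to match one month of NAT gateway")  # 3.6 years
```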

Top comments (16)

Nice post! I've been thinking a lot recently about the Well-Architected framework and realized it can often be accomplished without needing AWS services.

For your goal

You mentioned a few points about GitHub actions

I wanted to share for other readers that it actually is possible to run a python script in GitHub actions on a schedule, for free even!

help.github.com/en/actions/referen...

Have a look. I've used this to replace many CloudWatch scheduled event lambdas for free

I wanted to clarify for other readers that you can associate a separate action for pushes. A scheduled action to run your script and a push trigger for whatever else you need, filtering on paths or branches if needed.

If this is just static content you could also just host on gh pages for free

To tie these back to the AWS Well-Architected Framework, an alternative might be

Assuming this is a static website, check. A python script will run as fast as a python script can run wherever it runs ;)

Both GitHub actions and GitHub pages are free.

There's no infra to maintain. No lambdas to write, no IAM credentialing to manage. You check a workflow file into your repo and GitHub manages the operations of executing it.

There are also fewer moving parts, which can sometimes remove operational burden.

A secret store is built into GitHub actions if you need it

Same story here. GitHub scheduling system is pretty reliable. If a script fails it gets rerun on the next pass.

Sweet! Excellent tips. I'll check it out and see how I can make that work as well.

As expected (and loved) there are always a bunch of ways to solve the problem.

This one might even come in less expensive than what I've laid out. Not because it's actually cheaper computationally but thanks to GitHub absorbing the cost!

Agreed. Love the detail and spirit in this post

Excellent post! I'm looking at designing something similar myself (a completely serverless CMS), using S3, DynamoDB, and Lambda, so this was helpful for considering the design.

One recommendation I would make is that your use case seems perfect for AWS CodeBuild. I have used it a little bit for syncing a repo with S3 using webhooks, but I believe you can also set it up to run on a schedule. There is a free tier offering for 100 build minutes per month. It might save you some (minimal) cost, but considering you've fully automated the pipeline already, it's probably worth it to keep what you have.

I haven't had a chance yet to play around with Lambda and EFS, but it is definitely something that piqued my curiosity when I heard about it. Does Lambda require a NAT, or can it be routed through your IGW directly? For enterprise solutions, I imagine this is not a big deal (especially if you don't need to access the internet), but for small personal projects, obviously, this is a huge cost increase and a bit disappointing.

While writing the above, it also occurred to me that CodeCommit might be a good solution to the NAT problem, assuming your repo does not need to be hosted on GitHub. I'm not sure, but I imagine, that you could put up a VPC endpoint for your Lambda and access the CodeCommit repo without traversing the public internet to clone and do your build.

Glad the post could help a bit. That's the whole reason for sharing!

Lambdas in a VPC require a NAT to reach the internet. That was the snag as soon as I integrated EFS (which has to be in a VPC).

CodeCommit and CodeBuild are definitely possibilities here as well. @esh pointed that out to me as well. It's been a while since I used CodeBuild, so I have to circle back on it.

I would caution you against building your own CMS unless it's as a project to learn about building in the cloud. There are already a ton of great options out there (most free and open source) that could save you a ton of time.

That said, as a learning project, it's a fun activity to take on!

Good to know about the Lambdas and NAT. A bit disappointed, but not entirely surprised. But, because it's a brand new offering, maybe this will change in the future as the service evolves. For an enterprise solution, this is probably not a deal-breaker, but for a student like me, it's definitely cost-prohibitive for portfolio projects I want to keep going on a long-term basis.

The project is definitely just a learning project! I did a few projects in the past with Lambda and DynamoDB and did not completely understand how they work. I have a much better understanding now, but definitely want to learn more. I am also considering playing around with Aurora serverless and a CMS is a fairly simple use case to integrate all of the above and get some experience with them.

Yes, for work production accounts the NAT gateway cost is usually a drop in the bucket. Though it would be nice to see some sort of slicker solution there for purely serverless setups.

Your CMS efforts sound like a lot of fun. Aurora serverless is very cool and also pretty straightforward. For the DynamoDB piece, have you read Alex DeBrie's book dynamodbbook.com/?

I have not, I'll definitely check it out. Thanks for the recommendation!

CodeBuild does not compete well on price, especially on smaller instances. The author would pay at least 10x the cost for CodeBuild.

After a couple people suggested it, I checked into the latest updates around it. You're 100% spot on.

Even if I could drop build time by 50% to 2m, that's $0.01/build * 750 builds a month for a total of $7.50/mth or 10x!

Thanks for sharing this! I use the heck out of cloud-init for doing initial config of servers, but never thought to co-opt it to make an ec2 instance into something Lambda-ish. Might come in handy in the future.

Great post! I am the developer of Mailbrew and love when people hack on the product.

Thanks Francesco, I'm loving Mailbrew and signed up immediately when I saw it. Super clean and amazingly useful.

Btw, I completely understand why you wouldn't support OPML in the product as it would be a nightmare on the backend. I had originally included that call out in the post but things were getting way, way too long.

Besides, this was a fun thing to solve!

If we are playing AWS cost golf, why not switch to parameter store and save 40p, 50% cost reduction. I don’t think your solution uses any feature of secret manager that isn’t part of parameter store.

I actually get that one a lot and there's a reason not to use Parameter Store and that's intention.

Yes, Secrets Manager is more expensive but if you clearly delineate between sensitive data (Secrets Manager) and configuration options (Parameter Store), you're less likely to run into accidental leaks down the road. It's a nice bit of operational security to help show you and the team what needs a high level of care.

...but yes, Parameter Store is significantly less expensive

Just getting started into AWS and this is great content for me. I like how you explained your decisions in terms of the well-architected framework. Cheers!