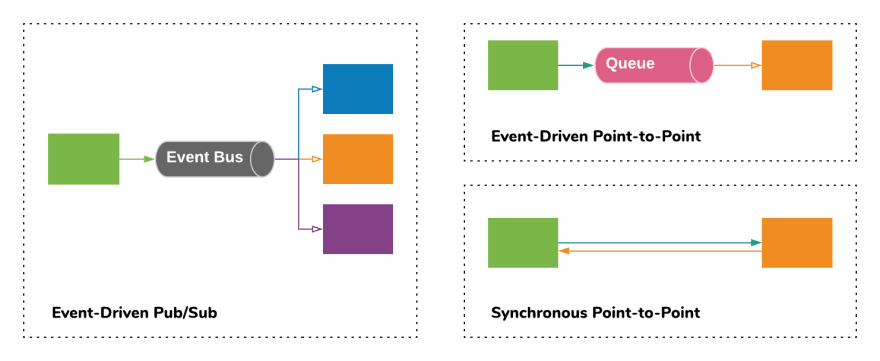

Let's say you have a serverless deployment in AWS with external, public-facing APIs and some Lambda functions behind those APIs. As your deployment grows, you are likely to need internal communication between isolated parts of your system (microservices). This can be divided into three categories:

- Pub/Sub-style event-driven communication. This is where one service publishes events about what has occurred. Other, subscribing services react to events as required according to their responsibility.

- Point-to-point event-driven communication. This is where a service owns a queue so it can receive messages to be processed. For example, an Email Service might receive a message containing to, from and subject fields and use these fields to construct and send an email using SES or SendGrid.

- Point-to-point synchronous communication. This is when a calling service needs to make a request to another service (which may be internal or external) and blocks waiting on the response. An example of this could be a request made to the User Service to find a user's email address based on a user ID found in an authorization header.

Synchronous Calls Between Services

This post is about the third case: point-to-point synchronous communication. In a serverless context, you could do this with a function-to-function invocation. In AWS, this would use Lambda.invoke. The calling service could grant itself the IAM permissions needed to invoke the target Lambda, identified by its ARN. However, this can be seen as a bad smell! It leaks implementation details about the service you are calling. If you want to replace that Lambda-based service with something else, like an external web service, a container-based implementation or even a managed AWS service with no Lambda code required, you have a problem. You would have to replace the implementation and also change the code in every calling service.

Instead, a simple abstraction can be achieved by putting the implementation behind an HTTP interface. HTTP is ubiquitous, well understood and can be maintained while you change the underlying implementation without any significant drama. For our serverless, Lambda-backed implementation, this means putting the functions behind an API Gateway, just like our external APIs.
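To make the benefit concrete, here is a minimal sketch of a caller that depends only on a URL. The `createUserClient` helper, the injected `httpGet` function and the `USER_SERVICE_URL` environment variable are hypothetical names used for illustration; they are not part of the original project.

```javascript
// Hypothetical client: the caller knows a base URL and a path, nothing about
// whether the service behind it is a Lambda, a container or something else.
function createUserClient(baseUrl, httpGet) {
  // Drop trailing slashes so the joined URL never contains '//'
  const root = baseUrl.replace(/\/+$/, '')
  return {
    // Builds the lookup URL and delegates the request to the injected function
    async getUser(userId) {
      return httpGet(`${root}/user/${encodeURIComponent(userId)}`)
    }
  }
}

// Example wiring (assumes Node 18+ for the global fetch):
// const client = createUserClient(
//   process.env.USER_SERVICE_URL,
//   async (url) => (await fetch(url)).json()
// )
```

If the User Service is later reimplemented, only the value behind the URL changes and the calling code stays the same.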

Options for Securing Internal APIs

Then comes the crucial question: how do we secure them? We only want our internal APIs to be accessed internally. The way I see it, these are your options:

- Use VPCs

- Use an API Key to secure the API Gateway

- Use AWS IAM authorization on the API Gateway

A VPC approach would require putting your invoking Lambdas in a VPC and defining the API Gateway as a private API with a VPC endpoint. Avoid VPCs for your Lambdas if at all possible! Running Lambdas in a VPC restricts their scalability and adds an extra 8-10 seconds of cold start time. Yan Cui covers this topic really well in the Production-Ready Serverless video course.

UPDATE 2019/10/17: Since this post was first written, AWS have reworked the ENI allocation method for VPCs so the cold start penalty is starting to go away for Lambdas in VPCs. This is yet to be rolled out to all major regions but it will change the picture significantly. I would still avoid VPCs in many cases unless necessary because of the additional complexity of managing VPCs.

An API Key approach seems reasonable. You can generate an API Gateway API Key for the internal API service and share it with the invoking service. The key is sent in the x-api-key header when making the request. I have done this and it works. The challenge here was sharing the API Key. In order to do this, I had to create a custom CloudFormation resource to store the API Key in SSM Parameter Store so it could be discovered by other internal services with permissions to access the key. API Keys are intended for controlling access to external APIs with quotas, so securing internal APIs is not really their intended purpose. If you are interested in understanding how to do this, take a look at my solution here. For any questions, comment below or tweet me.

Internal API Gateway Security with IAM

The best practice recommendation is to use IAM authorization on the APIs. If you are using the Serverless Framework, it looks like this:

    get:
      handler: services/users/get.main
      events:
        - http:
            path: user/{id}
            method: get
            cors: false
            authorizer: aws_iam

This will result in the AuthorizationType being set to AWS_IAM in the associated AWS::ApiGateway::Method CloudFormation resource. If you now try to invoke your API externally without signed credentials, you should get a 403 Forbidden response. Your API is now secured! So, how do we grant permissions to other internal services that need to call it?

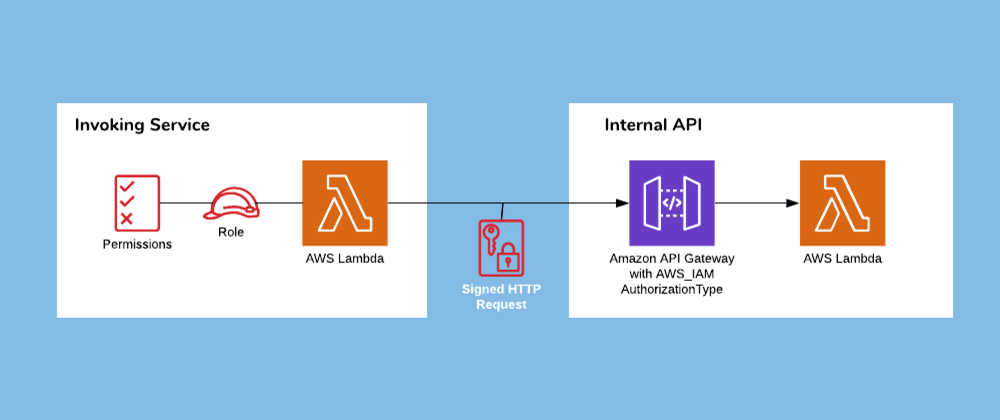

Now that we are using IAM authorization for the API Gateway, invoking services need to be granted IAM permissions to invoke it. For an AWS Lambda function, this means granting access to invoke the target API and to make requests with the relevant HTTP verbs (GET, POST, PUT, PATCH, etc.).

    - Effect: Allow
      Action:
        # execute-api:Invoke is the action that permits calls;
        # the HTTP verb is matched in the Resource ARN below
        - execute-api:Invoke
      Resource:
        - arn:aws:execute-api:#{AWS::Region}:#{AWS::AccountId}:*/${self:provider.stage}/*/user/*

This IAM policy statement allows a Lambda function to invoke any API Gateway API in the same account and stage on paths under /user/. The first wildcard (*) is for the API ID. This is dynamically generated, so we don't want to explicitly write it here. The second wildcard is for the HTTP verb, so as written the statement permits every method; put GET in its place to restrict callers to GET requests. The last wildcard is for the remainder of the resource path.
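The layout of the resource ARN is easy to get wrong, so here is a small sketch that assembles one from its parts. The `executeApiArn` helper is a hypothetical name, the account ID and region are placeholder values, and the `aws` partition is hard-coded as an assumption.

```javascript
// Hypothetical helper: assembles an execute-api ARN from its parts.
// Layout: arn:aws:execute-api:{region}:{accountId}:{apiId}/{stage}/{verb}/{path}
// Any part left out defaults to the '*' wildcard.
function executeApiArn({ region, accountId, apiId = '*', stage, verb = '*', path = '*' }) {
  // The path segment of the ARN is written without a leading slash
  const cleanPath = path.replace(/^\/+/, '')
  return `arn:aws:execute-api:${region}:${accountId}:${apiId}/${stage}/${verb.toUpperCase()}/${cleanPath}`
}

// Any API in the account, dev stage, GET only, anything under /user/
console.log(executeApiArn({
  region: 'eu-west-1',
  accountId: '123456789012',
  stage: 'dev',
  verb: 'get',
  path: '/user/*'
}))
// prints arn:aws:execute-api:eu-west-1:123456789012:*/dev/GET/user/*
```

Naming the verb in the ARN, rather than leaving a wildcard there, is how a caller can be limited to specific HTTP methods.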

Providing IAM Credentials in HTTP Requests

The final piece of the puzzle is in making the invocation with the correct credentials. When we use any HTTP request library (like requests in Python or axios in JavaScript), our AWS Lambda function role credentials will not be passed by default. To add our credentials, we need to sign the HTTP request. There is a bit of figuring out required here so I created an NPM module that wraps axios and automatically signs the request using the Lambda's role. The module is called aws-signed-axios and is available here.

To make the HTTP request with the credentials associated with the API Gateway permissions, just invoke with the wrapper library like this:

    const signedAxios = require('aws-signed-axios')
    // ...

    async function getUser(userId) {
      // ...
      const { data: result } = await signedAxios({
        method: 'GET',
        url: userUrl
      })
      return result
    }
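For readers curious about what "signing" involves, the following is a rough sketch of AWS Signature Version 4 for a body-less GET request, using only Node's built-in crypto module. It is not the source of aws-signed-axios. The `signGetRequest` name is hypothetical, and the sketch ignores request bodies and extra headers, so treat it as an illustration and use a maintained library in real code.

```javascript
const crypto = require('crypto')

const sha256Hex = (data) => crypto.createHash('sha256').update(data, 'utf8').digest('hex')
const hmac = (key, data) => crypto.createHmac('sha256', key).update(data, 'utf8').digest()

// Percent-encode per RFC 3986, which is stricter than encodeURIComponent
const rfc3986 = (s) =>
  encodeURIComponent(s).replace(/[!'()*]/g, (c) => '%' + c.charCodeAt(0).toString(16).toUpperCase())

// Returns the headers to send with a GET request to an IAM-secured API Gateway.
// credentials: { accessKeyId, secretAccessKey, sessionToken }
function signGetRequest(url, credentials, region, now = new Date()) {
  const { host, pathname, searchParams } = new URL(url)
  const amzDate = now.toISOString().replace(/[:-]|\.\d{3}/g, '') // e.g. 20191017T120000Z
  const dateStamp = amzDate.slice(0, 8)
  const scope = `${dateStamp}/${region}/execute-api/aws4_request`

  // Headers covered by the signature; temporary credentials add a session token
  const headers = { host, 'x-amz-date': amzDate }
  if (credentials.sessionToken) {
    headers['x-amz-security-token'] = credentials.sessionToken
  }
  const names = Object.keys(headers).sort()
  const canonicalHeaders = names.map((n) => `${n}:${headers[n]}\n`).join('')
  const signedHeaders = names.join(';')

  // URL.pathname is already encoded once; encoding each segment again gives
  // the double encoding SigV4 expects for services other than S3
  const canonicalUri = pathname.split('/').map(rfc3986).join('/')
  const canonicalQuery = [...searchParams]
    .map(([k, v]) => [rfc3986(k), rfc3986(v)])
    .sort(([k1, v1], [k2, v2]) => (k1 < k2 ? -1 : k1 > k2 ? 1 : v1 < v2 ? -1 : v1 > v2 ? 1 : 0))
    .map(([k, v]) => `${k}=${v}`)
    .join('&')

  // Last element is the hash of the (empty) request body
  const canonicalRequest =
    ['GET', canonicalUri, canonicalQuery, canonicalHeaders, signedHeaders, sha256Hex('')].join('\n')
  const stringToSign = ['AWS4-HMAC-SHA256', amzDate, scope, sha256Hex(canonicalRequest)].join('\n')

  // Derive the signing key by chaining HMACs over date, region, service and terminator
  const signingKey = [dateStamp, region, 'execute-api', 'aws4_request']
    .reduce((key, part) => hmac(key, part), `AWS4${credentials.secretAccessKey}`)
  const signature = hmac(signingKey, stringToSign).toString('hex')

  return {
    ...headers,
    authorization: `AWS4-HMAC-SHA256 Credential=${credentials.accessKeyId}/${scope}, ` +
      `SignedHeaders=${signedHeaders}, Signature=${signature}`
  }
}

// Example use inside a Lambda function, where the runtime exposes the role's
// temporary credentials as environment variables:
// const headers = signGetRequest(userUrl, {
//   accessKeyId: process.env.AWS_ACCESS_KEY_ID,
//   secretAccessKey: process.env.AWS_SECRET_ACCESS_KEY,
//   sessionToken: process.env.AWS_SESSION_TOKEN
// }, process.env.AWS_REGION)
```

The returned headers can be passed to any HTTP client. API Gateway recomputes the signature on its side and then checks the caller's IAM policy against the requested method and path.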

That's it. In summary, we now have a clear, repeatable approach for internal serverless APIs with:

- A way to secure an API Gateway without VPCs

- IAM permissions we can grant to any service we wish to allow

- A simple way to sign HTTP requests with the necessary IAM credentials

If you want to explore more, take a look at our open-source serverless starter project, SLIC Starter:

fourTheorem/slic-starter


A complete, serverless starter project


SLIC Starter is a complete starter project for production-grade serverless applications on AWS. SLIC Starter uses an opinionated, pragmatic approach to structuring, developing and deploying a modern, serverless application with one simple, overarching goal:

Get your serverless application into production fast


I work as the CTO of fourTheorem and am the author of AI as a Service. I'm on twitter as @eoins.
