Last reviewed : Aug 2022

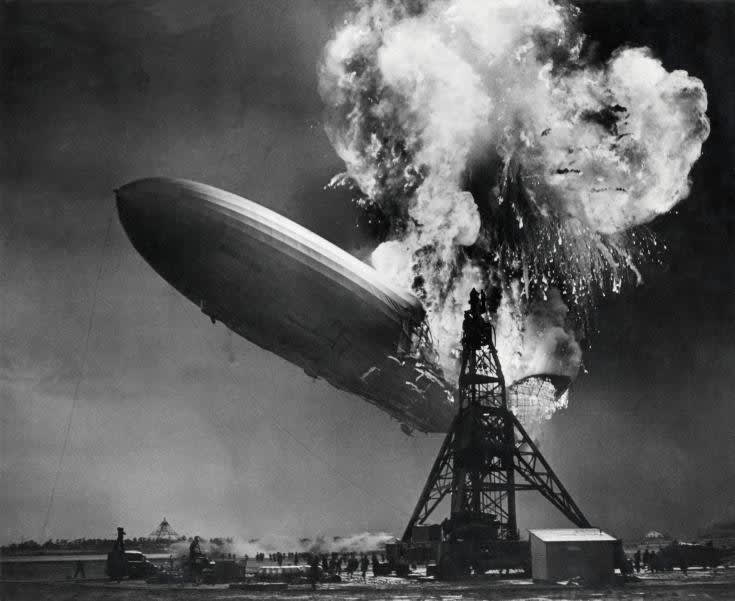

So, your system is operational. Lots of users are busily adding their data to your database and their money is rolling in to your bank account. What could possibly go wrong?

Supposing that data was corrupted or lost? Unless you're prepared to walk away from the smoking ruin of a once profitable business you're going to need to think about backup.

- Introduction

- Creating a Backup file

- Recovering your database from a Backup file

- Automating Backup file creation

- Recovering your Database to Point of Failure

- Managing your Backup files

- Strategy

Introduction

Let's start with a bit of ancient history. Before "managed" storage systems like the Google Cloud became available, a company's IT systems would run on in-house equipment - computers and systems software that were entirely that company's responsibility. Life was hard! The IT manager's responsibilities started with commissioning the construction of a "machine room" and expanded, seemingly limitlessly, through air-conditioning, backup power supplies and system software from there. The development of application software actually came quite a long way down the list. Fortunately, "hacking" hadn't really even been thought of in those innocent days, and the possibility that the system's data might be destroyed by simple human error was nothing compared to the real possibility (it did happen) that the entire creaky enterprise might simply catch fire! Standard practice was to create copies of both data and software (usually on magnetic tape) and store these in some secure, remote location (old slate quarries were popular). Since you couldn't afford to do this too frequently and because both hardware and software failures were a regular occurrence, the systems would also maintain "transaction logs" so that you could recover a corrupted database by reloading it from the latest dump and then "rolling it forward" to its last "stable" state.

Since you've chosen to build your system on Google's managed Cloud services, life as a system-developer has been enormously simplified. Most of the issues introduced above are simply no longer your concern. Security and performance of your hardware are guaranteed by Google and, in principle, all you've got to worry about is the possibility that your systems themselves might fail. Is this possible?

Since your testing is so meticulous there is absolutely no way you might release a software update that, for example, deletes documents when it is actually trying to update them? And since your security systems are as tight as a gnat's proverbial, there is absolutely no possibility that a malicious third party might sneak in under your security radar and wreak havoc? Ah, well ....

The development and operation of your software environment involves people and people will always be the most likely cause of system failure. If you want to sleep at night, you'd better have a rock-solid system recovery procedure. Some day you're going to need it!

Creating a Backup file

If your systems thinking is still "old school", your initial plans for a backup system may be to find a way of turning your cloud database into some sort of local file. But once a database is established in the Google Cloud the only processes that can run on it are those that run within the cloud itself. What this means in practice is that the only place you can locate a backup (at least initially) is on Google Cloud storage within the Cloud itself.

This makes sense, since the Cloud with its multiple server locations and centralised security is probably the best place to keep your backups. Once safely copied to Cloud storage, your data is certainly insulated from anything that systems maintenance staff might inflict upon it!

Google makes the job of creating a backup extremely easy. In the simplest case all you need to do to get yourself a backup is to create a Cloud Storage bucket and invoke the Google Export facility by clicking a few tabs and buttons in your project's Cloud Console pages.

Why not pick a modestly-sized collection in your database and give it a go now?

Your first task is to configure a Cloud Storage bucket to receive your exported data. At first acquaintance, this actually looks a bit intimidating, and quite rightly so because, when you're doing this for real, some big decisions need to be taken here that will have big consequences for both the cost and security of your backup system.

I'll run through the configuration parameters quickly here, but if you're just bent on trying things out I suggest you don't break too much sweat getting your head round them for now. Just use the suggested defaults and these points will be picked up again at the end of this post. Here's what Google needs to know:

- Bucket Name (eg `mydatabase_backup_bucket`)

- Location. This specifies the new bucket's geographical location, a question that prompts several interesting thoughts. My advice is that a project's backup should be located somewhere geographically close to the project itself - though perhaps not actually on the same site!

- Storage class. Basically this is letting you choose from a set of pricing plans. The most important factor here is something Google calls "minimum storage duration" (msd). If you create an object in the bucket with a particular msd, you are committed to paying for its storage for that period regardless of whether, in the interim, you delete, replace, or move it. The minimum duration ranges from 0 ("standard" storage) through 30, 90 and 365 days. The quid pro quo is that as the duration time increases, the cost of storage decreases. I suggest you use standard storage for the present - you've got more important things to worry about just now. Note that, irrespective of which storage plan you select, any use of Google Cloud storage will require you to upgrade a free "Spark" plan to a paid "Blaze" plan. However, a modest experiment is only going to cost you a cent or two, and the ability to set a budget limit should ensure that even if you make a mistake and ask Google to create some substantial files, the consequences won't be too embarrassing.

- Access control mechanism. This determines "who can do what" with the contents of your bucket. Google recommends backup buckets should be configured with "Not Public" and "Uniform" access control settings. These settings restrict access to permissions granted through Google's IAM system. We'll use these later in configuring arrangements to run backups automatically on a fixed schedule using Cloud functions - sounds complicated, I know, but easier than you might imagine.

- Protection. In addition to the general access control mechanism introduced above, Google allows you to add several extra, more exotic devices:

  - protection tools: none / object versioning / retention policy.

    - Object versioning lets you set a limit on the number of versions of any object that you might create in your bucket (you create a new version by simply creating another object with the same name).

    - Retention policy allows you to set a default "retention time" for objects in your bucket. This terminology may cause confusion. If you set this, say to 14 days, it doesn't mean that files in the bucket will be automatically deleted after 14 days. Rather, it's saying that they will be explicitly protected from deletion by you for 14 days after their creation.

    - My recommendation is that you select "none" at this stage.

  - encryption: this is a setting that allows really sensitive applications to specify their own encryption keys to protect objects in the cloud storage bucket. As standard, Google supplies its own keys and most people will find that these will be fine for now.
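If you would rather script the bucket creation than click through the console, the choices above map onto a fairly small options object. What follows is only a minimal sketch, assuming the `@google-cloud/storage` Node.js client and its `createBucket` method. The helper names `buildBackupBucketOptions` and `createBackupBucket` and the example location are my own illustrative choices, not anything from Google's documentation.

```javascript
// Build the options for a backup bucket from the choices discussed above:
// standard storage, uniform access control, not public, and no extra
// protection tools (versioning and retention are simply left unset).
function buildBackupBucketOptions(location = 'EUROPE-WEST3') {
  return {
    location,
    storageClass: 'STANDARD',
    iamConfiguration: {
      uniformBucketLevelAccess: { enabled: true },
      publicAccessPrevention: 'enforced',
    },
  };
}

// Hypothetical wrapper: needs the @google-cloud/storage package and
// credentials for a project on the Blaze plan, so it is not called here.
async function createBackupBucket(bucketName, location) {
  const { Storage } = require('@google-cloud/storage');
  const storage = new Storage();
  const [bucket] = await storage.createBucket(
    bucketName,
    buildBackupBucketOptions(location)
  );
  return bucket.name;
}

// Inspect the options without touching the Cloud
console.log(JSON.stringify(buildBackupBucketOptions(), null, 2));
```

Keeping the options in a separate function means you can review exactly what will be requested before anything is created.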

Once you've created your bucket, launching a backup manually is perfectly straightforward. On the Cloud Firestore Import/Export page in the Google Cloud Console:

- Click "Export".

- Click the "Export entire database" option (unless you prefer to create backups for selected collections only).

- Below "Choose Destination", enter the name of a Cloud Storage bucket or use the "Browse" button to select a bucket.

- Click "Export".

Now have a look inside your bucket to see what Google has created. You'll find that the contents are heavily structured. They're designed precisely for use in recovering a database (see next section). If you had any ideas of downloading them to use in other ways (for example, for the creation of test data) I suggest you forget them now.

Note in passing that an export is not an exact database snapshot taken at the export start time. An export may include changes made while the operation was running.

Recovering your database from a Backup file

Recovering a backup is as straightforward as creating it. Starting, again, from the Cloud Firestore Import/Export page, select Import and browse to the metadata file for the backup you want to recover. Select the file and click Import.

Control will now return to the Import/Export page to allow you to monitor progress. Google documentation at Export and import data makes the following important points:

- When you import data, the required indexes are updated using your database's current index definitions. An export does not contain index definitions.

- Imports do not assign new document IDs. Imports use the IDs captured at the time of the export. As a document is being imported, its ID is reserved to prevent ID collisions. If a document with the same ID already exists, the import overwrites the existing document.

- If a document in your database is not affected by an import, it will remain in your database after the import.

- Import operations do not trigger Cloud Functions. Snapshot listeners do receive updates related to import operations.
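For completeness, an import can also be launched from code rather than from the console. The sketch below assumes the same `FirestoreAdminClient` that Google's scheduled export sample uses, and its `importDocuments` method. The helper names, the project id and the export folder name are hypothetical placeholders that you would replace with your own.

```javascript
// Build the request for an import from a previous export.
// `exportFolderUri` is the folder the export created inside your bucket.
function buildImportRequest(projectId, exportFolderUri, collectionIds = []) {
  return {
    // Same format that client.databasePath(projectId, '(default)') produces
    name: `projects/${projectId}/databases/(default)`,
    inputUriPrefix: exportFolderUri,
    // Leave empty to import everything contained in the export
    collectionIds,
  };
}

// Hypothetical wrapper: needs @google-cloud/firestore and suitable
// credentials, so it is defined here but not called.
async function runImport(projectId, exportFolderUri, collectionIds) {
  const firestore = require('@google-cloud/firestore');
  const client = new firestore.v1.FirestoreAdminClient();
  const [operation] = await client.importDocuments(
    buildImportRequest(projectId, exportFolderUri, collectionIds)
  );
  console.log(`Operation Name: ${operation.name}`);
}

// Placeholder values, for illustration only
console.log(
  buildImportRequest('my-project', 'gs://mydatabase_backup_bucket/my-export-folder')
);
```

Remember the points above when you try this: documents with matching ids are overwritten, and everything else in the database is left alone.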

Automating Backup file creation

While running an Export manually is simple enough, you wouldn't want to make this your standard procedure. Fortunately, Google Cloud "functions" and the Cloud's "pubsub" scheduling services allow you to set up an arrangement that will allow you to relax, safe in the knowledge that a machine is looking after your interests rather than some willing but ultimately unreliable person. The procedure is nicely documented in Google's Schedule data exports document. Here's a copy of the code for the function that Google suggests you might use, together with a few modifications and comments of my own:

    const functions = require('firebase-functions');
    const firestore = require('@google-cloud/firestore');
    const client = new firestore.v1.FirestoreAdminClient();

    const bucket = 'gs://mydatabase_backup_bucket';

    exports.scheduledFirestoreExport = functions
      .region('europe-west3')
      .pubsub
      // Every Sunday at 4pm (but since the default timezone is America/Los_Angeles,
      // 8 hours behind UK GMT, this gives an effective runtime of midnight)
      .schedule('00 16 * * 0')
      .onRun((context) => {
        const projectId = process.env.GCP_PROJECT || process.env.GCLOUD_PROJECT;
        const databaseName = client.databasePath(projectId, '(default)');

        return client.exportDocuments({
          name: databaseName,
          outputUriPrefix: bucket,
          // Leave collectionIds empty to export all collections
          // or set to a list of collection IDs to export,
          // collectionIds: ['users', 'posts']
          collectionIds: ['myCollection1', 'myCollection2']
        })
          .then(responses => {
            const response = responses[0];
            console.log(`Operation Name: ${response['name']}`);
          })
          .catch(err => {
            console.error(err);
            throw new Error('Export operation failed');
          });
      });

Notes:

- This amazingly compact piece of code is performing two operations - it both launches the configured Export and lodges it in the PubSub system with the configured schedule. I'm not clear whether or not the act of deploying it is sufficient to set all this in motion, but since you'll want to test the function (see below), the question is irrelevant.

- The unusual preamble at `const firestore = require('@google-cloud/firestore');` and `const client = new firestore.v1.FirestoreAdminClient();` isn't commented upon in the Google document but seems to be something to do with a need to expose methods that allow the code to access the `exportDocuments()` functionality.

- The `const bucket = 'gs://mydatabase_backup_bucket';` line obviously needs to be amended to reference your own backup bucket.

- Google's version of the pubsub function is happy to use the default selection of 'us-central1' for the function's location. It seemed preferable to override this and locate the function in the same place as the database you're unloading - in my particular case 'europe-west3'.

- Google comments that there are two ways in which you can specify scheduling frequency: AppEngine cron.yaml syntax or the unix-cron format. After experiencing several hopeless failures trying to get my head round cron.yaml ('every 24 hours' may work, but 'every 30 days' certainly doesn't), I found unix-cron a safer approach. Since you probably want to take your system off-line while a dump is in progress, it's likely that you'll opt to run the backup at a quiet time on a weekend. My `.schedule('00 16 * * 0')` spec schedules the function to run at 4pm every Sunday in the default America/Los_Angeles timezone. This means that it actually runs at midnight GMT (since Los Angeles is 8 hours behind GMT). If you'd rather state the schedule in your own timezone, the schedule builder accepts a `.timeZone()` call after `.schedule()` (for example `.timeZone('Europe/London')`), though the code above sticks with the default. Regardless, this schedule ties in nicely with arrangements to delete out-of-date backups (see below), where I use the age of an object to trigger its deletion. It's worth mentioning that unix-cron is not perfect. If, for example, you wanted to dump your database, say, every second Sunday, you'd struggle. Stackoverflow is a good source of advice.

- Below `outputUriPrefix: bucket`, I've opted to use `collectionIds` to specify an explicit list of the collections I want backed up, rather than doing the whole database. I think this is likely to be a more realistic scenario and this is an arrangement that also creates the ability to Import collections individually.

- Google's Schedule data exports document tells you that your function will run under your project's default service account - ie `PROJECT_ID@appspot.gserviceaccount.com`, where `PROJECT_ID` is the name of your project - and that this needs both the "Cloud Datastore Import Export Admin" permission and the "Owner" or "Storage Admin" role on the bucket. The document tells you how to use the Cloud Shell to do this, but if you prefer to use the Google Console, you're on your own. I'm not a big fan of the Cloud Shell so I used the Google Console and found this rather tricky! However, a bit of persistence produced the desired result. Here's how to do it: On the IAM page for your project, click the "Add" button and enter the name of your project's default service account (see above) in the "Principals" field. Now click the pull-down list in the "Roles" field and select the "Datastore" entry (it may be some way down the list!). This should open a panel revealing a "Cloud Datastore Import Export Admin" permission. Select this and click "Save". Once you're done, bucket permissions can be viewed and amended in the Google Console's Cloud Storage page for your project.

- The Schedule data exports document nicely describes the procedure for testing the Export function in the Google Cloud Console. This worked fine for me - at least once I'd realised that the "RUN NOW" button in the Cloud Scheduler page is at the far right of the entry for my function and required me to use the scroll bar to reveal it! Each time you run a test, a new Export will appear in your backup bucket (see Google's documentation above for how to monitor progress) and your function's entry in the Schedule page (keyed on a combination of your Export's name and region - `firebase-schedule-scheduledFirestoreExport-europe-west3` in the current case) will be updated with the current schedule settings.
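The timezone arithmetic in the scheduling note is easy to get wrong, so it can be worth checking with a few lines of plain JavaScript. This is just a sketch using the standard `Intl.DateTimeFormat` API. The helper name `localTime` and the sample date are assumptions made for illustration.

```javascript
// Return the weekday, hour (0-23) and minute of an instant as seen
// in a given IANA timezone.
function localTime(date, timeZone) {
  const parts = new Intl.DateTimeFormat('en-US', {
    timeZone,
    weekday: 'short',
    hour: '2-digit',
    minute: '2-digit',
    hourCycle: 'h23',
  }).formatToParts(date);
  const get = (type) => parts.find((p) => p.type === type).value;
  return {
    weekday: get('weekday'),
    hour: Number(get('hour')) % 24, // guard against a "24" for midnight
    minute: Number(get('minute')),
  };
}

// Midnight UTC at the very start of Monday 10 January 2022
const instant = new Date(Date.UTC(2022, 0, 10, 0, 0));

// The same instant seen from the scheduler's default timezone and from the UK
console.log(localTime(instant, 'America/Los_Angeles'));
console.log(localTime(instant, 'Europe/London'));
```

In other words, 4pm on Sunday in Los Angeles is the midnight that ends Sunday in the UK, which is the quiet moment the schedule is aiming for.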

Recovering your Database to Point of Failure

Possession of a database backup ensures that, when disaster strikes, you will certainly be able to recover something. But while you can now be confident in your ability to restore a corrupt database to the state it was in when the backup was taken, what can be done about all the business you've transacted since? I'm sorry - unless you've taken steps to record this independently, this is now lost to the world. Depending on the frequency of your backup schedule, this might represent quite a setback!

What's needed is a mechanism that will allow the recovered database to be "rolled forward" to the point at which corruption occurred. Sadly, Google's NoSQL Firestore database management system provides no such "built-in" mechanism - if you want this you'll have to look to more formal, "classic" database systems like Cloud MySQL.

I think this is a pity, so I started to wonder what sort of arrangements an application might put in place to provide a "home-grown" "roll-forward" mechanism. What follows is one suggestion for the sort of arrangement you might consider.

I started with the premise that the main challenge was to find a way of doing this without distorting the main application logic. The design I came up with logs changes at the document level. In my design, you decide which collections need a "roll-forward" capability and you push all document-creation and update activity on these collections through a central recoverableCollectionCUD function. This logs details to a central recoverableCollectionLogs collection. In a disaster recovery situation, collections restored from a backup could be rolled forward by applying transactions read from recoverableCollectionLogs. As a bonus, the recoverableCollectionLogs collection provides a way of auditing the system in the sense of "who did what and when?"

Here's the code I came up with:

    // Assumes the Firebase v9 modular web SDK, with `db` (the Firestore instance)
    // and `userEmail` (the signed-in user's email) defined elsewhere in the webapp
    import { collection, doc, serverTimestamp } from "firebase/firestore";

    async function recoverableCollectionCUD(collectionName, transactionType, transaction, documentId, dataObject) {
      let collRef;
      let docRef;

      switch (transactionType) {
        case "C":
          // create: let Firestore generate the id for the new document
          collRef = collection(db, collectionName);
          docRef = doc(collRef);
          documentId = docRef.id;
          transaction.set(docRef, dataObject);
          break;
        case "U":
          // update: merge the supplied fields into the existing document
          docRef = doc(db, collectionName, documentId);
          transaction.set(docRef, dataObject, { merge: true });
          break;
        case "D":
          docRef = doc(db, collectionName, documentId);
          transaction.delete(docRef);
          break;
      }

      // write a log entry to the recoverableCollectionLogs collection.
      // Work on a copy so that the caller's object isn't modified
      // (and so that a delete, which has no dataObject, doesn't fail)
      const logEntry = { ...(dataObject || {}) };
      logEntry.userEmail = userEmail;
      logEntry.transactionType = transactionType;
      logEntry.collectionName = collectionName;
      logEntry.documentId = documentId;
      logEntry.timeStamp = serverTimestamp();

      collRef = collection(db, "recoverableCollectionLogs");
      docRef = doc(collRef);
      transaction.set(docRef, logEntry);
    }

In the recoverableCollectionCUD function:

- Arguments are:

  - `collectionName` - the collection target for the transaction

  - `transactionType` - the CUD request type ("C", "U", "D")

  - `transaction` - the Firestore transaction object that is wrapping the current operation (see below)

  - `documentId` - the id of the document that is being updated or deleted

  - `dataObject` - an object containing the fields that are to be addressed in the document (note that you only need to provide the fields that are actually being changed).

- Documents in the `recoverableCollectionLogs` collection are stamped with `timeStamp` and `userEmail` fields to support the roll-forward and audit tasks.

- The `recoverableCollectionCUD` function is designed to be called within a Firestore transaction block - the intention being that the content of `recoverableCollectionLogs` proceeds in lockstep with the content of the recoverable collections that it monitors. The pattern of the calling code is thus as follows:

    await runTransaction(db, async (TRANSACTION) => {
      // ... perform all necessary document reads ...
      // ... perform document writes using the `recoverableCollectionCUD` function
      //     with TRANSACTION as a parameter ...
    });

In the event that you needed to use recoverableCollectionLogs to recover your database, you would run something like the following:

    // Assumes collection, query, orderBy, getDocs, doc, setDoc and deleteDoc
    // have been imported from "firebase/firestore"
    const collRef = collection(db, 'recoverableCollectionLogs');
    const collQuery = query(collRef, orderBy("timeStamp"));
    const recoverableCollectionLogsSnapshot = await getDocs(collQuery);

    // for...of (rather than forEach with an async callback) makes sure each
    // write is awaited, so log entries are applied strictly in timestamp order
    for (const myDoc of recoverableCollectionLogsSnapshot.docs) {
      let collName = myDoc.data().collectionName;
      let collRef = collection(db, collName);
      let docId = myDoc.data().documentId;
      let docRef = doc(collRef, docId);

      // re-create the original data object
      let dataObject = myDoc.data();
      delete dataObject.userEmail;
      delete dataObject.transactionType;
      delete dataObject.collectionName;
      delete dataObject.documentId;
      delete dataObject.timeStamp;

      switch (myDoc.data().transactionType) {
        case "C":
          await setDoc(docRef, dataObject);
          console.log("creating doc " + docId + " in " + collName);
          break;
        case "U":
          await setDoc(docRef, dataObject, { merge: true });
          console.log("updating doc " + docId + " in " + collName + " with data object " + JSON.stringify(dataObject));
          break;
        case "D":
          await deleteDoc(docRef);
          console.log("deleting doc " + docId + " in " + collName);
          break;
      }
    }

Note a few interesting features of this arrangement:

- Recovered documents are restored with their original document ids

- Firestore's excellent `merge: true` capability, combined with Javascript's neat abilities to juggle object properties, makes coding of the "update" section of this procedure a positive joy.

Here's a sample of the console log generated by a simple test of the procedure:

creating doc xfA55kKr3IJUvqGsflzj in myImportantCollection1

creating doc PI65wceOLa6P8IPytPX0 in myImportantCollection1

deleting doc xfA55kKr3IJUvqGsflzj in myImportantCollection1

deleting doc PI65wceOLa6P8IPytPX0 in myImportantCollection1

creating doc 5n4iC5t15H0QXv9jdUGR in myImportantCollection1

updating doc 5n4iC5t15H0QXv9jdUGR in myImportantCollection1 with data object {"field1":"ii","field2":"ii"}

updating doc 5n4iC5t15H0QXv9jdUGR in myImportantCollection1 with data object {"field1":"iii"}

updating doc 5n4iC5t15H0QXv9jdUGR in myImportantCollection1 with data object {"field2":"kk"}

You might wonder how this arrangement might actually be deployed. In my testing I linked it to a "restore" button in my test webapp. In practice I think you'd be more likely to deploy it as a function using the `https.onRequest` method. This would allow you to launch a roll-forward with an HTTP call and also, optionally, supply a date-time parameter to allow you to direct recovery to a particular point in time.
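The date-time parameter idea is easiest to see with the database taken out of the picture. The sketch below replays log entries against an in-memory `Map` and stops at an optional cut-off. Everything here is illustrative: the `rollForward` name, the numeric `timeStamp` values (real entries would carry Firestore timestamps) and the choice to nest the changed fields under `data` are simplifying assumptions, not the layout used in the code above.

```javascript
// Apply log entries, oldest first, to an in-memory copy of the restored
// collections, ignoring anything logged after the cut-off time.
function rollForward(restored, logEntries, cutoffMillis = Infinity) {
  const state = new Map(restored); // key: "collection/docId", value: fields
  const ordered = [...logEntries].sort((a, b) => a.timeStamp - b.timeStamp);

  for (const entry of ordered) {
    if (entry.timeStamp > cutoffMillis) break;
    const key = `${entry.collectionName}/${entry.documentId}`;
    switch (entry.transactionType) {
      case 'C':
        state.set(key, { ...entry.data });
        break;
      case 'U':
        // shallow merge, standing in for setDoc(..., { merge: true })
        state.set(key, { ...(state.get(key) || {}), ...entry.data });
        break;
      case 'D':
        state.delete(key);
        break;
    }
  }
  return state;
}

const logs = [
  { timeStamp: 1, transactionType: 'C', collectionName: 'c1', documentId: 'a', data: { field1: 'i' } },
  { timeStamp: 2, transactionType: 'U', collectionName: 'c1', documentId: 'a', data: { field2: 'kk' } },
  { timeStamp: 3, transactionType: 'D', collectionName: 'c1', documentId: 'a', data: {} },
];

// Recover to just before the (perhaps mistaken) delete at time 3
console.log(rollForward(new Map(), logs, 2).get('c1/a')); // { field1: 'i', field2: 'kk' }
// Recover everything: the document is deleted again
console.log(rollForward(new Map(), logs).has('c1/a')); // false
```

A cut-off like this is what would let you stop a recovery just short of the transaction that caused the damage in the first place.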

Managing your Backup files

Once backup files start piling up in your bucket, cost considerations mean that you'll quickly find you need ways of deleting the older ones. Certainly you can delete unwanted backups manually but clearly this is not a sensible long-term strategy.

Fortunately, Google Cloud Services provide exactly the tool you need - see Object Lifecycle Management for background.

Lifecycle Management enables you to define rules on a bucket that either delete its objects or change their storage class (Standard, Nearline etc). To use it, just click on the bucket in your project's Cloud Storage Page and select the Lifecycle tab.

Rules for deletion can be based on a wide range of criteria: the object’s age, its creation date, etc. For my own version of Google's backup scheduling function (see above), I chose to delete any object that was more than 8 days old. The weekly schedule I've defined means that my backup bucket generally contains only the most recent backup (the slightly over-generous 8 day allowance on the rule means that I've got a day's grace to recover should a backup job itself fail).
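To make the 8-day rule concrete, here is a small sketch. The rule object follows the action/condition shape used by Cloud Storage's lifecycle configuration. The `expiredObjects` helper is purely hypothetical: it imitates what Cloud Storage does for you so that you can reason about which backups a rule would catch, and the object names and dates are invented.

```javascript
// A lifecycle rule: delete any object once it is 8 days old
const deleteAfterEightDays = {
  action: { type: 'Delete' },
  condition: { age: 8 },
};

// Hypothetical helper: which objects would the rule select at time `now`?
// `created` and `now` are millisecond timestamps.
function expiredObjects(objects, now, rule = deleteAfterEightDays) {
  const dayMs = 24 * 60 * 60 * 1000;
  return objects
    .filter((o) => now - o.created >= rule.condition.age * dayMs)
    .map((o) => o.name);
}

// Two weekly backups, inspected on the following Sunday
const backups = [
  { name: 'export-2022-08-07', created: Date.UTC(2022, 7, 7) },
  { name: 'export-2022-08-14', created: Date.UTC(2022, 7, 14) },
];

console.log(expiredObjects(backups, Date.UTC(2022, 7, 21))); // [ 'export-2022-08-07' ]
```

With a 7-day schedule and an 8-day rule, the previous backup survives for a day after its replacement is due, which is the "day's grace" described above.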

In practice, testing that everything is working is trickier than you might expect. While the Object Lifecycle Management document says that Cloud Storage regularly inspects all the objects in a bucket for which Object Lifecycle Management is configured, it doesn't define what is meant by "regularly". Ominously, it goes on to say that changes to your Lifecycle rules may take up to 24 hours to come into effect. My own experience is that the rules themselves are often only checked every 24 hours or so - possibly even longer. You need to be patient. But Lifecycle Management is a very useful time-saver when you consider the complexities that would follow if you tried to do this yourself programmatically.

You might wonder how you might fit any "recovery to point of failure" arrangements into your backup storage scheme. If you're just maintaining a single backup, the `recoverableCollectionLogs` collection might as well be cleared down once the dump has been successfully created. There are of course other scenarios, but my own implementation of this scheme uses a scheduled backup that combines the following tasks:

- The system is taken "off the air" by setting a `maintenance_in_progress` database flag that logs out any active users and prevents them getting back in again

- The backup is taken

- The `recoverableCollectionLogs` collection is emptied (easier said than done - see below)

- The `data_maintenance_in_progress` flag is unset to bring the system back into service

In general, disposal of your log records raises many interesting issues. For one thing, as suggested earlier, the logs potentially deliver a useful "who did what and when" capability. So you might actually want to hang onto them for longer than is strictly necessary - a recovery run would then just have to specify a start time as well as an end time for the logs that are to be applied. But at some point the collection will have to be pruned and then, if your system accepts high volumes of transactions, deleting them can be quite a procedure. If you're happy to delete the entire collection and are prepared to launch the process manually, you can do this through the Cloud console. But if you want to do this in Javascript (in order to automate things and also, perhaps, to be more selective about what is deleted), you have to delete each document individually. Problems then arise because, if you're dealing with a very large collection, you'll have to "chunk" the process. If you don't, it may run out of memory. All this can raise some "interesting" design and coding issues. Advice on this point can be found in Google's Delete data from Cloud Firestore document.
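To give a feel for what "chunking" involves, here is a minimal sketch along the lines of the approach in Google's document. It assumes a `firebase-admin` Firestore instance is passed in as `db`. The function name `deleteCollectionInChunks` and the default chunk size are my own choices (batched writes have traditionally been limited to 500 operations, so anything comfortably below that seems a safe assumption).

```javascript
// Delete every document in a collection, a chunk at a time, so that only
// `chunkSize` documents are ever held in memory at once.
async function deleteCollectionInChunks(db, collectionPath, chunkSize = 400) {
  let deleted = 0;
  while (true) {
    // Read the next chunk of whatever is left in the collection
    const snapshot = await db.collection(collectionPath).limit(chunkSize).get();
    if (snapshot.empty) break;

    // Delete the whole chunk with a single batched write
    const batch = db.batch();
    snapshot.docs.forEach((logsDoc) => batch.delete(logsDoc.ref));
    await batch.commit();

    deleted += snapshot.size;
  }
  return deleted;
}

// Usage inside a Cloud Function (not run here):
//   const admin = require('firebase-admin');
//   admin.initializeApp();
//   await deleteCollectionInChunks(admin.firestore(), 'recoverableCollectionLogs');
```

Because each pass re-queries the collection, the loop ends naturally once nothing is left. For a really large collection you would still need to keep an eye on the function's timeout.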

In a simpler situation, your code might just look something like the following:

    const admin = require("firebase-admin");
    admin.initializeApp();
    const db = admin.firestore();

    ...........

      .then(async (responses) => {
        const response = responses[0];
        console.log(`Operation Name: ${response['name']}`);

        // now clear down the Logs
        const logsCollRef = db.collection("recoverableCollectionLogs");
        const logsSnapshot = await logsCollRef.get();

        // Wait for every delete to complete before the function returns
        // (awaits inside a forEach callback would not be waited for)
        await Promise.all(
          logsSnapshot.docs.map((logsDoc) => logsDoc.ref.delete())
        );
      })

Note that Firestore CRUD commands in a function follow different patterns from those you'd use in a webapp. This is because functions run in Node.js. When following example code in Google's online documents you need to select the Node.js tab displayed above each sample block of code.

Strategy

Now that you've had a chance to play with the various features of the Google Cloud's backup and recovery facilities you should be in a better position to start thinking about how you might deploy these in your particular situation.

Guidance on the issues is well described in Google's Disaster recovery planning guide document. Clearly no two systems are going to be alike and precise arrangements will depend on your assessment of risk and how much you're prepared to spend by way of "insurance".

Costs for Google Cloud's various storage strategies are described in Google's Cloud Storage pricing document. You may also have noticed a handy "Monthly cost estimate" panel on the right of the bucket-creation page, but in practice you might find it more comforting to run a few experiments.

This has been a looooong post, but I hope you've found it both interesting and useful. I wish you the best of luck with your backup system development!

Other posts in this series

If you've found this post interesting and would like to find out more about Firebase you might find it worthwhile having a look at the Index to this series.
