Rendering JavaScript is often complicated and resource-intensive, and it can significantly affect the performance and user experience factors that SEO success depends on.

This is why it’s crucial to understand where these issues can occur and how they can impact your website.

Here are the 8 main things to watch out for within a JavaScript-powered website that can impact SEO performance:

- Rendering speed

- Main thread activity

- Conflicting signals between HTML and JavaScript

- Blocked scripts

- Scripts in the head

- Content duplication

- User events

- Service workers

1. Rendering speed

Rendering can be expensive because JavaScript has to be downloaded, parsed, compiled and executed before its output is available. This causes significant issues when that work falls on a user’s browser or a search engine crawler.

JavaScript-heavy pages that take a long time to process are at risk of not being rendered or processed by search engines at all.

“If your web server is unable to handle the volume of crawl requests for resources, it may have a negative impact on our capability to render your pages. If you’d like to ensure that your pages can be rendered by Google, make sure your servers are able to handle crawl requests for resources.”

-Google Webmaster Central Blog

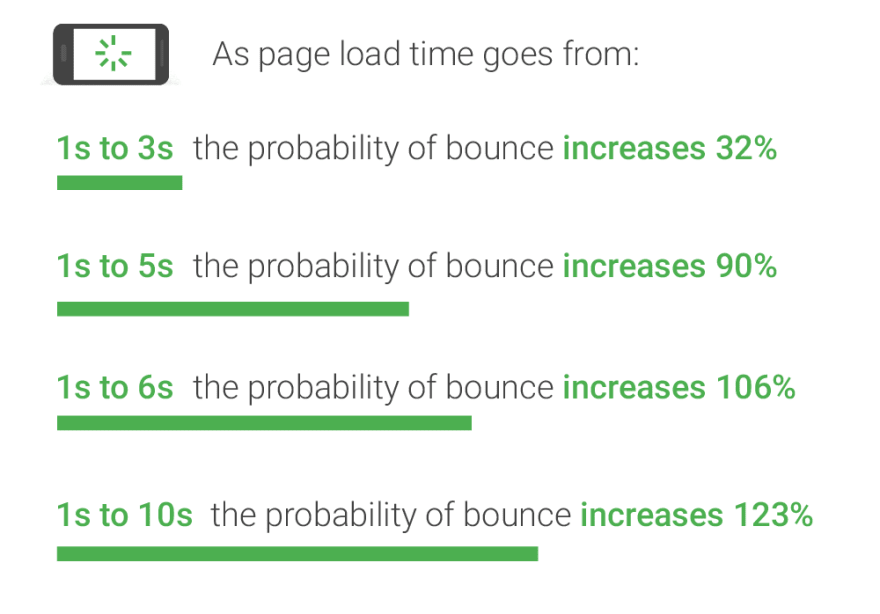

Slow-rendering JavaScript also affects your users: as page load time increases, bounce rates tend to rise. Users now expect a page to load within a few seconds at most, and getting a page that depends on JavaScript rendering to meet that expectation can be challenging.

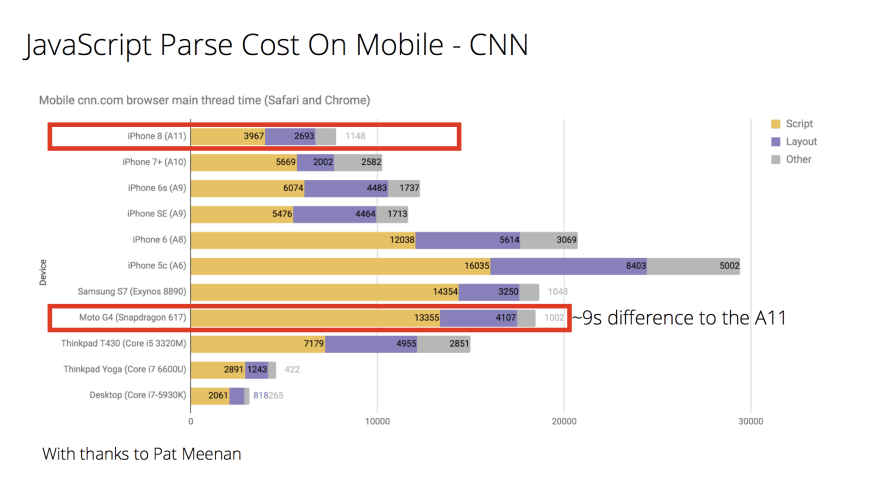

Another issue to consider is that a user’s device and CPU will usually have to do the hard work with JavaScript rendering, but not all CPUs are up for the challenge. It’s important to be aware that users will experience page load times differently depending on their device.

A site that appears to load quickly on a high-end phone won’t necessarily do so for a user accessing the same page on a lower-end phone.

“What about a real-world site, like CNN.com? On the high-end iPhone 8 it takes just ~4s to parse/compile CNN’s JS compared to ~13s for an average phone (Moto G4). This can significantly impact how quickly a user can fully interact with this site.”

2. Main thread activity

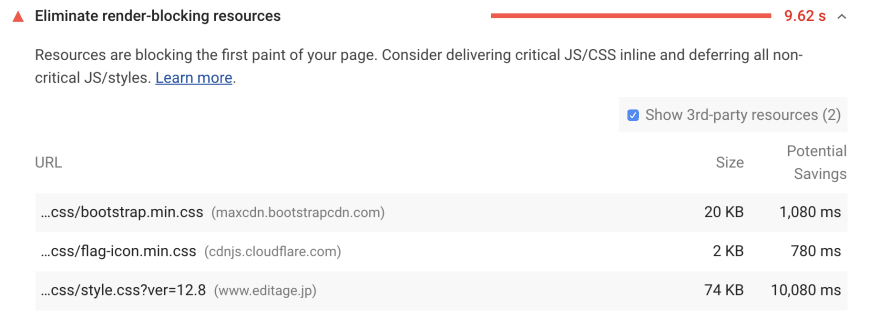

JavaScript runs on the browser’s main thread, which handles one task at a time, so other work such as parsing HTML and laying out the page can be held up while a script is parsed, compiled and executed. Queues and bottlenecks can form as a result, delaying the whole page load, and a search engine won’t be able to see content that depends on those scripts until they have run.

Delays within the main thread can significantly increase the time it takes to load a page for search engines, and for the page to become interactive for users, so avoid blocking main thread activity wherever possible.

Keep an eye on how many scripts are being executed and where requests are timing out, as these are some of the main culprits behind bottlenecks.
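One rough way to put a number on main thread bottlenecks is to look at how long individual tasks run. The sketch below is illustrative only: it assumes you already have a list of task durations in milliseconds (for example, copied from a browser performance trace), and the sample figures are made up. The 50 ms cut-off is the threshold browsers use to classify a "long task"; the function names are hypothetical.

```python
# Hypothetical sketch: estimate how much main-thread time is "blocking".
# Tasks longer than 50 ms are what browsers classify as long tasks.
LONG_TASK_THRESHOLD_MS = 50


def long_tasks(task_durations_ms):
    """Return only the tasks long enough to delay other main-thread work."""
    return [d for d in task_durations_ms if d > LONG_TASK_THRESHOLD_MS]


def blocking_time_ms(task_durations_ms):
    """Sum the part of each task that runs beyond the long-task threshold."""
    return sum(max(0, d - LONG_TASK_THRESHOLD_MS) for d in task_durations_ms)


# Made-up sample durations, in milliseconds.
sample_tasks = [12, 250, 48, 90, 30]
print(long_tasks(sample_tasks))
print(blocking_time_ms(sample_tasks))
```

A higher total suggests the main thread is spending more time unable to respond to anything else, which makes those long tasks good candidates for splitting up or deferring.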

3. Conflicting signals between HTML and JavaScript

First impressions count with search engines, so make sure you’re giving them clear, straightforward instructions about your website in the HTML as soon as they come across the page.

Adding important meta tags with JavaScript rather than in the HTML is advised against for two reasons: Google won’t see these tags straight away because its rendering process is delayed, and search engines that can’t render JavaScript won’t see them at all.

All search engines will use the signals from the HTML in the initial fetch to determine crawling and indexing. Google and the few search engines that have rendering capabilities will then render pages at a later date, but if the signals served via JavaScript differ from what was initially found in the HTML, then this will contradict what the search engine has already been told about the page.

For example, if you use JavaScript to remove a robots meta tag like noindex, Google will have already seen the noindex tag in the HTML and won’t waste resources rendering a page it has been told not to include in its index. This means that the instructions to remove the noindex won’t even be seen as they’re hidden behind JavaScript which won’t be rendered in the first place.

Aim to include the most important tags and signals within the HTML where possible and make sure they’re not being altered by JavaScript. This includes page titles, content, hreflang and any other elements that are used for indexing.

“The signals you provide via JavaScript shouldn't conflict with the ones in the HTML.”

-John Mueller, Google Webmaster Hangout
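To check a page for this kind of conflict, you can compare the signals in the HTML the server returns with the signals in the DOM after rendering. The following is a minimal sketch using only Python’s standard library. It assumes you have already saved both versions of the page as strings (how you obtain the rendered HTML is up to you), and the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class SignalParser(HTMLParser):
    """Collects the title, robots meta tag and canonical link from a page."""

    def __init__(self):
        super().__init__()
        self.signals = {"title": "", "robots": None, "canonical": None}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            self.signals["robots"] = (attrs.get("content") or "").lower()
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.signals["canonical"] = attrs.get("href")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.signals["title"] += data


def extract_signals(html):
    parser = SignalParser()
    parser.feed(html)
    parser.close()
    parser.signals["title"] = parser.signals["title"].strip()
    return parser.signals


def conflicting_signals(raw_html, rendered_html):
    """Return {signal: (raw value, rendered value)} for every mismatch."""
    raw, rendered = extract_signals(raw_html), extract_signals(rendered_html)
    return {key: (raw[key], rendered[key]) for key in raw if raw[key] != rendered[key]}


# Made-up example: JavaScript swaps a noindex for "index, follow".
raw_page = (
    '<html><head><title>Shoes</title>'
    '<meta name="robots" content="noindex"></head><body></body></html>'
)
rendered_page = (
    '<html><head><title>Shoes</title>'
    '<meta name="robots" content="index, follow"></head>'
    '<body><h1>Shoes</h1></body></html>'
)
print(conflicting_signals(raw_page, rendered_page))
```

Any key in the result is a signal that JavaScript has changed, and is worth moving into the initial HTML.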

4. Blocked scripts

If a script is blocked, for example by a rule in the robots.txt file, search engines may not be able to see and understand the page as intended. Scripts that are crucial to the layout and content of a page need to be accessible so that the page can be rendered properly.

“Blocking scripts for Googlebot can impact its ability to render pages.”

-John Mueller, Google Webmaster Hangout

This is especially important for mobile devices, as search engines rely on being able to fetch external resources to be able to display mobile results correctly.

“If resources like JavaScript or CSS in separate files are blocked (say, with robots.txt) so that Googlebot can’t retrieve them, our indexing systems won’t be able to see your site like an average user. This is especially important for mobile websites, where external resources like CSS and JavaScript help our algorithms understand that the pages are optimized for mobile.”

-Google Webmaster Central Blog
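A quick way to spot blocked scripts is to test your resource URLs against your robots.txt rules. The sketch below uses Python’s built-in `urllib.robotparser`; the robots.txt content and URLs are made-up examples, and `blocked_resources` is a hypothetical helper name. Note that this standard-library parser does simple prefix matching and does not understand the `*` and `$` wildcards that Google supports, so treat the result as a first pass rather than a definitive answer.

```python
from urllib.robotparser import RobotFileParser


def blocked_resources(robots_txt, resource_urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in resource_urls if not parser.can_fetch(user_agent, url)]


# Made-up robots.txt that blocks a JavaScript directory for all crawlers.
sample_robots = """\
User-agent: *
Disallow: /assets/js/
"""

sample_urls = [
    "https://example.com/assets/js/app.js",
    "https://example.com/assets/css/site.css",
]

print(blocked_resources(sample_robots, sample_urls))
```

Any script or stylesheet that shows up in the returned list is one a crawler following these rules would not be able to fetch when rendering the page.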

5. Scripts in the head

When JavaScript is served in the head, it can delay the rendering and loading of the entire page. A script in the head that isn’t marked async or defer has to be downloaded and executed before the browser can continue on to the body.

“Don't serve critical JavaScript in the head as this can block rendering.”

-John Mueller, Google Webmaster Hangout
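To find scripts that could be blocking rendering in this way, you can scan a page’s head for external scripts that load without `async` or `defer`. This is a minimal sketch using Python’s standard library; the class name and sample markup are hypothetical, and it only looks at external scripts (inline scripts in the head also run before the body is parsed, but they are not listed here).

```python
from html.parser import HTMLParser


class HeadScriptAudit(HTMLParser):
    """Lists external scripts in <head> that load without async or defer."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking_scripts = []

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head:
            attrs = dict(attrs)
            # Module scripts are deferred by default.
            is_module = (attrs.get("type") or "").lower() == "module"
            non_blocking = "async" in attrs or "defer" in attrs or is_module
            if attrs.get("src") and not non_blocking:
                self.blocking_scripts.append(attrs["src"])

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def blocking_head_scripts(html):
    audit = HeadScriptAudit()
    audit.feed(html)
    audit.close()
    return audit.blocking_scripts


# Made-up page with a mix of script loading strategies.
sample_page = (
    '<html><head>'
    '<script src="/a.js"></script>'
    '<script src="/b.js" defer></script>'
    '<script async src="/c.js"></script>'
    '<script type="module" src="/d.js"></script>'
    '</head><body><script src="/e.js"></script></body></html>'
)
print(blocking_head_scripts(sample_page))
```

Scripts reported here are candidates for `defer`, `async`, or a move to the end of the body, depending on whether the page needs them before first render.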

Serving JavaScript in the head is also advised against because it can cause search engines to ignore other head tags below it. If a snippet in the head contains or injects an element that isn’t valid there, such as an iframe or an image, the HTML parser treats the head as finished and the body as begun. Any elements below that point that were meant to be part of the head, such as hreflang or canonical tags, can then be ignored.

“JavaScript snippets can close the head prematurely and cause any elements below to be overlooked.”

-John Mueller, Google Webmaster Hangout

6. Content duplication

JavaScript can cause duplication and canonicalisation issues when it is used to serve content. This is because if scripts take too long to process, then the content they generate won’t be seen.

This can cause Google to only see boilerplate, duplicate content across a site that experiences rendering issues, meaning that Google won’t be able to find any unique content to rank pages with. This can often be an issue for Single Page Applications (SPAs) where the content dynamically changes without having to reload the page.

Here are the thoughts of John Mueller, Webmaster Trends Analyst at Google, on managing SPAs:

“If you're using a SPA-type setup where the static HTML is mostly the same, and JavaScript has to be run in order to see any of the unique content, then if that JavaScript can't be executed properly, then the content ends up looking the same. This is probably a sign that it's too hard to get to your unique content -- it takes too many requests to load, the responses (in sum across the required requests) take too long to get back, so the focus stays on the boilerplate HTML rather than the JS-loaded content.”
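One way to see whether your pages look identical before JavaScript runs is to fingerprint the text in each page’s unrendered HTML and group URLs that share the same fingerprint. The following is a rough sketch using Python’s standard library. It assumes you have already fetched the initial HTML for each URL; the names and sample pages are hypothetical.

```python
import hashlib
from collections import defaultdict
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text that sits outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip and data.strip():
            self.parts.append(" ".join(data.split()))


def text_fingerprint(html):
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.parts)
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def duplicate_groups(pages):
    """pages maps URL -> unrendered HTML; returns groups of URLs with identical text."""
    groups = defaultdict(list)
    for url, html in pages.items():
        groups[text_fingerprint(html)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Made-up SPA shell served for two different routes, plus one static page.
spa_shell = (
    '<html><head><title>My Shop</title></head><body>'
    '<div id="app">Loading...</div><script src="/app.js"></script></body></html>'
)
sample_pages = {
    "/shoes": spa_shell,
    "/hats": spa_shell,
    "/about": "<html><body><h1>About us</h1></body></html>",
}
print(duplicate_groups(sample_pages))
```

URLs that land in the same group have nothing unique to offer until their scripts run, which is the situation described in the quote above.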

7. User events

JavaScript elements that require interaction may work well for users, but they don’t work for search engines, which experience JavaScript very differently from a regular user.

This is because search engine bots can’t interact with a page in the same way that a human being would. They don’t click, scroll or select options from menus. Their main purpose is to discover and follow links to content that they can add to their index.

This means that any content that depends on JavaScript interactions to be generated won’t be indexed. For example, search engines will struggle to see any content hidden behind an ‘onclick’ event.
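To get a sense of how much of a page depends on click handlers, you can separate elements with a real `href` from elements that only respond to an `onclick` attribute. This is a simple sketch using Python’s standard library, with a hypothetical class name and made-up markup. It only catches inline `onclick` attributes, not listeners attached from a script file.

```python
from html.parser import HTMLParser


class ClickOnlyAudit(HTMLParser):
    """Separates crawlable links from elements that only react to onclick."""

    def __init__(self):
        super().__init__()
        self.crawlable_links = []
        self.click_only = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        href = attrs.get("href") or ""
        has_real_href = bool(href) and not href.lower().startswith(("#", "javascript:"))
        if tag == "a" and has_real_href:
            self.crawlable_links.append(href)
        elif "onclick" in attrs:
            self.click_only.append((tag, attrs["onclick"]))


def audit_clicks(html):
    audit = ClickOnlyAudit()
    audit.feed(html)
    audit.close()
    return audit.crawlable_links, audit.click_only


# Made-up navigation mixing a real link with click-only elements.
sample_nav = (
    '<a href="/products">Products</a>'
    '<a href="#" onclick="loadReviews()">Reviews</a>'
    '<div onclick="showSpecs()">Specs</div>'
    '<button onclick="openMenu()">Menu</button>'
)
links, click_only = audit_clicks(sample_nav)
print(links)
print(click_only)
```

Anything in the second list that leads to content you want indexed is worth exposing through a regular link or including in the page without requiring a click.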

Another thing to bear in mind is that Googlebot and other search engine crawlers clear cookies, local storage and session storage data after each page load. This is a problem for website owners who rely on cookies to serve personalised, unique content that they want to have indexed.

“Any features that requires user consent are auto-declined by Googlebot.”

8. Service workers

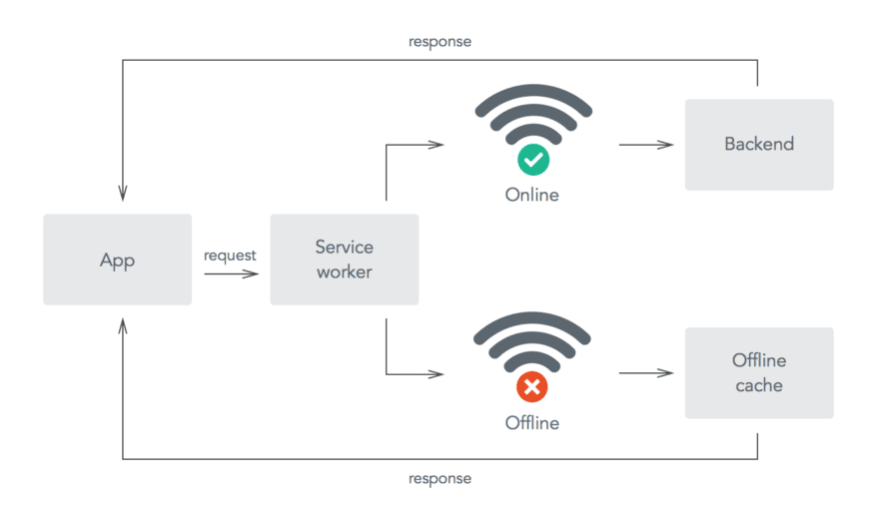

A service worker is a script that runs in the background of the browser on a separate thread. Service workers can intercept a page’s network requests and answer them from their own cache, meaning that pages can work offline without the server being involved.

The benefit of using a service worker is that it decreases page load time because assets that are already cached don’t have to be downloaded again. However, the issue is that Google and other search engine crawlers don’t support service workers.

Because of this, a service worker can make it look like content is rendering correctly in your own browser when a crawler would see something different. Make sure your website and its key content still work properly without a service worker, and test your server configuration to avoid this issue.

Hopefully this guide has provided you with some new insights into the impact that JavaScript can have on SEO performance, as well as some areas that you can look into for the websites that you manage.
