Azure Functions is a very reliable platform for serverless applications, but until now we had to implement our own retries to process Cosmos DB and Event Hubs events reliably. Messages were sometimes lost because the checkpoint advances even when the function fails.

Reliable retries are difficult to implement, but a new Azure Functions feature, Retry Policy, makes them much easier.

> 📢 NEW FEATURE - define a custom retry policy for any trigger in your function (fixed or exponential delay). Especially useful for Event Hub or CosmosDB functions to have reliable processing even during transient issues docs.microsoft.com/en-us/azure/az…
>
> 18:19 PM - 03 Nov 2020

The Azure Functions error handling and retry documentation has been updated.

Retry Policy lets you add retries to your application simply by adding an attribute to your function. This is a long-awaited feature for those of us who love the Change Feed.

I love the Cosmos DB Change Feed, so I used CosmosDBTrigger to try out the retry policy.

For now, to use FixedDelayRetry and ExponentialBackoffRetry, please install the Microsoft.Azure.WebJobs package version 3.0.23 or later from NuGet.
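As a rough sketch of the exponential variant: the attribute takes a maximum retry count, a minimum interval, and a maximum interval. The class and function name `ExponentialRetrySample` and the interval values below are illustrative assumptions, not settings from my test, and the database, collection, and connection names are the same ones I use in this post.

```csharp
using System.Collections.Generic;
using Microsoft.Azure.Documents;
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;

// Hypothetical sample: name and intervals are assumptions for illustration only.
public class ExponentialRetrySample
{
    // -1 = retry without limit; wait at least 4 seconds and at most 15 minutes between attempts.
    [ExponentialBackoffRetry(-1, "00:00:04", "00:15:00")]
    [FunctionName("ExponentialRetrySample")]
    public void Run([CosmosDBTrigger(
        databaseName: "HackAzure",
        collectionName: "TodoItem",
        ConnectionStringSetting = "CosmosConnection",
        LeaseCollectionName = "Lease")]
        IReadOnlyList<Document> input, ILogger log)
    {
        // Any unhandled exception thrown here causes the same batch to be retried.
        log.LogInformation("Documents modified " + input.Count);
    }
}
```

This is meant as an alternative to a fixed delay on a single function, not as a second function to deploy next to another one reading the same lease collection.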

Realistically, you can never afford to lose a message received from the Cosmos DB Change Feed or Event Hubs, so with either trigger you shouldn't limit the number of retries. For no limit, just set the maximum retry count to -1.

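```csharp
using System;
using System.Collections.Generic;
using Microsoft.Azure.Documents;
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;

public class Function1
{
    // Retry without limit (-1), waiting one minute between attempts.
    [FixedDelayRetry(-1, "00:01:00")]
    [FunctionName("Function1")]
    public void Run([CosmosDBTrigger(
        databaseName: "HackAzure",
        collectionName: "TodoItem",
        ConnectionStringSetting = "CosmosConnection",
        LeaseCollectionName = "Lease")]
        IReadOnlyList<Document> input, ILogger log)
    {
        log.LogInformation("Documents modified " + input.Count);

        foreach (var document in input)
        {
            // Log each id so the retries can be found in Application Insights.
            log.LogInformation("Changed document Id " + document.Id);
        }

        // Always fail so that the retry policy kicks in.
        throw new Exception();
    }
}
```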

However, setting up monitoring and alerts with Application Insights is essential, because endless retries on an unrecoverable error bring processing to a complete halt.

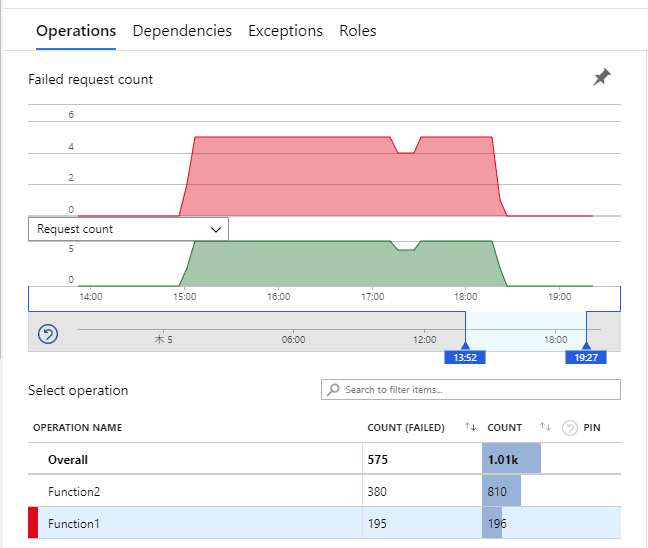

To make sure that the retry really takes place and that the Change Feed doesn't move forward in the meantime, I wrote code that always throws an exception. After I deployed it and let it run for a while, the following error kept occurring.

After running it for a while, I removed the code that threw the exception and redeployed, and the errors stopped.

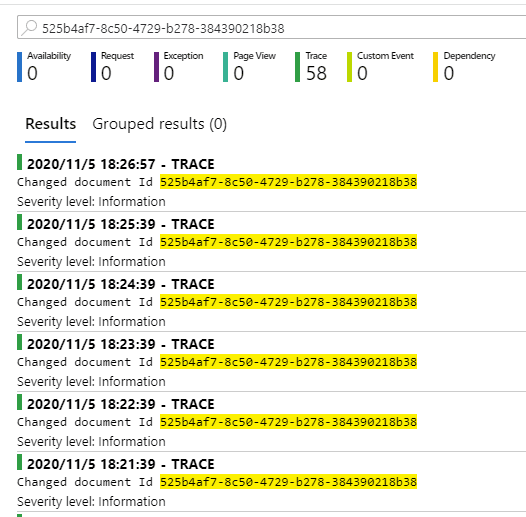

I logged the id of each document to Application Insights, so searching by id shows a log entry every minute. This confirms that the Change Feed does not advance while the function is being retried.

I redeployed a couple of times along the way, and no Change Feed events were lost. Once I fixed the code to work correctly, processing resumed from where it had stopped, as if nothing had happened.

Retry Policy worked perfectly in combination with the Cosmos DB Change Feed. It's the behavior I've been waiting for for a long time.

However, I don't recommend using it with triggers other than Cosmos DB and Event Hubs. Especially for HTTP and Timer triggers, Durable Functions is a better choice for implementing reliable processing.

Azure Functions is really great.

Top comments (1)

How come my checkpoint gets advanced when I shut down a function that is failing indefinitely?