Last week I attended Dan North’s workshop “Testing Faster”. Dan North is the originator of the term Behavior Driven Development (BDD). The whole workshop was amazing, but one thing really surprised me. Since BDD is basically another approach to Test Driven Development (TDD), I had expected high test coverage to be one of Dan’s goals when writing software. What I learned instead was that he doesn’t actually care about overall test coverage, and he explained why in a very convincing way. In this article I have written down what I learned about test coverage.

The Meaning of Test Coverage

Test coverage (also known as code coverage) is a number that tells you how much of your production code is executed by automated tests. If you don’t have any automated tests, you have a test coverage of 0%. If every single line of your production code is executed by at least one automated test, you have a coverage of 100%. A high number might feel great, but even 100% doesn’t mean that there are no bugs in your code. At best it tells you whether the use cases you have written tests for work as expected.
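To make that last point concrete, here is a minimal sketch. The function `discount` and its test are made up for illustration and are not from the workshop. The single test executes every line, so a coverage tool would report 100%, yet a nonsensical input still slips through.

```python
def discount(price, percent):
    # Hypothetical production code: reduce a price by a percentage.
    return price - price * percent / 100


def test_discount():
    # This one test executes every line of discount(): 100% line coverage.
    assert discount(100, 10) == 90


test_discount()

# No test covers a percentage above 100, so nothing warns us
# that the function happily returns a negative price.
negative_price = discount(100, 150)
```

The coverage number is the same before and after you add a test for the missing case, which is exactly why it says so little about bugs.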

The Cost of Testing

Automated tests can save you a lot of money! They can detect bugs before they are deployed to the production system, which can prevent serious damage. They can also help you design your code in a way that makes it easy to maintain and extend in the future. But writing tests is not free. It takes time, and that time could also be spent on other things. Dan calls this the opportunity cost. Don’t get me wrong on this point. I am not saying that tests are a bad idea. They are not. In fact, they can be very important. But I want to encourage you to think about what makes sense to test and what doesn’t.

The Risk Plane

So how do we decide what to test? Imagine a software system which looks like this:

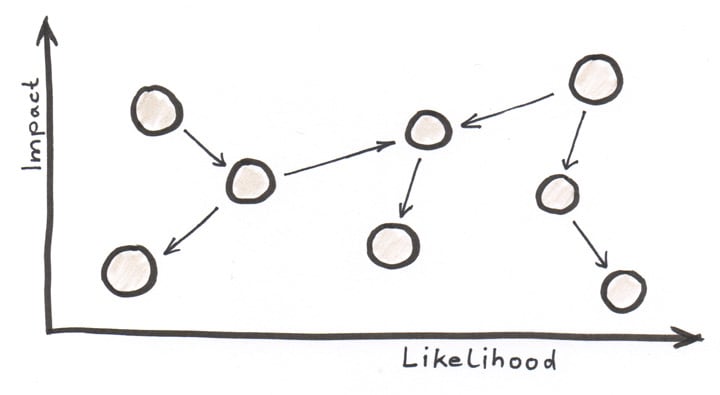

There are many components and dependencies between them. Now let’s add a coordinate system to the graph. The further to the right a component sits, the more likely it is to break. The more impact a failure in a component would have, the higher we draw it on the vertical axis. Let’s take a look at the risk plane:

Now imagine that the test coverage of our system is 80%. That sounds like quite a good number. But look at the component in the top right corner. It is very likely that something will break in that component, and the impact of a failure would be very high. With that in mind, 80% is actually quite low for that part. On the other hand, the component in the lower left corner is very unlikely to break, and even if it breaks the impact will be low. So does it really make sense to spend so much time testing this component just to keep the test coverage high? Wouldn’t it be more useful to spend our time writing tests for the components in the upper right corner, or even building new features? Every hour we spend on coding is money invested by our company. It is our responsibility as software developers to make reasonable decisions about how to spend that money.

The Stakeholder Planes

Dan North says, “The goal of testing is to increase the confidence for stakeholders through evidence”. Our tests can provide that evidence. Increasing confidence doesn’t necessarily mean reaching 100%. It takes some pragmatism to decide how much effort to spend in which situation. And who are we doing this for? The stakeholders! It is not enough to tell them that we are great developers and everything is going to be alright. We should give them evidence. They should know which behavior is assured by tests. Developers tend to have a blind spot: most of them think that their code is great and works. The stakeholders usually do not have that much confidence in the developers, which is why they need the evidence.

Often there are many kinds of stakeholders. They can be users, people from the security department, customer support and many others. We can build software that is very unlikely to fail, but if it is illegal we still cannot ship it. This is why we have to extend our model with another axis. We have to add planes for our stakeholders:

Usually, the stakeholders see the software from different perspectives. Sometimes aspects that you didn’t even think about are essential to them. Maybe it is extremely important that certain user roles must not access certain data. That would require a bigger testing effort. Talking to all of your stakeholders is very helpful. Ask them what the most important thing is that you should concentrate on, and which part they would spend the most effort on. If you do that with every stakeholder, you will get a good idea of where to draw your components in the risk planes and how much testing effort to put into each component.
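If you want to combine the answers from several stakeholders, one simple option is to keep a separate plane per stakeholder and look at the worst case for each component. This is only a sketch under my own assumptions: the stakeholder and component names are invented, risk is again likelihood times impact, and taking the maximum is just one possible way to merge the planes.

```python
# Hypothetical input: stakeholder -> component -> (likelihood, impact),
# each estimated on a 0.0 to 1.0 scale after talking to that stakeholder.
planes = {
    "users": {"checkout": (0.5, 0.8), "user_roles": (0.2, 0.3)},
    "security": {"checkout": (0.3, 0.6), "user_roles": (0.5, 1.0)},
}


def worst_case_risk(planes):
    # For each component, keep the highest risk any stakeholder sees.
    risk = {}
    for assessments in planes.values():
        for component, (likelihood, impact) in assessments.items():
            score = likelihood * impact
            risk[component] = max(risk.get(component, 0.0), score)
    return risk


risk = worst_case_risk(planes)
most_critical = max(risk, key=risk.get)
# Users barely care about user_roles, but security does,
# so it ends up as the most critical component overall.
```

Here the component that looks harmless from the users’ perspective turns out to need the most testing effort once the security plane is added.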

The Pragmatic Way

What Dan North made really clear to me is that test coverage doesn’t tell us much about the quality of the software. 80% can be too little for one component and a waste of time for another. When we write code we should first understand our stakeholders. What is really important to them? We should estimate the impact and the likelihood of potential bugs and pragmatically decide how much effort to spend on which component. We have to keep in mind that we are paid for our work. Every hour that we spend on coding costs money. It’s our responsibility as professional software developers to spend that money wisely.

This article was first published on team-coder.com.

Top comments (20)

The main problem that tests have is that they're usually written by programmers.

Most of the weird bugs that I've encountered are edge cases that customers trigger. Of course it's good to cover the bug then and create a test case for that issue, but as you said, 'coverage' is misleading in that case.

That is why in TDD you write your test before implementing the production code. That way you make sure you don't just look at what the method does and write a test for that outcome. The result is a more independent approach.

But I see your point: separate testers may detect errors better than the developer who implemented the production code. On the other hand, they may also just assert the outcome of the production code method.

I realized that the best tester is the client: they always find a way to break the code...

A week ago I wrote some code to encrypt files using OpenSSL. To create them, I need two files and a password. My function creates two new files and uses them to create a final one. I checked everything, all the weird validations and "what if..." cases, and asked the whole Support team what the common user does. More than 4 hours of testing. A colleague with more experience with the users also tested my code. Apparently, everything was fine.

Well, the code only stayed in production for 24 hours... One client found a way to make it crash. After 2 hours of trying to figure out why, we finally found it: he had manually added the extension because (in his own words) "It doesn't have one" (Windows doesn't show it). It was the only case we didn't consider because, usually, the user NEVER touches those files (once every 5 years) and is even less likely to modify them.

So, I conclude that it doesn't matter if you do tons of test cases, the user always finds the case you never considered... Of course, do the cases to find the most common errors. The weirdest ones, let the user find them.

As Aaron said below (above ? :P), customers are 'clever'. You need to take into consideration all of the weird things they might do including renaming files to match extension requirements and that might be either

a) way too time consuming to write tests for all of the cases, and from a business perspective it might not be feasible cost wise.

b) you most likely will miss something

Imho best thing is to treat all user input as junk all of the time, and constantly sanitize and compare with what you actually need.

Also remember that the web is 'typeless', so user input is always tricky to validate.

What about legacy code?

Don't you think that in this case, 100% coverage is pretty great?

Yes, having a test coverage of 100% is always great. However, everything we do costs time and money. If I had unlimited resources I would probably also try to write tests for every possible case in a legacy system ;-)

And what if there was a tool that creates coverage for legacy code automatically?

Do you know of such a tool?

Hehe, if you find such a tool and that tool creates only useful tests then let me know ;-)

Will do :)

So what's your recommendation? Testing only the main components?

It always depends ;-) If tests help you to build your software then do TDD. If you want to decide where to start writing tests for a legacy system then you might ask your stakeholders what’s most important for them and start with the components which are most likely to break and which would cause the biggest damage if they broke.

Thanks a lot!

You're making good and interesting points :)

:)

There are tools like IntelliTest msdn.microsoft.com/en-us/library/d...

Jessica Kerr (in a very interesting talk) mentions a tool called QuickCheck, which lets you run property-based tests to find cases where your program might fail: youtu.be/X25xOhntr6s?t=20m28s
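For anyone who hasn't seen property-based testing, here is a rough hand-rolled sketch of the idea. It does not use QuickCheck or its API; `average`, `within_bounds` and `find_counterexample` are names I made up. Instead of writing individual cases, you state a property and let generated inputs try to break it, starting with small inputs.

```python
import random


def average(values):
    # Hypothetical function under test.
    return sum(values) / len(values)


def within_bounds(values):
    # Property: an average never lies outside the smallest and largest input.
    result = average(values)
    return min(values) <= result <= max(values)


def find_counterexample(prop, runs=100, seed=0):
    rng = random.Random(seed)
    for run in range(runs):
        # Start with small inputs: the very first list is always empty.
        size = rng.randint(0, min(run, 5))
        case = [rng.randint(-100, 100) for _ in range(size)]
        try:
            holds = prop(case)
        except Exception:
            holds = False  # a crash counts as a failed property
        if not holds:
            return case
    return None


counterexample = find_counterexample(within_bounds)
# The empty list breaks average() with a division by zero.
```

Real tools generate far more varied inputs and shrink failing cases for you, but the principle is the same: the generator finds the empty list that a hand-written test might never have included.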

Thanks for sharing Raúl! Do you use such tools? Do they work well for you?

Not that I have ever seen 100% coverage, but if your test suite is comprehensive, then uncovered code is dead code: if it really served some purpose, wouldn't it be covered by a specification from one of the stakeholders?

Risk-based testing is essentially the same idea. Consider where a bug would hit you most often or where it would do the most damage; that's where you have to test. Testing is always a spot check, never a complete proof. A good test suite will, in the best case, detect the presence of a bug. But it will never be able to show the absence of any bug. Code coverage is nice (and comparatively easy to measure), but it should not be the primary metric to strive for. In my experience, getting code coverage higher than 70% (provided that the existing test cases really are meaningful) is hardly ever worth it. Better to spend your time on documentation.

My main issue with this reasoning is that if it's that unimportant that it works correctly then why build it in the first place, or waste time maintaining it. Even more time since there would be no tests to indicate what's working, what may be broken, how it may be broken etc.

I think in many cases building a component with low test coverage is still more useful for many users than nothing at all.

Code coverage doesn't seem to help with side fx

The side effect gets executed and covered, but that doesn't imply the side effect is asserted; sometimes you can't even check for it

But it does help you remember why the code was written in the first place, and forces you to review your own code

Just aim for 100% responsibilities tested, or 100% features tested, not 100% code covered
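A small sketch of the side effect point, with made-up names (`register`, `register_broken`, `outbox`): both tests below execute every line of the function, so both give the same coverage, but only one of them would notice if the side effect went missing.

```python
def register(email, outbox):
    # Side effect: queue a welcome message for the new user.
    outbox.append(f"Welcome, {email}!")
    return True


def register_broken(email, outbox):
    # Same return value, but the side effect was forgotten.
    return True


def weak_test(fn):
    # Covers the code but never looks at the outbox.
    assert fn("a@example.com", []) is True


def strong_test(fn):
    # Tests the responsibility: a welcome message must be queued.
    outbox = []
    fn("a@example.com", outbox)
    assert outbox == ["Welcome, a@example.com!"]


weak_test(register)
strong_test(register)
weak_test(register_broken)  # passes although the feature is broken

try:
    strong_test(register_broken)
    caught = False
except AssertionError:
    caught = True  # only the strong test notices the missing side effect
```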

I assume this is NOT about unit testing. If that's the case, it looks good. Otherwise, this whole thing should be reconsidered or forgotten. It is already suspicious that it talks about testing without considering the different types of tests.