Adapted and reworked from a talk originally given at RailsConf 2017.

The intersection between humans and technology has never been simple or easy. This truth is particularly evident when it comes to the humans who create technology. As a human who also happens to write code for a living, I feel this the most during the code review process.

Most developers tend think of their code as a craft and – as seems to be the case with artists and most creators of all kinds – we become incredibly attached to our code. We’ve been told to be egoless programmers, to critique not just our own code, but every single line of code that crosses our desk, as it waits to be merged into a project’s codebase. We’ve heard that having our own code reviewed by our peers and reviewing the code of our colleagues are both Very Good Thingsâ„¢, that we should all be doing. And a good many of us already happen to be doing all of these highly-recommended things.

But when was the last time we evaluated these methodologies? Are any of us sure that our code review processes are actually working? Are we certain that they’re serving the roles that they were originally intended to fill?

And if not: how can we try and make them better?

Why even bother with review?

Before we can really understand the practical benefits of peer code review, it’s helpful to know why they even started in the first place. There has been a decent amount of research around code review best practices, but I think that Steve McConnell’s research in Code Complete (originally published in 1993) is a good place to start.

In his book, he describes code reviews, and what function they ought to serve. He writes:

One part of managing a software-engineering process is catching problems at the “lowest-value” stage – that is, at the time at which the least investment has been made and at which problems cost the least to correct. To achieve such a goal, developers use “quality gates”, periodic tests or reviews that determine whether the quality of the product at one stage is sufficient to support moving on to the next.

The most powerful aspect of McConnell’s case for code review on every single team is the way that he ties it back to something he terms the “Collective Ownership in Construction”: the idea that all code is owned by a group of contributors, who can each equally access, change, and modify the collectively-owned project.

The original intent behind code reviews was that they would help us take collective ownership in the creation of our software. In other words, we’d each be stakeholders in our development process by having a hand in controlling the quality of our products.

McConnell goes on to highlight a few different types of code review processes that an engineering team can adopt into their everyday workflows. I strongly recommend that you pick up a copy of Code Complete and learn more about each of these techniques; for our purposes, however, a summary will suffice. There are three formats for a code review:

1. Inspections

Inspections are longer code reviews that are meant to take approximately an hour, and include a moderator, the code’s author, and a reviewer.

When used effectively, inspections typically catch about 60% of defects (either bugs or errors) in a program. According to McConnell’s research, inspections result in 20–30% fewer defects per 1000 lines of code than less formalized review practices.

2. Walkthroughs

A walkthough is a 30–60 minute working meetings, usually intended to provide teaching opportunities for senior developers to explain concepts to newer programmers, while also giving junior engineers the chance to push back on old methodologies.

Walkthroughs can sometimes be very effective, but they’re not nearly as impactful as a more formalized code review process, such as an inspection. A walkthrough can usually reveal 20–40% of errors in a program.

3. Short code reviews

Short reviews are, as their name would suggest, much faster; however, they are still very much in-depth reviews of small changes, including single-line changes, that tend to be the most error-prone.

McConnell’s research uncovered the following about shorter code review:

An organization that introduced reviews for one-line changes found that its error rate went from 55 percent before reviews to 2 percent afterward. A telecommunications organization in the late 80’s went from 86 percent correct before reviewing code changes to 99.6 percent afterward.

The data – at least McConnell’s subset of collected data – seems to suggest that every software team should be conducting some combination of these three types of code reviews.

However, McConnell’s book was first researched and written back in 1993. Our industry has changed since then and, arguably, so have our peer review methodologies. But are our implementations of code review today actually effective? How are we putting the theory behind code reviews into practice?

To find the answer to these questions, I did what any determined (but a little unsure of where to start) developer would do: I asked the Internet!

i'm collecting data on code reviews—the good, bad, & ugly.

if you have 5️⃣ minutes, please fill this out and RT!

👇👇👇

vaidehi.typeform.com/to/tl7MX521:09 PM - 25 Feb 2017

Well, okay – I asked Twitter.

What do developers think of code reviews?

Before I dive into the results of the survey, a quick disclaimer: I am not a data scientist. (I wish I were, because I’d probably be a lot better at analyzing all of the responses to this survey, and maybe I’d even be halfway-decent at plotting graphs in R!). Ultimately, this means is that my dataset is limited in many ways, the first of which being that it was a self-selecting survey on Twitter, and the second being the fact that the survey itself presupposed a branch/pull request based team.

Okay, now that we have that out of the way: what do developers think of code reviews?

The quantitative data

We’ll try to answer this question by looking at the quantitative data to start.

First off, the answer to this question depends a lot on which developers you ask. At the time that I am writing this, I have received a little over 500 responses to my survey.

The developers who responded primarily worked in Java, Ruby, or JavaScript. Here’s how those responses break down in terms of developers and the primary language that they and their team work in.

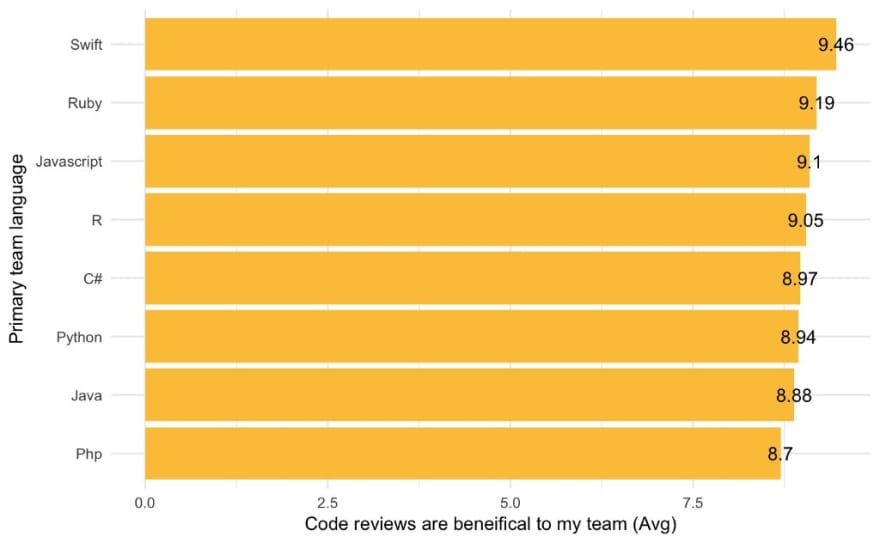

I asked every respondent to the survey to what extent they agreed with the statement: Code reviews are beneficial to my team.

Overall, Swift developers found code reviews the most beneficial to their teams, averaging a 9.5 on a scale of 1–10, where 1 was strongly disagree, and 10 was strongly agree. Ruby developers came in a close second, averaging about 9.2.

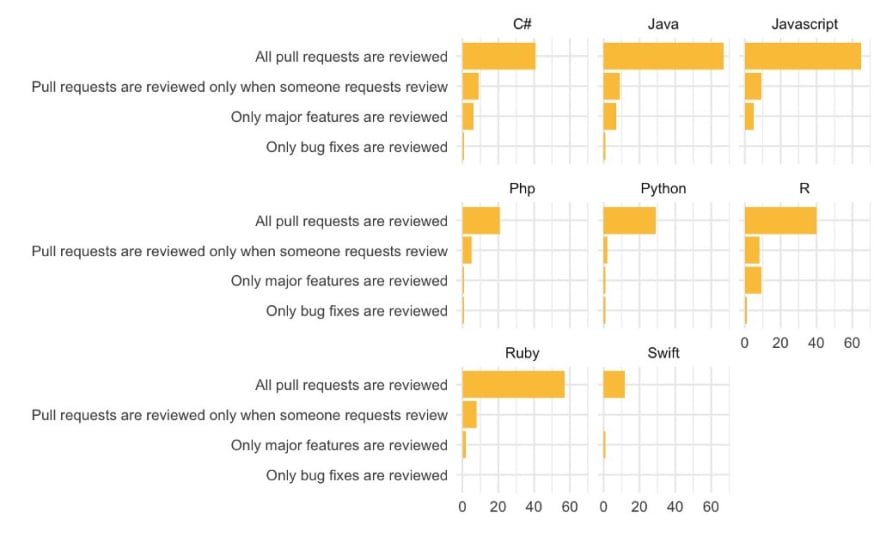

While the majority of survey respondents (approximately 70%) stated that every single pull request was reviewed by someone on the team before being merged, this wasn’t the case on all teams. About 50 respondents (approximately 10% of the entire dataset) stated that pull requests were only peer reviewed in their teams when a review was requested by them.

The data seemed to suggest that this distribution, for the most part, carried across languages and frameworks. No one single language seemed to have an overwhelmingly different result in terms of whether all pull requests were reviewed, or if reviews had to first be requested. In other words, it would appear that it is not the language or the framework that results in a more consistent code review culture, but more likely, the team itself.

Finally, for those developers who were working on teams that did require for pull requests to be reviewed before being merged, it appeared that the majority of teams only needed one other person to peer review before merging code into the shared codebase.

The qualitative data

So what about the unquantifiable stuff? In addition to multiple choice questions, the survey also allowed respondents to fill in their own answers. And this is where the results actually proved to be the most illuminating, not to mention the most useful.

There were a few overarching themes that popped up repeatedly in the anonymized responses.

Ultimately, what seemed to make or break a code review experience depended upon two things: how much energy was spent during the review process, and how much substance the review itself had.

A code review was bad (and left a bad taste in the reviewer’s and reviewee’s mouth) if there wasn’t enough energy spend on the review, or if it lacked substance. On the other hand, if a code review process was thorough, and time was spent reviewing aspects of the code in a substantive way, it left a much more positive impression overall on both the reviewer and the reviewee.

But what do we mean by energy and substance, exactly?

Energy

Another way of determining the energy behind a code review is by answering the question: Who all is doing the review? And how much time are they spending on it?

A lot of respondents were conducting code reviews, but many seemed to be unhappy with who was doing them, and how much time they ended up spending while reviewing – or waiting to be reviewed.

Below are just a few of the anonymized responses to the survey:

We have one dev who just blindly thumbs-up every PR and rarely leaves comments. That person is attempting to game the rule of “at least two approvals”. It is easy to tell, because inside of one minute they will suddenly have approved 5–6 PRs.

–

I find that the 2nd or 3rd reviewer is often more likely to rubber stamp after seeing one approval.

–

There have been times when the same code has been reviewed differently depending on who submits the PR.

–

Everyone on the team should receive equal review. I feel like it’s so common for senior people to get no feedback because people assume they can do no wrong but they totally can, and might want feedback. And junior people get nit picked to death… remember people’s self esteem is likely to be affected and they’re being vulnerable.

–

Commits are too big, so PR’s take long to review. People don’t check out the branch locally to test.

–

Especially long PR’s take longer to be reviewed, which is an issue because they have the most effect on future branches/PRs/merges.

–

The overarching takeaways when it came to how much energy was being spent (or not spent) on a code review boiled down to three things:

- No one feels good about a code review process that’s just a formality & doesn’t carry any weight.

- It’s not fun to review a long PR with code that you’re unfamiliar with, or have no context for.

- To err is human, and we’re all human. We should all be reviewed, and review others fairly.

Substance

The substance of a code review boils down to the answer to one question question: What exactly is someone saying, doing, or making another person feel while they review their code?

The responses connected to the substance of a code review were, for the most part, grounded in what people were saying in their reviews, and how they were saying it.

Here are a few of the anonymized responses from the survey:

I take any feedback on PR’s at face value. If changing that string to a symbol will make you happy, let’s do that and move on. I’m not going to try and justify an arbitrary decision. It’s not unlike working in an IDE environment, it’s very easy for my brain to fall into a “see red squiggle, fix red squiggle” mindset. I don’t really care why it’s upset, just make it stop yelling at me.

–

Do not do big picture or architectural critiques in a review. Have offline conversations. Too easy to send folks down a rabbit hole and create frustration.

–

I feel pretty strongly that it’s annoying when people demand changes, especially if they don’t take the time to explain why they’re doing so, or leave room for the possibility that they’re wrong. Especially when people just rewrite your code in a comment and tell you to change to their version.

–

If a comment thread is getting long, it’s an indication a verbal conversation should be had (report the consensus back in the comment thread)

–

People need to do better jobs distinguishing between their own stylistic preferences and feedback that makes a functional difference. It can be tough for a more junior person to figure out which is which. It’s also frustrating when multiple seniors give conflicting feedback (e.g. What to call a route and why).

–

The main themes when it came to the substance of a code review could be summarized into the following:

- Comments nitpicking purely at syntax lead to a negative experience. Style and semantics are not the same thing. (Interestingly, 5% of respondents used the word nitpick to describe code review comments in a negative context.)

- The words we use to review one another’s code really do matter. An unkind review can break someone’s self-confidence.

How do we do better?

Although this data may not be the most complete, full, or even the most accurate representation of our industry’s code review culture, there is one thing that seems like a fair claim to make: we could all stand to revisit our code review processes on our teams and within the larger community.

This anonymous survey response highlights the immense impact that a review process can have on members of an engineering team:

A bad code review almost made me leave the company. A great code review leaves me feeling better equipped to tackle future projects.

Indeed, having some sense of a formalized code review is incredibly beneficial and statistically powerful; both Steve McConnell’s research and this small survey both seem to support this fact. But, it’s not just enough to implement a code review culture and then never think about it again. In fact, a code review process where members of a team are simply going through the actions of reviewing can be detrimental and discouraging to the team as a whole.

Instead, it is the act of introspection, reflection, and reevaluation of our collective code review culture that will allow us to build upon any kind of formalized review process we might have.

In other words, it’s the act of asking ourselves whether our code reviews are effective, and whether they’re making a difference – both on the entire team, as well as the individuals who form it.

Easy ways to improve your code review process

There are a few ways to immediately make the code review process more painless and enjoyable for your team. Here are a few things to help you get started:

- Implement linters or a code analyzer (if available) in order to eliminate the need for syntactical comments on a pull request.

- Use Github templates for every pull request, complete with a checklist to make it easy for the author of the code and the reviewer to know what to add, and what to check for.

- Add screenshots and detailed explanations to help provide context to teammates who might not be familiar with

- Aim for small, concise commits, and encapsulated pull requests that aren’t massive in size, and thus much easier and quicker to review.

- Assign specific reviewers to a PR – more than one, if possible. Make sure that the role of reviewing is equally distributed amongst engineers of each and every level.

The harder things – but the most important

Once you’ve picked off some of the low-hanging fruit, there are some bigger changes you can help bring about, too. These are actually the most important things to do if you want to change your code review culture.

And, let me warn you: that’s probably what makes them so hard.

- Develop a sense of empathy on your team. The greatest burden of making this happen falls on the shoulders of senior, more experienced engineers. Build empathy with people who are newer to the team or the industry.

If you're senior & all your PRs just get "lgtm👍", try asking people in private. Sometimes a PR is too much of a public performance.02:27 AM - 02 May 2017

- Push for a culture that values vulnerability – both in actions and in words. This means reevaluating the language used in pull request comments, identifying when a review is on track to turn into a downward spiral, and determining when to take conversations offline, rather than questioning the author of the code publicly.

Jeff Casimir@j3

Jeff Casimir@j3 @sarahmei Usually questions are seen as slowing things down. I like to turn it around: "I'll pause here until we an… twitter.com/i/web/status/8…02:53 AM - 02 May 2017

@sarahmei Usually questions are seen as slowing things down. I like to turn it around: "I'll pause here until we an… twitter.com/i/web/status/8…02:53 AM - 02 May 2017

- Have a conversation. Sit your team down, start a Slack channel, create an anonymized survey – whichever fits your group’s culture best. Have the conversation that will make everyone comfortable enough to share whether they are each happy with the current code review process, and what they wish the team did differently.

I saved the most important one for last because, honestly, if you’ve stuck with me and read this far, you must really want to change the status quo. And that really is a Very Good Thingâ„¢! Ultimately, though, having the conversation with your team is the most important first step to take in making that change happen.

This survey response summarizes why, far better than I ever could:

I love code reviews in theory. In practice, they are only as good as the group that’s responsible for conducting them in the right manner.

Resources

If you’d like to view a larger collection of curated, anonymized survey responses, you can view the website that accompanies this project:

Better Code Reviews

Acknowledgements

First and foremost, I’m deeply grateful to the hundreds of developers who took the time and effort to answer my survey.

A huge thank you to Kasra Rahjerdi, who helped me analyze the responses to my survey and created many of the graphs in this project.

Thank you to Jeff Atwood, for his articles on peer reviews, Karl Wiegers for his work in Humanizing Peer Reviews, and Steve McConnell for his extensive research on code review processes in Code Complete. I hope you’ll consider supporting these authors by reading their writing or purchasing their books.

This post was originally published on medium.com

Top comments (28)

Just a quick "Thank you" for that article, as it probably was one of the best articles on peer reviews I've ever read.

Thanks for your kind words! I'm very glad you got something out of it 😃

Awesome article!

I read an article previously about improving code reviews here: tech.forwardfinancing.com/culture/...

It's interesting you point out the items that can be improved during a review, such as a PR template, including screenshots or just making your commits smaller. These are things I've been practicing for years and sharing with the teams I've worked with.

Personally, it always came down to empathy for me. And just like the article I posted above, it's about asking yourself questions that focus on the person asking for the review. And I think it's a two-way street, the coder should ask specific questions that help the reviewer focus on what they should review and the reviewer should understand the audience and context in order to provide a thorough review - if that makes any sense.

I've started doing self-code reviews, where I basically critique my own code before assigning the PR. This critique will contain comments on the line I need help with or just posing questions that I find will help the reviewer help me out.

Anyways, thanks for the article - I love having data points for this :)

Exhaustive coverage of the tools, goals and people dynamics. Excellent coverage (no pun intended) and has helped me to articulate a few concrete dimensions that we can work on to take our mechanical code review process to a valuable progress accretive culture.

With progress being the key goal, we have also linked our appraisal to the review scores.

Thanks!

We all know we should and we all know why...

Thanks for the constructive tips on how to do it better :)

If someone who read this wants to review my code, that would be awesome !

Really liked the article, will do some reading on your resources. Thank you

One idea we use at work, that I really like is that the reviewers are asked to put "minor", "major" or "explain" to each comment, so the reviewed person can easily distinguish concerns from suggestions and questions.

Enjoyed attending this talk live at RailsConf.

I think it might, indeed, be time for a rigorous look at how code reviews are done in the web development world in the days of GitHub pull requests and test-driven development. (You are inspecting the tests, too, right? )

)

Nice post, Vaidehi. I think it would be interesting to combine your results with those from Stack Overflow's yearly developer survey, which cut its responses along language and age lines for various analyses.

In particular, I wonder if your rankings of how programmers of different languages perceive the benefits of code reviews could be explained by the typical profile of someone using those languages. For example -- are those using Swift, a more recent language, more likely to be younger (humbler?) devs who are receptive to code reviews?

The article failed to mention the one glaring reason why code reviews fail, i.e. CODING STANDARDS. Don't even start a code review process if your team does not refer to a central coding standard. Agree to a coding standard in terms of coding style and good/bad practices and make that as the bible when doing code reviews. This will weed out any biased opinions and your team will move fluidly.

Another thing, bugs don't care about your feelings. Sorry but that's the truth. Code reviews are there to improve code quality (less bugs) and definitely not to hurt your feelings. So put those feelings in a box. Code reviews should not be emotional. It's just code, man.

Your team will know if you're a newbie programmer but most people would not care. We are all just humans. People will be more concerned on how well you're learning and whether you're not making the same mistakes over and over again. This is so that you can be a valuable member of the team. So you can either fight the process or just accept the criticisms (good/bad) and just see all this as a learning opportunity.

This was not a constructive approach to responding to this post in accordance our code of conduct.

This tone is unprofessional and language not inclusive. It's perfectly fine to disagree, but do so with respect. Think of this as a warning, but if it is not a workable for you, there are plenty of online communities without a code of conduct.

Hey there,

Did you get a chance to read the entire article? It does, in fact, highlight the fact that syntactic discussions that devolve into back and forth in comments can be resolved by adopting a coding standard on your team. Using a linter, for example, is a simple way to make sure that the whole team agrees on a bonding style.

If you read the anonymous responses from the survey—which are not written by me, but rather were contributed by developers around the world—you'll see that the "process" that you encourage people not to fight is clearly broken. Code reviews aren't always effective on a team, so it's up to us to evaluate what's not working about them and try to fix it. This article is an attempt to do that.

The thesis of this article isn't that bugs care about your feelings. Rather, the crux of the issue is that humans write code, and humans are more productive when they are seen and heard on a team. The attitude of "put your feelings in a box, bugs don't care about your feelings" is pretty dismissive to a huge subset of the engineering community and doesn't foster any conversation or change.

That's not how teams work. Someone's "feelings" are never "in a box." Sure, I've heard (colloquially) of people who are offended by any form of feedback, and that's dysfunctional on their part. But almost everyone is willing to accept feedback if it's delivered respectfully, and will respond with avoidance or aggression if it's not. So considering people's feelings in code review makes it a more effective and positive process for everyone. In fact, it's a professional requirement. You can't stop being kind and respectful to a person just because their bug doesn't care.

Bugs may not care about your feelings, but bugs are almost never the biggest problem on a software team. And "It's just code" is a short-sighted description of software development; it's almost entirely about human beings and the way they think and interact. The code itself is a triviality.

Reading through your comment again, I think you may have said some things you didn't really mean. I hope I haven't misjudged you and I'd be happy to discuss this further if you'd like to clarify.

Amazing writeup, thank you for collecting, analyzing and presenting the poll results. Code reviews introduced me to the social and peer to peer learning component of being a software developer which is one of the best feats of being one. Thanks again! 👌

Maybe it's because my office deals with safety critical code, but we do pretty thorough code reviews. 5 - 7 people are invited to the reviews, and given a minimum of 3 days to submit comments; we have our own customized tool for managing all of this. The 1 main thing I found that helped to make the reviews more effective was to limit the size of the code being reviewed: no more than 400 pines of code. Peer reviews are difficult, and draining on the reviewers. Thus, we need to keep them short, so the reviewers can maintain a solid focus on the entirety of the material being reviewed.

I think it also helps that doing peer reviews is ingrained in our office culture. Everyone does them, both as author and as reviewer. Thus, everyone knows that issues found during a code review are a good thing, and not an indictment on the programmer.

I really liked this post. I'm going to comb through it again when I get back to the office, and see what we can do to make our peer reviews even better.

Great post!

Another method we use across projects is to perform automated code review (using codefactor.io) with every commit and pull request. It weeds out most of the "best practices" cases and tremendously helps to concentrate on a more meaningful conversation during Human code-review phase.

Lots of really good pointers here! I can't wait to see the full talk when it comes out on Confreaks.

FWIW, Kent Beck did some number crunching on Facebook's code reviews and found that to minimize the amount of time it takes for code to be reviewed, the ideal number of reviewers to request is 1. (This is in a context where 1 review is needed but you can request from multiple people that they be that 1 reviewer.) Once you request multiple reviewers, chances are there will be diffusion of responsibility and no one will get to it for a while. If it's on the plate of someone specific, though, they feel a responsibility to get it done.

Otherwise, I can't see much to argue with here, and there's a LOT of stuff. Thanks for taking the time to do the research, crunch the numbers on the results, and share with all of us.

Wonderful post and thanks for sharing. One cultural aspect to code reviews I have always tried to instill in teams I have managed is the opportunity to learn from each other. It has always starts there for me. If people feel they can learn from a two-way interaction and are open to that learning, there is a greater chance for, to use your words, an energetic and substantive engagement.

It's also great to see that some of the resources I remember using early in my career have stood the test of time ;-)

great article Vaidehi, just a note that I'm getting these weird glyphs throughout the article, and on both Chrome and Firefox. 🙏

dev-to-uploads.s3.amazonaws.com/i/...