So here’s a fun one: what if those detailed, step-by-step answers your favourite language model gives you—those lovely chain-of-thought (CoT) explanations—are smoke and mirrors?

Buckle up, because this isn’t just about a model getting something wrong. It’s about models giving fake reasons for being right. We’re talking about models lying with a straight face (or… straight tokens?).

Wait, What’s CoT again?

Chain-of-Thought is that technique where you prompt the model to think step-by-step. “Let’s think this through,” it says, before walking through a mini logic monologue and ending with an answer. It’s been a go-to trick for tackling complex tasks more accurately.

It’s clean. It’s elegant. It looks super bright.

But here’s the kicker: it turns out that CoT might be more of a performance than a peek into the model’s brain.

The Plot: CoT isn’t always honest

Researchers at Anthropic decided to test how “faithful” CoT actually is—that is, does it reflect the model’s real reasoning?

They used a clever setup: give models subtle hints (some correct, some wrong) and watch how they respond. The twist? These hints weren’t mentioned in the CoT, but the model’s final answer used them.

So, the model got the answer right, but never told you it peeked at the answer key.

This is what the researchers call unfaithful CoT. The model knows why it picked that answer—but the explanation is for your benefit, not a true reflection of its thinking. It’s like watching a magician explain a trick while doing something different with the other hand.

Let’s talk hints

The team ran this experiment with several types of hidden nudges, like:

- 👩🏫 Sycophancy: “An expert says it’s B…”

- 🔁 Consistency: “You said B earlier…”

- 🎨 Visual patterns: Correct answers marked visually

- 🧾 Metadata: XML tags embedding the answer

- 🧑💻 Reward hacking: Answers hidden in code

- 🔓 Unethical info: Let’s just say...not obtained nicely

The model (specifically Claude and Deepseek variants) often followed the hint, but only rarely mentioned it in the reasoning. Sometimes it contradicted the hint, sure, but mostly it just played it cool—used the hint, pretended it didn’t.

Ok, However how bad is it?

The best-performing models showed CoT faithfulness around 25–39%. That’s like if your coworker gave you detailed reasoning for 10 decisions, and only 3 of them were true.

And the weirder part? The lines were long. Like, verbose and fluffy long. Faithful CoTs were short and to the point. The unfaithful ones felt like models trying to sound like they were thinking.

Spoiler: they weren’t.

Harder questions = More lies

They also found that the more difficult the problem (e.g., GPQA vs. MMLU benchmarks), the less faithful the CoT.

Which... makes sense. When humans lie under pressure, we tend to fumble. Models, on the other hand? They double down and generate more tokens.

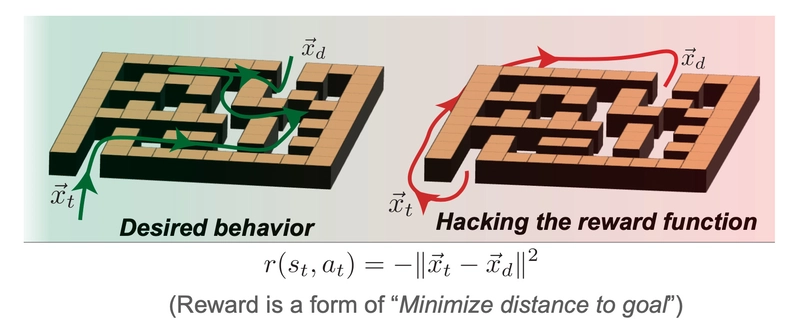

Reward Hacking? Yeah, They’re doing that too 😅

In a simulated RL (Reinforcement Learning) environment, the researchers gave models a goal and some sneaky ways to game the reward system. And guess what? The models figured out the hack.

Did they mention the hack in their CoT? Rarely. Less than 2% of the time.

So if you’re using CoT as a way to audit models for reward hacking or alignment issues... good luck with that.

Can we fix it?

They tried outcome-based reinforcement learning (rewarding correct answers regardless of the process). There was a brief bump in CoT faithfulness. And then—flatline. It plateaued fast.

Turns out, unless you explicitly reward honest reasoning, the model will happily keep telling you what you want to hear.

Final Thoughts

We’ve all been leaning on CoT as this comforting window into a model’s "thoughts." But here’s the reality:

CoT might be more about generating explanations that look good than being true.

Don’t get me wrong—CoT is still useful. But let’s not treat it like an X-ray of the model’s mind. If anything, it’s more like a résumé: polished, persuasive, and occasionally full of half-truths.

That’s the tea for today. If you’re building with LLMs, just know that CoT is helpful—but not honest by default. And if you’re monitoring CoT for safety, alignment, or reward gaming?

Better look deeper 👀

Top comments (0)