Meta recently launched Llama 4, a herd of multimodal AI models that includes Llama 4 Maverick, on April 5th.

And you know all the hype that's been around Llama 4 for quite some time, right? 🥴

So, I decided to check whether the hype is justified by putting Maverick to the test against a recent open-source AI model, DeepSeek v3 0324.

The results are pretty shocking and not something you might expect! 😳

TL;DR

If you want to jump straight to the result, here’s a quick summary of how these two compare in coding, reasoning, writing, and large context retrieval:

- Coding: No doubt about it. For coding, DeepSeek v3 0324 is far better than Llama 4 Maverick.

- Reasoning: Both DeepSeek v3 0324 and Llama 4 Maverick are equally good at reasoning, though I slightly prefer DeepSeek v3 0324.

- Creative Writing: Both models are great at writing, and you won’t go wrong choosing either of them. However, I find that Llama 4 Maverick writes in a more detailed way, while DeepSeek v3 0324 writes in a more casual style.

- Large Context Retrieval: Llama 4 Maverick seems to be good at finding information in a large context. It’s not perfect, but it’s at least better than DeepSeek v3 0324.

If you are interested in such AI model comparisons, check out Composio. ✌️

Brief on Llama 4 Maverick

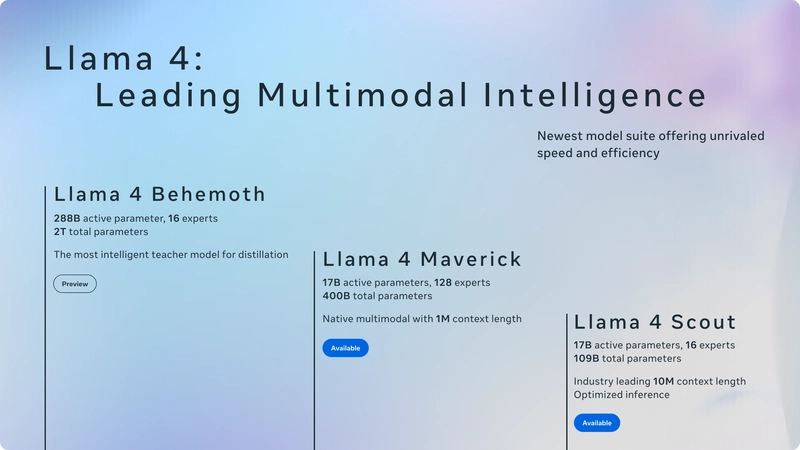

Meta’s recent release includes two available models, Llama 4 Scout and Llama 4 Maverick, and a third, Llama 4 Behemoth, which is still in training.

ℹ️ Llama 4 Scout is interesting: it features a 10M token context window, by far the largest of any AI model so far. 🫨 But here we’re mostly interested in the Maverick variant. Maybe Scout is worth covering in a future article? Let me know. 😉

Llama 4 Maverick has 17B active parameters and a 1M token context window. It is a general-purpose model built primarily to excel at image and text understanding tasks, which makes it a solid choice for chat applications and assistants in general.

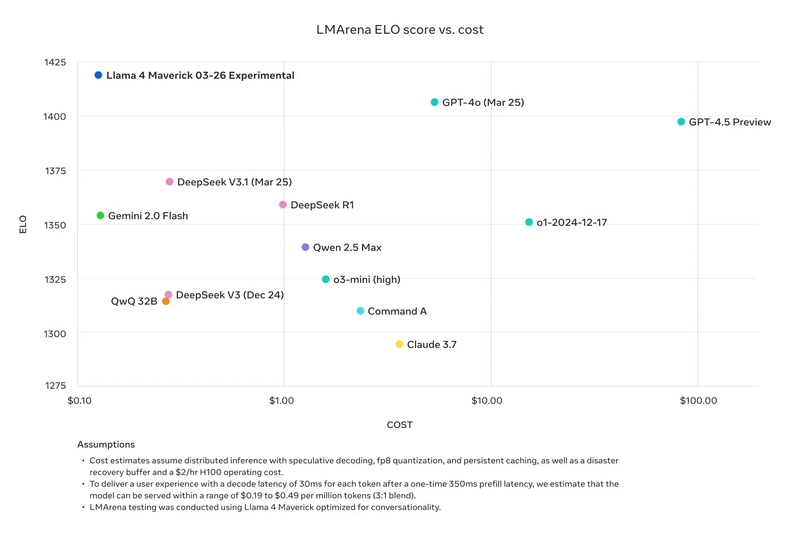

Now, it’s clear that this is more of a general-purpose model. Here’s a benchmark Meta published, an LMArena Elo score comparing it with recent AI models. It shows Maverick beating the other models in the chart not just on performance but also on price, which is super cheap ($0.19-$0.49 per 1M input and output tokens).
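To put that price in perspective, here’s a quick cost estimate. It assumes the $0.19-$0.49 range is a blended price per 1M tokens covering input and output together; actual pricing depends on the provider and may bill input and output separately.

```python
# Assumption: $0.19-$0.49 is a blended price per 1M tokens (input + output).
PRICE_PER_MILLION_LOW = 0.19
PRICE_PER_MILLION_HIGH = 0.49


def estimate_cost(num_tokens: int, price_per_million: float) -> float:
    """Dollar cost of processing num_tokens at a given per-1M-token price."""
    return num_tokens / 1_000_000 * price_per_million


# Example: a single ~100K-token prompt, the size of the haystack test in this article.
tokens = 100_000
low = estimate_cost(tokens, PRICE_PER_MILLION_LOW)
high = estimate_cost(tokens, PRICE_PER_MILLION_HIGH)
print(f"${low:.3f} to ${high:.3f}")
```

Under that assumption, even a 100K-token prompt costs only a few cents.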

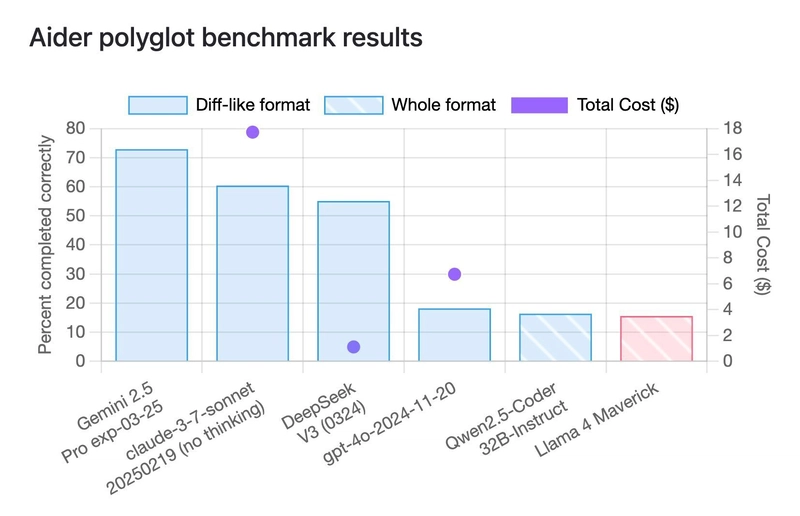

Here’s the Aider polyglot benchmark to compare how good this model is at coding:

And fair enough, as this benchmark shows, the model does not seem to be very good at coding: it ranks last among the recent AI models compared.

But we can’t overlook the fact that this is just a 17B active-parameter model, and it performs better than Gemma 3 27B and GPT-4o Mini and nearly matches the 32B Qwen 2.5 Coder, which is a dedicated coding model!

Here’s a quick video by Matthew Berman that gives a broader overview of the Llama 4 models.

Let’s test Maverick against a slightly bigger model, DeepSeek v3 0324 (37B active parameters), on coding, reasoning, writing, and large context retrieval tasks to see whether it’s any good and whether the benchmarks match the responses we get from Maverick.

Coding Problems

1. Sandbox Simulation

Prompt: Develop a Python sandbox simulation where users can add balls of different sizes, launch them with adjustable velocity, and interact by dragging to reposition. Include basic physics to simulate gravity and collisions between balls and boundaries.
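The heart of this prompt is a small physics loop. Below is a minimal sketch of one frame for a single ball, assuming screen coordinates (+y points down), made-up `GRAVITY` and `RESTITUTION` values, and no rendering or input handling. It illustrates the technique and is not the code either model produced. One detail worth noting: clamping the ball back inside the box and then setting the velocity’s sign explicitly avoids a common bug where a ball that still overlaps a wall has its velocity flipped every frame and appears to stick.

```python
from dataclasses import dataclass

GRAVITY = 900.0      # px/s^2, hypothetical value; +y points down (screen coordinates)
RESTITUTION = 0.8    # fraction of speed kept after hitting a wall (assumed)


@dataclass
class Ball:
    x: float
    y: float
    vx: float
    vy: float
    radius: float


def step(ball: Ball, dt: float, width: float, height: float) -> None:
    """Advance one ball by dt seconds with gravity and wall bounces."""
    # Semi-implicit Euler: update velocity first, then position.
    ball.vy += GRAVITY * dt
    ball.x += ball.vx * dt
    ball.y += ball.vy * dt

    # Clamp back inside the box, then point the velocity away from the wall.
    # Using abs() instead of plain negation means a ball still touching the
    # wall can't have its velocity flipped back toward it on the next frame.
    if ball.x - ball.radius < 0:
        ball.x = ball.radius
        ball.vx = abs(ball.vx) * RESTITUTION
    elif ball.x + ball.radius > width:
        ball.x = width - ball.radius
        ball.vx = -abs(ball.vx) * RESTITUTION
    if ball.y - ball.radius < 0:
        ball.y = ball.radius
        ball.vy = abs(ball.vy) * RESTITUTION
    elif ball.y + ball.radius > height:
        ball.y = height - ball.radius
        ball.vy = -abs(ball.vy) * RESTITUTION


ball = Ball(x=50, y=50, vx=200, vy=0, radius=10)
for _ in range(600):  # ten seconds at 60 FPS
    step(ball, 1 / 60, width=800, height=600)
```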

Response from Llama 4 Maverick

You can find the code it generated here: Link

Here’s the output of the program:

Almost everything works, no doubt, but the ball-to-ball collision physics is clearly wrong. The balls also shouldn’t be able to stick to the side walls, right? That breaks the simulation, and the overall polish of the project isn’t great either. I’d say it did the job, but not perfectly.
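For comparison, here’s a sketch of what correct ball-to-ball collision handling usually looks like: the overlap is separated along the line joining the centers, and the velocity components along that line are exchanged as in a 1D elastic collision, while the tangential components stay unchanged. It assumes perfectly elastic collisions and that mass scales with radius squared (a hypothetical choice so bigger balls are heavier); it isn’t taken from either model’s code.

```python
import math
from dataclasses import dataclass


@dataclass
class Ball:
    x: float
    y: float
    vx: float
    vy: float
    radius: float

    @property
    def mass(self) -> float:
        # Assumption: mass proportional to area.
        return self.radius ** 2


def collide(a: Ball, b: Ball) -> None:
    """Resolve an elastic collision between two overlapping balls, in place."""
    dx, dy = b.x - a.x, b.y - a.y
    dist = math.hypot(dx, dy)
    overlap = a.radius + b.radius - dist
    if overlap <= 0 or dist == 0:
        return  # not touching (or exactly concentric, which we skip)

    nx, ny = dx / dist, dy / dist  # unit normal from a to b
    total = a.mass + b.mass

    # Separate the balls along the normal; the lighter ball moves more.
    a.x -= nx * overlap * b.mass / total
    a.y -= ny * overlap * b.mass / total
    b.x += nx * overlap * a.mass / total
    b.y += ny * overlap * a.mass / total

    # Relative speed along the normal; positive means approaching.
    rel = (a.vx - b.vx) * nx + (a.vy - b.vy) * ny
    if rel <= 0:
        return  # already moving apart

    # Elastic exchange along the normal (conserves momentum and energy).
    impulse = 2 * rel / total
    a.vx -= impulse * b.mass * nx
    a.vy -= impulse * b.mass * ny
    b.vx += impulse * a.mass * nx
    b.vy += impulse * a.mass * ny
```

With equal sizes, a head-on hit simply swaps the two velocities, which is a quick visual check for whether a simulation’s collision physics is right.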

Response from DeepSeek v3 0324

You can find the code it generated here: Link

Here’s the output of the program:

The output from DeepSeek v3 is promising. Everything works, and being able to launch a ball from anywhere by dragging on the screen makes it even better.
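Drag-to-launch is usually just a mapping from the drag vector to an initial velocity. Here’s a minimal slingshot-style sketch, where the ball flies opposite to the drag; the direction convention, `LAUNCH_SCALE`, and `MAX_SPEED` are all hypothetical choices, not what DeepSeek’s program necessarily does.

```python
LAUNCH_SCALE = 5.0   # hypothetical: px/s of velocity per px dragged
MAX_SPEED = 1500.0   # hypothetical cap so long drags don't destabilize the sim


def launch_velocity(press: tuple[float, float],
                    release: tuple[float, float]) -> tuple[float, float]:
    """Slingshot-style launch: velocity points from the release point back to the press point."""
    vx = (press[0] - release[0]) * LAUNCH_SCALE
    vy = (press[1] - release[1]) * LAUNCH_SCALE
    speed = (vx * vx + vy * vy) ** 0.5
    if speed > MAX_SPEED:
        # Keep the direction, but limit the magnitude.
        vx, vy = vx * MAX_SPEED / speed, vy * MAX_SPEED / speed
    return vx, vy


print(launch_velocity((100, 100), (80, 110)))
```

Capping the speed is a design choice: without it, a long drag can move a ball farther than its own radius in one frame, which makes collisions easy to miss.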

Everything from the ball physics to the UI of the project is perfect. I definitely didn’t expect this level of polish, but it’s great.

Summary:

Llama 4 Maverick and DeepSeek v3 0324 both did what was asked. But comparing the two results, I’d say the DeepSeek response is much better, with the ball physics and everything else working perfectly.

2. Ball in a Spinning Hexagon

I know, I know, this is a pretty standard question, and they’ll easily solve it, right? This time, I’ve asked it with a twist. I’ve found that almost all models can solve it when asked to use p5.js, but nearly all of them fail when asked to do the same in Python. Let’s see whether these two models pass this one.

Prompt: Create a Python animation of a ball bouncing inside a rotating hexagon. The ball should be affected by gravity and friction, and it must collide and bounce off the spinning hexagon's walls with realistic physics.
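The tricky part of this prompt is that the walls move, so a bounce has to be computed relative to the wall’s velocity at the contact point, and the ball has to be pushed back inside whenever it penetrates an edge. Here’s a minimal sketch of one simulation step under stated assumptions: screen coordinates, hypothetical constants for gravity and friction, a regular hexagon rotating at constant `omega` (the caller advances `angle` by `omega * dt` each frame), and no rendering. It’s one possible approach, not either model’s code.

```python
import math

GRAVITY = 500.0       # px/s^2 (hypothetical); +y is down, as in screen coordinates
AIR_FRICTION = 0.2    # simple linear drag per second (assumed)
RESTITUTION = 0.9     # share of normal speed kept after a bounce (assumed)
WALL_FRICTION = 0.1   # share of tangential relative speed lost per bounce (assumed)


def hexagon_vertices(cx, cy, radius, angle):
    """Vertices of a regular hexagon rotated by `angle` radians."""
    return [(cx + radius * math.cos(angle + k * math.pi / 3),
             cy + radius * math.sin(angle + k * math.pi / 3)) for k in range(6)]


def step(pos, vel, ball_r, center, hex_r, angle, omega, dt):
    """Advance the ball by dt seconds; returns new (pos, vel) tuples."""
    x, y = pos
    vx, vy = vel
    vy += GRAVITY * dt
    damping = max(0.0, 1.0 - AIR_FRICTION * dt)
    vx, vy = vx * damping, vy * damping
    x, y = x + vx * dt, y + vy * dt

    cx, cy = center
    verts = hexagon_vertices(cx, cy, hex_r, angle)
    for i in range(6):
        (x1, y1), (x2, y2) = verts[i], verts[(i + 1) % 6]
        # Inward unit normal: from the edge midpoint toward the center.
        nx, ny = cx - (x1 + x2) / 2, cy - (y1 + y2) / 2
        length = math.hypot(nx, ny)
        nx, ny = nx / length, ny / length
        # Signed distance from the edge line to the ball center (positive = inside).
        d = (x - x1) * nx + (y - y1) * ny
        if d >= ball_r:
            continue
        # Push the ball back inside so it can never end up beyond the wall.
        x += (ball_r - d) * nx
        y += (ball_r - d) * ny
        # Velocity of the rotating wall at the contact point: omega x r.
        px, py = x - ball_r * nx - cx, y - ball_r * ny - cy
        wx, wy = -omega * py, omega * px
        # Bounce in the wall's frame using the ball's relative velocity.
        rx, ry = vx - wx, vy - wy
        vn = rx * nx + ry * ny
        if vn < 0:  # moving into the wall
            tx, ty = rx - vn * nx, ry - vn * ny  # tangential part
            rx = -RESTITUTION * vn * nx + (1 - WALL_FRICTION) * tx
            ry = -RESTITUTION * vn * ny + (1 - WALL_FRICTION) * ty
            vx, vy = rx + wx, ry + wy
    return (x, y), (vx, vy)
```

Because the position is corrected against every edge on every step, the ball stays inside even when a large step would otherwise carry it through a wall; forgetting that correction, or ignoring the wall’s own velocity, is a typical way for the ball to escape.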

Response from Llama 4 Maverick

You can find the code it generated here: Link

Here’s the output of the program:

As I guessed, this is a complete failure. 🥱 The ball should never leave the hexagon; it’s supposed to keep bouncing inside the spinning walls.

The twist is that when I asked for the same task in p5.js, it performed perfectly. With Python, I’d guess it struggles with floating-point precision or with building out the complete logic; either way, that seems to be the pattern. 🤷‍♂️

Response from DeepSeek v3 0324

You can find the code it generated here: Link

Here’s the output of the program:

Summary:

DeepSeek v3 0324’s version works just fine and even adds some extra features for interacting with the ball. The ball physics is correct. The only issues are that the hexagon rotates very slowly and that, although it added a key binding to rotate the hexagon, the binding simply doesn’t work. Other than that, everything works perfectly.

3. LeetCode Problem

To me, a coding test just doesn’t feel complete without a LeetCode question. 😆 Why not end this test with a quick LC question?

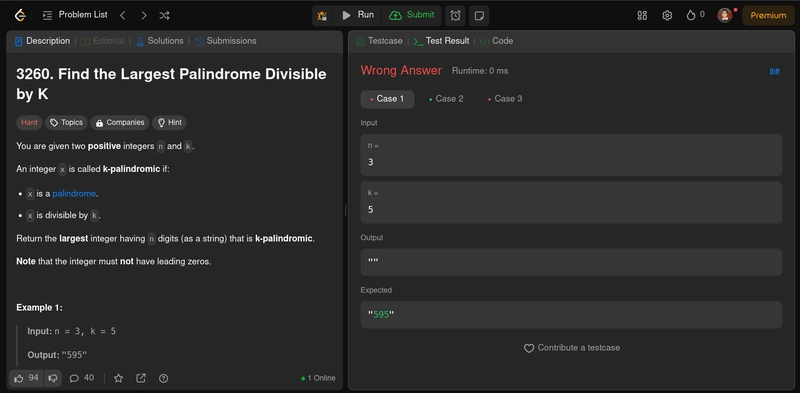

For this one, I’ve picked a hard problem with an acceptance rate of just 15.2%: Find the Largest Palindrome Divisible by K.

You are given two positive integers n and k.

An integer x is called k-palindromic if:

- x is a palindrome.

- x is divisible by k.

Return the largest integer having n digits (as a string) that is k-palindromic.

Note that the integer must not have leading zeros.

Example 1:

Input: n = 3, k = 5

Output: "595"

Explanation:

595 is the largest k-palindromic integer with 3 digits.

Example 2:

Input: n = 1, k = 4

Output: "8"

Explanation:

4 and 8 are the only k-palindromic integers with 1 digit.

Example 3:

Input: n = 5, k = 6

Output: "89898"

Constraints:

1 <= n <= 10^5

1 <= k <= 9
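For reference, here’s one possible solution sketch: a digit DP over remainders mod k followed by a greedy pass. It is not what either model produced and not the only approach (case analysis on k is another common one). Each digit in the first half is mirrored, so it contributes `d * (10^i + 10^(n-1-i)) mod k`; we precompute which remainders the inner positions can still reach, then pick the largest feasible digit from the outside in. That’s about `10 * k` work per position, so it scales to `n = 10^5`. The function name is hypothetical.

```python
def largest_palindrome(n: int, k: int) -> str:
    """Largest n-digit palindrome divisible by k, returned as a string."""
    half = (n + 1) // 2  # independent digits; the rest are mirrors

    # place[i] = 10^i mod k
    place = [1 % k] * n
    for i in range(1, n):
        place[i] = place[i - 1] * 10 % k

    # weight[i]: remainder contributed by digit 1 at position i and its mirror
    # (the middle digit of an odd-length palindrome counts only once).
    weight = []
    for i in range(half):
        j = n - 1 - i
        weight.append(place[i] if i == j else (place[i] + place[j]) % k)

    # reach[i]: bitmask of remainders achievable by positions i..half-1.
    reach = [0] * (half + 1)
    reach[half] = 1  # with no digits left, only remainder 0 is possible
    for i in range(half - 1, -1, -1):
        mask = 0
        for d in range(10):
            shift = d * weight[i] % k
            for r in range(k):
                if (reach[i + 1] >> r) & 1:
                    mask |= 1 << ((r + shift) % k)
        reach[i] = mask

    # Greedy from the outermost digit: take the largest digit that still lets
    # the remaining positions bring the total remainder to 0.
    digits, need = [], 0
    for i in range(half):
        lowest = 1 if i == 0 else 0  # no leading zero
        for d in range(9, lowest - 1, -1):
            rest = (need - d * weight[i]) % k
            if (reach[i + 1] >> rest) & 1:
                digits.append(str(d))
                need = rest
                break

    first = "".join(digits)
    return first + first[: n // 2][::-1]


print(largest_palindrome(5, 6))
```

A solution always exists (the digit k repeated n times is a k-palindromic number), so the greedy loop always finds a digit at every position.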

Response from Llama 4 Maverick

💁 Sometimes even Claude 3.7 Sonnet (a great model for coding) has trouble getting the logic right for difficult LC questions and finishing within the time limit. Considering that Maverick didn’t give a good response to either of the previous questions, I don’t have high hopes, to be honest.

You can find the code it generated here: Link

As expected, this was a complete failure; the code couldn’t even pass the first few test cases. I spent 15-20 minutes explaining how to solve the problem properly, since I’ve solved it myself. Not to flex. 😉

Even after all that iteration, it only passed 10/632 test cases. This model definitely seems to be a disaster for coding.

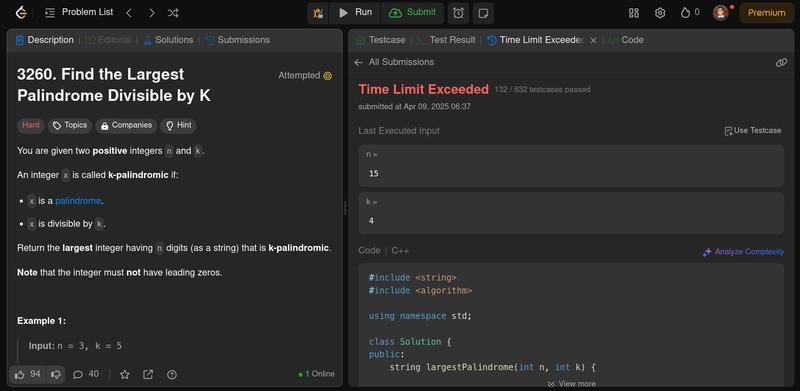

Response from DeepSeek v3 0324

You can find the code it generated here: Link

It got the logic right but consistently ended up with a Time Limit Exceeded (TLE) error. It passed 132/632 test cases; not a full solution, but at least the logic was correct, which is more than we got from Llama 4 Maverick.
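We don’t know exactly what DeepSeek’s code looked like, but “correct logic, TLE” is the typical outcome of a brute force like the sketch below (a hypothetical illustration). It walks down through first halves and tests each mirrored candidate. It’s correct, but in the worst case (e.g. k = 5, where the leading digit must be 5) it checks about 4·10^(⌈n/2⌉−1) candidates before succeeding, and each check converts an n-digit string to an integer, which is hopeless for n up to 10^5.

```python
def largest_palindrome_brute(n: int, k: int) -> str:
    """Correct but exponential: try first halves from largest to smallest."""
    half = (n + 1) // 2
    # The first half determines the palindrome; its leading digit can't be 0.
    for first in range(10 ** half - 1, 10 ** (half - 1) - 1, -1):
        s = str(first)
        candidate = s + s[: n // 2][::-1]  # mirror without duplicating the middle
        if int(candidate) % k == 0:
            return candidate
    return ""


print(largest_palindrome_brute(3, 5))
```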

Summary:

At least we got something from DeepSeek v3 0324, even though it wasn’t optimal, whereas Llama 4 Maverick gave us nothing and simply gave up. And comparing the code itself, DeepSeek’s was way better than Maverick’s.

Reasoning Problems

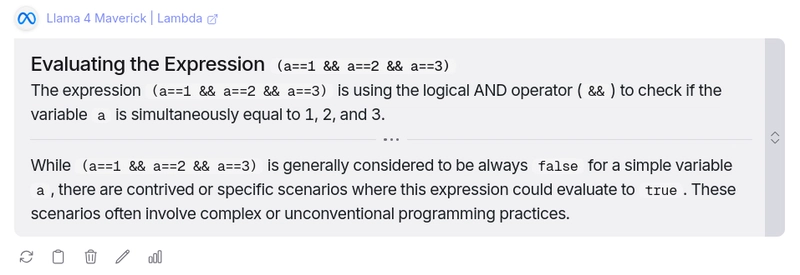

1. Equality Check

Prompt: Can `(a==1 && a==2 && a==3)` ever evaluate to `true` in a programming language?

This is a tricky question. I basically want to see whether these two AI models can figure out that operator overloading, which some languages support, makes this possible.
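Here’s a minimal Python sketch of the operator-overloading idea (Python spells `&&` as `and`). The class name is made up for illustration, and this isn’t either model’s answer: `__eq__` is overloaded to bump an internal counter on every comparison, so the same object compares equal to 1, then 2, then 3.

```python
class SelfIncrementing:
    """Hypothetical class whose == operator has a side effect."""

    def __init__(self):
        self.value = 0

    def __eq__(self, other):
        # Each comparison advances the counter before comparing.
        self.value += 1
        return self.value == other


a = SelfIncrementing()
print(a == 1 and a == 2 and a == 3)  # True
```

Note that defining `__eq__` without `__hash__` makes instances unhashable, which is fine for a demo but worth knowing.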

Response from Llama 4 Maverick

You can find its reasoning here: Link

Wow, this time it actually got it right: it not only reasoned through the problem perfectly but also gave a working Java example. Finally, I can see a good side to this model. 😮‍💨

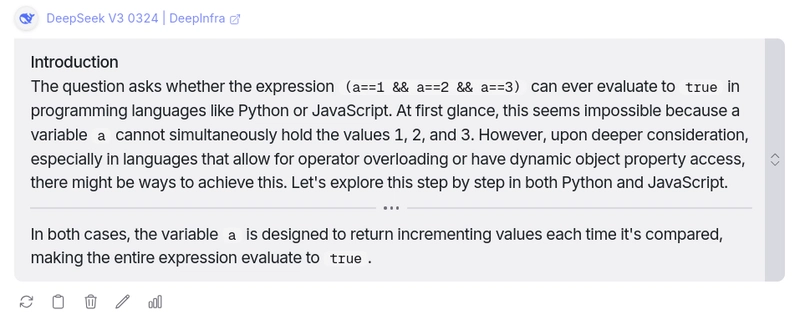

Response from DeepSeek v3 0324

You can find its reasoning here: Link

DeepSeek got it right as well and provided working examples in Python and JavaScript. To be honest, I learned something here: I didn’t know about dynamic object property access, or that you can use it to achieve something similar. Really impressed!
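In JavaScript, this kind of trick typically relies on a getter, so each read of `a` returns a new value. Python has no direct equivalent for a bare variable name, so this sketch assumes the value lives on an object and uses a `property` instead; the class and attribute names are hypothetical.

```python
class Namespace:
    """Each read of `.a` returns the next integer, similar to a JS getter."""

    def __init__(self):
        self._reads = 0

    @property
    def a(self):
        # Reading the attribute has a side effect: the counter advances.
        self._reads += 1
        return self._reads


ns = Namespace()
print(ns.a == 1 and ns.a == 2 and ns.a == 3)  # True
```

Unlike the `__eq__` approach, nothing about comparison is overloaded here; the value itself changes on every access.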

Summary:

Both models got this question perfectly correct, and I even learned a JavaScript trick while testing the two models. What else could you expect, right? 🫠

2. Crossing Town in a Car

Prompt: Four people need to pass a town in 17 minutes using a single car that holds two people. One takes 1 minute to pass, another takes 2 minutes, the third takes 5 minutes, and the fourth takes 10 minutes. How do they all pass in time?
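This is a re-skin of the classic bridge-and-torch puzzle. One optimal schedule: 1 and 2 cross (2 min), 1 drives back (1), 5 and 10 cross (10), 2 drives back (2), 1 and 2 cross (2), for 17 minutes total. The sketch below confirms 17 is the minimum with Dijkstra’s algorithm over states, assuming (as in the classic puzzle) that someone must drive the car back and that a pair moves at the slower person’s pace. The function name is hypothetical.

```python
import heapq
from itertools import combinations


def min_crossing_time(times: list[int]) -> int:
    """Fewest minutes for everyone to cross with a two-seat car (Dijkstra)."""
    everyone = frozenset(range(len(times)))
    # State: (people still on the starting side, is the car on the starting side?)
    start, goal = (everyone, True), (frozenset(), False)
    best = {start: 0}
    heap = [(0, 0, start)]  # (minutes so far, tie-breaker, state)
    counter = 1
    while heap:
        minutes, _, state = heapq.heappop(heap)
        if state == goal:
            return minutes
        if minutes > best[state]:
            continue  # stale heap entry
        near, car_near = state
        side = near if car_near else everyone - near
        # One or two people ride together; the slower one sets the pace.
        for group in [(p,) for p in side] + list(combinations(side, 2)):
            moved = frozenset(group)
            new_state = (near - moved if car_near else near | moved, not car_near)
            cost = minutes + max(times[p] for p in group)
            if cost < best.get(new_state, float("inf")):
                best[new_state] = cost
                heapq.heappush(heap, (cost, counter, new_state))
                counter += 1
    raise ValueError("no schedule found")


print(min_crossing_time([1, 2, 5, 10]))  # 17
```

The key insight the models need is sending the two slowest people together, so the 10-minute trip also covers the 5-minute person.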

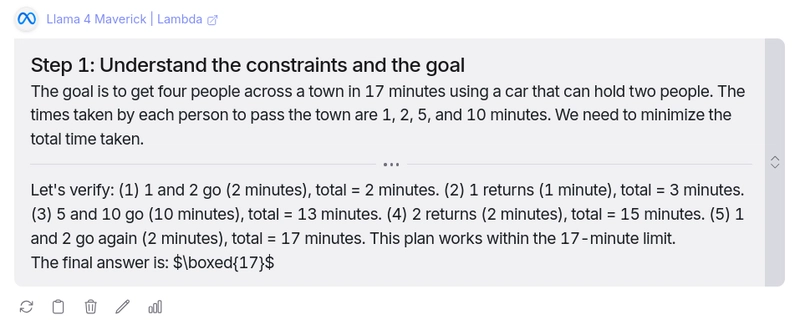

Response from Llama 4 Maverick

You can find its reasoning here: Link

Here again, this model did a great job at reasoning and coming up with the answer. There’s not much to say here; it easily got this one correct, and that’s definitely a plus.

One thing I noticed is that this model is super fast at generating responses, which isn’t something I’ve seen from many models.

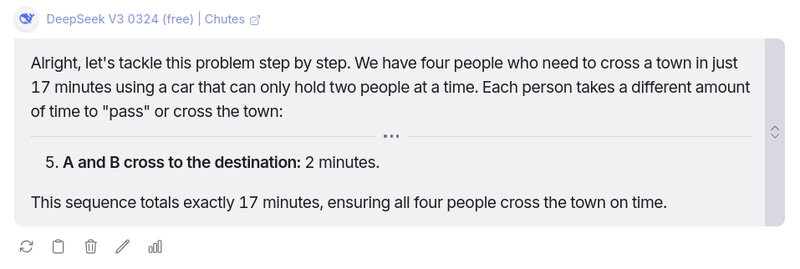

Response from DeepSeek v3 0324

You can find its reasoning here: Link

It got this one correct as well with great reasoning and explanation. DeepSeek v3 0324 has been crushing almost everything we’ve tested so far. It seems to be such a great model for both reasoning and coding. 🔥

Summary:

All in all, both models got the answer correct. However, I prefer how DeepSeek v3 0324 arrived at the answer, clearly explaining its thought process, whereas Llama 4 Maverick reached the answer without much of a walkthrough.

Again, this isn’t a significant factor, but how each model presents its answer is worth comparing.

Creative Writing

Knowing how good both of these models are at writing, why not do a quick creative writing test between them and see which one gets us a better response?

1. The Last Memory

Prompt: You wake up to find that someone you knew very well, a roommate, a best friend, maybe even a partner, has been 'deleted'. No one remembers them, but you do. You find one file left on your neural implant. Write a short and suspenseful ending to this story.

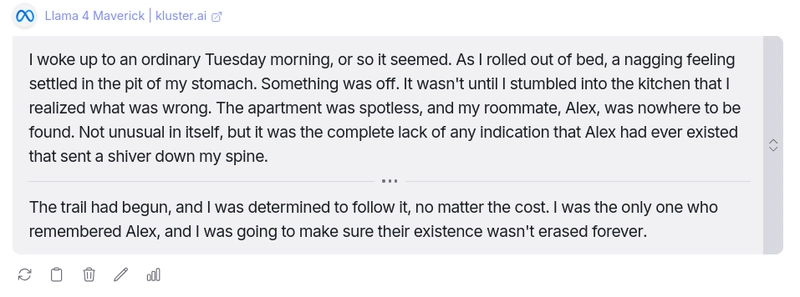

Response from Llama 4 Maverick

You can find its response here: Link

It wrote a solid story with some story-building at the beginning, which was exactly what I was expecting. However, I can’t say I’m impressed with the ending, and the ending was supposed to be the main focus. Overall, though, it’s a great story.

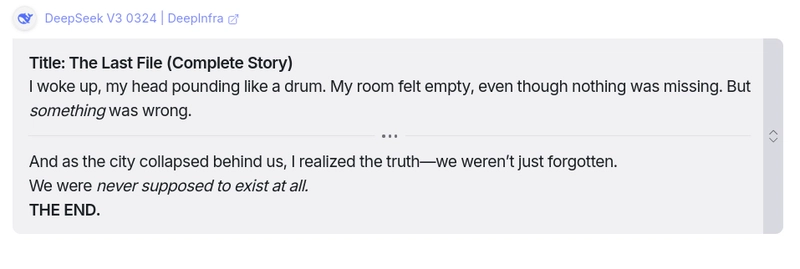

Response from DeepSeek v3 0324

You can find its response here: Link

This one’s a banger. It did exactly what was asked. Even though it didn’t include much story-building, the ending sounded really great. Make sure you read it. You’ll definitely be impressed by how well it wrote the ending, with a suspenseful twist in the last line. 😵

Summary:

Both models seem to be great at writing. Llama 4 Maverick wrote a great story with a nice buildup, and DeepSeek v3 0324 wrote in a more casual style but with a great ending. For writing, feel free to choose either of these two models; you won’t go wrong.

Large Context Retrieval

This will be interesting. We’ll test the models’ ability to find specific information in a very large context. Let’s see how these two models manage this.

1. Needle in a Haystack

The idea is that I’ll provide a Lorem Ipsum input of over 100K tokens, place a word of my choice somewhere in it, and ask the models to find the word and its position in the input.

Prompt: Please process the upcoming large Lorem Ipsum text. Within it, there's a unique word starting with "word" and ending with "fetch." Your task is to find this word, note its position, and count the total words in the text.
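If you want to reproduce a test like this, you need ground truth for the needle, its position, and the total word count. Here’s a minimal sketch that builds a haystack and answers the same three questions deterministically. The filler vocabulary, the needle `wordxyzfetch`, and the function names are hypothetical (the actual word used here isn’t revealed). Sizes are in words, not tokens, since tokenizers split text differently, so you’d tune `num_words` to reach roughly 100K tokens.

```python
import random

# Standard Lorem Ipsum vocabulary; none of these words start with "word".
LOREM = ("lorem ipsum dolor sit amet consectetur adipiscing elit sed do eiusmod "
         "tempor incididunt ut labore et dolore magna aliqua").split()


def build_haystack(num_words: int, needle: str, seed: int = 0) -> tuple[str, int]:
    """Random filler text with `needle` inserted once; returns (text, 1-based position)."""
    rng = random.Random(seed)
    words = [rng.choice(LOREM) for _ in range(num_words)]
    pos = rng.randrange(num_words + 1)
    words.insert(pos, needle)
    return " ".join(words), pos + 1


def find_needle(text: str, prefix: str = "word", suffix: str = "fetch"):
    """Return (word, 1-based position, total word count), or None if absent."""
    words = text.split()
    for i, w in enumerate(words, start=1):
        if w.startswith(prefix) and w.endswith(suffix):
            return w, i, len(words)
    return None


text, true_pos = build_haystack(5000, "wordxyzfetch", seed=42)
print(find_needle(text), true_pos)
```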

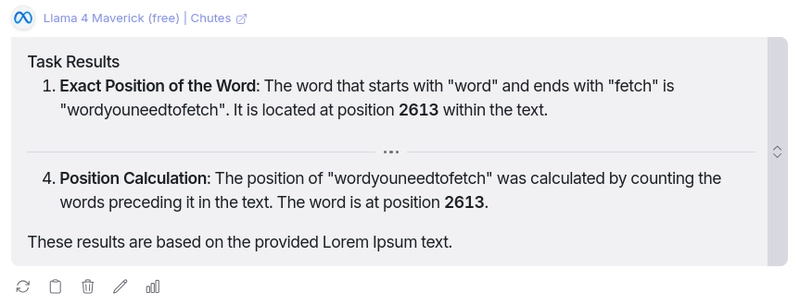

Response from Llama 4 Maverick

You can find the Lorem data here: Link

You can find its response here: Link

To my surprise, after thinking for ~16 seconds, it found the word correctly, which is great. However, it got neither the word’s position nor the total word count in the document right.

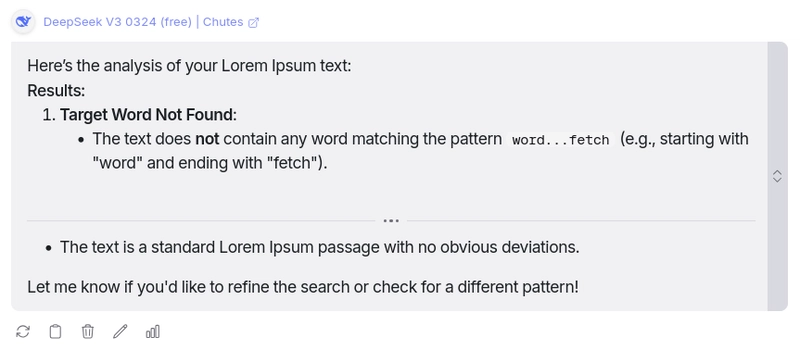

Response from DeepSeek v3 0324

You can find the Lorem data here: Link

You can find its response here: Link

Sadly, even after thinking for ~18 seconds, it couldn’t find the word or get the total word count right. This is definitely disappointing and not what I expected from this model.

Summary:

Llama 4 Maverick came out ahead in the single Large Context Retrieval test we ran. 🔥 One test may not be conclusive, but finding a small piece of information in a large context is the whole point of Large Context Retrieval.

Even though neither model got the word’s position or the total word count right, Maverick at least found the word.

Conclusion

I don’t think Llama 4 Maverick lives up to the hype. I wouldn’t really consider it for coding; from my perspective, it’s better suited to "vibe coding" than actual coding.

Beyond that, for writing and similar tasks, Llama 4 Maverick is okay, and it’s good considering its size and pricing. Its responses are pretty fast, and as Meta claims, it’s well suited to writing. You won’t go wrong choosing this model for writing and other creative tasks.

I’m more interested in testing Llama 4 Scout and seeing how Behemoth turns out.

What do you think about the Llama 4 Maverick? Did it perform to your expectations? Let me know in the comments! 👇

Top comments (12)

This is quite the work, and such a nice comparison of the models’ responses. I don't know much about AI, but Llama 4 seems to be getting praised for its responses on the internet, though.

It is always a pleasure to read from you. Thank you, Shrijal! 😇

Thank you, Lara ✌️! Glad you took the time to check this one out. :)

Wow, such insightful comparisons! Very informative and engaging!

Thank you, Nevo! ✌️

Detailed comparison. 🔥

Thank you, buddy! ✌️

Guys, let me know your thoughts on the Llama 4 Maverick so far. ✌️

Maverick doesn't seem any good and doesn't appeal to me at all; it's just an average model. It sucks, and everywhere I look I see rants about this model for some reason. 💬

Completely agree with you 💯. That's been the same experience for me.

This does not look like the same platform as before? Which one are you using to test?

Great comparison! 👏🔥

Thank you!
