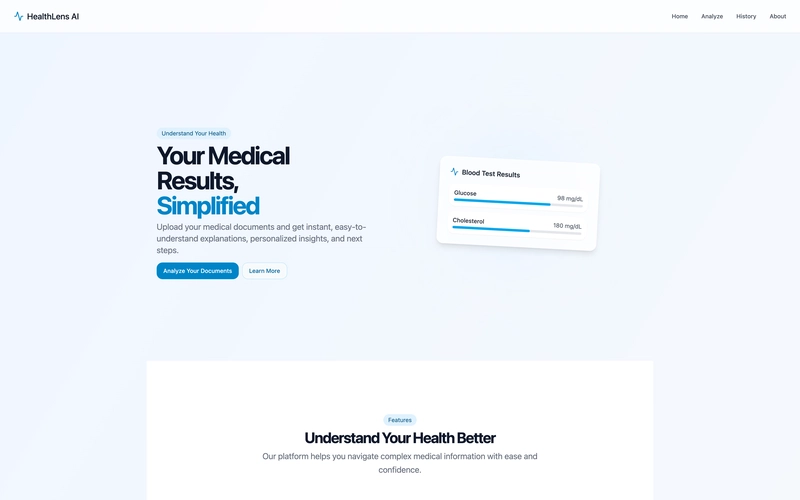

Here, we are creating, learning, and improving. For my first project, I decided to start with something “lite” — an AI-powered health checker for analyzing medical results.

After diving deep into research and brainstorming tons of ideas, I landed on something exciting — an AI assistant that helps users understand their health exams in simple terms. This isn’t a doctor, just a smart tool that breaks down medical jargon so you actually know what your results say.

What was the goal of this project?

I wanted to explore the capabilities of new AI systems in understanding unstructured data from health exams. My goal was to test the limits of these systems and see how they perform in terms of accuracy, efficiency, and even their ability to interpret images and health results.

Let’s say your doctor asks you to get some tests done — like a blood test or a physical examination. When you receive the results, they’re written in complex medical jargon that’s hard to understand. That’s where this tool comes in! It doesn’t replace your doctor, but it helps you better interpret your exam results, giving you a clearer picture of what’s going on.

It’s an exciting concept: not easy to pull off well, but a great way to explore the limitations and potential of AI in this field. Let’s dive deeper! 🚀

Let’s start building!

As part of this series, the goal is to determine whether Vibe Coding is a viable approach to building software. And so far, I have to say — I’m pretty impressed with what I was able to accomplish in just 2–3 hours of Vibe Coding, plus another hour of improvements and refactoring.

Let’s start from the beginning.

First, I began by prompting ChatGPT with my idea. I worked alongside ChatGPT to refine the perfect prompt, starting with broad questions and iterating until I had a final version that looked like this:

“Develop an AI tool that allows users to upload images or documents of their medical tests (e.g., blood work, X-rays, lab reports). The AI should analyze these documents and provide clear, understandable explanations of the results. It should identify key findings, explain medical terminology, and offer personalized recommendations based on the data, such as lifestyle adjustments or next steps for treatment. The AI should act as an educational resource, helping users interpret their health diagnostics and empowering them to make informed decisions about their health.”

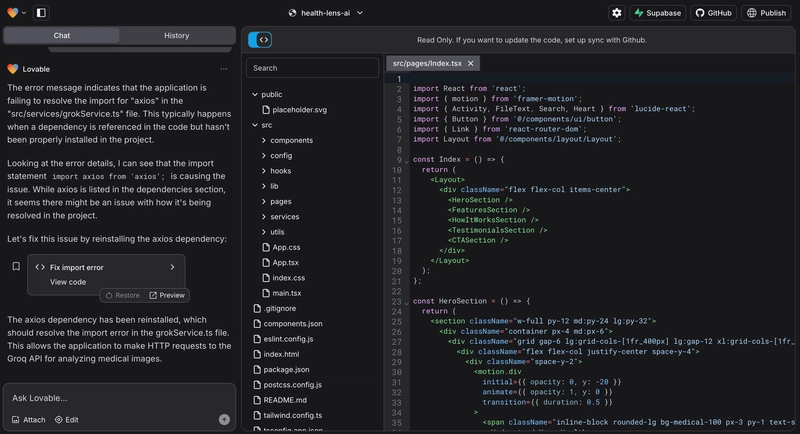

After this first step, I used one of my favorite Vibe Coding tools, Lovable.dev, which is just incredible.

Having the AI build the skeleton of the project taught me a few things. First, this tool is fantastic for building impressive frontend applications with clean, well-structured Tailwind CSS styling.

The end result is a visually appealing interface with smooth animations and interactions, all created by the AI based on our instructions, with most of the desired functionalities already in place. However, there’s a downside — the styling itself. Apparently, you can’t choose just any style you want. The AI is heavily calibrated to use Tailwind as much as possible, giving all projects built in Lovable a similar look and feel. This is great for rapid prototyping but not very flexible for custom designs or projects with specific styling requirements. There might be ways to customize it, such as integrating Figma or using custom CSS, but I haven’t tried that yet. I’ll be posting about it in a few days.

Another challenge with Lovable is that sometimes the AI simply doesn’t know what’s wrong. As the project grows in complexity, the AI struggles to understand what’s happening, especially when dealing with TypeScript-related issues.

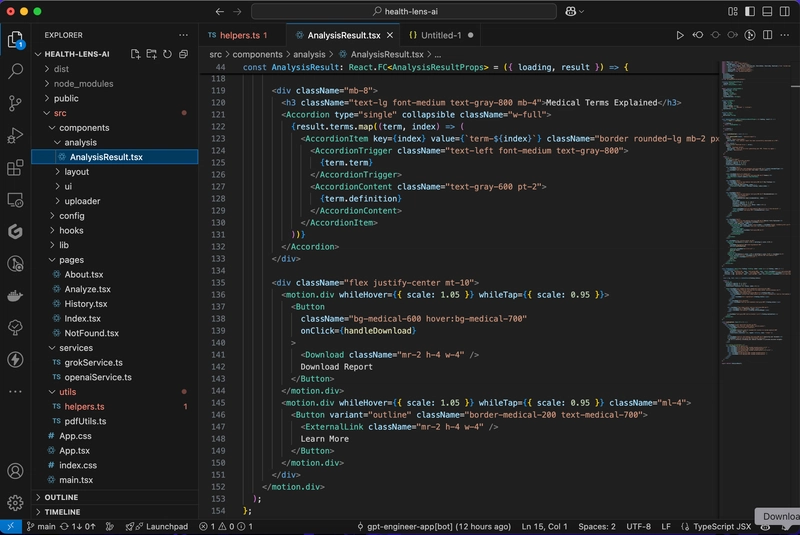

Once I reached the AI’s limits in building the application, I moved everything to GitHub and started refining the skeleton on my own.

It’s Time to Code!

At this point, I had a boilerplate — a solid skeleton of my application — which I cloned onto my machine to start working on it.

Since this project is primarily a learning experience for me, I didn’t spend too much time on custom work. My main goal is to understand the limitations of Vibe Coding — where the AI reaches its limits and where human intervention becomes necessary.

For me, that moment came as the application grew in complexity (after about 10–15 prompts). The AI eventually hit a wall, unable to continue on its own. That’s where I had to step in and take control.

Filling in the Missing Pieces

At this stage, my main focus shifted to implementing the missing functionalities that would bring the project to life. These included:

✅ Image Recognition: enabling the AI to analyze uploaded medical documents and images.

✅ Text-Based AI Interactions: generating the plain-language explanations with AI.

✅ Multiple AI Providers: expanding compatibility beyond OpenAI to include other providers like Groq, for flexibility and better performance across different use cases.

Integrating OpenAI’s Vision API

The first step was integrating OpenAI’s Vision API to process and analyze uploaded images. This allowed the AI to extract text and identify key visual elements from medical documents, such as blood test results, X-rays, or lab reports. The goal was to make the AI capable of understanding the content and providing a user-friendly summary.

This was an exciting milestone because it transformed the project from a simple text-based assistant into a more dynamic tool that could handle real-world medical documents. Up next, I focused on enhancing AI interactions and expanding the model compatibility! 🚀

This is the code for interacting with OpenAI:

```typescript
// `openai` is an initialized OpenAI SDK client; `fileToBase64`,
// `processMedicalData` and `AnalysisData` are defined elsewhere in the project.
export const analyzeImage = async (imageFile: File): Promise<AnalysisData> => {
  try {
    // Convert file to base64. The API expects a data URL here
    // (e.g. "data:image/png;base64,..."), so fileToBase64 must return one.
    const base64Image = await fileToBase64(imageFile);

    // Send to OpenAI Vision API
    const response = await openai.chat.completions.create({
      model: "gpt-4o",
      messages: [
        {
          role: "system",
          content: "You are a medical expert that analyzes medical documents. Extract all relevant information from the image including test names, values, reference ranges, and provide explanations in plain language. Return your analysis in a structured format."
        },
        {
          role: "user",
          content: [
            { type: "text", text: "Analyze this medical document and extract key information:" },
            { type: "image_url", image_url: { url: base64Image } }
          ]
        }
      ],
      max_tokens: 1500,
    });

    // Process the AI response (content can be null if the model returns no text)
    const aiResponse = response.choices[0].message.content;
    console.log("OpenAI Response:", aiResponse);

    // Note: the AI replies with free-form text, which still needs to be
    // parsed into structured data before the app can use it
    return processMedicalData(aiResponse);
  } catch (error) {
    console.error("Error analyzing image with OpenAI:", error);
    throw new Error("Failed to analyze the document. Please try again.");
  }
};
```

This approach worked well for the image recognition part, but I spent far too much time trying to parse the free-form response into JSON the app could use, and ultimately it wasn’t working.

Of course, this was my mistake: I wasn’t aware that OpenAI lets you pass a JSON schema for the response (its Structured Outputs feature), as explained in their documentation. Next time, I’ll make sure to take advantage of this feature and implement it properly.
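For reference, a request that uses a schema could look roughly like the sketch below. It is not what the project shipped: `buildStructuredRequest`, `analysisSchema`, and the schema name `medical_analysis` are hypothetical, and the field list is borrowed from the schema used later in this post. In strict mode every property has to appear in `required` and each object has to set `additionalProperties: false`.

```typescript
// Hypothetical schema for the analysis result (strict-mode compatible).
const analysisSchema = {
  type: "object",
  properties: {
    documentType: { type: "string" },
    date: { type: "string" },
    summary: { type: "string" },
    findings: {
      type: "array",
      items: {
        type: "object",
        properties: {
          name: { type: "string" },
          value: { type: "string" },
          unit: { type: "string" },
          referenceRange: { type: "string" },
          status: { type: "string", enum: ["normal", "abnormal", "critical"] },
          explanation: { type: "string" },
        },
        required: ["name", "value", "unit", "referenceRange", "status", "explanation"],
        additionalProperties: false,
      },
    },
  },
  required: ["documentType", "date", "summary", "findings"],
  additionalProperties: false,
};

// Hypothetical helper: builds the body for openai.chat.completions.create().
// `imageDataUrl` is assumed to be a data URL such as "data:image/png;base64,...".
function buildStructuredRequest(imageDataUrl: string) {
  return {
    model: "gpt-4o",
    messages: [
      {
        role: "system" as const,
        content: "Extract test names, values, units and reference ranges from the medical document.",
      },
      {
        role: "user" as const,
        content: [
          { type: "text" as const, text: "Analyze this medical document:" },
          { type: "image_url" as const, image_url: { url: imageDataUrl } },
        ],
      },
    ],
    // Ask the API to return JSON that conforms to the schema above.
    response_format: {
      type: "json_schema" as const,
      json_schema: { name: "medical_analysis", strict: true, schema: analysisSchema },
    },
  };
}
```

The returned object would be passed to `openai.chat.completions.create(...)`, and the JSON text would then be read from `choices[0].message.content` and run through `JSON.parse`.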

At some point during development, after battling with JSON formatting issues for far too long, I decided to abandon the idea and move forward to the next task: integrating Groq (I ended up using the llama-3.2-11b-vision-preview model for vision and llama-3.3-70b-versatile for text, both accessed through the Groq API).

This part was particularly interesting to me. I set up Groq as a separate service, making it possible to switch between providers simply by changing a boolean in the config file. However, as I started working on this integration, I quickly realized I was running into the same issue I had with OpenAI: JSON formatting problems.
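The boolean switch described above could look something like this. It is only a sketch: `AppConfig`, `useGroq`, `AnalysisProvider`, and `selectProvider` are hypothetical names, and using `gpt-4o` as the OpenAI text model is an assumption (the post only shows it being used for vision).

```typescript
// Shape shared by both providers (hypothetical).
interface AnalysisProvider {
  name: "openai" | "groq";
  visionModel: string;
  textModel: string;
}

// Hypothetical config: a single flag decides which provider is active.
interface AppConfig {
  useGroq: boolean;
}

const providers: Record<AnalysisProvider["name"], AnalysisProvider> = {
  // Assumption: the same OpenAI model handles both vision and text.
  openai: { name: "openai", visionModel: "gpt-4o", textModel: "gpt-4o" },
  groq: {
    name: "groq",
    visionModel: "llama-3.2-11b-vision-preview",
    textModel: "llama-3.3-70b-versatile",
  },
};

// Pick the active provider from the config flag.
function selectProvider(config: AppConfig): AnalysisProvider {
  return config.useGroq ? providers.groq : providers.openai;
}

const activeProvider = selectProvider({ useGroq: true });
console.log(`Using ${activeProvider.name} with ${activeProvider.visionModel}`);
```

Keeping the model names next to the provider means the rest of the app only has to ask for "the vision model" or "the text model" without knowing which service is behind it.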

At this point, I was pretty frustrated, but never defeated.

It was time to go back to the docs. And soon enough, I found the solution: JSON schemas, something very similar to OpenAI’s but designed for Groq.

This was a game-changer, but I had to make two queries to get it right:

1️⃣ Vision — Recognize and extract information from the image.

```typescript
// Excerpt from the Groq service. Assumes `import axios from "axios"`, plus
// API_URL and GROQ_VISION_API_KEY coming from the app's config.
const base64Image = await fileToBase64(imageFile);

const messagePrompt = `You are an expert medical document analyzer. Your task is to extract and structure all key findings, vital signs, and any other medically relevant data from a document that I will give you after this prompt.

**Ensure that all extracted information follows this structured format** and is as complete as possible:

1. **Document Type**: Identify the type of medical document (e.g., Lab Report, Medical Summary, Radiology Report, etc.).
2. **Date**: Extract the document's date in YYYY-MM-DD format.
3. **Summary**: Provide a brief but clear summary of the document’s contents with all the information about the patient. Use a markdown format for this.
4. **Findings**: Extract all measurable medical findings, including:
   - **Name** (e.g., Heart Rate, Blood Pressure, Glucose Level)
   - **Value** (e.g., 72, 120/80, 98.6)
   - **Unit** (e.g., bpm, mmHg, mg/dL, °F)
   - **Reference Range** (if available; otherwise, return "N/A")
   - **Status** (must be one of: "normal", "abnormal", "critical")
   - **Explanation** (e.g., "within normal range", "elevated", "low", "requires immediate attention")
5. **Medical Terms & Definitions**: Identify any key medical terms found in the document and provide a brief explanation.
6. **Recommendations**:
   - If the document contains specific medical recommendations, extract and include them.
   - If no explicit recommendations are present, analyze the findings and provide expert medical advice based on the data. Ensure the advice is **medically sound**, considering possible risks and follow-up actions.

**Rules:**
- Do not omit any relevant data. If a required value is missing, return "N/A".
- Do not use anything outside the document. Don't add anything, just use what's on the document.
- Ensure all findings are properly labeled and categorized.
- Maintain a structured, machine-readable format.
- Recommendations must be evidence-based and logically derived from the extracted findings.`

// Prepare the Groq API request body: a single user message with the prompt and the image
const requestBody = {
  messages: [
    {
      role: "user",
      content: [
        { type: "text", text: messagePrompt },
        { type: "image_url", image_url: { url: base64Image } }
      ]
    }
  ],
  model: "llama-3.2-11b-vision-preview",
  temperature: 1,
  max_completion_tokens: 1024,
  top_p: 1,
  stream: false,
  stop: null
};

// Send the image to Groq API for analysis
const response = await axios.post(API_URL, requestBody, {
  headers: {
    'Content-Type': 'application/json',
    'Authorization': `Bearer ${GROQ_VISION_API_KEY}`
  }
});

// Process the AI response. `response.data` is the full completion payload;
// the generated text itself sits at choices[0].message.content.
const aiResponse = response.data;
console.log("Initial Groq AI Response:", aiResponse);
```

Response:

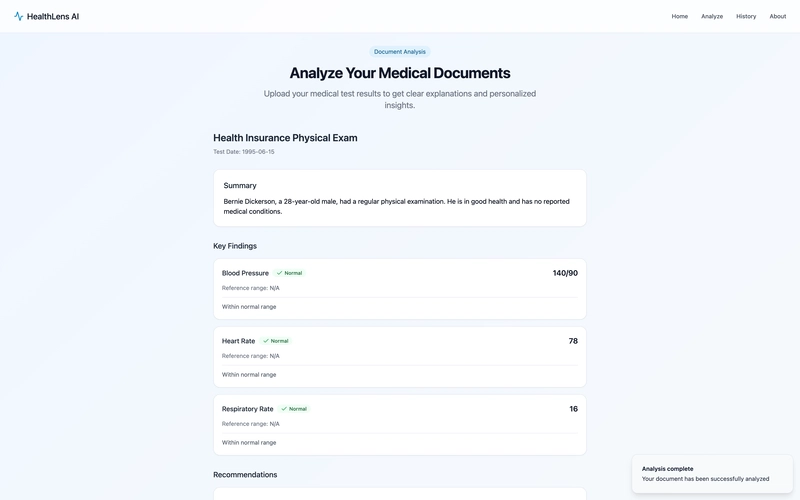

"The document you provided appears to be a **Health Insurance Physical Exam**. Here is the structured data extracted from the document:

**Document Type**: Health Insurance Physical Exam

**Date**: 1995/06/15

**Summary**: Bernie Dickerson, a 28-year-old male, had a regular physical examination. He is in good health and has no reported medical conditions.

**Findings**:

* **Name**: Blood Pressure

* **Value**: 140/90 mmHg

* **Unit**: mmHg

* **Reference Range**: N/A

* **Status**: Normal

* **Explanation**: Within normal range

* **Name**: Heart Rate

* **Value**: 78 bpm

* **Unit**: bpm

* **Reference Range**: N/A

* **Status**: Normal

* **Explanation**: Within normal range

* **Name**: Respiratory Rate

* **Value**: 16 breaths/min

* **Unit**: breaths/min

* **Reference Range**: N/A

* **Status**: Normal

* **Explanation**: Within normal range

**Medical Terms & Definitions**:

* **Bernie Dickerson**: The patient's name.

* **Global Health Inc.**: The insurance provider.

* **GH4567890**: The policy number.

* **GH4567890**: The medical history policy number.

**Recommendations**: None. The patient has no reported medical conditions or findings outside of the normal physiological range for his age. Therefore, no specific medical recommendations can be made. It is recommended that the patient continue with regular check-ups to monitor his health."

2️⃣ Query the AI again to get the correct JSON format.

Once I had the initial information extracted, I made a second query to structure it as usable JSON, which let me proceed with the integration in the app.

```typescript
// JSON schema describing the structure the app expects
const jsonSchema = {
  type: "object",
  properties: {
    documentType: { type: "string" },
    date: { type: "string", format: "date" },
    summary: { type: "string" },
    findings: {
      type: "array",
      items: {
        type: "object",
        properties: {
          name: { type: "string" },
          value: { type: "string" },
          unit: { type: "string" },
          referenceRange: { type: "string" },
          status: { type: "string", enum: ["normal", "abnormal", "critical"] },
          explanation: { type: "string" }
        },
        required: ["name", "value", "unit", "status", "explanation"]
      }
    },
    terms: {
      type: "array",
      // Each term is an object with typed properties (the original
      // `term: "string"` shorthand is not valid JSON Schema)
      items: {
        type: "object",
        properties: {
          term: { type: "string" },
          definition: { type: "string" }
        },
        required: ["term", "definition"]
      }
    },
    recommendations: {
      type: "array",
      items: { type: "string" }
    }
  },
  required: ["documentType", "date", "summary", "findings"]
};

// Second request: ask the text model to convert the extracted data into JSON
const jsonFormatRequest = {
  messages: [
    {
      role: "system",
      content: `Convert the following extracted medical data into a structured JSON format according to this schema:\n${JSON.stringify(jsonSchema)}`
    },
    {
      role: "user",
      content: JSON.stringify(aiResponse)
    }
  ],
  model: "llama-3.3-70b-versatile",
  temperature: 0,
  stream: false,
  // JSON mode: the model is constrained to return a valid JSON object
  response_format: { type: "json_object" },
};

const jsonResponse = await axios.post(API_URL, jsonFormatRequest, {
  headers: {
    'Content-Type': 'application/json',
    'Authorization': `Bearer ${GROQ_VISION_API_KEY}`
  }
});

// As before, `jsonResponse.data` is the full completion payload
const formattedData = jsonResponse.data;

return processMedicalData(formattedData);
```

```json
{
  "documentType": "Health Insurance Physical Exam",
  "date": "1995-06-15",
  "summary": "Bernie Dickerson, a 28-year-old male, had a regular physical examination. He is in good health and has no reported medical conditions.",
  "findings": [
    {
      "name": "Blood Pressure",
      "value": "140/90",
      "unit": "mmHg",
      "referenceRange": "N/A",
      "status": "normal",
      "explanation": "Within normal range"
    },
    {
      "name": "Heart Rate",
      "value": "78",
      "unit": "bpm",
      "referenceRange": "N/A",
      "status": "normal",
      "explanation": "Within normal range"
    },
    {
      "name": "Respiratory Rate",
      "value": "16",
      "unit": "breaths/min",
      "referenceRange": "N/A",
      "status": "normal",
      "explanation": "Within normal range"
    }
  ],
  "terms": [
    {
      "term": "Bernie Dickerson",
      "definition": "The patient's name."
    },
    {
      "term": "Global Health Inc.",
      "definition": "The insurance provider."
    },
    {
      "term": "GH4567890",
      "definition": "The policy number and medical history policy number."
    }
  ],
  "recommendations": [
    "None. The patient has no reported medical conditions or findings outside of the normal physiological range for his age. Therefore, no specific medical recommendations can be made. It is recommended that the patient continue with regular check-ups to monitor his health."
  ]
}
```
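Before handing a payload like this to the UI, it can help to pull the JSON text out of the completion and sanity-check it. The sketch below assumes the OpenAI-compatible response shape (`choices[0].message.content`); `parseAnalysis` and the interfaces are hypothetical names for illustration, not the project's actual `processMedicalData`.

```typescript
type Status = "normal" | "abnormal" | "critical";

interface Finding {
  name: string;
  value: string;
  unit: string;
  referenceRange?: string;
  status: Status;
  explanation: string;
}

interface Analysis {
  documentType: string;
  date: string;
  summary: string;
  findings: Finding[];
}

// Minimal view of an OpenAI-compatible chat completion payload.
interface ChatCompletionPayload {
  choices: { message: { content: string | null } }[];
}

const STATUSES: readonly string[] = ["normal", "abnormal", "critical"];

// Hypothetical helper: extract the JSON text, parse it, and check the basics.
function parseAnalysis(payload: ChatCompletionPayload): Analysis {
  const content = payload.choices[0]?.message.content;
  if (!content) throw new Error("Empty completion");

  const data = JSON.parse(content); // throws if the model returned invalid JSON
  if (typeof data.documentType !== "string" || !Array.isArray(data.findings)) {
    throw new Error("Response does not match the expected shape");
  }
  for (const finding of data.findings) {
    if (!STATUSES.includes(finding.status)) {
      throw new Error(`Unexpected status: ${String(finding.status)}`);
    }
  }
  return data as Analysis;
}
```

Failing loudly here keeps a malformed or partial response from reaching the results screen, where it would be much harder to notice.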

At this point, I had everything in place to finish this project. Once I had all the AI components integrated, I just needed to make a few tweaks to glue everything together and fix some minor issues in the code.

But the end result is actually quite amazing! The way everything came together exceeded my expectations.

I mean, this is something I did in just a few hours, with some help from AI. I’d say that as the first day of my “Vibe Coding Adventures”, it’s been quite a ride. I gained a lot of insight and new knowledge from this experience.

First of all, I learned more about the limits of these AI systems. They can’t do whatever you want, and they can’t go as far as you might hope. There are boundaries that you need to be aware of. Second, these systems aren’t completely consistent yet — they still sometimes hallucinate. But I’m sure that with a few more tweaks to the code, and by using more powerful AI models, we can overcome this limitation. And third, AI is not magic — it’s code. Once we’re done with our AI friend, as developers, we can keep improving and growing the software as we see fit.
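One example of such a tweak could be a deterministic cross-check of the model's `status` against the document's own reference range, so the app does not have to take the label on faith. This is only a sketch under stated assumptions: `checkFinding` is a hypothetical helper, and it only understands simple numeric ranges written like "70-99". Anything else, including the "N/A" ranges in the sample output above, is reported as unverifiable rather than guessed at.

```typescript
type Status = "normal" | "abnormal" | "critical";

interface Finding {
  name: string;
  value: string;
  referenceRange: string;
  status: Status;
}

type Check = "consistent" | "mismatch" | "unverifiable";

// Hypothetical helper: compare the model's label with the stated range.
function checkFinding(finding: Finding): Check {
  // Only plain "low-high" numeric ranges are handled in this sketch.
  const range = /^\s*(-?\d+(?:\.\d+)?)\s*-\s*(-?\d+(?:\.\d+)?)\s*$/.exec(
    finding.referenceRange
  );
  const value = Number(finding.value);
  if (!range || finding.value.trim() === "" || Number.isNaN(value)) {
    return "unverifiable";
  }

  const low = Number(range[1]);
  const high = Number(range[2]);
  const inRange = value >= low && value <= high;

  // "normal" should mean in range; "abnormal"/"critical" should mean out of range.
  return inRange === (finding.status === "normal") ? "consistent" : "mismatch";
}

const suspicious = checkFinding({
  name: "Glucose",
  value: "110",
  referenceRange: "70-99",
  status: "normal",
});
console.log(suspicious); // the label disagrees with the range
```

Findings that come back as a mismatch could be flagged in the UI or sent through the model again instead of being shown as-is.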

In conclusion, this has been a pretty interesting and exciting exercise. I gained a lot of knowledge about these systems and how they’re implemented in real-world scenarios. I’ll keep increasing the difficulty of my projects to continue pushing the limits of these AIs. For now, I’m pretty impressed with what I was able to accomplish with these tools.

That’s all for today — peace out! ✌️

To see the live project go to this website. 👨🏽‍💻
