Satya Nadella, Microsoft's CEO, discusses AI agents and quantum technology in an interview on the South Park Commons YouTube channel. At 29:36, he says the world is too messy for SQL to work, which might be surprising, as SQL serves as an API for relational databases, built to store various types of data, including the most complex.

This reminds me of some challenges I encountered with the relational model. I will share a real-life example from many years ago when I worked as a data architect at an airline company before the rise of document databases to resolve such issues.

Flights have an airport of origin and an airport of destination. This is a typical case where one table has multiple aliases in a query because of the two different roles. In a relational database, the table of flights has two columns with a foreign key that references a table of airports: origin airport and destination airport. There are already many questions that can start a debate with relational data modelers:

Should we use singular ("flight") or plural ("flights") for table names

Should we name the columns with the role first or last: destination airport ("DST_ARP") or airport of destination ("ARP_DST")

What are the keys? The foreign key can use the IATA code to avoid joins in many queries or a surrogate key in case the IATA code changes, but with two additional joins for the queries

What are the tables? How many tables are needed to store this information, normalized for easier updates or denormalized for simpler queries?

In a document model, we can store all relevant information together. An "airport" collection will contain one document per airport, detailing its IATA code, name, city, latitude, longitude, country code, and country name, all neatly organized in sub-documents.

Here is an example of a document that describes Amsterdam's Airport

{

"_id": "AMS",

"name": "Amsterdam Airport Schiphol",

"location": {

"city": {

"name": "Amsterdam",

"country": {

"name": "Netherlands",

"code": "NL"

}

},

"coordinates": {

"latitude": 52.308613,

"longitude": 4.763889

}

},

"iata": "AMS",

"icao": "EHAM",

"is_hub_for_airline": ["KLM", '"Air France"],

"timezone": "Europe/Amsterdam",

"type": "international",

"runways": [

{

"name": "18R/36L",

"length_meters": 3800

},

{

"name": "06/24",

"length_meters": 3500

}

],

"website": "https://www.schiphol.nl/"

}

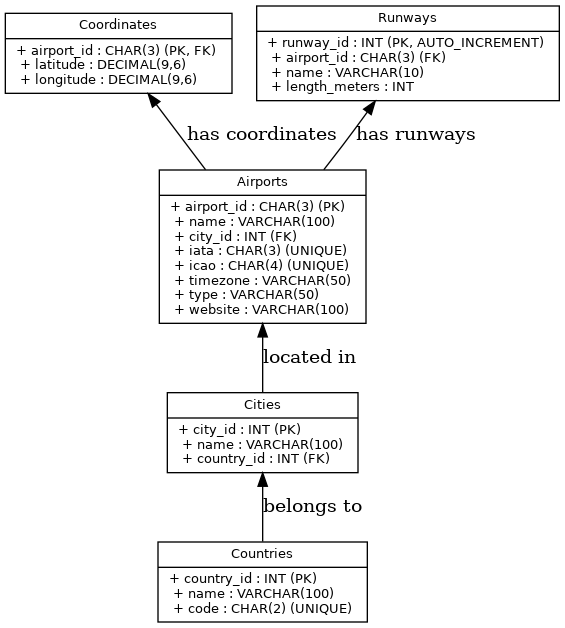

In a relational database, because of the One-To-Many relationships and to obey the first Normal Form, the same data is scattered to multiple tables:

INSERT INTO Countries (country_id, name, code)

VALUES (1, 'Netherlands', 'NL');

INSERT INTO Cities (city_id, name, country_id)

VALUES (1, 'Amsterdam', 1);

INSERT INTO Airports (airport_id, name, city_id, iata, icao, timezone, type, website)

VALUES ('AMS', 'Amsterdam Airport Schiphol', 1, 'AMS', 'EHAM', 'Europe/Amsterdam', 'international', 'https://www.schiphol.nl/');

INSERT INTO Coordinates (airport_id, latitude, longitude)

VALUES ('AMS', 52.308613, 4.763889);

INSERT INTO Runways (airport_id, name, length_meters)

VALUES ('AMS', '18R/36L', 3800), ('AMS', '06/24', 3500);

A relational table has a fixed structure that must be declared before inserting data:

CREATE TABLE Countries (

country_id INT PRIMARY KEY,

name VARCHAR(100) NOT NULL,

code CHAR(2) NOT NULL UNIQUE

);

CREATE TABLE Cities (

city_id INT PRIMARY KEY,

name VARCHAR(100) NOT NULL,

country_id INT,

FOREIGN KEY (country_id) REFERENCES Countries(country_id)

);

CREATE TABLE Airports (

airport_id CHAR(3) PRIMARY KEY,

name VARCHAR(100) NOT NULL,

city_id INT,

iata CHAR(3) NOT NULL UNIQUE,

icao CHAR(4) NOT NULL UNIQUE,

timezone VARCHAR(50) NOT NULL,

type VARCHAR(50),

website VARCHAR(100),

FOREIGN KEY (city_id) REFERENCES Cities(city_id)

);

CREATE TABLE Coordinates (

airport_id CHAR(3),

latitude DECIMAL(9,6) NOT NULL,

longitude DECIMAL(9,6) NOT NULL,

PRIMARY KEY (airport_id),

FOREIGN KEY (airport_id) REFERENCES Airports(airport_id)

);

CREATE TABLE Runways (

runway_id INT PRIMARY KEY AUTO_INCREMENT,

airport_id CHAR(3),

name VARCHAR(10) NOT NULL,

length_meters INT NOT NULL,

FOREIGN KEY (airport_id) REFERENCES Airports(airport_id)

);

Declaring foreign keys is essential to ensure referential integrity, which isn't required when modeling in a single document since the relationship is inherent in the data structure.

With numerous tables, an ERD diagram aids in illustrating the structure:

Welcome to Ou Messy World

This data model works well when a city is assigned an airport, which is usually true. You can develop numerous applications based on this model until a significant issue emerges: your flight schedule features a new international airport, EuroAirport Basel Mulhouse Freiburg, which this model cannot accommodate. This airport is physically in France, but you can drive to Basel without crossing any border, so it is also in Switzerland.

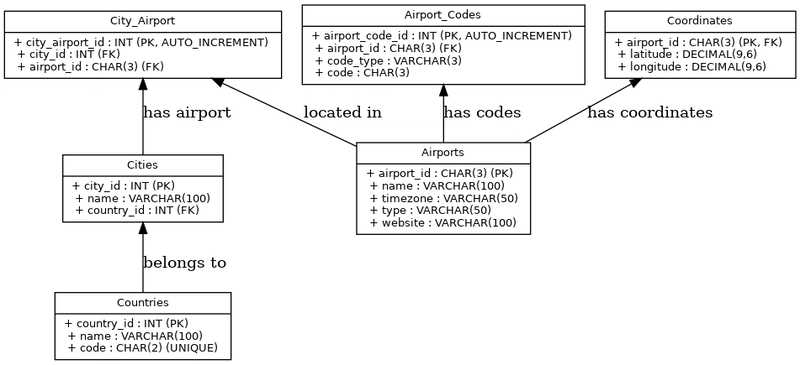

This airport is unique, bi-national, serving Basel in Switzerland and Mulhouse in France. It has multiple IATA codes: EAP, as well as MLH and BSL for each country. This necessitates a structural change to the relational data model because the relationship between airports and cities must be a Many-to-Many:

CREATE TABLE Countries (

country_id INT PRIMARY KEY,

name VARCHAR(100) NOT NULL,

code CHAR(2) NOT NULL UNIQUE

);

CREATE TABLE Cities (

city_id INT PRIMARY KEY,

name VARCHAR(100) NOT NULL,

country_id INT,

FOREIGN KEY (country_id) REFERENCES Countries(country_id)

);

CREATE TABLE Airports (

airport_id CHAR(3) PRIMARY KEY,

name VARCHAR(100) NOT NULL,

timezone VARCHAR(50) NOT NULL,

type VARCHAR(50),

website VARCHAR(100)

);

CREATE TABLE Airport_Codes (

airport_code_id INT PRIMARY KEY AUTO_INCREMENT,

airport_id CHAR(3),

code_type VARCHAR(3) CHECK (code_type IN ('EAP', 'BSL', 'MLH')),

code CHAR(3),

FOREIGN KEY (airport_id) REFERENCES Airports(airport_id)

);

CREATE TABLE City_Airport (

city_airport_id INT PRIMARY KEY AUTO_INCREMENT,

city_id INT,

airport_id CHAR(3),

FOREIGN KEY (city_id) REFERENCES Cities(city_id),

FOREIGN KEY (airport_id) REFERENCES Airports(airport_id)

);

CREATE TABLE Coordinates (

airport_id CHAR(3),

latitude DECIMAL(9,6) NOT NULL,

longitude DECIMAL(9,6) NOT NULL,

PRIMARY KEY (airport_id),

FOREIGN KEY (airport_id) REFERENCES Airports(airport_id)

);

Inserting a single airport data requires more statements per transaction:

-- Insert into Countries

INSERT INTO Countries (country_id, name, code) VALUES

(1, 'Switzerland', 'CH'),

(2, 'France', 'FR');

-- Insert into Cities

INSERT INTO Cities (city_id, name, country_id) VALUES

(1, 'Basel', 1),

(2, 'Mulhouse', 2);

-- Insert into Airports

INSERT INTO Airports (airport_id, name, timezone, type, website) VALUES

('EAP', 'EuroAirport Basel Mulhouse Freiburg', 'Europe/Zurich', 'international', 'https://www.euroairport.com/');

-- Insert into Airport_Codes

INSERT INTO Airport_Codes (airport_id, code_type, code) VALUES

('EAP', 'EAP', 'EAP'),

('EAP', 'BSL', 'BSL'),

('EAP', 'MLH', 'MLH');

-- Insert into City_Airport

INSERT INTO City_Airport (city_id, airport_id) VALUES

(1, 'EAP'),

(2, 'EAP');

-- Insert into Coordinates

INSERT INTO Coordinates (airport_id, latitude, longitude) VALUES

('EAP', 47.59, 7.529167);

The Entity-Relationship Diagram (ERD) shows an additional table for the Many-to-Many relationship between airports and cities:

All other airports must use the new model, even if they have only one city per airport. Having a model that distinguishes the two possibilities of One-to-Many and Many-to-Many would be even more complex, with inheritance leading to more tables.

Modifying such a data model affects all existing code, requiring all queries to be rewritten with a new join. In practice, this modification is too risky, leading to the implementation of some workarounds for legacy code. For instance, I've seen an application with a dummy city for Basel+Mulhouse and a dummy country for Switzerland+France. Other applications ignored the multi-city property and added a BSL-MLH dummy flight with a zero-kilometer distance and a zero-minute flight duration, which had unexpected side effects. These workarounds leverage the existing code for single-city airports, ensuring that only the new code is impacted by the new airport. However, the data model no longer reflects the real world, and the ideal normalized model that is supposed to avoid anomalies is broken.

This is where the world is too messy for SQL: a normalized data model must include all possibilities, with one model shared by all objects, including the nominal case and the diversity of edge cases. Everything can be represented with normalized tables, but in practice, applications break this model for more flexibility. Many applications that adapt to different domains opted for an Entity-Attribute-Value model to get more agility, but at the price of the worst performance because the SQL query planner of a relational database is not built to optimize such key-value data access. If you have never encountered such schema or wonder what the problem is, I recommend this old story by Tim Gorman about a single "DATA" table model: bad carma.

Document Data Modeling Flexibility

With a document model, two documents of the same collection do not strictly need to adhere to the same structure. I can keep the existing structure and code for the single-city airports and use an array instead of a single value when inserting the bi-national airport:

{

"_id": "EAP",

"name": "EuroAirport Basel Mulhouse Freiburg",

"location": {

"city": [

{

"name": "Basel",

"country": {

"name": "Switzerland",

"code": "CH"

}

},

{

"name": "Mulhouse",

"country": {

"name": "France",

"code": "FR"

}

}

],

"coordinates": {

"latitude": 47.59,

"longitude": 7.529167

}

},

"iata": [ "EAP", "BSL", "MLH" ],

"timezone": "Europe/Zurich",

"type": "international",

"website": "https://www.euroairport.com/"

}

Such a format may require additional code when the business logic differs between single and multi-city airports. Still, there's no need to change the existing code and reformat the existing data. The additional complexity due to the "messy" world has a scope limited to its new context and does not undermine the entirety of the existing data model.

Examples like this can create the impression that the relational model is too rigid. Although normalization was designed to facilitate model evolution, and SQL databases have reduced the downtime for some ALTER statements, it still restricts all data to a single model. Minor changes in business can break existing code and significantly increase the complexity of the data model. A normalized relational model makes adding a column to a table easy. However, when the keys or the association cardinality changes, the relational model requires impactful modification with lots of code to modify and test, and there is downtime during the schema migration.

Document Data Modeling and MongoDB API

Document data modeling allows you to add documents even if their structure diverges from the collection's existing documents. The structure can still be constrained with schema validation, but with more agility than what SQL relational tables definition provide.

Here is an example of schema validation that accepts the two document types - note the "oneOf" sections:

db.runCommand({

collMod: "airports",

validator: {

$jsonSchema: {

bsonType: "object",

required: ["_id", "name", "location", "iata", "timezone", "type", "website"],

properties: {

...

"location": {

bsonType: "object",

required: ["city", "coordinates"],

properties: {

"city": {

oneOf: [

{

bsonType: "object",

required: ["name", "country"],

...

},

{

bsonType: "array",

items: {

bsonType: "object",

required: ["name", "country"],

...

}

}

]

},

...

}

},

"iata": {

oneOf: [

{

bsonType: "string",

description: "must be a string or an array of strings and is required"

},

{

bsonType: "array",

items: {

bsonType: "string"

}

}

]

},

...

}

}

}

});

With schema validation, you can combine the schema flexibility with schema integrity constraints. It is recommended that schema validation be added so that the MongoDB database can guarantee that the documents have the fields expected by the application code.

Additionally, the MongoDB API prioritizes developer experience for the application evolutions. Such polymorphic schema may not need different code. I have inserted the two documents described above into an "airports" collection, and the code to find a country's airports is the same whether the city attribute is one sub-document or an array

mdb> // find airports in Netherlands

mdb> db.airports.find({

"location.city.country.name": "Netherlands"

},{

"name": 1

});

[ { _id: 'AMS', name: 'Amsterdam Airport Schiphol' } ]

mdb> // find airports in France

db.airports.find({

"location.city.country.name": "France"

},{

"name": 1

});

[ { _id: 'EAP', name: 'EuroAirport Basel Mulhouse Freiburg' } ]

mdb> // find airports in Switzerland

db.airports.find({

"location.city.country.name": "Switzerland"

},{

"name": 1

});

[ { _id: 'EAP', name: 'EuroAirport Basel Mulhouse Freiburg' } ]

mdb>

With MongoDB, you don't have to change the query. You also do not have to change the index definition. I have created an index on the country name:

mdb> db.airports.createIndex(

{ "location.city.country.name": 1 }

);

location.city.country.name_1

When having only single-city airports in the collection, the execution plan for the find query shows:

stage: 'IXSCAN',

keyPattern: { 'location.city.country.name': 1 },

indexName: 'location.city.country.name_1',

isMultiKey: false,

multiKeyPaths: { 'location.city.country.name': [] },

indexBounds: {

'location.city.country.name': [ '["Netherlands", "Netherlands"]' ]

}

Once a multi-city airport has been added, it switches to a multi-key index:

stage: 'IXSCAN',

keyPattern: { 'location.city.country.name': 1 },

indexName: 'location.city.country.name_1',

isMultiKey: true,

multiKeyPaths: { 'location.city.country.name': [ 'location.city' ] },

indexBounds: {

'location.city.country.name': [ '["Netherlands", "Netherlands"]' ]

}

MongoDB multi-key indexes simply index more than one value per document when the cardinality changes from One-to-One to One-to-Many.

You may think that using a JSON data type in an SQL database gives you the same advantages. However, query planning and indexing are significant differences between document databases like MongoDB and storing documents in a relational database like PostgreSQL.

PostgreSQL's JSONB is a data type that enables schema-less data storage. Using an expression index, you can create an index for a specific key in the document, provided it is known in advance (schema-on-write) and is a single key without an array in the path. However, you must use an inverted index when the path includes an array. GIN indexes, optimized for indexing unknown sub-paths (schema-on-read), are inverted indexes and can be used with arrays (see Indexing JSON in PostgreSQL). Still, they do not provide all the features of regular indexes. For example, the result from a GIN index in PostgreSQL is not sorted and cannot be used to optimize an ORDER BY ... LIMIT. When the schema-on-write involves a one-to-many relationship, storing it in normalized columns in a separate table and joining it at the time of the query is often recommended.

MongoDB is a database that natively indexes document paths, so you don't need this double data modeling approach. Regular indexes can be used on the schema-on-write part of the document, even when the path includes an array, and provide the full Equality, Sort, Range experience. Those indexes follow the flexibility of the document model, where not only new fields can be added, but a One-to-One can become a One-to-Many without adding more complexity. For the schema-on-read part, where the structure is unknown before the query, you can use the same indexes with wildcards to index all keys.

Satya Nadella's interview was about AI foundation models. MongoDB has search indexes for schemaless text or embeddings, but regular indexes are still used for exact searches on structured schemas. When the world is too messy for SQL, MongoDB excels by avoiding a one-size-fits-all data model. Instead, it provides a domain-driven model tailored to specific access patterns, accommodating new complex outlier business rules without complicating the existing structure, code, and index definition.

Top comments (15)

MongoDB is amazing... until you go further and discover graph databases such as Neo4j, OrientDB, et al.

The one thing MongoDB seems to have forgotten about is relationships between entities, that can be themselves messy, hence the idea of using graphs to enjoy the goodness of traversal algorithms for DB searches.

What about a graph query language on top of MongoDB:

PuppyGraph - Querying MongoDB Atlas Data as a Graph

didn't know about that... however, it's still an adaptation, an afterthought. That means all graph-related stuff happens outside of the DB space, in library space, so it's going to sacrifice performance compared to native graph DBs

anyway, please don't get me wrong, I don't want to diminish MongoDB at all, it's an excellent product with its space in the market, still great at a number of things

No problem, and you are right that a purpose-built database should perform better for its use cases than a general purpose database. But I only rely on execution plans to understand the performance difference, and didn't test PuppyGraph on MongoDB yet - but that will come

Mongo is in no way "purpose-built" for persisting business entity data, and general purpose database engines have been optimized for over 50 years to quickly, efficiently, and reliably store and retrieve relational data. They also provide relational integrity, which typically must be coded and maintained manually in document databases like Mongo.

Please, please learn more about SQL databases, and stop these misinformed and misleading posts.

Never said that 🤷🏼♂️. MongoDB is a general purpose database.

I'm writing this because I know SQL databases. I'm a big fan and have worked 30 years with Dev and Ops on DB2, Oracle, PostgreSQL, and others. I don't know what makes you angry. I wrote this to provide an example behind "The World Is Too Messy for SQL to Work" for those who want to understand where it might come from.

There's no need to read it if you are convinced that all data processing must happen in the database, on a fully normalized data model with all integrity constraints and stored procedures. You are right. But some developers and architects might think differently, and it can be interesting to listen to them and try to understand.

I don't know why you think I'm angry. I'm very calmly stating that what you're telling people is wrong and calmly asking you to stop.

I've worked for 30 years cleaning up expensive messes caused by people who are convinced that they know what they're doing.

"All models are wrong, some are useful"

en.wikipedia.org/wiki/All_models_a...

SQL presents a relational data model. It can be useful, and has semantics that can be predicted, and enable powerful queries.

Documents, not so much.

Sure, use documents if you want flexibility -- just don't hope to get very efficient queries.

Why not efficient queries? You can do good and bad queries on all models. The document model gives more control to the application developer (which can be good or bad - depends on the developer 😁) rather than the database (How Good Are Query Optimizers, Really?).

An example where storing as a document helps to index more efficiently: dev.to/franckpachot/a-case-where-s...

Sorry, but this is complete nonsense.

SQL does not in any way serve as "an API for relational databases". Structured Query Language (SQL) is a domain-specific language (DSL) that is used to manage data in relational database management systems (RDBMS). It excels at set-based operations and is no way an Application Programming Interface (API).

What your examples establish is not that the world is too mess for SQL but that your (and possibly Mr. Nadella's) understanding of and approach to data modeling (not to mention business processes) may be a bit too simplistic.

For example, there is no need to associate a single city and country with a single airport, and international airports are hardly the only examples where trying to do so will probably introduce problems. Relational databases easily support many-to-many relationships where multiple locations can be associated with a single airport, etc.

There are also issues with the assumptions you've made in your quick and dirty data model that will come back to haunt you, but the bottom line is that using a document database for business entity data is simply wrong -- the wrong tool for the job.

I could go on and on, but my How Bad Things Happen … in the Data Layer article on Medium covers most of them.

Please learn more about SQL and how to use it correctly and well before pronouncing it insufficient. Many, many relational systems disprove your thesis statement all day, every day.

I know what SQL is, and how it was designed to process data in databases. But some developers and architects have reasons to run some data processing in the application tier, then use SQL as an API to the database service. Wasn't called API in 1992, because two and three tier architecture were not out in the wild, but look at the four "Classes of SQL-statements" in the SQL standard, and you will recognize API.

Yes, but that's exactly the point of the example: normalization forces you to make a Many-to-Many the general case when it is the exception. Yes, of course relational databases support it, but with more complexity, harder query planning, and less declarative integrity constraints (in the absence of assertions define in SQL but implemented in no RDBMS). And getting back to the API: how do you return a Many-to-Many with one SQL statement when the result set is a single two-dimension table?

If you don't need it and don't want to learn it, then, sure, it's wrong for you, don't use it. But some developers are more successful with it. No reason to be angry, different development approach, skills, architecture decisions, requirements. I like to listen to others point of view. I could have stayed working on SQL databases for life, but I like to listen to developers and share what I learn from them.

Not if you think SQL is an API, regardless of where it's run. APIs are collections of discreet endpoints.

They are interfaces, as the name states, not a language, which SQL is. One cannot, for example, combine or nest API endpoint calls, e.g.

GET http://myapp/airports/(GET http://myapp/cities/1), as you can with SQL sub-selects, etc.Even more nonsensical. The thing being modeled determines the appropriate approach. Some things are by their nature one-to-many; other things are many-to-many. You've done it correctly if your model accurately reflects reality.

You're joking, right? Off the top of my head, something like this:

No, it's simply the wrong tool for the job. I'm not saying you can't get it to work, but you'll spend more time and money than necessary (and needlessly complicate the tech stack) getting it to work reliably and correctly.

Each correctly-modeled business entity -- a representation of the person, place, and thing (or "noun") our systems are intended to manage -- is by its nature a consistent set of attributes that does not often change. This is ideal for normalized, highly structured, relational tables. Document databases are ideal for semi-structured data that can vary widely from instance to instance.

I use NoSQL databases where they are appropriate. They are not appropriate for entity data.

Please stop sharing what you've learned from listening to developers. It's incorrect on several counts.

The best is to have the best of all worlds. Oracle converged database with json-relational duality, graph support, relational, sql and hey, even support for MongoDB wire protocol :) Oh, and vector support

Yes, sure, people who want to escape Oracle Database don't do it for the lack of features.