Scrape the Flipkart website using BeautifulSoup and store the data in a CSV file.

IDE Used: PyCharm

First, install the packages one by one:

```
pip install bs4
pip install requests
pip install pandas
```

Import all modules:

```python
import requests
from bs4 import BeautifulSoup
import pandas as pd
```

requests is used to send HTTP requests.

BeautifulSoup extracts content (title, tags, text, etc.) from the web page.

pandas is used to create the CSV file.

Step 1: Load the web page with requests

The requests module lets you send HTTP requests. requests.get() returns a Response object that holds all of the response data (content, encoding, status code, etc.).

```python
url = "https://www.flipkart.com/search?q=laptop&otracker=search&otracker1=search&marketplace=FLIPKART&as-show=on&as=off"

req = requests.get(url)
```
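The long search URL is just a base path plus a query string, so it can also be built from a params dictionary, which makes the search term easy to change. The sketch below is an assumption beyond the original post: the helper names `search_params` and `fetch_search_page`, the `timeout` value, and the browser-like User-Agent are all hypothetical, and some sites (possibly including Flipkart) refuse requests' default agent. The last two lines build the request without sending it, so you can check the URL offline.

```python
import requests

BASE_URL = "https://www.flipkart.com/search"


def search_params(query):
    # Same query-string fields as the URL in this post, with q made variable.
    return {
        "q": query,
        "otracker": "search",
        "otracker1": "search",
        "marketplace": "FLIPKART",
        "as-show": "on",
        "as": "off",
    }


def fetch_search_page(query, timeout=10):
    """Hypothetical helper: download one search-results page.

    The User-Agent value is an assumption, not something the post uses.
    """
    headers = {"User-Agent": "Mozilla/5.0"}
    req = requests.get(BASE_URL, params=search_params(query),
                       headers=headers, timeout=timeout)
    req.raise_for_status()  # raise HTTPError on 4xx/5xx instead of parsing an error page
    return req


# Build (but do not send) the request to see the URL requests would use.
prepared = requests.Request("GET", BASE_URL, params=search_params("laptop")).prepare()
print(prepared.url)
```

The printed URL matches the hard-coded one, so `requests.get(BASE_URL, params=search_params("laptop"))` fetches the same page.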

Step 2: Extract the title with BeautifulSoup

BeautifulSoup provides many simple methods for navigating, searching, and modifying the parse (DOM) tree.

Extract the title

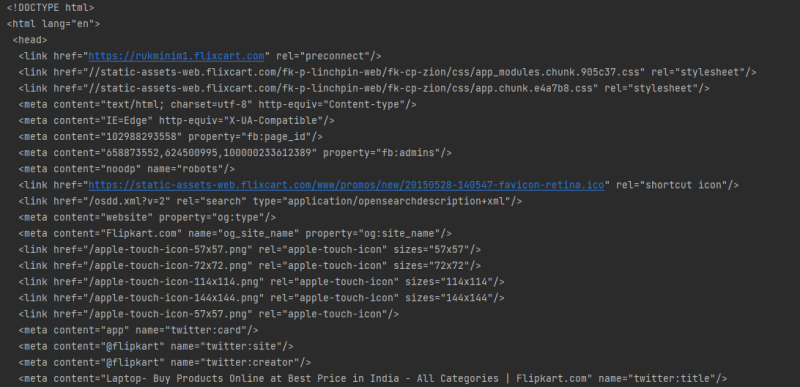

```python
page = BeautifulSoup(req.content, "html.parser")
print(page.prettify())

title = page.title
print(title.text)
```

page.prettify() returns the whole page as a neatly indented string, and print() displays it.

title.text gives the title of the page as plain text.

If you print title without calling .text, you get the full markup, tags included.
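To see the difference between the text and the markup without hitting the network, here is a small sketch that parses a hypothetical HTML string standing in for req.content. Note that page.title is the <title> tag itself, whereas page.head is the whole <head> element, whose .text includes every piece of text inside it.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for req.content so the example runs offline.
html = b"""<html><head><title>Laptops - Buy Online</title></head>
<body><p>Hello</p></body></html>"""

page = BeautifulSoup(html, "html.parser")

title_text = page.title.text      # only the text inside <title>
title_markup = str(page.title)    # the full tag, as print(page.title) shows it
print(title_text)
print(title_markup)
```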

```python
all_products = []
```

All extracted data is added to this all_products list, which is printed at the end.

Step 3: Start scraping

```python
products = page.find_all("div", {"class": "_3pLy-c row"})
```

find_all() (spelled findAll() in older code) scans the entire document and returns every match.

find_all(name, attrs, recursive, string, limit, **kwargs)

In our case, it finds all div tags whose class attribute is "_3pLy-c row".
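The optional parameters in that signature change what find_all() returns. This sketch uses hypothetical markup (not Flipkart's) to show limit, recursive, and string side by side.

```python
from bs4 import BeautifulSoup

# Hypothetical markup: three cards, one of which contains a nested card.
html = """<div id="results">
  <div class="card">Laptop A</div>
  <div class="card">Laptop B</div>
  <div class="card"><div class="card">Nested</div></div>
</div>"""
page = BeautifulSoup(html, "html.parser")
results = page.find("div", id="results")  # keyword arguments filter on attributes

all_cards = results.find_all("div", {"class": "card"})                 # every match, any depth
first_two = results.find_all("div", {"class": "card"}, limit=2)        # stop after two matches
direct = results.find_all("div", {"class": "card"}, recursive=False)   # direct children only
exact = results.find_all("div", string="Laptop B")                     # match on the tag's text

print(len(all_cards), len(first_two), len(direct), [t.text for t in exact])
```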

.select() takes a CSS selector and returns a list of every matching element, so it can locate elements by tag, class, ID, attribute, and so on. For example, "div > div._4rR01T" means a div with class _4rR01T that is a direct child of another div.

.strip() removes leading and trailing whitespace, including newlines.
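The sketch below runs find_all() and select() against hypothetical markup modelled on the class names in this post (Flipkart's real HTML is much larger, and auto-generated class names like these tend to change over time). Both calls find the same product cards; "div._3pLy-c.row" is the CSS way of saying "a div with both classes", and .strip() cleans up the name text.

```python
from bs4 import BeautifulSoup

# Hypothetical markup using the class names from this post.
html = """
<div class="_3pLy-c row">
  <div><div class="_4rR01T">
    Laptop A
  </div></div>
</div>
<div class="_3pLy-c row">
  <div><div class="_4rR01T">Laptop B</div></div>
</div>
<div class="other">Ad banner</div>
"""
page = BeautifulSoup(html, "html.parser")

# find_all with a class string matches the exact value of the class attribute.
by_find_all = page.find_all("div", {"class": "_3pLy-c row"})

# select() takes a CSS selector: a div that has both classes.
by_select = page.select("div._3pLy-c.row")

raw = by_select[0].select("div > div._4rR01T")[0].text  # includes newlines and spaces
names = [card.select("div > div._4rR01T")[0].text.strip() for card in by_select]
print(len(by_find_all), len(by_select), names)
```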

Step 4: Extract the name and price of each product

```python
for product in products:
    # Product name
    lname = product.select("div > div._4rR01T")[0].text.strip()
    print(lname)

    # Product price
    lprice = product.select("div > div._30jeq3")[0].text.strip()
    print(lprice)

    all_products.append([lname, lprice])
    print("Record Inserted Successfully...")
```

all_products.append() adds each product's name and price to the all_products list.

```python
print(all_products)
```
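Indexing with select(...)[0] raises an IndexError as soon as one card lacks a name or price (for example, a sponsored tile). A more defensive variant, sketched here as a hypothetical helper called extract_products, uses select_one(), which returns None when nothing matches, and skips incomplete cards. The selectors are the ones from this post; the sample markup is made up.

```python
from bs4 import BeautifulSoup


def extract_products(page):
    """Hypothetical helper: return [name, price] pairs, skipping incomplete cards."""
    rows = []
    for product in page.find_all("div", {"class": "_3pLy-c row"}):
        name_tag = product.select_one("div > div._4rR01T")   # None if not found
        price_tag = product.select_one("div > div._30jeq3")
        if name_tag is None or price_tag is None:
            continue  # select(...)[0] would raise IndexError here instead
        rows.append([name_tag.text.strip(), price_tag.text.strip()])
    return rows


# Made-up markup: the second card has no price element.
html = """
<div class="_3pLy-c row"><div><div class="_4rR01T">Laptop A</div><div class="_30jeq3">₹49,990</div></div></div>
<div class="_3pLy-c row"><div><div class="_4rR01T">Laptop B</div></div></div>
"""
all_products = extract_products(BeautifulSoup(html, "html.parser"))
print(all_products)
```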

Step 5: Save the data to a CSV file

First, create a list of column names:

```python
col = ["Name", "Price"]
```

```python
data = pd.DataFrame(all_products, columns=col)
print(data)
```

A DataFrame is a two-dimensional data structure, like a 2D array or a table with rows and columns.

```python
data.to_csv('extract_data.csv', index=False)
```

to_csv() exports a pandas DataFrame to a CSV file. index=False stops pandas from writing the row index as an extra first column.
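To see exactly what ends up in the file, this sketch writes the DataFrame to an in-memory buffer (io.StringIO) instead of disk and reads it back. The rows are made-up sample data in the same [name, price] shape as all_products. Because the prices contain commas, pandas wraps those fields in double quotes so the CSV still parses correctly.

```python
import io

import pandas as pd

# Made-up rows in the same [name, price] shape as all_products.
all_products = [["Laptop A", "₹49,990"], ["Laptop B", "₹62,490"]]
col = ["Name", "Price"]

data = pd.DataFrame(all_products, columns=col)

# Write to a buffer rather than a file so the example has no side effects;
# data.to_csv("extract_data.csv", index=False) writes the same text to disk.
buffer = io.StringIO()
data.to_csv(buffer, index=False)
csv_text = buffer.getvalue()
print(csv_text)

# Reading the text back recovers the original rows.
round_trip = pd.read_csv(io.StringIO(csv_text))
```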

Code

```python
import requests
from bs4 import BeautifulSoup
import pandas as pd

url = 'https://www.flipkart.com/search?q=laptop&otracker=search&otracker1=search&marketplace=FLIPKART&as-show=on&as=off'

# Download the search-results page and parse it
req = requests.get(url)
page = BeautifulSoup(req.content, "html.parser")
print(page.prettify())

title = page.title
print(title.text)

all_products = []
col = ['Name', 'Price']

# Each search-result card is a div whose class attribute is "_3pLy-c row"
products = page.find_all("div", {"class": "_3pLy-c row"})
print(products)

for product in products:
    # Product name
    lname = product.select("div > div._4rR01T")[0].text.strip()
    print(lname)

    # Product price
    lprice = product.select("div > div._30jeq3")[0].text.strip()
    print(lprice)

    all_products.append([lname, lprice])
    print("Record Inserted Successfully...")

# Build a table from the collected rows and save it as CSV
data = pd.DataFrame(all_products, columns=col)
print(data)
data.to_csv('laptop_products.csv', index=False)
```

Thank You 😊
