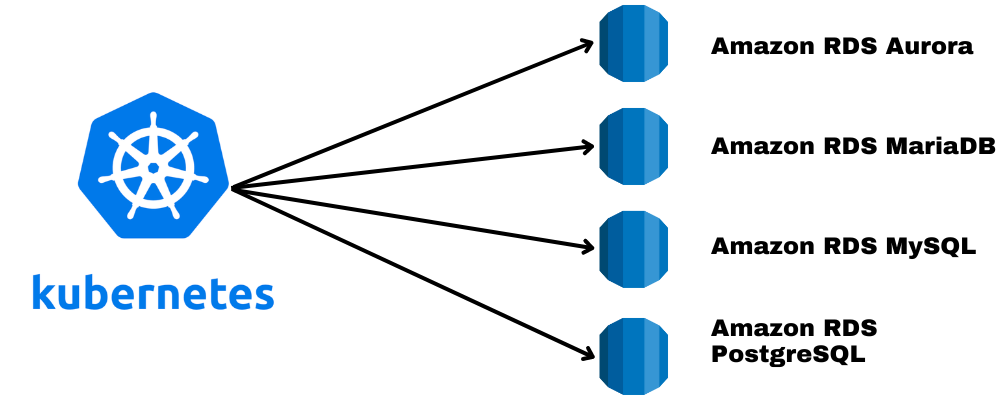

ACK controller for RDS is a Kubernetes controller for provisioning Amazon RDS instances in a Kubernetes-native way.

ACK controller for RDS supports creating these database engines:

- Amazon Aurora (MySQL & PostgreSQL)

- Amazon RDS for PostgreSQL

- Amazon RDS for MySQL

- Amazon RDS for MariaDB

- Amazon RDS for Oracle

- Amazon RDS for SQL Server

Install ACK Controller for RDS

Let's start by setting up an EKS cluster. ACK Controller for RDS requires AmazonRDSFullAccess to create and manage RDS instances.

We will also create a service account ack-rds-controller for ACK Controller for RDS and attach AmazonRDSFullAccess IAM permissions to this service account with the help of IRSA.

    apiVersion: eksctl.io/v1alpha5
    kind: ClusterConfig
    metadata:
      name: ack-cluster
      region: eu-west-1
      version: "1.22"
    iam:
      withOIDC: true
      serviceAccounts:
      - metadata:
          # Service account used by rds-controller https://github.com/aws-controllers-k8s/rds-controller/blob/main/helm/values.yaml#L81
          name: ack-rds-controller
          namespace: ack-system
        # https://github.com/aws-controllers-k8s/rds-controller/blob/main/config/iam/recommended-policy-arn
        attachPolicyARNs:
        - "arn:aws:iam::aws:policy/AmazonRDSFullAccess"
    managedNodeGroups:
    - name: managed-ng-1
      minSize: 1
      maxSize: 10
      desiredCapacity: 1
      instanceType: t3.large
      amiFamily: AmazonLinux2

eksctl create cluster -f ack-cluster.yaml
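For reference, IRSA works by annotating the Kubernetes service account with the ARN of an IAM role. The service account that eksctl creates from the config above should look roughly like the sketch below. The account ID and role name are placeholders, not real values; eksctl generates the actual role name.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ack-rds-controller
  namespace: ack-system
  annotations:
    # Placeholder ARN (assumption): the real account ID and role name
    # are generated by eksctl and will differ in your cluster.
    eks.amazonaws.com/role-arn: arn:aws:iam::111122223333:role/EXAMPLE-ROLE-NAME
```

You can compare this against the live object with `kubectl get serviceaccount ack-rds-controller -n ack-system -o yaml`.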

Once the cluster is created, install the ACK Controller for RDS using its Helm chart. The Helm charts for ACK Controller for RDS are stored in a public ECR repository.

Note: Check the latest version of the helm chart here

Authenticate to the public ECR registry using your AWS credentials:

aws ecr-public get-login-password --region us-east-1 | helm registry login --username AWS --password-stdin public.ecr.aws

Login Succeeded

Once you're authenticated, install ACK Controller for RDS in the ack-system namespace:

    helm upgrade \
      --install \
      --create-namespace \
      ack-rds \
      -n ack-system \
      oci://public.ecr.aws/aws-controllers-k8s/rds-chart \
      --version=v0.1.1 \
      --set=aws.region=eu-west-1 \
      --set=serviceAccount.create=false \
      --set=log.level=debug

During installation, we are setting these values in the Helm chart:

- `aws.region`: AWS Region for API calls.

- `serviceAccount.create`: Set to `false`, since the service account was already created by eksctl during cluster creation above.

- `log.level`: Set to `debug` to see detailed logs.
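The same three settings can also be kept in a values file instead of being passed as `--set` flags. This is a minimal sketch that mirrors the flags used above; the file name `values.yaml` is only an example.

```yaml
# values.yaml (example file name): mirrors the --set flags from the install command
aws:
  region: eu-west-1      # AWS Region for API calls
serviceAccount:
  create: false          # the service account already exists (created by eksctl)
log:
  level: debug           # detailed controller logs
```

With this file in place, the three `--set` flags in the install command can be replaced by `-f values.yaml`.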

ACK controller for RDS installs several CRDs that allow you to provision RDS instances and related components:

- `GlobalCluster`: Custom resource to create an Aurora Global Cluster.

- `DBCluster`: Custom resource to create an Amazon Aurora DB Cluster.

- `DBInstance`: Custom resource to create Amazon RDS DB Instances.

- `DBClusterParameterGroup`: Custom resource to create DB cluster parameter groups.

- `DBParameterGroup`: Custom resource to create DB parameter groups.

- `DBProxy`: Custom resource to create an Amazon RDS Proxy.

- `DBSubnetGroup`: Custom resource to create a DB Subnet Group.

Note: Refer here for the reference of these CRDs.

Check ACK controller for RDS logs in cluster:

kubectl logs -f -n ack-system -l app.kubernetes.io/instance=ack-rds

Create Aurora PostgreSQL Cluster with RDS Controller

To create an Aurora PostgreSQL cluster with ACK controller for RDS, we first create a DB Subnet Group using DBSubnetGroup, then create an Aurora cluster using DBCluster, and finally add two Aurora database instances to the cluster using DBInstance.

- Create a DB Subnet Group `test-ack-subnetgroup` with all the subnets that are part of the VPC created by eksctl, or use your existing subnets:

      apiVersion: rds.services.k8s.aws/v1alpha1
      kind: DBSubnetGroup
      metadata:
        name: "test-ack-subnetgroup"
      spec:
        name: "test-ack-subnetgroup"
        description: "Test ACK Subnet Group"
        subnetIDs:
          - "subnet-011e4c822231b65fa"
          - "subnet-001ce16b9b7c3578f"
          - "subnet-0a90579d9b066ecf7"
          - "subnet-00e6d2e90ea132c8b"
          - "subnet-0fbc22e542749c98c"
          - "subnet-080c0b1ed39b0aa91"
        tags:
          - key: "created-by"
            value: "ack-rds"

  Note: To get all the subnets created by eksctl, run:

      eksctl get cluster ack-cluster -o json | jq -r '.[0].ResourcesVpcConfig.SubnetIds'

- Check the status of the `test-ack-subnetgroup` DB Subnet Group:

      kubectl get dbsubnetgroup test-ack-subnetgroup -o json | jq -r '.status.subnetGroupStatus'
      Complete

  If it returns `Complete`, the DB Subnet Group was created successfully.

- Create a secret to store the password of the master user:

      kubectl create secret generic "test-ack-master-password" --from-literal=DATABASE_PASSWORD="mypassword"
      secret/test-ack-master-password created

- Create an Aurora DB Cluster `test-ack-aurora-cluster` using `DBCluster`:

      apiVersion: rds.services.k8s.aws/v1alpha1
      kind: DBCluster
      metadata:
        name: "test-ack-aurora-cluster"
      spec:
        engine: aurora-postgresql
        engineVersion: "14.4"
        engineMode: provisioned
        dbClusterIdentifier: "test-ack-aurora-cluster"
        databaseName: test-ack
        dbSubnetGroupName: test-ack-subnetgroup
        deletionProtection: true
        masterUsername: "test"
        masterUserPassword:
          namespace: "default"
          name: "test-ack-master-password"
          key: "DATABASE_PASSWORD"
        storageEncrypted: true
        enableCloudwatchLogsExports:
          - postgresql
        backupRetentionPeriod: 14
        copyTagsToSnapshot: true
        preferredBackupWindow: 00:00-01:59
        preferredMaintenanceWindow: sat:02:00-sat:04:00

- Once created, check the status of the `DBCluster` resource `test-ack-aurora-cluster`:

      kubectl describe DBCluster test-ack-aurora-cluster

  If the status is `available`, the Aurora DB Cluster was created successfully.

- After the `DBCluster` is successfully created, add two Aurora DB Instances, `test-ack-aurora-instance-1` and `test-ack-aurora-instance-2`, to the DB cluster using `DBInstance`. The `dbClusterIdentifier` must match the identifier of the cluster created above:

      apiVersion: rds.services.k8s.aws/v1alpha1
      kind: DBInstance
      metadata:
        name: test-ack-aurora-instance-1
      spec:
        dbInstanceClass: db.t4g.medium
        dbInstanceIdentifier: test-ack-aurora-instance-1
        dbClusterIdentifier: "test-ack-aurora-cluster"
        engine: aurora-postgresql
      ---
      apiVersion: rds.services.k8s.aws/v1alpha1
      kind: DBInstance
      metadata:
        name: test-ack-aurora-instance-2
      spec:
        dbInstanceClass: db.t4g.medium
        dbInstanceIdentifier: test-ack-aurora-instance-2
        dbClusterIdentifier: "test-ack-aurora-cluster"
        engine: aurora-postgresql

  When these instances are available, one of them will be the Writer Instance and the other will be a Reader Instance.

- Check the status of each `DBInstance`:

      kubectl describe DBInstance test-ack-aurora-instance-1
      kubectl describe DBInstance test-ack-aurora-instance-2

  Status `available` means that the DB Instances are ready to use.

- Get the Writer Endpoint of the Aurora RDS cluster:

      kubectl get dbcluster test-ack-aurora-cluster -o json | jq -r '.status.endpoint'
      test-ack-aurora-cluster.cluster-cncphmukmdde.eu-west-1.rds.amazonaws.com

- Get the Reader Endpoint of the Aurora RDS cluster:

      kubectl get dbcluster test-ack-aurora-cluster -o json | jq -r '.status.readerEndpoint'
      test-ack-aurora-cluster.cluster-ro-cncphmukmdde.eu-west-1.rds.amazonaws.com
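If you prefer to keep everything declarative, the master password secret from the steps above can also be written as a manifest instead of being created with `kubectl create secret`. This is a sketch that reuses the same name, key, and example password; the `default` namespace is taken from the `masterUserPassword` reference in the DBCluster spec.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: test-ack-master-password
  namespace: default          # matches masterUserPassword.namespace in the DBCluster
type: Opaque
stringData:
  # Example value from this post; use a real password and do not commit it in plain text.
  DATABASE_PASSWORD: "mypassword"
```

`stringData` accepts the plain value and Kubernetes stores it base64-encoded under `data`, so the result is equivalent to the `--from-literal` form.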

When you have the Aurora DB Cluster provisioned, you would want to extract values such as Reader Endpoint and Writer Endpoint and configure them in the application to connect to the cluster.

ACK controller for RDS provides a way to export these values directly from the DBCluster or DBInstance resource to a ConfigMap or Secret of your choice.

Export the Database Details in a ConfigMap

FieldExport CRD allows you to export any spec or status field from a DBCluster or DBInstance resource into a ConfigMap.

- Create an empty `ConfigMap`:

      kubectl create cm ack-cluster-config
      configmap/ack-cluster-config created

- Create a `FieldExport` to export the value of `.status.endpoint` from the `test-ack-aurora-cluster` DBCluster resource to the ConfigMap `ack-cluster-config`:

      apiVersion: services.k8s.aws/v1alpha1
      kind: FieldExport
      metadata:
        name: ack-cm-field-export
      spec:
        from:
          resource:
            group: rds.services.k8s.aws
            kind: DBCluster
            name: test-ack-aurora-cluster
          path: ".status.endpoint"
        to:
          kind: configmap
          name: ack-cluster-config
          key: DATABASE_HOST

- Inspect the values of the ConfigMap `ack-cluster-config`:

      kubectl get cm ack-cluster-config -o json | jq -r '.data'
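The same pattern should work for other status fields. As a sketch, a second FieldExport could place the reader endpoint next to the writer endpoint in the same ConfigMap. The resource name `ack-cm-reader-field-export` and the key `DATABASE_READER_HOST` are hypothetical names chosen for this example; the path `.status.readerEndpoint` is the field queried earlier with jq.

```yaml
apiVersion: services.k8s.aws/v1alpha1
kind: FieldExport
metadata:
  name: ack-cm-reader-field-export   # hypothetical name
spec:
  from:
    resource:
      group: rds.services.k8s.aws
      kind: DBCluster
      name: test-ack-aurora-cluster
    path: ".status.readerEndpoint"   # reader endpoint instead of writer endpoint
  to:
    kind: configmap
    name: ack-cluster-config
    key: DATABASE_READER_HOST        # hypothetical key
```

Using one FieldExport per field keeps each exported value independently removable.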

Export the Database Details in a Secret

FieldExport CRD allows you to export any spec or status field from a DBCluster or DBInstance into a Secret.

- Create an empty `Secret`:

      kubectl create secret generic ack-cluster-secret
      secret/ack-cluster-secret created

- Create a `FieldExport` to export the value of `.status.endpoint` from the `test-ack-aurora-cluster` DBCluster resource to the Secret `ack-cluster-secret`:

      apiVersion: services.k8s.aws/v1alpha1
      kind: FieldExport
      metadata:
        name: ack-secret-field-export
      spec:
        from:
          resource:
            group: rds.services.k8s.aws
            kind: DBCluster
            name: test-ack-aurora-cluster
          path: ".status.endpoint"
        to:
          kind: secret
          name: ack-cluster-secret
          key: DATABASE_HOST

- Inspect the values of the Secret `ack-cluster-secret`:

      kubectl view-secret ack-cluster-secret --all

  Note: `view-secret` is a kubectl plugin; check out my blog on how to use this plugin.

📌 FieldExport and ACK resource should be in the same namespace.
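To show how an application might consume the exported values, here is a minimal sketch of a Pod that reads `DATABASE_HOST` from the ConfigMap and the master password from the secret created earlier. The Pod name, the `postgres:14` image, and port 5432 are assumptions made for this example, and the check only succeeds if the cluster's security group allows traffic from the Pod.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: ack-db-client            # hypothetical name
  namespace: default             # same namespace as the ConfigMap and Secret
spec:
  restartPolicy: Never
  containers:
    - name: client
      image: postgres:14         # assumption: any image that ships pg_isready works
      # Checks whether the writer endpoint accepts connections (port 5432 assumed).
      command: ["sh", "-c", "pg_isready -h \"$DATABASE_HOST\" -p 5432"]
      env:
        - name: DATABASE_HOST
          valueFrom:
            configMapKeyRef:
              name: ack-cluster-config
              key: DATABASE_HOST
        - name: DATABASE_PASSWORD
          valueFrom:
            secretKeyRef:
              name: test-ack-master-password
              key: DATABASE_PASSWORD
```

A real workload would use the same `valueFrom` references in its Deployment, so the endpoint never has to be copied by hand.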

Important - Clean up

To clean up, first disable deletion protection by setting .spec.deletionProtection to false in the manifest of the test-ack-aurora-cluster DBCluster resource, then re-apply it:

kubectl apply -f test-ack-aurora-cluster.yaml

dbcluster.rds.services.k8s.aws/test-ack-aurora-cluster configured
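The edit made to test-ack-aurora-cluster.yaml before re-applying it is a single field. The fragment below is a sketch of the relevant part only; it is not a complete manifest, and every other field is assumed to stay exactly as it was when the cluster was created.

```yaml
apiVersion: rds.services.k8s.aws/v1alpha1
kind: DBCluster
metadata:
  name: "test-ack-aurora-cluster"
spec:
  # ... all other spec fields unchanged from the original manifest ...
  deletionProtection: false   # was true; must be false before the cluster can be deleted
```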

Now you can delete the instances first and then the cluster:

kubectl delete -f test-ack-aurora-instance.yaml

kubectl delete -f test-ack-aurora-cluster.yaml
