🚨 There's a new, more advanced version of this bot that you can check out for free 🚨

Visit notifast.me to learn more

Intro

You can get a serious advantage on the internet when you can code your own tools. This post is about how I got my apartment with a web-scraping Discord bot.

The problem

The apartment market in Poland is a mess with the current state of the world. Prices are crazy high because of inflation, and demand is even higher with millions of people from Ukraine fleeing the war. There I was, needing to find a new place to rent in less than 3 weeks. There were a few popular listing websites, but the issue was that good listings were gone within a few minutes of being posted. I needed a way to get notified as soon as possible.

The solution

The solution was very simple. To get an advantage I decided to create a bot that checks the site every single minute and sends me a message on Discord when it finds a new listing.
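The heart of that idea can be sketched as a set difference: remember every link that has already been reported, and treat any scraped link not in that set as a new listing. This is a minimal sketch, not the bot's actual code. `findNewLinks` and the in-memory `Set` are hypothetical stand-ins. The project lists Prisma in its stack, so the real bot presumably keeps seen links in a database. The sketch also shows why each item needs its own unique link: the link is the item's identity.

```typescript
// Hypothetical helper (not from the project): returns the links that have not
// been seen before, in scrape order, and records them as seen.
function findNewLinks(scraped: string[], seen: Set<string>): string[] {
  const fresh: string[] = []
  for (const link of scraped) {
    if (!seen.has(link)) {
      seen.add(link) // also drops duplicates within the same scrape
      fresh.push(link)
    }
  }
  return fresh
}

// Usage: the first check reports everything, later checks only what is new.
const seen = new Set<string>()
findNewLinks(['/offer/1', '/offer/2'], seen) // ['/offer/1', '/offer/2']
findNewLinks(['/offer/1', '/offer/2', '/offer/3'], seen) // ['/offer/3']
```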

I decided to go with the following stack:

- Node.js

- Prisma

- Discord.js

- Puppeteer

Hosted on the Heroku free tier (R.I.P.)

The results

First, in a single day, I built a quick working prototype in JS with one hardcoded URL, and it worked like a charm for a few days. 90% of the time I was the first one to call, but it was still difficult because some people were paying blindly before even seeing the place.

Then, after checking out additional websites, I decided to make it more generic and open for other people to use. I rewrote it in TypeScript and created a simple Discord bot where you can add your own URL and get notified when a new item appears.

The new version of the bot, checking 3 different websites, ran for a little over a week and notified me about hundreds of new listings, and I finally got the perfect place. I'm absolutely sure I wouldn't have gotten it without the bot.

Here is how it turned out, with short instructions on how to use it:

Adding a new scraper job

The bot works with any site, including ones with dynamic content (Amazon, eBay, etc.). The only requirement is that the service has a unique link for each item.

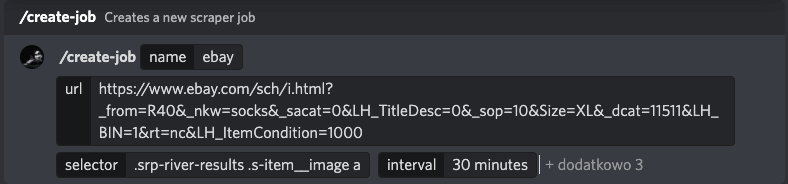

Use the /create-job command and fill in the form:

- name - name of the job

- url - URL that the bot will scrape

- selector - selector for an `a` tag with the link to the element (examples below)

- interval - interval of the job in minutes (minimum 1 minute)

- active? - default true; whether the job should be active right away (you can always enable or disable a job with the [enable/disable]-job commands)

- channel? - defaults to the channel where the command was run; the channel that should be messaged when new items appear

- clean? - default true; whether query params should be ignored (essential for some sites like eBay)
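Here is a sketch of what the `clean?` option could do. It assumes the option simply drops the query string before links are compared; the project may implement it differently. If a site adds query parameters that change between page loads, the same item would look new on every run, which is presumably why the option matters for sites like eBay. `normalizeLink` is a hypothetical name, and the URLs are illustrative.

```typescript
// Hypothetical helper sketching the `clean?` option: when `clean` is true,
// the query string is removed so links to the same item compare equal.
function normalizeLink(href: string, clean: boolean): string {
  if (!clean) return href
  // new URL() needs an absolute URL; an anchor's `href` property read in the
  // browser is already resolved to one.
  const url = new URL(href)
  url.search = '' // drops "?..." entirely
  return url.toString()
}

normalizeLink('https://example.com/item/123?ref=abc&sid=xyz', true) // 'https://example.com/item/123'
normalizeLink('https://example.com/item/123?ref=abc', false) // unchanged
```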

After that, you can perform basic CRUD operations on jobs with commands like /list-jobs, /update-job, /delete-job...

Wait...

Then simply wait for the job to run. The bot sends a message when new items are found:

You can also run a job manually with /run-job. Note that the first run will report all the elements that are currently on the website.

Code bits

You can check out the whole project here: https://github.com/jjablonski-it/webscraper-bot

Main function

This is the main function that runs when the bot starts. For every guild (Discord server) the bot is in, it checks whether the guild already exists in the database and saves it if not. It then registers the Discord slash commands for that guild. Finally, it starts the web server and the interval jobs.

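```typescript
// getClient, getGuilds, saveGuild, registerCommands, CONFIG, runWebServer and
// runIntervalJobs are defined in other modules of the project.
const client = await getClient()
const existingGuilds = await getGuilds()

// For every guild the bot is in: save it if it is new, then register the slash commands.
client.guilds.cache.forEach((guild) => {
  const guildExists = existingGuilds.some((g) => g.id === guild.id)
  if (!guildExists) {
    saveGuild(guild.id)
  }
  registerCommands(CONFIG.CLIENT_ID, guild.id)
})

// Health-check server (for Heroku) and the scraping loop
runWebServer()
runIntervalJobs()
```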
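The guild check above boils down to one question: which guild IDs does the client know about that the database doesn't? Here it is as a pure function. The name `guildsToSave` is hypothetical, and plain ID arrays stand in for the real Discord.js and Prisma objects. Building a `Set` first replaces the repeated `some` scan with constant-time lookups, which only starts to matter once the bot is in many guilds.

```typescript
// Hypothetical helper (not from the project): given the guild IDs the bot is in
// and the guild IDs already stored, return the ones that still need saving.
function guildsToSave(cachedIds: string[], storedIds: string[]): string[] {
  const stored = new Set(storedIds)
  return cachedIds.filter((id) => !stored.has(id))
}

guildsToSave(['111', '222', '333'], ['222']) // ['111', '333']
```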

Web scraper

This is a very basic scraping function that extracts the links (the `href` values) of all elements matching the selector on the page.

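```typescript
// `browser` is a Puppeteer Browser instance defined elsewhere in the project.
export const scrapeLinks = async (
  url: string,
  selector: string
): Promise<Array<string>> => {
  const page = await browser.newPage()
  try {
    // Always load a fresh copy of the page
    await page.setCacheEnabled(false)
    await page.goto(url)
    // Wait until the (possibly dynamic) content with the links has rendered
    await page.waitForSelector(selector)
    const links = await page.$$(selector)
    // Read each element's `href` property; the browser resolves it to an absolute URL
    const res = await Promise.all(
      links.map((handle) => handle.getProperty('href'))
    )
    const hrefs = (await Promise.all(
      res.map((href) => href.jsonValue())
    )) as string[]
    return hrefs
  } finally {
    // Close the tab even if navigation or the selector wait fails
    await page.close()
  }
}
```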

Web server

This server exists only so the app can be health-checked and woken up on Heroku, whose free dynos went to sleep after a period without traffic.

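```typescript
import { createServer } from 'http'

// Minimal server that answers every request with "OK".
// CONFIG comes from the project's config module.
const server = createServer((_req, res) => {
  res.write('OK')
  res.end()
})

export const runWebServer = () => {
  const { PORT } = CONFIG
  server.listen(PORT, () => console.log(`Server listening on port ${PORT}`))
}
```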

Interval jobs

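This function handles the jobs from all Discord channels. It keeps a tick counter that increases once a minute. On each tick, it runs every active job whose interval (in minutes) divides the counter, so a job with an interval of 5 runs every fifth minute.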

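```typescript
// getJobs, runJobs, isDev and the Job type come from other modules of the project.
export const runIntervalJobs = async () => {
  // Tick counter, advanced once per interval (every minute, or every 10 s in dev)
  let i = 0

  const run = async () => {
    // Claim this tick's number up front so overlapping runs don't share a tick
    const tick = i++
    try {
      const jobs: Job[] = await getJobs()
      // A job runs on every tick that is a multiple of its interval
      const jobsToRun = jobs.filter(
        ({ interval, active }) => active && tick % interval === 0
      )
      console.log(`${jobsToRun.length} jobs in queue`)
      await runJobs(jobsToRun)
    } catch (err) {
      // Without this, a rejected promise would go unhandled and could crash the process
      console.error('Failed to run interval jobs', err)
    }
  }

  run()
  const intervalId = setInterval(run, 1000 * (isDev ? 10 : 60))
  return intervalId
}
```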
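The loop in `runIntervalJobs` ticks once a minute (every 10 seconds in development). A job with `interval: n` therefore fires on ticks 0, n, 2n, and so on. To check this, the filter condition can be pulled out into a pure function. `dueJobs` and the trimmed-down `JobLike` type are hypothetical names for illustration, not part of the project.

```typescript
// Hypothetical minimal job shape; the real Job type lives in the project.
interface JobLike {
  name: string
  interval: number // minutes, at least 1 (the bot enforces a 1-minute minimum)
  active: boolean
}

// Same condition as the filter in runIntervalJobs.
function dueJobs(tick: number, jobs: JobLike[]): JobLike[] {
  return jobs.filter(({ interval, active }) => active && tick % interval === 0)
}

const jobs: JobLike[] = [
  { name: 'every-minute', interval: 1, active: true },
  { name: 'every-5-minutes', interval: 5, active: true },
  { name: 'paused', interval: 1, active: false },
]

// Ticks 0 and 5 list both active jobs; ticks 1 to 4 list only 'every-minute'.
for (let tick = 0; tick <= 5; tick++) {
  console.log(tick, dueJobs(tick, jobs).map((j) => j.name))
}
```

A single shared tick keeps everything on one timer, but intervals can only be whole multiples of the tick. Also, because `setInterval` does not wait for an async callback to finish, a slow run can overlap with the next one.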

Conclusion

I'm very happy with the results, and I'm sure I will use this bot again in the future. It will keep running until the Heroku free tier shuts down, but if anyone is interested, I will move it somewhere else and maybe improve it further. It works, but it is nowhere near perfect, so feel free to contribute if you are interested.

Bot icon generated by DALL·E 2
