Dealing with flaky tests is one of the biggest pain points for anyone doing browser and end-to-end test automation. You know the drill: tests that start failing for no apparent reason, and intermittent failures that sap confidence in the test suite and block stable deployments. Maintaining tests can take up to 50% of a QA automation engineer's time.

Usually, the cause of a failure is quite simple: an element's name has changed, or a new modal window has appeared. What if we could use AI to identify the root cause and try to fix the test on the fly, instead of distracting an engineer from their actual work?

That's what I did.

Rather than just re-running flaky tests and hoping they pass, or manually debugging every failure, I tried several popular AI models with the new AI healing feature of CodeceptJS to fix failing tests.

The results were quite promising...

TLDR: AI CAN FIX YOUR TESTS!

But read on to learn which models I used and how to configure them.

Failing Test

I started with a failing test that opens the GitHub login page but uses incorrect locators.

```javascript
Scenario('Github login', ({ I }) => {
  I.amOnPage('https://github.com');
  I.click('Sign in');
  I.fillField('Username', 'davert');
  I.fillField('Password', '123345');
  I.click('Login');
  I.see('Incorrect username or password');
});
```

This test is executed by CodeceptJS using Playwright as the engine. You could use WebdriverIO instead, but I prefer Playwright because it is faster.

This test fails because the page has no "Login" button and no "Username" field. The test never reaches the assertion step because it fails on an interaction first.

The failing test runs for about 4 seconds. Keep that number in mind: it is the baseline for measuring how much AI healing slows a test down.

Fixing tests on the fly is possible with the `heal` plugin of CodeceptJS. To make it work, the plugin must be enabled in `codecept.conf.js`, and an AI provider must be configured there as well.
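For orientation, here is a minimal sketch of what such a `codecept.conf.js` could look like. It assumes CodeceptJS 3.5 or later with the Playwright helper. The `tests` glob and helper options are illustrative and are not taken from the demo repository. The stub `ai.request` is a placeholder to be replaced with one of the provider calls shown in the sections below.

```javascript
// codecept.conf.js: a minimal sketch, not the author's full configuration.
// Assumes CodeceptJS 3.5+ with the Playwright helper installed.
exports.config = {
  tests: './tests/*_test.js',
  helpers: {
    Playwright: {
      url: 'https://github.com',
      browser: 'chromium',
      show: false,
    },
  },
  plugins: {
    // Lets CodeceptJS try AI-suggested fixes when a step fails.
    heal: {
      enabled: true,
    },
  },
  ai: {
    // Receives the prompt as an array of chat messages and should resolve
    // to the model's text reply. Placeholder: plug in a real provider here.
    request: async (messages) => {
      throw new Error('Connect an AI provider here');
    },
  },
};
```

With the plugin enabled, healing kicks in when the tests run in AI mode, for example `npx codeceptjs run --ai`.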

ChatGPT

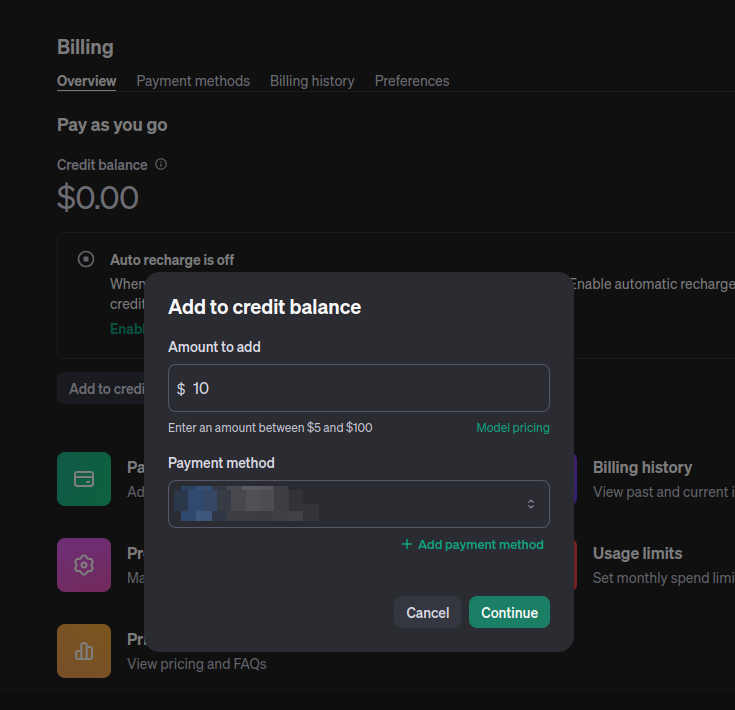

This was my first stop, as OpenAI's ChatGPT has been a game-changer in making AI accessible. My model of choice was gpt-3.5-turbo-0125. To start using it, I had to top up my credit balance in the OpenAI console. ChatGPT itself is still free, but using the models via the API requires prepaid credits. It's relatively cheap, though: I spent only a few cents experimenting.

I updated my codecept.conf.js file to use it as the AI provider:

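```javascript
// At the top of codecept.conf.js (openai v4 SDK; the key is read from the environment)
const OpenAI = require('openai');
const openai = new OpenAI({ apiKey: process.env.OPENAI_API_KEY });

// Inside the config object:
ai: {
  request: async (messages) => {
    const completion = await openai.chat.completions.create({
      model: 'gpt-3.5-turbo-0125',
      messages,
    });
    // Text of the first choice, or undefined if the response has none
    return completion?.choices?.[0]?.message?.content;
  },
},
```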

The complete configuration is here

I ran the test a few times in `--ai` mode, and it passed. Yay!

The suggested fixes were very sensible:

```
1. To fix Github login
Replace the failed code: (suggested by ai)
- I.fillField("Username", "davert")
+ I.fillField("input#login_field", "davert")
at Test.<anonymous> (./tests/ai_test.js:12:5)

2. To fix Github login
Replace the failed code: (suggested by ai)
- I.click("Login")
+ I.click("input[value='Sign in']")
at Test.<anonymous> (./tests/ai_test.js:14:5)
```

Both are correct. However, the test duration rose from 4s to 12s, which is not great, not terrible. With two healed steps, that suggests each call to OpenAI adds roughly 4s (or more).
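The ~4s estimate is simple arithmetic: the extra time divided by the number of healed steps. Here is a tiny sketch of that calculation. It assumes exactly one AI request per healed step, which is a simplification because the plugin may make a different number of calls. The function name is hypothetical.

```javascript
// Hypothetical helper: average extra latency per AI request.
// Assumes one request per healed step (a simplification).
function overheadPerRequest(baselineSec, healedSec, requests) {
  if (requests <= 0) throw new RangeError('requests must be positive');
  return (healedSec - baselineSec) / requests;
}

// ChatGPT run from this post: 4s without AI, 12s with AI, two healed steps.
console.log(overheadPerRequest(4, 12, 2)); // → 4
```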

Claude (Anthropic)

I then tried the impressive Claude model from Anthropic. Claude seemed to have stronger reasoning capabilities and was able to provide more nuanced suggestions. I liked Claude more than ChatGPT 3.5 for my daily programming tasks. But how good is Claude at fixing tests on the fly?

Anthropic provides $5 in free credits to start. Nice!

I updated my config to use the claude-2.1 model via the Anthropic SDK:

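```javascript
// At the top of codecept.conf.js (Anthropic SDK; the key is read from the environment)
const Anthropic = require('@anthropic-ai/sdk');
const anthropic = new Anthropic({ apiKey: process.env.ANTHROPIC_API_KEY });

// Inside the config object:
ai: {
  request: async (messages) => {
    const resp = await anthropic.messages.create({
      model: 'claude-2.1',
      max_tokens: 1024,
      messages,
    });
    // The reply is an array of content blocks; join their text
    return resp.content.map((c) => c.text).join('\n\n');
  },
},
```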
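The two SDKs return the reply in different shapes. OpenAI-style chat completions (also used by Groq) nest it under `choices`, while Anthropic returns an array of `content` blocks. If you switch providers often, as in this experiment, a small normalizing helper can keep `ai.request` uniform. `extractText` is a hypothetical helper, not part of CodeceptJS or either SDK, and the stand-in objects only mimic the fields it reads.

```javascript
// Hypothetical helper: get the reply text from either response shape.
// OpenAI/Groq chat completions: { choices: [{ message: { content } }] }
// Anthropic messages:           { content: [{ type: 'text', text }] }
function extractText(resp) {
  if (Array.isArray(resp?.choices)) {
    return resp.choices[0]?.message?.content ?? '';
  }
  if (Array.isArray(resp?.content)) {
    return resp.content
      .filter((block) => block.type === 'text') // skip non-text blocks
      .map((block) => block.text)
      .join('\n\n');
  }
  return '';
}

// Usage with hand-written stand-ins for real API responses:
const openaiLike = { choices: [{ message: { content: "I.fillField('#login_field', 'davert');" } }] };
const anthropicLike = { content: [{ type: 'text', text: "I.click('.js-sign-in-button');" }] };
console.log(extractText(openaiLike));
console.log(extractText(anthropicLike));
```

Unlike the plain `map` in the config above, filtering on `type === 'text'` keeps a stray non-text block from turning into the string `undefined`.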

This model also provided valid results:

```
1. To fix Github login
Replace the failed code: (suggested by ai)
- I.fillField("Username", "davert")
+ I.fillField('#login_field', 'davert');
at Test.<anonymous> (./tests/ai_test.js:12:5)

2. To fix Github login
Replace the failed code: (suggested by ai)
- I.click("Login")
+ I.click('.js-sign-in-button');
at Test.<anonymous> (./tests/ai_test.js:14:5)
```

But it took almost 25 seconds! That's too long!

However, I had used the legacy Claude 2.1 model, and Anthropic recommends the Haiku model as a lightweight replacement for it.

So I updated my config:

```javascript
request: async (messages) => {
  const resp = await anthropic.messages.create({
    // Lightweight replacement for claude-2.1
    model: 'claude-3-haiku-20240307',
    max_tokens: 1024,
    messages,
  });
  return resp.content.map((c) => c.text).join('\n\n');
}
```

However, it was no faster than Claude 2.1: it also took 25s.

```
AI assistant took 25s and used ~3K input tokens. Tokens limit: 1000K
===================
Self-Healing Report:
2 steps were healed

Suggested changes:

1. To fix Github login
Replace the failed code: (suggested by ai)
- I.fillField("Username", "davert")
+ I.fillField('#login_field', 'davert');
at Test.<anonymous> (./tests/ai_test.js:12:5)

2. To fix Github login
Replace the failed code: (suggested by ai)
- I.click("Login")
+ I.click('Sign in');
at Test.<anonymous> (./tests/ai_test.js:14:5)
```

I think Claude's general-purpose models are not the best choice for healing tests.

Mixtral

Mixtral was something new to me. I had never tried it, mostly because it doesn't have a familiar chat interface like Claude or ChatGPT. But recently I discovered Groq, a service that provides the same experience as ChatGPT and Claude, but for open-source models like Mixtral.

By the way, this model is open source, so you can run it on Cloudflare, Google, Amazon, or locally. However, I used Groq for its simplicity.

And at the time of writing, it's free to use!

I updated my config to use Mixtral via Groq:

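```javascript
// At the top of codecept.conf.js (Groq SDK; the key is read from the environment)
const Groq = require('groq-sdk');
const groq = new Groq({ apiKey: process.env.GROQ_API_KEY });

// Inside the ai section of the config:
request: async (messages) => {
  const chatCompletion = await groq.chat.completions.create({
    messages,
    // Alternatives: llama2-70b-4096 || gemma-7b-it || llama3-70b-8192
    model: 'mixtral-8x7b-32768',
  });
  return chatCompletion.choices[0]?.message?.content || '';
}
```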

Well, this was faaast!

The suggestions were good, and quite surprisingly, it took only 7s to fix 2 steps!
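To compare providers more precisely than total test duration allows, you can time each AI request. The sketch below wraps any `request` function and logs how long it took. `withTiming` is a hypothetical helper, and the stub provider stands in for a real SDK call.

```javascript
// Hypothetical wrapper: time every AI request so providers can be compared
// per call rather than only by total test duration.
function withTiming(request, log = console.log) {
  return async (messages) => {
    const start = Date.now();
    try {
      return await request(messages);
    } finally {
      log(`AI request took ${((Date.now() - start) / 1000).toFixed(1)}s`);
    }
  };
}

// Usage with a stub provider that answers after ~50 ms:
const stub = (messages) =>
  new Promise((resolve) => setTimeout(() => resolve(`echo: ${messages.length} message(s)`), 50));

const timedRequest = withTiming(stub);
timedRequest([{ role: 'user', content: 'Fix this step' }]).then(console.log);
```

In a config, this would wrap the existing function, for example `request: withTiming(async (messages) => { /* provider call */ })`.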

Llama

Another open-source model available on Groq is Llama from Meta. Let's try Llama 2:

```javascript
request: async (messages) => {
  const chatCompletion = await groq.chat.completions.create({
    messages,
    model: 'llama2-70b-4096',
  });
  return chatCompletion.choices[0]?.message?.content || '';
}
```

Llama 2 failed to provide a fix.

According to the logs, it suggested fixes for Codeception, a PHP testing framework, which don't work in JS:

```
Here's an example code snippet that demonstrates how to adjust the `click` method to fix the test:

$I->click('Login');
```
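You can catch this kind of failure before it reaches the test runner with a cheap syntax check. The sketch below compiles the suggestion with the `Function` constructor, which parses the source without executing it. `isValidJs` is a hypothetical helper and not something CodeceptJS does for you.

```javascript
// Hypothetical guard: reject AI suggestions that are not even valid JavaScript.
// The Function constructor only compiles the source here; it is never called.
function isValidJs(code) {
  try {
    new Function(code);
    return true;
  } catch (err) {
    if (err instanceof SyntaxError) return false;
    throw err;
  }
}

console.log(isValidJs("I.fillField('#login_field', 'davert');")); // true
console.log(isValidJs("$I->click('Login');"));                     // false (PHP syntax)
```

This check only proves the snippet parses. It says nothing about whether the locator is correct.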

Very bad. Maybe Llama 3 is better? I also tried the llama3-70b-8192 model, but after a few tries it managed to fix only one step.

Also, I don't like the locator it suggested:

```
1. To fix Github login
Replace the failed code: (suggested by ai)
- I.click("Login")
+ I.click('a[href*="login"]')
```

Llama seems very fast (7s) but very unstable for tasks like this. Maybe another day?

Gemma

Groq also provides access to Google's Gemma model. Why not add gemma-7b-it to the competition?

It worked and worked fast!

But after a few more tries, I couldn't reproduce the result. Sometimes its response was not valid JS code, so it couldn't be applied:

```
**Solution:**

The adjusted locator `'.auth-form-body form button[type="submit"]:contains("Sign in")'` addresses the issue by:
```

Unfortunately, CodeceptJS can't execute a prose explanation.
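One possible mitigation is to accept only replies that contain an actual code block. Prose-only answers like Gemma's would then be skipped rather than attempted. The sketch below pulls the first fenced block out of a Markdown-style reply. `extractCodeBlock` is a hypothetical helper, and the fence string is built programmatically so the snippet itself contains no literal fence.

```javascript
// Hypothetical helper: return the first fenced code block in an LLM reply,
// or null when the reply is prose only.
const FENCE = '`'.repeat(3); // three backticks, built to avoid a literal fence here

function extractCodeBlock(reply) {
  const pattern = new RegExp(FENCE + '(?:js|javascript)?[ \\t]*\\n([\\s\\S]*?)' + FENCE);
  const match = reply.match(pattern);
  return match ? match[1].trim() : null;
}

const reply = 'Try this:\n' + FENCE + "js\nI.fillField('#login_field', 'davert');\n" + FENCE;
console.log(extractCodeBlock(reply)); // I.fillField('#login_field', 'davert');
console.log(extractCodeBlock('The adjusted locator addresses the issue by:')); // null
```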

Conclusion

AI can improve the way we keep our end-to-end tests up to date. If you run a lot of browser tests on CI, you should already be trying an AI assistant to reduce the flakiness of your tests. Why not?

If it fixes a test, it takes much less time than you would spend reproducing and re-running that test.

If it fails, you lose nothing. AI is quite cheap, especially compared to QA engineers' rates.

As of April 2024, the best model I tested for healing flaky tests is Mixtral (mixtral-8x7b-32768). A true winner! It took about 1s per request. You can enable it today with Groq in your CodeceptJS setup and have your tests fixed on the fly!

GPT-3.5 Turbo (gpt-3.5-turbo-0125) also showed a good result. Last year, the same task took ~20-30s per request, so getting it down to about 4s is a serious improvement.

P.S. Sure, general-purpose LLMs are not stable and can't guarantee the same results every time. But even if they fix only 10% of your failures, that can make a huge impact!
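The same prompt can yield a usable fix on one run and prose on the next. So one hedge is to ask again a few times and keep the first reply that passes a check. This is a hypothetical wrapper, not a CodeceptJS feature. The `looksLikeCode` check is deliberately crude, and a stricter validity check could replace it.

```javascript
// Hypothetical wrapper: retry the model (up to `attempts` times) until its
// reply passes `isUsable`; otherwise pass the last reply through unchanged.
function withRetries(request, isUsable, attempts = 3) {
  return async (messages) => {
    let reply = '';
    for (let i = 0; i < attempts; i++) {
      reply = await request(messages);
      if (isUsable(reply)) return reply;
    }
    return reply;
  };
}

// Usage with a stub model that answers with prose first, then with code:
const answers = ['The adjusted locator addresses the issue by:', "I.click('Sign in');"];
let calls = 0;
const flakyModel = async () => answers[Math.min(calls++, answers.length - 1)];
const looksLikeCode = (reply) => /^I\.\w+\(/.test(reply.trim());

withRetries(flakyModel, looksLikeCode)([]).then((reply) => console.log(reply, `after ${calls} calls`));
```

Each retry costs another request, so with slower providers this trades test time for stability.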

Source code:

https://github.com/DavertMik/codecept-ai-demo/tree/demo
