In this post, I present a solution developed within the .NET ecosystem that integrates RAG with the ETL Medallion architecture. It uses Azure AI Document Intelligence, the Pinecone vector database, Azure Cosmos DB, and the OpenAI chat and embeddings API endpoints to create a system that provides accurate, context-grounded answers about Mexican tax legislation.

Theoretical Framework

Medallion Architecture

The Medallion architecture organizes data transformation into three distinct layers—bronze, silver, and gold—each optimized for specific roles in the data lifecycle from raw ingestion to business-ready "golden records."
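
The layering can be sketched as three record types with a promotion step between each pair. This is a minimal Python illustration (the project itself is .NET), and the record types and transformations are hypothetical: bronze holds raw extracted text, silver normalizes it, and gold adds derived fields.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BronzeRecord:
    # Bronze: text exactly as extracted from the source file, untouched.
    source_file: str
    raw_text: str


@dataclass
class SilverRecord:
    # Silver: cleaned and normalized text (whitespace collapsed).
    source_file: str
    text: str


@dataclass
class GoldRecord:
    # Gold: business-ready record with derived fields consumers rely on.
    source_file: str
    text: str
    word_count: int


def to_silver(bronze: BronzeRecord) -> Optional[SilverRecord]:
    cleaned = " ".join(bronze.raw_text.split())
    # Records with no usable text are not promoted to the next layer.
    return SilverRecord(bronze.source_file, cleaned) if cleaned else None


def to_gold(silver: SilverRecord) -> GoldRecord:
    return GoldRecord(silver.source_file, silver.text, len(silver.text.split()))


def run_pipeline(bronze_records: list[BronzeRecord]) -> list[GoldRecord]:
    silver = [s for s in map(to_silver, bronze_records) if s is not None]
    return [to_gold(s) for s in silver]


gold = run_pipeline([
    BronzeRecord("ley.pdf", "  Artículo 1o.\n  Texto   del artículo "),
    BronzeRecord("blank.pdf", "   \n "),
])
# One gold record survives; blank.pdf is filtered out at the silver layer.
```

Keeping each layer as its own type makes it explicit which guarantees a record has at each stage, which is the point of the Medallion split.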

Embeddings and Vector Databases

Embeddings are numeric representations of text that capture its semantic relationships: texts with similar meanings map to vectors that lie close to each other. During the embedding process, a vector is created for each piece of text and stored in Pinecone, a vector database. This setup lets the system efficiently retrieve contextually relevant data through semantic search over the stored information.
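
Semantic search boils down to ranking stored vectors by their similarity to the query vector. Pinecone does this server-side with a configurable metric such as cosine; the brute-force Python sketch below is only for intuition, and its 3-dimensional vectors and ids are made up.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def top_k(query: list[float], index: dict[str, list[float]], k: int) -> list[tuple[str, float]]:
    # Score every stored vector, then keep the k most similar ids.
    scored = [(vector_id, cosine_similarity(query, vec)) for vector_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Made-up "embeddings"; real ones have hundreds or thousands of dimensions.
index = {
    "iva-rate": [0.9, 0.1, 0.0],
    "isr-deductions": [0.1, 0.9, 0.1],
    "customs-duties": [0.0, 0.2, 0.9],
}
print([vector_id for vector_id, _ in top_k([0.8, 0.2, 0.0], index, k=2)])
# prints ['iva-rate', 'isr-deductions']
```

A vector database replaces the linear scan with an approximate nearest-neighbor index, which is what keeps this fast at millions of vectors.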

Retrieval-Augmented Generation (RAG)

RAG combines the strengths of retrieval-based and generative AI systems to improve the accuracy and relevance of the model's responses. By dynamically retrieving relevant document segments and supplying them to the model, RAG lets it generate responses grounded in those segments and better suited to the user's needs.

Prompt Engineering

Prompt Engineering involves designing inputs that influence the behavior of LLMs. It is crucial for adapting LLM outputs to specific tasks or contexts, especially when direct training data is limited.

Few-Shot Learning Techniques

Few-shot learning means giving a pre-trained model a small number of examples, typically inside the prompt, so it can apply its general knowledge to a new task. The project uses this technique when creating the golden documents.
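
A few-shot prompt for the OpenAI chat completions endpoint can be built as a list of role-tagged messages in which each worked example is a user turn followed by the ideal assistant reply. This Python sketch is an illustration only: the system instruction, the example article, and its questions are hypothetical placeholders, not the project's actual prompts.

```python
import json

SYSTEM = (
    "You write questions a taxpayer might ask that the given article answers. "
    "Reply with a JSON array of strings."
)

# Hypothetical (article, questions) pairs shown to the model as worked examples.
EXAMPLES = [
    (
        "Article X. Hypothetical example text about filing deadlines.",
        ["When is the filing deadline?", "Who must file by that date?"],
    ),
]


def build_few_shot_messages(article_text: str) -> list[dict[str, str]]:
    messages = [{"role": "system", "content": SYSTEM}]
    for example_article, example_questions in EXAMPLES:
        # Each example: the input as a user turn, the desired output as an assistant turn.
        messages.append({"role": "user", "content": example_article})
        messages.append({"role": "assistant", "content": json.dumps(example_questions)})
    # The real article goes last, so the model continues the demonstrated pattern.
    messages.append({"role": "user", "content": article_text})
    return messages
```

Asking for a JSON array in the examples also nudges the model toward output that is easy to parse into the golden document.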

Enhancing RAG with Contextual Prompts

In the RAG process, the system's effectiveness is improved by placing relevant information into the model's context window. This involves crafting prompts that include the retrieved contextual information, which guides the model toward precise and relevant outputs.
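
A contextualized prompt can be assembled by numbering the retrieved chunks and placing them in the system message ahead of the user's question. The Python sketch below is illustrative: the instruction wording and the chunk fields `article` and `text` are hypothetical, not the project's actual template.

```python
def build_rag_messages(question: str, chunks: list[dict[str, str]]) -> list[dict[str, str]]:
    # Number each chunk so the model can point to the one it relied on.
    context = "\n\n".join(
        f"[{i}] {chunk['article']}: {chunk['text']}"
        for i, chunk in enumerate(chunks, start=1)
    )
    system = (
        "Answer questions about Mexican tax law using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]
```

Telling the model to admit when the context is insufficient is a common way to reduce answers that are not backed by the retrieved text.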

Hypothesis

Enriching a vector database with synthetic or human-generated questions about the document content, along with structured summaries of the document chunks, significantly enhances the retrieval process in a Retrieval-Augmented Generation (RAG) system.

Synthetic or Human-Generated Questions:

These anticipate user queries, improving the retrieval model's ability to match actual user questions with semantically similar stored questions, which results in more accurate and faster information retrieval.

Structured Summarizations:

Condensing content into coherent segments reduces computational load and focuses the retrieval on relevant text areas, speeding up the process and increasing precision.

Implementation

This section describes the three primary processes that underpin the solution: the ETL process, vector index creation, and the Retrieval-Augmented Generation (RAG) process.

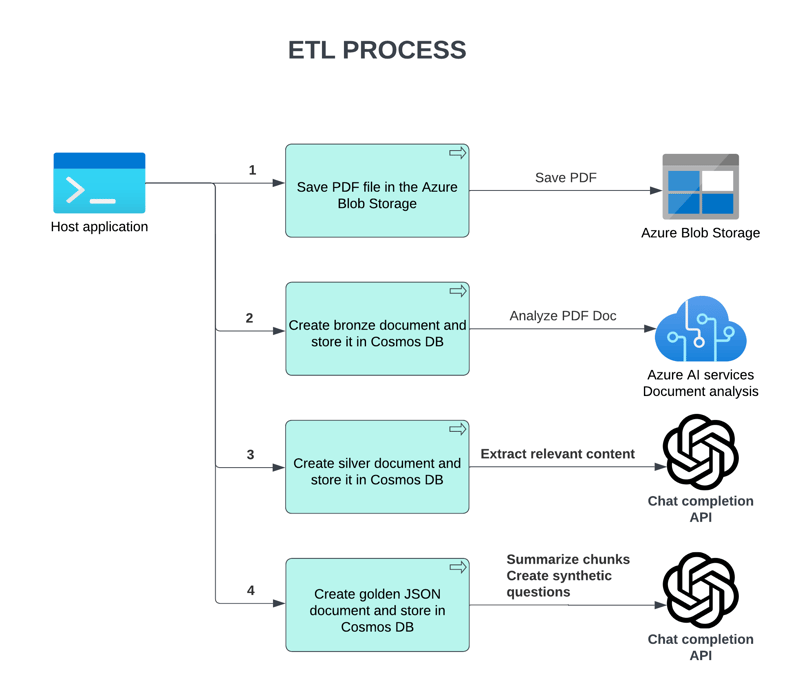

1. ETL Process

The ETL (Extract, Transform, Load) process is the first step: text is extracted from PDF documents containing Mexican tax laws and then transformed into a standardized format, the golden document. Each golden document represents one chunk of the original PDF content, enriched with the bounding boxes that locate it in the file, a short synthetic summary, and a set of synthetic questions about the chunk.
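
Before enrichment, the extracted text has to be split into chunks. The project uses the chat completion endpoint to extract article text; as a simpler, swapped-in alternative, this Python sketch splits on lines that start with "Artículo" followed by a number. That heading pattern is an assumption about how the source PDFs are laid out.

```python
import re

# Assumed heading: a line starting with "Artículo" (or "Articulo") and a number.
ARTICLE_HEADING = re.compile(r"^Art[ií]culo\s+\d+", re.MULTILINE)


def split_articles(text: str) -> list[str]:
    starts = [m.start() for m in ARTICLE_HEADING.finditer(text)]
    # Each chunk runs from one heading to the next (or to the end of the text).
    # Anything before the first heading, such as the law's title, is dropped.
    bounds = zip(starts, starts[1:] + [len(text)])
    return [text[begin:end].strip() for begin, end in bounds]
```

A regex is cheap and deterministic but brittle: a wrapped line that happens to begin with "Artículo 5" as a cross-reference would be mistaken for a new article, which is one reason to prefer a model-based extractor.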

ETL Process Architecture

ETL Data Flow

Documents JSON schema
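
The golden document described above can be modeled as a small record type serialized to JSON. In this Python sketch the field names are illustrative assumptions, not the project's actual schema; `id` is used as the key name because Azure Cosmos DB items require an `id` property.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field


@dataclass
class BoundingBox:
    page: int
    # Polygon corners as flat [x1, y1, x2, y2, ...] coordinates on the page.
    polygon: list[float]


@dataclass
class GoldenDocument:
    article: str
    content: str
    summary: str
    questions: list[str]
    bounding_boxes: list[BoundingBox]
    id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def to_json(self) -> str:
        # asdict recurses into nested dataclasses such as BoundingBox;
        # ensure_ascii=False keeps Spanish accents readable in the output.
        return json.dumps(asdict(self), ensure_ascii=False)
```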

2. Vector Index Creation

In the vector index creation step, the process creates the vectors and metadata to store in Pinecone. For each golden document, one vector is created for the synthetic summary and one for each synthetic question. Each vector's metadata holds the golden document's unique identifier, which is used later to retrieve and enrich the full document.
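
The step above maps naturally onto Pinecone's upsert format, where each vector is an object with `id`, `values`, and `metadata`. In this Python sketch, `embed` stands in for a call to the OpenAI embeddings endpoint, and the id scheme and metadata keys (`golden_document_id`, `kind`) are hypothetical.

```python
from typing import Callable

# Hypothetical stand-in for a call to the embeddings endpoint.
Embed = Callable[[str], list[float]]


def build_vector_records(doc_id: str, summary: str, questions: list[str], embed: Embed) -> list[dict]:
    # One vector for the summary...
    records = [{
        "id": f"{doc_id}:summary",
        "values": embed(summary),
        "metadata": {"golden_document_id": doc_id, "kind": "summary"},
    }]
    # ...plus one per synthetic question, all pointing back to the same document.
    for i, question in enumerate(questions):
        records.append({
            "id": f"{doc_id}:question:{i}",
            "values": embed(question),
            "metadata": {"golden_document_id": doc_id, "kind": "question"},
        })
    return records
```

The resulting list can be sent as the `vectors` array of an upsert request. Deriving vector ids from the document id makes re-running the indexer overwrite old vectors instead of duplicating them.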

3. RAG Process

The Retrieval-Augmented Generation (RAG) process uses the indexed vectors at query time. When a query arrives, it is encoded into an embedding vector, which is used to run a semantic search over the Pinecone index. With the retrieved data, the chat process builds a contextualized prompt that grounds the chat completion response from the LLM.
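
Because each golden document owns several vectors (its summary plus its questions), one query can match the same document more than once. A reasonable retrieval step, sketched here in Python, collapses matches to distinct documents at their best score. The match shape mirrors a Pinecone query response with metadata included; the `golden_document_id` key is an assumption.

```python
def distinct_golden_ids(matches: list[dict], limit: int) -> list[str]:
    best: dict[str, float] = {}
    for match in matches:
        doc_id = match["metadata"]["golden_document_id"]
        # Keep only the highest score seen for each golden document.
        best[doc_id] = max(best.get(doc_id, float("-inf")), match["score"])
    # Highest-scoring documents first. These ids are then used to load the full
    # golden documents from wherever they are stored before building the prompt.
    ranked = sorted(best, key=best.get, reverse=True)
    return ranked[:limit]
```

Deduplicating before building the prompt avoids spending context-window space on the same article twice.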

Ok, ok, now show me the code!

erickbr15/palaven-llm-sat

LLM RAG with OpenAI / Pinecone / Azure

PALAVEN - Project Documentation

1. Introduction

This project leverages .NET 6 (Long Term Support) to create a backend-focused solution incorporating OpenAI's Chat and Embeddings services, Pinecone's HTTP API, and Azure AI for PDF text extraction. This phase does not include web APIs or Azure Functions but features three console applications for data ingestion, vector index creation, and testing interactions with OpenAI's Chat API.

2. Requirements and Setup

System and Software Requirements

- .NET 6 (LTS) environment.

- Access to OpenAI APIs.

- Pinecone HTTP API setup.

- Azure AI services for text extraction.

3. System Architecture

4. OpenAI API Integration

Chat and Embeddings Services

The project integrates OpenAI's API through HTTP requests, utilizing the .NET IHttpClient for connectivity. Key components of this integration include:

- Endpoints

  - Chat completion endpoint: used for (1) extracting the article text, (2) generating the synthetic questions about each ingested article, and (3) generating responses based on user inputs.

  - Embeddings creation…
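
Both endpoints are plain JSON-over-HTTPS calls. This Python sketch builds (but does not send) the two requests, with `urllib` standing in for the .NET HTTP client used by the project; the default model names are assumptions, so pass whichever models your account uses.

```python
import json
import urllib.request

API_BASE = "https://api.openai.com/v1"


def _post(path: str, api_key: str, payload: dict) -> urllib.request.Request:
    # Every call is a POST with a bearer token and a JSON body.
    return urllib.request.Request(
        url=f"{API_BASE}{path}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def chat_request(api_key: str, messages: list[dict], model: str = "gpt-4o-mini") -> urllib.request.Request:
    return _post("/chat/completions", api_key, {"model": model, "messages": messages})


def embeddings_request(api_key: str, text: str, model: str = "text-embedding-3-small") -> urllib.request.Request:
    return _post("/embeddings", api_key, {"model": model, "input": text})


# Sending is one call, for example:
# with urllib.request.urlopen(embeddings_request(key, "¿Qué es el IVA?")) as resp:
#     vector = json.load(resp)["data"][0]["embedding"]
```

For chat completions, the generated text is read from `choices[0].message.content` in the response body.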

Helpful links

- https://platform.openai.com/docs/api-reference

- https://learn.microsoft.com/en-us/azure/ai-services/document-intelligence

- https://docs.pinecone.io/guides/getting-started/quickstart

- https://help.openai.com/en/articles/8868588-retrieval-augmented-generation-rag-and-semantic-search-for-gpts

Finally...

I encourage you to consider this work as a foundational reference for your projects. The methodologies and technologies detailed here offer a robust framework for enhancing the capabilities of AI-driven systems, particularly in specialized domains.

Thanks for reading and happy coding!
