Cloud Computing

What is Cloud?

Cloud computing is the on-demand delivery of technology resources and applications over the internet, where you pay for the resources you use.

So, the cloud is a set of third-party servers that deliver these resources to you.

On-premises

- Data center or data processing center.

- It is a building or area built to house servers, processing equipment, and data storage.

- Objective

- Built to meet the computing demands of one or more companies that work with databases, concentrating a complex network of switches, cables, routers, etc.

In short, on-premises means physical servers that belong to the person or company that uses them.

Service Models

Service models describe the ways a cloud provider offers its services to you.

- IaaS (Infrastructure as a Service)

- The well-known EC2 is an example: here you start and stop your machines and configure them yourself, including the middleware and the operating system that runs on them.

- PaaS (Platform as a Service)

- More focused on developers and businesses. Here you manage only the data and the applications that will run; the runtime, operating system, and environment configuration are handled for you by the provider (AWS, for example).

- SaaS (Software as a Service)

- Here the provider delivers the complete, running application and you simply use it.

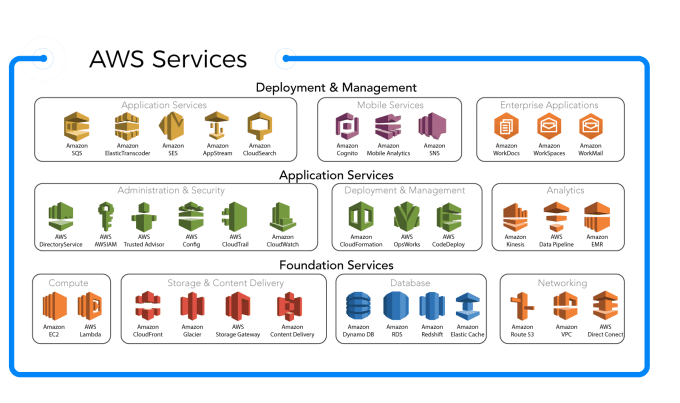

Cloud Services

Main services:

- Compute.

- Amazon EC2, which is an IaaS offering.

- AWS Lambda.

- Storage.

- Amazon CloudFront, for content delivery.

- Amazon Glacier, for cold data storage.

- Management and Security.

- AWS Storage Gateway.

- Amazon Content Delivery.

- Database.

- Amazon DynamoDB, a NoSQL database.

- Amazon RDS, for relational engines such as Oracle, SQL Server, PostgreSQL, etc.

- Amazon Redshift, for data warehousing.

- Amazon ElastiCache, for in-memory data.

- Network and Connectivity.

- Amazon Route 53, for DNS, domains, etc.

- Amazon VPC, to create isolated private networks and subnets within AWS.

- AWS Direct Connect, to create a dedicated, private connection from your network to AWS in a secure and very fast way.

Other services:

- AWS IAM

- AWS IAM is the first thing you should set up. You access it with your root account and then create the groups and users that will access the console or make programmatic calls through the API. Of all AWS services, this is the most important.

- AWS SWOT

- It analyzes and monitors metrics about what is being consumed, so you know everything that is being counted, charged, and accounted for, and at what times. It is very good at tracking everything that is being done.

- AWS CodeDeploy

- To deploy code and applications, create pipelines, and connect with Git.

- Amazon Kinesis

- Made for streaming and analyzing data in real time, for those who work with analytics.

- Amazon SQS

- A message queuing service.

AWS Regions

AWS has data centers on every continent in the world, except Antarctica.

Region

A Region is a geographic area where AWS concentrates its data centers. For security reasons, the exact locations of the data centers are not disclosed, only their Region.

Edge Points of Presence (Edge POP)

Edge locations are points of presence, often in partner facilities, that connect to the AWS data centers. Users do not reach AWS directly but through these edges: an edge node is a server at the edge of the network that acts as an intermediary to AWS.

Cloud Professions

- Cloud Engineers

- Responsible for developing and working with infrastructure and software, and for troubleshooting problems. (Software).

- Cloud Architects

- Responsible for designing solutions and systems, with knowledge of security, networks, virtualization, and the cloud environment being used. (Architecture).

- Cloud OPS

- Responsible for infrastructure support and security. (Infrastructure).

Identity and Access Management - IAM

Responsible for managing access to AWS, including access keys. With it you can grant and restrict access and create and delete users and groups. It is the most important piece within AWS, as it is what guarantees your account's security.

AWS IAM is a feature of your AWS account and is offered at no additional charge.

IAM characteristics

- Shared access to your AWS account

- You can create several users and give them credentials, temporary or permanent. This lets you restrict your team's access.

- Granular permissions.

- Controls exactly which actions each user can perform, such as delete, create, etc.

- Identity federation.

- Lets you reuse the identities you already have, for example in an on-premises directory, to access the cloud without recreating them as IAM users. It supports SAML 2.0 identity providers (and also OpenID Connect).

- PCI DSS Compliance

- Payment Card Industry Data Security Standard, a standard that defines a set of requirements aimed at protecting cardholders' personal information in order to reduce losses and fraud.

Users, Groups, Policies and Permissions

- Users

- An identity for an AWS entity; each user should be assigned to only one person or application.

- Permissions

- Define what a user, group, or role can or cannot do within AWS.

- Groups

- A collection of users who share the same privileges.

- Policies

- JSON documents that define what is and is not allowed, attached to users, groups, or roles.
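To make the policy idea concrete, here is a simplified Python sketch of the evaluation order AWS documents for identity-based policies: an explicit Deny always wins, an explicit Allow grants access, and anything not mentioned is implicitly denied (which is also why a user with no policies can do nothing). The dictionaries mimic the IAM JSON policy shape (Effect, Action, Resource); the bucket name and the `evaluate` helper are hypothetical, and conditions, resource-based policies, and permission boundaries are ignored.

```python
from fnmatch import fnmatchcase

# A policy shaped like an IAM JSON policy document.
# "example-bucket" is a placeholder bucket name.
read_only_s3 = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:ListBucket"],
            "Resource": ["arn:aws:s3:::example-bucket", "arn:aws:s3:::example-bucket/*"],
        },
        {
            "Effect": "Deny",
            "Action": "s3:*",
            "Resource": "arn:aws:s3:::example-bucket/secret/*",
        },
    ],
}


def _as_list(value):
    # IAM accepts either a single string or a list of strings.
    return value if isinstance(value, list) else [value]


def _statement_matches(statement, action, resource):
    # "*" wildcards are approximated with fnmatchcase (case-sensitive here,
    # a simplification compared with real IAM matching).
    return any(fnmatchcase(action, a) for a in _as_list(statement["Action"])) and any(
        fnmatchcase(resource, r) for r in _as_list(statement["Resource"])
    )


def evaluate(policies, action, resource):
    """Explicit Deny > explicit Allow > implicit deny."""
    allowed = False
    for policy in policies:
        for statement in policy["Statement"]:
            if _statement_matches(statement, action, resource):
                if statement["Effect"] == "Deny":
                    return "Deny"  # an explicit deny always wins
                allowed = True
    return "Allow" if allowed else "Deny"  # nothing matched: implicit deny


print(evaluate([read_only_s3], "s3:GetObject", "arn:aws:s3:::example-bucket/report.csv"))      # Allow
print(evaluate([read_only_s3], "s3:GetObject", "arn:aws:s3:::example-bucket/secret/key.txt"))  # Deny
print(evaluate([], "s3:GetObject", "arn:aws:s3:::example-bucket/report.csv"))                  # Deny
```

Granting only `s3:GetObject` and `s3:ListBucket` here is also a small example of least privilege: the user can read, but not write or delete.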

Security Group Intro

- Security groups act as a virtual firewall that controls the traffic of one or more instances.

- You define rules that allow specific access, such as a port from a given source range.

IAM best practices

- Each user must have permissions to perform only their activities in accordance with their responsibilities.

- A newly created user has no permissions by default.

- Assign user permissions through groups and policies.

- Work without dependence on the infrastructure provided on-premises.

- Follow the principle of least privilege.

- Do not use IAM user credentials inside applications; use roles instead.

- Root account must have MFA.

- Do not use the root account except for the initial configuration.

- Do not share IAM credentials.

- One IAM user per person.

Computing and network security

VPC Security:

Security Groups

- Acts as a firewall for EC2 instances.

- Controls inbound and outbound traffic.

- It can be used as the only barrier to access your VPC.

- Evaluates all rules before allowing traffic.

- It is associated with the instances' network interfaces.
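A minimal Python sketch of that "evaluate all rules" behavior, assuming inbound rules only: a security group has no deny rules, so traffic is accepted if any rule matches and dropped otherwise. Real security groups are also stateful (responses to allowed traffic are let back in automatically), which this sketch does not model. `SgRule` and `sg_allows` are hypothetical names, and the address ranges are documentation example blocks.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class SgRule:
    protocol: str   # "tcp", "udp", or "-1" for all protocols
    from_port: int
    to_port: int
    source_cidr: str


def sg_allows(rules, protocol, port, source_ip):
    """Security groups only have allow rules: any matching rule lets traffic in."""
    src = ipaddress.ip_address(source_ip)
    return any(
        rule.protocol in ("-1", protocol)
        and rule.from_port <= port <= rule.to_port
        and src in ipaddress.ip_network(rule.source_cidr)
        for rule in rules
    )


web_sg = [
    SgRule("tcp", 443, 443, "0.0.0.0/0"),     # HTTPS from anywhere
    SgRule("tcp", 22, 22, "203.0.113.0/24"),  # SSH only from an example office range
]

print(sg_allows(web_sg, "tcp", 443, "198.51.100.7"))  # True
print(sg_allows(web_sg, "tcp", 22, "198.51.100.7"))   # False: no rule matches
print(sg_allows(web_sg, "tcp", 22, "203.0.113.10"))   # True
```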

NACLs

- Acts as a firewall for subnets.

- Controls inbound and outbound traffic at the subnets layer.

- Applies to all instances in the associated subnets. Each subnet is associated with only one NACL, but a NACL can be associated with more than one subnet.
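NACLs behave differently: each rule has a number, rules are checked from the lowest number up, the first match decides (allow or deny), and traffic that matches nothing hits the final catch-all deny. NACLs are also stateless, so return traffic needs its own rule. The Python sketch below models only inbound rules for a single port and ignores protocols; `NaclRule` and `nacl_decision` are hypothetical names.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class NaclRule:
    number: int   # evaluated in ascending order
    action: str   # "allow" or "deny"
    port: int
    source_cidr: str


def nacl_decision(rules, port, source_ip):
    """Return the action of the first matching rule; no match means deny."""
    src = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.number):
        if rule.port == port and src in ipaddress.ip_network(rule.source_cidr):
            return rule.action
    return "deny"  # the default "*" rule at the end of every NACL


subnet_nacl = [
    NaclRule(100, "deny", 22, "0.0.0.0/0"),       # block SSH from everywhere...
    NaclRule(90, "allow", 22, "203.0.113.0/24"),  # ...except this range, checked first
    NaclRule(200, "allow", 443, "0.0.0.0/0"),
]

print(nacl_decision(subnet_nacl, 22, "203.0.113.5"))     # allow (rule 90)
print(nacl_decision(subnet_nacl, 22, "198.51.100.7"))    # deny (rule 100)
print(nacl_decision(subnet_nacl, 8080, "198.51.100.7"))  # deny (no rule matches)
```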

Good security practices

- Network

- Definition of IP ranges (CIDR blocks).

- What number it can be associated with.

- Internet gateway (internet access).

- Virtual private gateway.

- For cross-VPC or on-premises access.
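Defining IP ranges usually means picking a CIDR block for the VPC and carving subnets out of it. The sketch below uses Python's standard `ipaddress` module; the 10.0.0.0/16 range and the /24 subnet size are example values, and the five addresses subtracted per subnet reflect the addresses AWS reserves in every subnet (the first four and the last one).

```python
import ipaddress

vpc = ipaddress.ip_network("10.0.0.0/16")   # example VPC CIDR block
subnets = list(vpc.subnets(new_prefix=24))  # every possible /24 inside it
public_subnet, private_subnet = subnets[0], subnets[1]

AWS_RESERVED_PER_SUBNET = 5  # first four addresses plus the last one


def usable_addresses(subnet):
    """Addresses left for instances after AWS's reserved ones."""
    return subnet.num_addresses - AWS_RESERVED_PER_SUBNET


print(len(subnets))                                      # 256
print(public_subnet, usable_addresses(public_subnet))    # 10.0.0.0/24 251
print(private_subnet, usable_addresses(private_subnet))  # 10.0.1.0/24 251
print(public_subnet.overlaps(private_subnet))            # False
```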

EC2

Amazon Elastic Compute Cloud (EC2).

Amazon EC2 is a web service that provides secure, resizable computing capacity in the cloud. It is a quick way to start computing resources, making it easier to launch and scale your instances.

AMI - Amazon Machine Image

- An AMI is a template that contains a software configuration (operating system, application server, and web server).

- Makes it easy to launch one or several instances from a single AMI, each one a copy.

- Can be configured and customized with specific or proprietary software.

- Instances can use local disks (boot), Amazon EBS volumes (data), or volumes mapped to Amazon S3.

Basically, the concept here is replicating an image. For example:

You configure an entire system and then create an image of it, so you never have to configure it again. This image can also be replicated elsewhere, avoiding any rework.

Elastic IP

An Elastic IP is a static public IPv4 address allocated to your account. When associated with an instance, it gives the instance a fixed public address for internet access, without the need for a NAT.

- By default, you can have up to 5 Elastic IPs per Region per account; for more, you need to request a quota increase from AWS.

- Elastic IPs are often considered a weak point in an architecture and are generally not recommended for production environments.

User Data

Used to run commands when an instance is launched; it can be a Bash or other shell script. By default, the scripts placed in user data run only once, at the instance's first boot.

It always runs as the root user.

Billing EC2

- On demand

- Pay for what you use.

- More expensive than the Reserved and Spot models, but you don't need to pay in advance.

- No prior contract.

- Suited to short-term workloads and development.

- Dedicated

- Dedicated physical hardware for your instances.

- Full control of where your instances are.

- Access to physical details.

- 3 years reservation.

- Most expensive of the models.

- Suitable for companies with compliance restrictions.

- Suitable for companies that have software with unusual or complex licenses.

- Spot

- Up to 90% discount compared to On Demand.

- Originally an auction (bidding) model.

- The price varies with supply and demand.

- Not suitable for critical applications or databases.

- Recommended for jobs and workloads that tolerate interruptions, such as big data (Spark) jobs.

- Reserved

- Up to 75% cheaper than On-Demand.

- Pay in advance.

- Reservation term of 1 or 3 years.

- For stable applications.
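A quick way to compare the models is to apply the best-case discounts quoted above to a monthly bill. The hourly rate below is a made-up number, not a real EC2 price, 730 is the usual hours-per-month approximation, and Dedicated is left out because it is priced differently. Real discounts depend on instance type, Region, term, and payment option, so treat the result as an upper bound on savings.

```python
from decimal import Decimal

HOURS_PER_MONTH = Decimal(730)    # common approximation: 24 * 365 / 12
ON_DEMAND_RATE = Decimal("0.10")  # hypothetical USD per hour, not a real price

# Best-case ("up to") discounts relative to On-Demand.
MAX_DISCOUNT = {
    "on_demand": Decimal("0"),
    "reserved": Decimal("0.75"),
    "spot": Decimal("0.90"),
}


def monthly_cost(model, rate=ON_DEMAND_RATE):
    """Monthly cost for one instance running all month, rounded to cents."""
    discount = MAX_DISCOUNT[model]
    return (rate * HOURS_PER_MONTH * (1 - discount)).quantize(Decimal("0.01"))


for model in MAX_DISCOUNT:
    print(model, monthly_cost(model))
# on_demand 73.00
# reserved 18.25
# spot 7.30
```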

Amazon Elastic Block Store

It was created to provide persistent block storage for use with EC2 instances. It is built for high availability, durability, and fault tolerance, since each volume is automatically replicated within its Availability Zone (AZ). A volume is attached to an EC2 instance, but its lifecycle is independent of the instance it is attached to. This differs from instance store, an ephemeral disk whose data is lost when the instance is stopped or terminated, which makes instance store best suited for buffers or caches.

Basically, you attach an EBS volume to an instance. If the instance is terminated, the data on the EBS volume is not lost, unless the volume is set to be deleted on termination (the default for root volumes).

EBS characteristics

- Persistent disk, data is not lost when an instance is shut down.

- SSD, Provisioned IOPS (PIOPS), or magnetic volumes.

- Attached over the network.

- 16 TB maximum.

Storage is reliable and secure, as each volume is replicated within its AZ to protect against failures, providing redundancy.

EC2 Auto Scaling

A service provided by AWS to keep an application available by adjusting the number of EC2 instances to the load, for example adding instances when demand grows.

When creating Auto Scaling groups, we choose an image and the minimum and maximum number of instances for each group; the group never runs fewer or more instances than specified.

We can define policies, so Auto Scaling can start or stop instances according to demand.

A launch configuration is a template that Auto Scaling uses to launch EC2 instances. We configure it as if we were launching an EC2 instance, but it is a template reused by Auto Scaling. A launch configuration cannot be modified after it is created, so a new one must be created instead.

Auto Scaling Plans

- scale out

- Start new instances.

- scale in

- Terminate instances.

- manual

- Only the maximum and minimum capacity is defined and auto scaling manages the creation process.

- scheduling

- Specific times are defined to increase and decrease the number of instances.

- On demand

- Instances are created when a rule tied to compute usage is met, for example when the average CPU utilization of the instances stays above 85% for more than 15 minutes.
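The min/max guarantee and the example CPU rule can be sketched in Python. This is a local simulation, not the Auto Scaling API: `AutoScalingGroup` and `apply_cpu_rule` are hypothetical names, and the rule assumes one CPU average per 5-minute period, so three consecutive high periods approximate the 15 minutes from the example.

```python
from dataclasses import dataclass


@dataclass
class AutoScalingGroup:
    min_size: int
    max_size: int
    desired: int

    def set_desired(self, value):
        # The group never runs fewer than min_size or more than max_size instances.
        self.desired = max(self.min_size, min(self.max_size, value))


def apply_cpu_rule(group, cpu_averages, threshold=85.0, periods=3):
    """Add one instance when the last `periods` 5-minute CPU averages
    (15 minutes with the defaults) are all above `threshold` percent."""
    recent = cpu_averages[-periods:]
    if len(recent) == periods and all(value > threshold for value in recent):
        group.set_desired(group.desired + 1)
    return group.desired


asg = AutoScalingGroup(min_size=2, max_size=4, desired=2)
print(apply_cpu_rule(asg, [70, 88, 91, 90]))  # 3: three high periods in a row
print(apply_cpu_rule(asg, [90, 80, 95]))      # 3: not all three above 85
print(apply_cpu_rule(asg, [90, 92, 95]))      # 4
print(apply_cpu_rule(asg, [95, 97, 99]))      # 4: already at max_size
asg.set_desired(0)                            # manual request below the minimum
print(asg.desired)                            # 2
```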

Auto Scaling Price

- no additional charge

- You pay only for the EC2 instances you use.

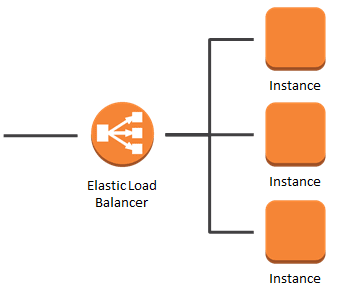

Load Balancers - ELB

- Elastic Load Balancing (ELB) is a service offered by AWS that distributes incoming traffic across several EC2 instances, providing a single point of access to them. When an instance fails, traffic is automatically directed to a healthy instance.

- ELB characteristics

- Network

- Accepts traffic with HTTP, HTTPS, TCP and SSL protocols.

- Encryption

- You can offload encryption (SSL/TLS termination) to the ELB, taking this work away from the EC2 instances, and configure the instances to reject incoming traffic that does not come through the ELB.

- Monitoring

- You can monitor the ELB with CloudWatch metrics and access logs, and record API calls made to it with AWS CloudTrail.

- Security

- Serves as the first line of defense against attacks in your network.

- Health check.

- Instances with problems stop receiving traffic until they recover. This verification, called a "health check", is normally done by calling an endpoint on the instance.
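The health-check behavior can be sketched as a toy load balancer in Python: a target must fail several consecutive checks before it stops receiving traffic and pass several checks before it returns, and requests are spread round-robin (one of the algorithms ALB supports) across the healthy targets only. The class, the threshold values, and the instance names are hypothetical; it simulates the idea rather than calling any AWS API.

```python
from itertools import count


class ToyLoadBalancer:
    """Round-robin over targets that are currently passing health checks."""

    def __init__(self, targets, unhealthy_threshold=2, healthy_threshold=2):
        self.targets = list(targets)
        self.healthy = {t: True for t in self.targets}
        self._fails = {t: 0 for t in self.targets}
        self._passes = {t: 0 for t in self.targets}
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy_threshold = healthy_threshold
        self._turn = count()

    def record_health_check(self, target, passed):
        # Several consecutive results are required to change state, to avoid flapping.
        if passed:
            self._passes[target] += 1
            self._fails[target] = 0
            if self._passes[target] >= self.healthy_threshold:
                self.healthy[target] = True
        else:
            self._fails[target] += 1
            self._passes[target] = 0
            if self._fails[target] >= self.unhealthy_threshold:
                self.healthy[target] = False

    def route(self):
        candidates = [t for t in self.targets if self.healthy[t]]
        if not candidates:
            raise RuntimeError("no healthy targets")
        return candidates[next(self._turn) % len(candidates)]


lb = ToyLoadBalancer(["instance-a", "instance-b", "instance-c"])
lb.record_health_check("instance-b", passed=False)
lb.record_health_check("instance-b", passed=False)  # second failure: marked unhealthy
print([lb.route() for _ in range(4)])  # ['instance-a', 'instance-c', 'instance-a', 'instance-c']
```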

Characteristics of the 3 types of Load Balancer

- Application Load Balancer

- Routes traffic to target groups and supports additional routing options such as path-based and host-based routing. It supports IPv6 and integrates with a web application firewall (AWS WAF). It can also route different requests to the same instance, distinguishing them by port.

- Network Load Balancer

- Classic Load Balancer

- It was the first load balancer AWS offered; instances are registered directly with the load balancer. AWS intends to discontinue the Classic Load Balancer in the future.
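The Application Load Balancer's path- and host-based routing can be pictured as an ordered list of listener rules with a default action at the end. The sketch below uses made-up host names and target group names, checks rules by ascending priority number as ALB listeners do, and approximates ALB's own wildcard pattern syntax with shell-style matching via `fnmatch`.

```python
from fnmatch import fnmatchcase

# Listener rules: (priority, host pattern, path pattern, target group).
# None means "any"; host names and target group names are placeholders.
RULES = [
    (10, "api.example.com", "/v1/*", "api-v1-targets"),
    (20, None, "/images/*", "static-targets"),
    (30, "admin.example.com", None, "admin-targets"),
]
DEFAULT_TARGET_GROUP = "web-targets"


def pick_target_group(host, path):
    """Check rules from the lowest priority number up; fall back to the default."""
    for _priority, host_pattern, path_pattern, target_group in sorted(RULES, key=lambda r: r[0]):
        host_ok = host_pattern is None or fnmatchcase(host, host_pattern)
        path_ok = path_pattern is None or fnmatchcase(path, path_pattern)
        if host_ok and path_ok:
            return target_group
    return DEFAULT_TARGET_GROUP


print(pick_target_group("api.example.com", "/v1/users"))         # api-v1-targets
print(pick_target_group("www.example.com", "/images/logo.png"))  # static-targets
print(pick_target_group("admin.example.com", "/settings"))       # admin-targets
print(pick_target_group("www.example.com", "/"))                 # web-targets
```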

ELB - Connection draining

Enabling this feature on your load balancer ensures that instances being removed from the load balancer finish their in-flight requests before they are deregistered from the ELB.

- High availability across AZs.

- Separate public traffic from private traffic.

If the Health Check fails, the load balancer no longer forwards any requests to the unhealthy instances, allowing you to perform maintenance on the instance with problems so that it returns to the healthy instance group.
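Connection draining can be modeled as a small state machine: once a target starts draining it refuses new requests, and it can be deregistered as soon as its in-flight requests finish or a timeout expires, whichever comes first. The 300-second default below matches the default draining timeout AWS uses, but `DrainingTarget` and its methods are hypothetical names for illustration.

```python
class DrainingTarget:
    """Toy model of one instance being removed from a load balancer."""

    def __init__(self, name, timeout_seconds=300):
        self.name = name
        self.timeout_seconds = timeout_seconds
        self.in_flight = 0
        self.draining = False
        self.drain_started_at = None

    def accept_request(self):
        if self.draining:
            return False  # the load balancer sends new requests elsewhere
        self.in_flight += 1
        return True

    def finish_request(self):
        self.in_flight = max(0, self.in_flight - 1)

    def start_draining(self, now):
        self.draining = True
        self.drain_started_at = now

    def can_deregister(self, now):
        # Done when in-flight work is finished or the timeout has expired.
        if not self.draining:
            return False
        timed_out = now - self.drain_started_at >= self.timeout_seconds
        return self.in_flight == 0 or timed_out


target = DrainingTarget("instance-a")
target.accept_request()
target.accept_request()               # two requests in flight
target.start_draining(now=0)
print(target.accept_request())        # False: no new requests while draining
print(target.can_deregister(now=10))  # False: requests still running
target.finish_request()
target.finish_request()
print(target.can_deregister(now=20))  # True: all requests finished
```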

RDS

Amazon Relational Database Service (RDS) is a managed service that provides an easy way to set up, operate, and scale relational databases in the AWS cloud. It is cost-effective compared to the market because AWS takes care of the infrastructure and administration.

- A DB instance is the basic building block of RDS: an isolated database environment in the cloud that can contain several databases and can be accessed with the same tools and interfaces as a standalone database.

Available RDS engines:

- Amazon Aurora

- PostgreSQL

- MySQL

- MariaDB

- Oracle Database

- SQL Server

A related service, AWS Database Migration Service, is made for migrating and replicating databases from outside AWS into AWS.

Billing RDS

- uptime

- active hours

- disk usage

- provisioned size for database.

- Data transfer

- amount charged for the amount of data that enters and leaves the DB instance.

- Backup disk usage

- Amount of disk that automatic backup consumes.

- I/O requests

- Total I/O requests per month.

- instance class

- Price based on the instance class, as with EC2, which has several classes such as micro, small, etc.
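The billing items above add up to a single monthly bill. The sketch below sums them in Python; every unit price is a hypothetical placeholder (real prices vary by engine, instance class, Region, and storage type), the usage figures are invented, and `rds_monthly_estimate` is an illustrative helper, not an AWS pricing API.

```python
from decimal import Decimal

# Hypothetical unit prices in USD, for illustration only.
PRICES = {
    "instance_hour": Decimal("0.02"),     # depends on the instance class
    "storage_gb_month": Decimal("0.10"),
    "backup_gb_month": Decimal("0.095"),  # billable backup storage
    "transfer_gb": Decimal("0.09"),       # billable data transfer
    "io_per_million": Decimal("0.20"),
}
CENTS = Decimal("0.01")


def rds_monthly_estimate(hours, storage_gb, backup_gb, transfer_gb, io_requests):
    """Return per-item charges and the total, rounded to cents."""
    items = {
        "uptime": PRICES["instance_hour"] * hours,
        "disk": PRICES["storage_gb_month"] * storage_gb,
        "backup": PRICES["backup_gb_month"] * backup_gb,
        "transfer": PRICES["transfer_gb"] * transfer_gb,
        "io": PRICES["io_per_million"] * Decimal(io_requests) / Decimal(1_000_000),
    }
    total = sum(items.values(), Decimal(0))
    return {name: value.quantize(CENTS) for name, value in items.items()}, total.quantize(CENTS)


items, total = rds_monthly_estimate(hours=730, storage_gb=20, backup_gb=10,
                                    transfer_gb=5, io_requests=2_000_000)
print(items)  # per-item charges
print(total)  # 18.40
```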

RDS monitoring

- CloudWatch

- It is possible to create your own metrics for usage, consumption, etc.

- CloudWatch Log

- Sends database or instance logs to CloudWatch.

- Amazon RDS Monitoring

- More than 50 different metrics, such as memory, CPU, I/O, etc.

DB to RDS Migration

- AWS DMS

- Creates a replica of your database or data warehouse in AWS in a secure way.

- AWS SCT

- Converts schemas from databases or data warehouses to open-source databases such as MySQL, or to AWS services such as Aurora and Redshift.

RDS Event Notifications

- SNS

- Simple Notification Service, an AWS tool made for notification and messaging.

- Delivers notifications to destinations such as:

- SMS

- HTTP EndPoint

- Event Categories

- 17 distinct event categories, such as availability, backup, deletion, etc.

DynamoDB

DynamoDB is the AWS NoSQL database, built to deliver very large scale with low latency and high availability. It provides security features, data backup and restore, and caching (through DynamoDB Accelerator, DAX). It works with key-value and document data, and each table's data is replicated across three Availability Zones to ensure high availability.
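To picture the key-value model, here is an in-memory Python stand-in for a table with a partition key and a sort key. It is not the DynamoDB API (the real service is used through the AWS SDKs); `TinyTable`, the attribute names, and the sample items are hypothetical. It shows three properties of the model: items are addressed by their key, items in the same table can carry different attributes, and items sharing a partition key can be read together in sort-key order, similar in spirit to a Query with a `begins_with` key condition.

```python
from collections import defaultdict


class TinyTable:
    """In-memory stand-in for a table keyed by partition key + sort key."""

    def __init__(self, partition_key, sort_key):
        self.partition_key = partition_key
        self.sort_key = sort_key
        self._partitions = defaultdict(dict)  # partition value -> {sort value: item}

    def put_item(self, item):
        pk, sk = item[self.partition_key], item[self.sort_key]
        self._partitions[pk][sk] = dict(item)  # same key: the item is replaced

    def get_item(self, pk, sk):
        return self._partitions.get(pk, {}).get(sk)

    def query(self, pk, sort_key_prefix=""):
        # Items of one partition, ordered by sort key, optionally filtered by prefix.
        items = self._partitions.get(pk, {})
        return [items[sk] for sk in sorted(items) if sk.startswith(sort_key_prefix)]


orders = TinyTable(partition_key="customer_id", sort_key="order_id")
orders.put_item({"customer_id": "c1", "order_id": "2024-01-05#001", "total": 30})
orders.put_item({"customer_id": "c1", "order_id": "2024-02-10#002", "total": 12, "coupon": "WELCOME"})
orders.put_item({"customer_id": "c2", "order_id": "2024-01-07#003", "total": 99})

print(orders.get_item("c2", "2024-01-07#003")["total"])       # 99
print([o["order_id"] for o in orders.query("c1")])            # ['2024-01-05#001', '2024-02-10#002']
print([o["order_id"] for o in orders.query("c1", "2024-02")]) # ['2024-02-10#002']
```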

What is S3?

Amazon S3 (Simple Storage Service) is an object storage service, used to create repositories of data called buckets.

- A web interface integrated with the S3 platform lets you store and retrieve data from anywhere, uploading and downloading data.

- It offers an API and SDKs for integration through API calls.

- Resilience, scalability, and replication across AZs.

- Data lifecycle management.

- Several resources related to data security and accessibility, complying with various regulatory security requirements, with the ability to optimize costs.

Features:

- Resilience and availability

- S3 is designed for 99.999999999% (11 nines) durability, and it is one of the most successful services on AWS.

- Security and compliance

- Secure, with access control that protects against unauthorized access, blocking of public access with S3 Block Public Access, and compliance with PCI-DSS, HIPAA/HITECH, FedRAMP, FISMA, among others. Integrated with Amazon Macie to protect sensitive data.

- Query in place

- Big data analytics on S3 objects, with integration with Amazon Athena for SQL queries, Amazon Redshift, and S3 Select.

- Flexible management

- Analysis of usage trends and classification of data usage to reduce costs.

- Data transfers made easy

- A huge variety of data import and export options supported by s3.
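The 11-nines figure is easier to grasp as arithmetic. Treating it as a per-object, per-year probability of loss (a simplifying assumption), the expected losses for ten million stored objects work out as below; this is the same illustration AWS uses in its S3 documentation. `Fraction` keeps the numbers exact.

```python
from fractions import Fraction

durability = Fraction(99_999_999_999, 100_000_000_000)  # 99.999999999 % as an exact fraction
loss_probability = 1 - durability                       # chance of losing one object in a year
objects_stored = 10_000_000

expected_losses_per_year = objects_stored * loss_probability
years_per_lost_object = 1 / expected_losses_per_year

print(loss_probability)          # 1/100000000000
print(expected_losses_per_year)  # 1/10000
print(years_per_lost_object)     # 10000
```

In other words, with ten million objects you would expect, on average, to lose a single object once every 10,000 years.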

Amazon S3 was created to be a complete storage platform; charges are calculated from data storage and data transfer.

- scalable

- durable

- geographic redundancy

- versioning

- life cycle policies
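Versioning and lifecycle policies are easier to see in a toy model. The Python class below is a hypothetical local simulation, not the S3 API: every write keeps a new version, a delete only adds a delete marker, and a single lifecycle rule moves versions older than N days to the GLACIER storage class. Real lifecycle rules are more flexible (for example, separate rules for current and noncurrent versions, or expiration instead of transition).

```python
from datetime import date, timedelta
from itertools import count


class VersionedBucket:
    """Toy model of a bucket with versioning and one lifecycle transition rule."""

    def __init__(self):
        self._versions = {}        # key -> list of versions, oldest first
        self._next_id = count(1)   # real S3 version IDs are opaque strings

    def _add(self, key, body, created, delete_marker=False):
        version = {"id": next(self._next_id), "body": body, "created": created,
                   "storage_class": None if delete_marker else "STANDARD",
                   "delete_marker": delete_marker}
        self._versions.setdefault(key, []).append(version)
        return version["id"]

    def put(self, key, body, created):
        return self._add(key, body, created)

    def delete(self, key, created):
        # With versioning on, a delete only adds a delete marker.
        return self._add(key, None, created, delete_marker=True)

    def get(self, key, version_id=None):
        versions = self._versions.get(key, [])
        if version_id is not None:
            return next((v["body"] for v in versions if v["id"] == version_id), None)
        if not versions or versions[-1]["delete_marker"]:
            return None
        return versions[-1]["body"]

    def storage_class(self, key, version_id):
        return next((v["storage_class"] for v in self._versions.get(key, [])
                     if v["id"] == version_id), None)

    def apply_lifecycle(self, today, transition_after_days, target_class="GLACIER"):
        # Move every stored version older than the threshold to a colder class.
        cutoff = today - timedelta(days=transition_after_days)
        for versions in self._versions.values():
            for v in versions:
                if not v["delete_marker"] and v["created"] <= cutoff:
                    v["storage_class"] = target_class


bucket = VersionedBucket()
v1 = bucket.put("report.csv", "first draft", date(2024, 1, 1))
v2 = bucket.put("report.csv", "final", date(2024, 3, 1))
print(bucket.get("report.csv"))      # final
print(bucket.get("report.csv", v1))  # first draft: old versions are kept
bucket.delete("report.csv", date(2024, 4, 1))
print(bucket.get("report.csv"))      # None: hidden by the delete marker
bucket.apply_lifecycle(today=date(2024, 4, 15), transition_after_days=90)
print(bucket.storage_class("report.csv", v1), bucket.storage_class("report.csv", v2))  # GLACIER STANDARD
```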

Tools:

- Amazon S3 Select

- According to AWS, it can improve query performance by up to 400% and reduce query costs by up to 80%, because it retrieves only a subset of the data in an object instead of the entire object. Objects of up to 5 TB are supported.

- Amazon S3 Transfer Acceleration

- Speeds up transfers to S3 buckets over long distances. For very large volumes of data, AWS Snowball, AWS Snowball Edge, and AWS Snowmobile can move data at as little as one-fifth the cost of high-speed internet.

- AWS Storage Gateway

- A service for hybrid architectures that connects on-premises environments to S3. This can also be done with AWS DataSync, which AWS says can transfer data up to 10 times faster than many open-source tools.
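The idea behind S3 Select, returning only the rows and columns a query needs instead of the whole object, can be imitated locally with Python's `csv` module. This does not call S3; the object contents are invented, `select_subset` is a hypothetical helper, and the comment only shows roughly what the equivalent S3 Select SQL expression would look like.

```python
import csv
import io

# Invented contents of a CSV object stored in a bucket.
OBJECT_BODY = """order_id,country,total
1,BR,30
2,US,12
3,BR,99
4,DE,45
"""


def select_subset(body, columns, where):
    """Filter rows and columns next to the data and return only the matches,
    instead of shipping the whole object to the client."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    for row in csv.DictReader(io.StringIO(body)):
        if where(row):
            writer.writerow([row[column] for column in columns])
    return out.getvalue()


# Roughly: SELECT s.order_id, s.total FROM S3Object s WHERE s.country = 'BR'
subset = select_subset(OBJECT_BODY, ["order_id", "total"], lambda row: row["country"] == "BR")
print(repr(subset))  # '1,30\n3,99\n'
print(len(subset), "of", len(OBJECT_BODY), "characters returned")
```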
