Applications will fail; that is a fact of life. But failures are not always catastrophic. Sometimes they are silent: a system starts answering incorrectly for only some users, or responses get slow for one specific region.

Everyone who has deployed systems for long enough has a story about how things "suddenly" went wrong on a Friday night after 10pm (a nightmare that haunts every DevOps engineer's dreams).

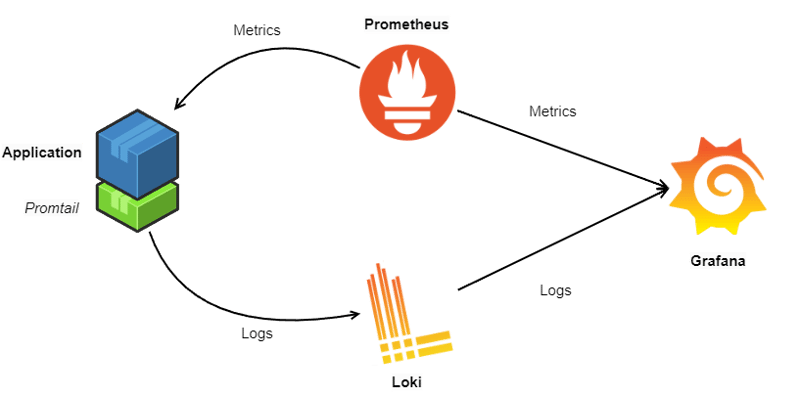

One way to avoid these kinds of problems (and others too) is to establish a good monitoring environment to track your application's health and availability. The objective of this tutorial is to help you create and understand this environment with some of the most famous tools for the task: Grafana, Loki and Prometheus.

Requirements

This tutorial uses Kubernetes to deploy the applications, but most of the content focuses on the tools and related concepts rather than on deployment, so it should be useful for everyone.

If you want hands-on practice, you should have a running Kubernetes cluster (I used MicroK8s for this tutorial) and Helm (see the Installing Helm tutorial for how to install it). A basic understanding of both tools is important to follow the examples.

Note that this is not an exhaustive list of features from the tools. See the sources section for a deeper understanding.

Capturing your metrics with Prometheus

One way to understand the health of our applications is to look at the metrics they produce. For example, if your database is slow, you may want to check whether it is hitting its CPU or RAM limits by monitoring the CPU/RAM usage metrics. If your web server is slow, you may want to know whether it is receiving more requests than it can handle. When you have one or two applications, this can easily be done by collecting the metrics manually from each tool. When you have dozens or even hundreds of applications, however, this becomes very difficult to manage.

How it works

Prometheus solves this problem by providing a centralized way to collect and store these metrics. For it to work, each application exposes an HTTP endpoint (by convention /metrics; port 9090 is the default port of the Prometheus server itself, not of the applications) that serves its metrics in a specific human-readable text format following the Prometheus Data Model. Prometheus then uses crawlers (scrape jobs, in the official terminology) that regularly fetch this information from the applications and store it in the Prometheus instance. This is called a pull architecture.
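As a rough illustration of the pull model, a scrape job only needs to know where an application exposes its metrics and how often to fetch them. The job name, target address and interval below are hypothetical; metrics_path already defaults to /metrics and is spelled out only for clarity.

```yaml
scrape_configs:
  # Hypothetical application; replace the name and address with your own.
  - job_name: my-app
    # How often Prometheus pulls metrics from the target.
    scrape_interval: 30s
    # HTTP path where the application exposes its metrics (the default).
    metrics_path: /metrics
    static_configs:
      - targets:
          - my-app.default.svc.cluster.local:8080
```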

Push Gateway

You can also work with a push architecture in Prometheus by using the Pushgateway. As the name suggests, your application pushes its metrics to the Pushgateway, which Prometheus then scrapes like any other target (you can read more about how it works in "Pushing metrics").

This is not something to use by default. In fact, the official documentation recommends the Pushgateway only in limited cases, which you can read about in "When to use the Pushgateway".
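If you do need the Pushgateway, Prometheus still pulls from it like from any other target. A minimal sketch of such a scrape job follows. The service address is an assumption (9091 is the Pushgateway's default port), and honor_labels keeps the job and instance labels that the pushing application set.

```yaml
scrape_configs:
  - job_name: pushgateway
    # Keep the labels pushed by the application instead of overwriting them
    # with the labels of the Pushgateway target itself.
    honor_labels: true
    static_configs:
      - targets:
          # Assumed service name and namespace.
          - prometheus-pushgateway.monitoring.svc.cluster.local:9091
```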

Exporters

As I said before, Prometheus requires the data to be available to the crawlers in a specific format. But, as you may expect, not all tools follow these rules. To solve this problem Prometheus has exporters.

Basically, an exporter converts metrics from a third-party system into the Prometheus data format. One famous example is the JMX exporter, which converts metrics from JVM-based applications. Another is the Node Exporter, which exports hardware and operating system metrics from Linux hosts such as your Kubernetes nodes.

Querying the metrics

But once the metrics are in Prometheus, how can we actually see and use them? The answer is PromQL, a query language that lets us select and aggregate this metric data.

In fact (and a little bit of a spoiler), this is what Grafana uses to query metrics from Prometheus and create the dashboards.
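To give a flavor of PromQL, the sketch below wraps two expressions in recording rules, which are one way to store the result of a query as a new metric. The metric http_requests_total and its status label are hypothetical, and placing the rules under serverFiles in a recording_rules.yml key is an assumption about how the Helm chart is laid out.

```yaml
serverFiles:
  recording_rules.yml:
    groups:
      - name: example-recording-rules
        rules:
          # Requests per second for each job, averaged over the last 5 minutes.
          - record: job:http_requests:rate5m
            expr: sum by (job) (rate(http_requests_total[5m]))
          # Fraction of requests that returned a 5xx status, per job.
          - record: job:http_requests_errors:ratio_rate5m
            expr: |
              sum by (job) (rate(http_requests_total{status=~"5.."}[5m]))
                /
              sum by (job) (rate(http_requests_total[5m]))
```

The same expressions can also be typed directly into the Prometheus expression browser or into a Grafana panel.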

Alerting

Now we just need to watch every metric on the system and see if there is a problem, right? No, there is no need for that: Prometheus supports alerting rules and ships with a companion component, Alertmanager, that notifies you when a metric crosses a threshold you define. For example, you can set an alert that fires whenever the response time is greater than 200ms.

Note that Grafana has a similar feature, so it is a good practice to just use one of these tools for alerts.
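The 200ms example could be written as an alerting rule roughly like the sketch below. It assumes the application exposes a latency histogram named http_request_duration_seconds (a hypothetical metric) and that the Helm chart reads alerting rules from an alerting_rules.yml key under serverFiles.

```yaml
serverFiles:
  alerting_rules.yml:
    groups:
      - name: example-alerts
        rules:
          - alert: HighResponseTime
            # 95th percentile latency over the last 5 minutes, in seconds.
            expr: |
              histogram_quantile(
                0.95,
                sum by (le) (rate(http_request_duration_seconds_bucket[5m]))
              ) > 0.2
            # Only fire if the condition holds for 5 minutes in a row.
            for: 5m
            labels:
              severity: warning
            annotations:
              summary: "95th percentile response time is above 200ms"
```

Using a percentile and a for duration, rather than alerting on every single slow request, is a design choice that trades a slightly later alert for far fewer false alarms.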

Configuring Prometheus

Prometheus can be deployed using the Prometheus Helm chart. This chart bundles a lot of features, such as the already mentioned Pushgateway and Alertmanager. To keep this tutorial simple I will not show all of the Helm chart configuration, but you can see a real example used by me here.

Crawlers and Scraping

As briefly explained before, Prometheus uses crawlers to get information from the applications that generate these metrics. With the Helm chart, this is configured by specifying jobs in the scrape_configs section. You can see an example below:

serverFiles:
  ...
  prometheus.yml:
    rule_files:
      - /etc/config/recording_rules.yml
      - /etc/config/alerting_rules.yml
    scrape_configs:
      - job_name: prometheus
        static_configs:
          - targets:
              - localhost:9090
      - job_name: kube-state-metrics
        scheme: http
        honor_labels: true
        static_configs:
          - targets:
              - prometheus-kube-state-metrics.monitoring.svc.cluster.local:8080
      - job_name: kubernetes-apiservers
        kubernetes_sd_configs:
          - role: endpoints
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          insecure_skip_verify: true
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - source_labels: [
              __meta_kubernetes_namespace,
              __meta_kubernetes_service_name,
              __meta_kubernetes_endpoint_port_name,
            ]
            action: keep
            regex: default;kubernetes;https
      ...

In the example, the first two jobs specify a static target (static_configs.targets) where the crawlers should look for metrics. The first one scrapes the Prometheus application itself on localhost, and the second looks for metrics at the prometheus-kube-state-metrics.monitoring.svc.cluster.local:8080 address.

The kubernetes-apiservers job, however, works differently. It uses a service-discovery mechanism that automatically finds the metrics endpoints matching the rules defined for the job. Here we are using kubernetes_sd_configs, but others such as azure_sd_configs or http_sd_configs can be used. You can read more about this configuration in the scrape_config documentation.
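To see how service discovery and relabeling work together, here is a sketch of a job that discovers every pod in the cluster and keeps only those that opt in through an annotation. The prometheus.io/scrape annotation is a widely used convention rather than something Prometheus itself requires, and the job name is arbitrary.

```yaml
scrape_configs:
  - job_name: annotated-pods
    kubernetes_sd_configs:
      # Discover every pod in the cluster as a potential target.
      - role: pod
    relabel_configs:
      # Keep only pods annotated with prometheus.io/scrape: "true";
      # every other discovered pod is dropped.
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: "true"
      # Copy the pod's namespace and name into regular labels so they
      # show up on the scraped metrics.
      - source_labels: [__meta_kubernetes_namespace]
        target_label: namespace
      - source_labels: [__meta_kubernetes_pod_name]
        target_label: pod
```

The keep action in the kubernetes-apiservers job works the same way: the values of the source labels are joined with ";" and the target is kept only if the result matches the regex.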

If you open Status > Targets in your Prometheus instance, you can see all the applications (targets) that are being crawled and their status. It should look similar to this:

Capturing your logs with Grafana Loki (and Promtail)

But an SRE does not live on metrics alone. Another part of understanding how our applications are behaving is reading the logs they generate, and this is why you need Loki.

Loki works similarly to Prometheus: agents collect logs from the applications (like the Prometheus crawlers), add metadata (labels) and stream them to a Loki instance. You can then read these logs with a tool such as Grafana or the Loki CLI (LogCLI).

Unlike Prometheus, however, Loki does not fetch this information by itself, so you need an agent that sends the logs to it. One of the most famous agents is Promtail. In a Kubernetes environment it is deployed as a DaemonSet, which runs one Promtail pod on every node of the cluster. Each pod reads the local logs generated by the other pods on its node and sends them to a Loki instance (for those unfamiliar with Kubernetes, a simplified way to picture this is a helper placed on every machine that runs your applications, whose only job is to read their logs).

There are many other agents that you can use, such as Fluent Bit, Logstash and others.

Configuring Loki

For Loki you can use the official Loki Helm chart by Grafana. Again, you can see a real example by looking at this values.yaml file. Loki can be deployed as a single binary, where the write, read and backend paths all run in the same process (monolithic mode). Alternatively, you can set write.replicas, read.replicas and backend.replicas to deploy it in distributed mode. Logs can be stored on the local filesystem or in S3-compatible object storage. In the example I use a MinIO instance deployed with a separate Helm chart, but the Loki chart can also deploy a MinIO instance for you.
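As a sketch of the distributed option, the replica counts can be set in the chart values like this. The numbers are illustrative, and the minio.enabled switch for the bundled MinIO is an assumption that may differ between chart versions, so check the values reference of the version you install.

```yaml
# Illustrative replica counts; size these for your own cluster.
write:
  replicas: 3
read:
  replicas: 3
backend:
  replicas: 3

# Assumed key for the MinIO instance bundled with the chart.
# Leave it disabled if you deploy MinIO separately.
minio:
  enabled: false
```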

Configuring Promtail

To deploy Promtail, again you can use this values.yaml file as a base.
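The most important setting in such a file is where Promtail sends the logs. A minimal sketch, assuming the chart exposes the client list under config.clients and that Loki is reachable through a loki-gateway service in the monitoring namespace:

```yaml
config:
  clients:
    # Loki's push API endpoint; the host name is an assumption.
    - url: http://loki-gateway.monitoring.svc.cluster.local/loki/api/v1/push
```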

Promtail works with the concept of pipelines that transform a log line, its labels and its timestamp into a form that is better suited for Loki. There are a lot of built-in stages, such as regex, json and many others. You can see more about pipelines and stages here and here.
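As an illustration, the pipeline below parses JSON log lines, promotes the log level to a label and uses the timestamp found in the log itself. The field names level and ts are hypothetical, and the snippet uses the plain Promtail pipeline_stages syntax; where it goes in the Helm values depends on the chart.

```yaml
pipeline_stages:
  # Unwrap the container runtime (CRI) log format used on most Kubernetes nodes.
  - cri: {}
  # Parse the remaining line as JSON and extract two fields.
  - json:
      expressions:
        level: level
        ts: ts
  # Turn the extracted level into a Loki label.
  - labels:
      level:
  # Use the extracted ts field (assumed to be RFC 3339) as the log timestamp.
  - timestamp:
      source: ts
      format: RFC3339
```

Only promote fields with a small set of possible values (such as the log level) to labels, since every distinct label value creates a separate stream in Loki.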

Visualize all with Grafana

Finally, to make these metrics and logs actionable, we need a way to visualize, aggregate and correlate them that is easy for humans to understand. We also need to be alerted when things go wrong. Grafana is visualization and analytics software that helps us create visualizations (and alerts) from time-series databases. In our example these databases will be Prometheus and Loki, but many others could be used.

Deploy Grafana

Grafana is deployed by using the official Grafana Helm Chart. You can find more information about the chart on Deploy Grafana on Kubernetes. Again you can see a real example here.

Data Sources

A Data Source specifies where Grafana should get its data from. The example below shows that we are getting data from Prometheus and Loki. There are a lot of supported Data Sources that you can find here. A data source can be configured directly in the UI or through the Helm chart:

datasources:
  datasources.yaml:
    apiVersion: 1
    datasources:
      - name: Prometheus
        type: prometheus
        url: http://prometheus-server.monitoring.svc.cluster.local
      - name: Loki
        type: loki
        url: http://loki-gateway.monitoring.svc.cluster.local

Dashboards

We also want to visualize the information in ways that make it actionable. This is done by creating dashboards that show graphs and metrics for your applications. You can create your own dashboards in the UI or from JSON files, but there are also a lot of community dashboards that you can install directly into Grafana (more information here).

dashboardProviders:
  dashboardproviders.yaml:
    apiVersion: 1
    providers:
      - name: default
        orgId: 1
        type: file
        disableDeletion: false
        editable: true
        options:
          path: /var/lib/grafana/dashboards/default
dashboards:
  default:
    # Ref: https://grafana.com/grafana/dashboards/13332-kube-state-metrics-v2/
    kube-state-metrics-v2:
      gnetId: 13332
      revision: 12
      datasource: Prometheus
    # Ref: https://grafana.com/grafana/dashboards/12660-kubernetes-persistent-volumes/
    kubernetes-persistent-volumes:
      gnetId: 12660
      revision: 1
      datasource: Prometheus

Dashboards are configured in YAML by setting a dashboard provider (read more on Dashboards) and the dashboards that you want to load. A dashboard can be a local configuration loaded from a JSON file or, as the example above shows, a dashboard from the dashboards repository selected by its ID and revision. For example, the first dashboard in the example loads revision 12 of kube-state-metrics-v2 (ID 13332) using the Prometheus data source. This should result in a dashboard that looks like this:
