Imagine a world where any software, app, or device intuitively understands your requests, actions, preferences ― without complex menus or rigid commands.

Over the past few months, I’ve been quietly working on making this world a reality through a project called NLUX: a React JS / JavaScript library I architected to enable developers to seamlessly build conversational interfaces.

In this post, I will reveal insights into the coming wave of transformative AI chatbots and voice assistants. I will share my perspective on what will soon drive the future of frontend software design. I will also uncover how developers and product owners can readily embrace conversational interfaces and start building the next generation of effortless, intuitive user experiences.

{a} The Future (Present) of Knowledge Work 🔮

― And how AI crept into my screen.

Over the past 12 months, AI assistants have become an integral part of my work. At any given time, one of my dual screens is dedicated to conversing with Claude or ChatGPT-4. I estimate that 50% of my screen time now involves AI collaboration.

These AI assistants help me in various ways:

- When tackling customer issues, I'll have Zendesk open on one display and a Claude chat window on the other to summarise the issue, jog my memory about prior discussions, and suggest potential solutions.

- While communicating and producing documents, I leverage AI to help structure content, create initial drafts that I then elaborate on, tweak, and refine before going back to AI for final polishing recommendations.

- While coding, my main code editor occupies one screen with its AI pair programmer activated. The second screen runs Claude to help me navigate complex codebases, recommend design patterns, or brainstorm algorithms.

- When learning something new, I often start by querying my AI assistant to prime my knowledge. If I need more depth, I'll turn to Google or reference sites ― then go back to the AI to summarise key points and answer follow-up questions.

- Even in meetings, I sometimes keep an AI chat window open to ask about topics that arise and get prompts for relevant talking points or ideas I can contribute.

About half of my working time now involves AI collaboration of some kind ― whether reading chatbot responses, asking questions via typed conversations, or using other AI-enhanced productivity applications.

While my heavy usage of AI assistants may be slightly ahead of the curve today, I believe it will soon become the norm among most knowledge workers as these tools continue advancing rapidly.

{b} From Hyper-links to Hyper-ideas 💡

― How the Web (and User Interfaces) are changing with AI.

The early internet languished in obscurity for roughly a decade. Although its switch to TCP/IP dates back to 1983, it remained complex and boring until the World Wide Web arrived in the early 1990s. The Web popularised concepts we now take for granted ― like web pages, URLs, and hyperlinks.

URLs enabled unique addresses to find information again later. Hyperlinks connected relevant content across pages. Together, they let users fluidly navigate the expanding online landscape by hopping from page to page.

Page-centric browsing defined the web experience for decades. Even modern single-page web apps largely mimic this model of consuming atomised information spread across disparate screens. But this paradigm is now poised for disruption by Conversational AI. Instead of clicking links between pages, users now engage in contextual conversations centred around ideas.

With AI chatbots, we are moving from hyper-links to hyper-ideas. Users no longer transition across pages, but fluidly move from one relevant idea to another within a single-page conversation. The focus becomes pulling insights, recommendations, and answers from the assistant rather than hunting for them across sites.

With conversational interfaces, there are no pages ― only an intelligent assistant ready to respond to users’ natural language intents and dialogue context. Behind the conversational facade hides the complexity of aggregating, connecting and reasoning over information. But for users, it feels like a familiar chat informed by the history and current needs.

And this new paradigm requires a radical re-thinking of how user-interfaces should be designed and built.

{c} Patterns of Conversational UI 📣

As we transition to conversational interfaces powered by AI, new UX design paradigms emerge across various embodiments. Here are some key patterns I see gaining traction:

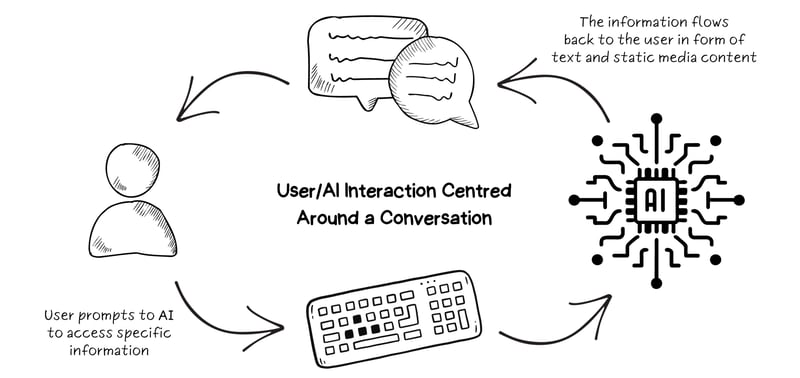

(i) AI Chatbots ― The Baseline

Text-based chatbots are becoming a key user interface layer. Every major application will soon feature a conversational assistant, allowing fluid toggling between clicking menus and chatting in natural language.

These AI companions handle text queries, offer smart suggestions based on what the user types, and generate relevant content like text, images and more. Think of them as a custom ChatGPT component embedded in each app.

Examples:

- A standalone ChatGPT pane for commentary, analysis and content creation.

- Customer support bots fielding product questions.

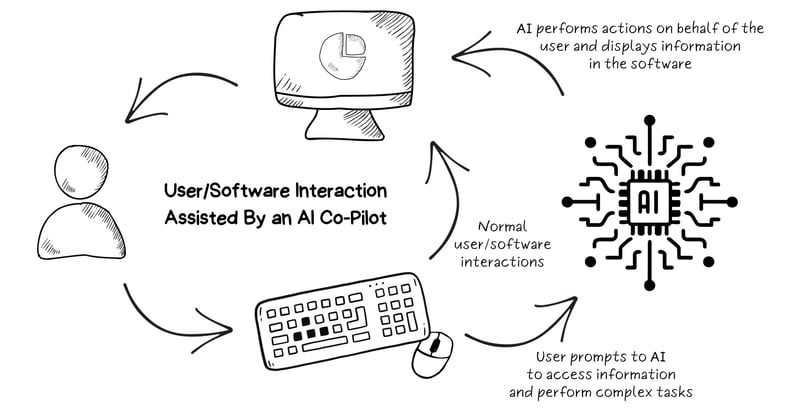

(ii) Co-Pilots ― The Smart Integration

Beyond standalone chatbots lies an even tighter AI integration: Smart co-pilots that can integrate within existing interfaces.

Embedded co-pilots inherently understand the application context, user goals and work-in-progress. They follow along in real-time and stand ready to offer proactive suggestions, answer questions, and execute actions precisely aligned to each moment's needs ― no lengthy explanations required.

Example:

Imagine an inventory management application used daily by warehouse staff. An AI co-pilot could integrate directly into the interface to assist human users through natural conversations.

For example, instead of clicking through menus and configuring filters to check low stock items, staff could simply type "Show me all stationery products with low inventory levels." The co-pilot would automatically translate this to the appropriate data query and display the results.
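
One way to picture that translation step is the minimal TypeScript sketch below. It assumes the language model has already turned the typed request into a small structured query, which the app then validates and runs as an ordinary filter. The `StockQuery` shape, the `Product` fields, the 25% threshold and the sample data are all hypothetical, invented here for illustration; they are not part of NLUX or any particular AI service.

```typescript
// Hypothetical product record for an inventory app.
interface Product {
  name: string;
  category: string;
  stock: number;        // units currently on hand
  regularLevel: number; // units the warehouse normally keeps
}

// Hypothetical structured query a language model might be asked to produce
// from "Show me all stationery products with low inventory levels."
interface StockQuery {
  category: string;
  maxStockRatio: number; // "low" = stock at or below this share of regularLevel
}

// Validate the model's output before trusting it, then run it as a plain filter.
function runStockQuery(products: Product[], query: StockQuery): Product[] {
  if (!(query.maxStockRatio > 0 && query.maxStockRatio <= 1)) {
    throw new Error("maxStockRatio must be in (0, 1]");
  }
  const category = query.category.trim().toLowerCase();
  return products.filter(
    (p) =>
      p.category.toLowerCase() === category &&
      p.stock <= p.regularLevel * query.maxStockRatio
  );
}

// Sample data (made up for this example).
const products: Product[] = [
  { name: "A4 paper", category: "Stationery", stock: 12, regularLevel: 100 },
  { name: "Ballpoint pens", category: "Stationery", stock: 80, regularLevel: 100 },
  { name: "Packing tape", category: "Packaging", stock: 3, regularLevel: 50 },
];

const lowStationery = runStockQuery(products, {
  category: "stationery",
  maxStockRatio: 0.25,
});
console.log(lowStationery.map((p) => p.name));
```

Keeping the model's job limited to producing a small, checkable query object means the app, not the model, decides what data is actually read.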

Rather than navigating through reorder workflows, staff could tell the assistant: "Reorder these 7 products to regular levels." It would then execute the full workflow autonomously based on one plain language command.
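
A command like this could be handled in a similar spirit. The sketch below assumes the model emits a small command object naming the selected products, and the app works out the order quantities itself. `ReorderCommand`, `StockLine`, `planReorder` and the SKUs are hypothetical names made up for this example.

```typescript
// Hypothetical stock line for a product the user has selected on screen.
interface StockLine {
  sku: string;
  stock: number;
  regularLevel: number;
}

interface PurchaseOrderLine {
  sku: string;
  quantity: number;
}

// Hypothetical command the model might emit for
// "Reorder these 7 products to regular levels."
interface ReorderCommand {
  action: "reorder_to_regular_level";
  skus: string[];
}

// Turn the command into order lines. Unknown SKUs are reported back rather
// than silently ignored, and items already at their regular level are skipped.
function planReorder(
  command: ReorderCommand,
  inventory: StockLine[]
): { lines: PurchaseOrderLine[]; unknownSkus: string[] } {
  const bySku = new Map<string, StockLine>();
  inventory.forEach((line) => bySku.set(line.sku, line));

  const lines: PurchaseOrderLine[] = [];
  const unknownSkus: string[] = [];
  command.skus.forEach((sku) => {
    const line = bySku.get(sku);
    if (!line) {
      unknownSkus.push(sku);
      return;
    }
    const quantity = line.regularLevel - line.stock;
    if (quantity > 0) lines.push({ sku, quantity });
  });
  return { lines, unknownSkus };
}

// Sample inventory (made up for this example).
const inventory: StockLine[] = [
  { sku: "PAP-A4", stock: 12, regularLevel: 100 },
  { sku: "PEN-01", stock: 80, regularLevel: 100 },
  { sku: "CLIP-S", stock: 40, regularLevel: 40 },
];

const plan = planReorder(
  { action: "reorder_to_regular_level", skus: ["PAP-A4", "PEN-01", "CLIP-S", "XYZ-00"] },
  inventory
);
console.log(plan);
```

In practice it is probably wise to show a plan like this to the user for confirmation before anything is actually ordered.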

With these AI capabilities woven seamlessly into existing UIs, workers can focus purely on high-value business decisions rather than manual processes.

With AI co-pilots, the applications almost begin to understand and operate like human team members ― smart assistants who can comprehend instructions and take action based on user needs.

(iii) Voice Assistants ― The Hands-Free Horizon

While text chatbots provide a baseline, voice assistants built on speech models such as OpenAI's Whisper promise far more natural and ambient interactions. We stand at the cusp of perpetual ambient computing ― with always-available vocal assistants permeating our environments through smart speakers, headphones, car dashboards and more.

You may counter that you have tried solutions like Alexa or Siri before, with disappointing results. But the latest wave of speech AI, such as Whisper for speech recognition and modern neural text-to-speech for output, combined with generative powerhouses like ChatGPT, unlocks game-changing capability. With accurate transcription, human-like vocalisations and strong contextual reasoning, such technology finally delivers on the promise of truly smart voice assistants.

Rather than stilted vocal commands, you can have free-flowing multi-turn dialogues about nuanced topics, no longer limited by narrow skills and rigid intents. And the system learns your preferences, vocabulary and request patterns over time for personalisation.

While I frequently use smart assistants via speech at home, in professional office settings I still find it awkward to openly speak to my devices with colleagues around me. A useful hybrid model would be to type requests as text and listen to voiced responses through a headset. This allows discreet, immersive listening while staying attentive to the room around you.
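
As a rough sketch of that hybrid mode, the TypeScript below takes a text reply and hands it to a voice output sentence by sentence. The `Speaker` interface, `toSpeechChunks` and `voiceReply` are hypothetical helpers written for this post. `browserSpeaker` relies on the browser's Web Speech API (`speechSynthesis` and `SpeechSynthesisUtterance`) and simply returns `null` where that API is not available. The sentence splitting is deliberately crude and will mis-split text such as decimals.

```typescript
// Minimal voice-output interface, so the chat logic does not depend on a browser.
interface Speaker {
  speak(text: string): void;
}

// Split a reply into sentence-sized chunks so playback can start quickly.
// Crude on purpose: it breaks after any run of '.', '!' or '?'.
function toSpeechChunks(reply: string): string[] {
  const parts = reply.match(/[^.!?]+[.!?]*/g) || [];
  return parts.map((part) => part.trim()).filter((part) => part.length > 0);
}

// Hybrid mode: the request was typed, the reply is voiced.
// Returns the number of chunks handed to the speaker.
function voiceReply(reply: string, speaker: Speaker): number {
  const chunks = toSpeechChunks(reply);
  chunks.forEach((chunk) => speaker.speak(chunk));
  return chunks.length;
}

// Browser implementation using the Web Speech API, when it is available.
function browserSpeaker(): Speaker | null {
  const g = globalThis as any;
  if (!g.speechSynthesis || !g.SpeechSynthesisUtterance) return null;
  return {
    speak: (text) => g.speechSynthesis.speak(new g.SpeechSynthesisUtterance(text)),
  };
}

// Fallback speaker that just logs, useful outside the browser.
const consoleSpeaker: Speaker = { speak: (text) => console.log(`[voice] ${text}`) };

voiceReply("Stock is low. Reorder now?", browserSpeaker() || consoleSpeaker);
```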

The technology is nearly ready for seamless voice invocation in any context. But until social comfort catches up, hybrid interaction modes balance functionality with professional etiquette.

(iv) Augmented Reality Assistants ― The Merge

While chatbots, voice assistants and co-pilots are only aware of context within a limited screen or device, augmented reality will massively expand contextual understanding to a user's full surrounding space.

For office / knowledge workers, this expanded physical context awareness may not hugely aid AI assistance capabilities at first. But for many industrial, manual or spatially complex jobs, it stands to provide a game-changing boost.

Imagine maintenance technicians able to summon holographic diagrams pinned to real machinery, with AI guides tailored to each repair situation, or factory workers getting in-situ optimisation recommendations based on what they are looking at mid-assembly.

Augmented reality long seemed a distant dream, but with Apple Vision Pro now shipping, blending atoms and bits is nearing reality. No longer a toggle between physical and digital ― but occupying hybrid spaces where information and AI agency manifest all around.

{d} Embedding Conversational AI Into Apps 🎬

If you haven't already begun embedding AI, now is the ideal time. Here's my advice on taking the first steps:

- Audit Workflows - Analyse your existing app and identify exactly where AI could simplify processes through faster access to information, smart recommendations, automated content generation, and workflow automation.

- Evaluate AI Services - Research conversational AI services like OpenAI (powerful models/APIs) or Google Vertex AI (access to Google’s AI portfolio). Both have accessible options. Other leaders include Anthropic and AWS.

- Jumpstart With Conversational UI - Embed smart chatbot and co-pilot UIs into your web and mobile apps using tools like NLUX. It blends chat and UI intuitively while integrating with leading language models. Check nlux.ai for examples and documentation.
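
To give a feel for what sits underneath an embedded chat UI, here is a minimal TypeScript sketch of conversation state plus a pluggable backend. It is not the NLUX API: `ChatMessage`, `ChatAdapter`, `startConversation`, `sendMessage` and `echoAdapter` are hypothetical names made up for this post, and the role/content message shape is only assumed to resemble what many chat model APIs accept.

```typescript
// Role/content message shape, assumed here to resemble common chat model APIs.
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Hypothetical adapter: anything that turns a message list into a reply.
// A real one would call your chosen AI service, ideally via your own backend.
type ChatAdapter = (messages: ChatMessage[]) => Promise<string>;

// Start a conversation with a system message describing the app context.
function startConversation(appContext: string): ChatMessage[] {
  return [{ role: "system", content: appContext }];
}

// Send one user turn and return the updated history. The input array is not
// mutated, which suits React-style state updates.
async function sendMessage(
  history: ChatMessage[],
  userText: string,
  adapter: ChatAdapter
): Promise<ChatMessage[]> {
  const withUser: ChatMessage[] = [...history, { role: "user", content: userText }];
  const reply = await adapter(withUser);
  return [...withUser, { role: "assistant", content: reply }];
}

// Stand-in adapter for local testing: echoes the last message it receives.
const echoAdapter: ChatAdapter = async (messages) =>
  `You said: ${messages[messages.length - 1].content}`;

sendMessage(startConversation("You help warehouse staff."), "Hello", echoAdapter)
  .then((history) => console.log(history.map((m) => `${m.role}: ${m.content}`)));
```

Swapping `echoAdapter` for a real adapter is the only change needed to move from a local mock to a live model, which makes it easy to start small.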

The key is to start small but think big. Identify some narrow yet high value assistance capabilities that conversational AI can deliver quickly - whether bolstering customer service, unlocking employee productivity, or streamlining user flows.

Adding natural conversations is newly accessible for all levels of products if you leverage existing building blocks thoughtfully. This is just the dawn of the AI age - but you can get a head start today. Reach out if you want to discuss strategies for embedding intelligence!

About The Author

Salmen Hichri is a seasoned software architect with over a decade of experience leading engineering teams at companies like Amazon and Goldman Sachs. He is also an open-source contributor and the founder of NLUX, a library for building conversational user interfaces that integrate with AI backends like ChatGPT.
