- GitLab Project Setup

- JIRA Setup

- Setup GitLab runner on Kubernetes

- JIRA issue integration on GitLab.

- Setup Traefik Proxy (optional) for edge routing experience

- Containerize the sample Django application

- Populate containerized application to helm chart

- GitLab CI/CD Pipeline setup via .gitlab-ci.yml

- Demonstration

Hey there again! In this post, I will demonstrate how you can set up a production-grade CI/CD pipeline for your production workloads with mydevopsteam.io sorcery. 😅😅😅

Overview

Organizations often struggle to implement a CI/CD pipeline, especially on critical systems or infrastructure. Some have already implemented one and others are not familiar with the practice, but many attempts fail. That's why MyDevOpsTeam was created: to help SMEs properly implement DevOps methodologies so they can stay focused on the business instead of the technicalities. In this demo, we will walk through a basic CI/CD pipeline implementation that newcomers can learn from.

Prerequisites

- GitLab Account

- Atlassian Account with JIRA

- Existing Kubernetes Cluster

- kubectl (Kubernetes Client)

Note: We will only use Minikube as the Kubernetes cluster, because I forgot to terminate my EKS cluster running m5a.2xlarge worker nodes after my demonstration in June 2022 🤣. I only terminated it yesterday, after I noticed something wasn't right before going to sleep. 🤣 🤣 🤣

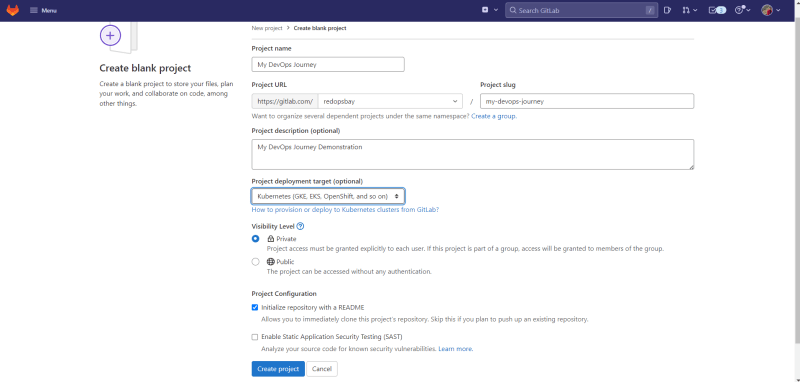

GitLab Project Setup

Overview

GitLab is a DevOps platform with free and premium tiers. It offers a lot of features and integrations.

Log in to your account and create a GitLab project.

Then enter the necessary details for your project.

JIRA Setup

Overview

JIRA is one of the leading project management and bug tracking tools. It also has free and premium features that let you create and track ongoing bugs and issues. One of its most common use cases is integrating it with a CI/CD pipeline, so that an issue is transitioned automatically once the bug has been fixed and deployed to the production environment.

Assuming that you already have a JIRA account, it's time to create your first JIRA project.

Select Bug Tracking.

Then enter your project details.

Setup GitLab runner on Kubernetes

There are a few options for setting up a GitLab runner:

- Run the GitLab runner on a single Linux instance.

- Run the GitLab runner in a Docker container via the Docker API.

- Run the GitLab runner as a Kubernetes pod.

Advantage

- Running the GitLab runner on a single Linux instance is quick to set up and easy to manage.

- Running the GitLab runner as a Docker container lets you run jobs dynamically without worrying about software installation, and the container is automatically deleted after the job runs.

- Running the GitLab runner as a Kubernetes pod lets you run fully dynamic, run-as-you-go jobs, and the pod is automatically deleted after the pipeline execution.

Disadvantage

- Running a GitLab runner on an EC2 or single Linux instance is problematic because of limitations such as installing software during a job run, and a heavy job can push the instance into a life-or-death scenario. 🤣

- Running a GitLab runner in a Docker container is also problematic, since Docker itself still runs on a single instance. What if your job requires a lot of memory or CPU, and you have multiple jobs running simultaneously?

Key Notes: My recommended option is to always implement dynamic pipelines and infrastructure, so you can plug and play your solutions on any platform, just like a template, and not worry about vendor lock-in sh*t.

Installation of GitLab runner via Helm.

Assuming you have Minikube or any other Kubernetes cluster running, first create a values.yaml file.

Note: Make sure to get the GitLab runner registration token from the settings of your project.

# The GitLab Server URL (with protocol) that want to register the runner against
# ref: https://docs.gitlab.com/runner/commands/index.html#gitlab-runner-register
#
gitlabUrl: https://gitlab.com

# The Registration Token for adding new runners to the GitLab Server. This must
# be retrieved from your GitLab instance.
# ref: https://docs.gitlab.com/ee/ci/runners/index.html
#
runnerRegistrationToken: "<your-gitlab-registration-token>"

# For RBAC support:
rbac:
  create: true

# Run all containers with the privileged flag enabled
# This will allow the docker:dind image to run if you need to run Docker
# commands. Please read the docs before turning this on:
# ref: https://docs.gitlab.com/runner/executors/kubernetes.html#using-dockerdind
runners:
  privileged: true

Add the Helm repository:

helm repo add gitlab https://charts.gitlab.io
helm repo update

Installation

helm upgrade --install gitlab-runner gitlab/gitlab-runner \
  -n gitlab --create-namespace \
  -f ./values.yaml --version 0.43.1

After a few seconds, the GitLab runner should be available in your cluster and in your GitLab project.

JIRA issue integration on GitLab

Integrating JIRA with GitLab is easy, but it can become problematic in the long run as your project expands. For this post, we will only cover a quick way to integrate GitLab and JIRA.

Generate JIRA API Token

Go to https://id.atlassian.com/manage-profile/security/api-tokens and generate your JIRA token.

Now that you have your API token, go to your GitLab Project -> Settings -> Integrations -> JIRA and enter the necessary details.

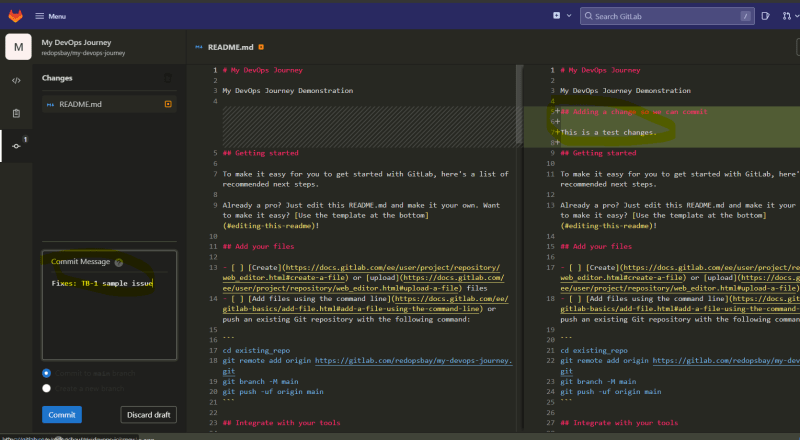

From now on, we can automatically transition reported bugs or tickets assigned to us via commit messages. But let's test it first to make sure it actually works.

Open a sample JIRA issue

Open a sample issue in JIRA to check whether automatic transitioning works.

Next, update the issue status from 'To Do' to 'In Progress'.

Next, edit some files in your repository and commit the changes. Don't forget to reference the issue key in your commit message, together with a trigger word such as Closes, Resolves, or Fixes (for example, "Fixes <ISSUE-KEY>"). Refer to GitLab's guide on JIRA issue transitioning for details.

Go back to JIRA and check the issue you opened earlier. As you can see, the issue is automatically transitioned from 'In Progress' to 'Done'.
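To make the commit-message convention concrete, here is a minimal Python sketch that pulls issue keys out of a commit message and picks the ones preceded by a closing trigger word. This is only an illustration of the convention, not how GitLab implements it; the project key SHOP is hypothetical, and matching only the capitalized trigger words is an assumption of the sketch.

```python
import re
from typing import List

# A JIRA issue key: uppercase project key, a hyphen, then the issue number (e.g. SHOP-12).
ISSUE_KEY = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")

# A trigger word followed by an issue key. Matching only the capitalized
# forms is an assumption of this sketch.
CLOSING_REF = re.compile(r"\b(?:Closes|Resolves|Fixes)\s+([A-Z][A-Z0-9]+-\d+)\b")


def issue_keys(message: str) -> List[str]:
    """Return every JIRA-style issue key mentioned in a commit message."""
    return ISSUE_KEY.findall(message)


def closing_keys(message: str) -> List[str]:
    """Return only the keys that are preceded by a closing trigger word."""
    return CLOSING_REF.findall(message)


if __name__ == "__main__":
    msg = "Fixes SHOP-12: handle empty cart (see also SHOP-7)"  # hypothetical message
    print(issue_keys(msg))
    print(closing_keys(msg))
```

A check like this could run in a pre-commit hook to remind you when a commit message has no issue key at all.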

Why is automatic ticket transitioning important?

- It keeps your project more manageable.

- It keeps issue tracking clean.

- It lets you easily generate release notes and associate each issue and commit with your release notes or CHANGELOG.md file after a production deployment.

Setup Traefik Proxy (optional) for edge routing experience

Overview

Traefik is an open-source Edge Router that makes publishing your services a fun and easy experience. It receives requests on behalf of your system and finds out which components are responsible for handling them.

Install Traefik Proxy via Helm

First, add the Traefik repository:

helm repo add traefik https://helm.traefik.io/traefik
helm repo update

Next, create a values.yaml file. To see all the available parameters for values.yaml, you can use the helm show values traefik/traefik command.

values.yaml

service:
  enabled: true
  type: LoadBalancer
  externalIPs:
    - <your-server-ip> # if you are using minikube on a single ec2 instance.

podDisruptionBudget:
  enabled: true
  maxUnavailable: 0
  #maxUnavailable: 33%
  minAvailable: 1
  #minAvailable: 25%

additionalArguments:
  - "--log.level=DEBUG"
  - "--accesslog=true"
  - "--accesslog.format=json"
  - "--tracing.jaeger=true"
  - "--tracing.jaeger.traceContextHeaderName=x-trace-id"

tracing:
  serviceName: traefik
  jaeger:
    gen128Bit: true
    samplingParam: 0
    traceContextHeaderName: x-trace-id

autoscaling:
  enabled: true
  minReplicas: 1
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        targetAverageUtilization: 60
    - type: Resource
      resource:
        name: memory
        targetAverageUtilization: 60

Install Traefik Proxy with Helm:

helm upgrade --install traefik traefik/traefik \
  --namespace=traefik --create-namespace \
  -f ./values.yaml --version 10.24.0

After a few seconds, you should see Traefik running on your cluster.

Next, we need to enable the Traefik dashboard via traefik-dashboard.yaml:

apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: dashboard
  namespace: traefik
spec:
  entryPoints:
    - web
    - websecure
  routes:
    - match: Host(`traefik.gitops.codes`) && (PathPrefix(`/dashboard`) || PathPrefix(`/api`))
      kind: Rule
      services:
        - name: api@internal
          kind: TraefikService

Then apply it:

kubectl apply -f ./traefik-dashboard.yaml

You can now visit your Traefik dashboard at: http://traefik.gitops.codes/dashboard/#/

Containerize the sample Django application

Next, we already have a sample Django application, but you can prepare your own if you want. In this case, we will containerize the sample Django application. Let's start by modifying settings.py so that the database credentials can be passed in as environment variables.

"""

Django settings for ecommerce project.

Generated by 'django-admin startproject' using Django 3.0.5.

For more information on this file, see

https://docs.djangoproject.com/en/3.0/topics/settings/

For the full list of settings and their values, see

https://docs.djangoproject.com/en/3.0/ref/settings/

"""

import os

# Build paths inside the project like this: os.path.join(BASE_DIR, ...)

BASE_DIR = os.path.dirname(os.path.dirname(os.path.abspath(__file__)))

TEMPLATE_DIR = os.path.join(BASE_DIR,'templates')

STATIC_DIR=os.path.join(BASE_DIR,'static')

# Quick-start development settings - unsuitable for production

# See https://docs.djangoproject.com/en/3.0/howto/deployment/checklist/

# SECURITY WARNING: keep the secret key used in production secret!

SECRET_KEY = '#vw(03o=(9kbvg!&2d5i!2$_58x@_-3l4wujpow6(ym37jxnza'

# SECURITY WARNING: don't run with debug turned on in production!

DEBUG = True

ALLOWED_HOSTS = ['*']

# Application definition

INSTALLED_APPS = [

'django.contrib.admin',

'django.contrib.auth',

'django.contrib.contenttypes',

'django.contrib.sessions',

'django.contrib.messages',

'django.contrib.staticfiles',

'ecom',

'widget_tweaks',

'mathfilters'

]

MIDDLEWARE = [

'django.middleware.security.SecurityMiddleware',

'django.contrib.sessions.middleware.SessionMiddleware',

'django.middleware.common.CommonMiddleware',

'django.middleware.csrf.CsrfViewMiddleware',

'django.contrib.auth.middleware.AuthenticationMiddleware',

'django.contrib.messages.middleware.MessageMiddleware',

'django.middleware.clickjacking.XFrameOptionsMiddleware',

]

ROOT_URLCONF = 'ecommerce.urls'

TEMPLATES = [

{

'BACKEND': 'django.template.backends.django.DjangoTemplates',

'DIRS': [TEMPLATE_DIR,],

'APP_DIRS': True,

'OPTIONS': {

'context_processors': [

'django.template.context_processors.debug',

'django.template.context_processors.request',

'django.contrib.auth.context_processors.auth',

'django.contrib.messages.context_processors.messages',

],

},

},

]

WSGI_APPLICATION = 'ecommerce.wsgi.application'

# Database

# https://docs.djangoproject.com/en/3.0/ref/settings/#databases

DATABASES = {

'default': {

'ENGINE': 'django.db.backends.mysql',

'NAME': os.environ['DATABASE_NAME'],

'USER': os.environ['DATABASE_USER'],

'PASSWORD': os.environ['DATABASE_PASSWORD'],

'HOST': os.environ['DATABASE_HOST'],

'PORT': os.environ['DATABASE_PORT']

}

}

# Password validation

# https://docs.djangoproject.com/en/3.0/ref/settings/#auth-password-validators

AUTH_PASSWORD_VALIDATORS = [

{

'NAME': 'django.contrib.auth.password_validation.UserAttributeSimilarityValidator',

},

{

'NAME': 'django.contrib.auth.password_validation.MinimumLengthValidator',

},

{

'NAME': 'django.contrib.auth.password_validation.CommonPasswordValidator',

},

{

'NAME': 'django.contrib.auth.password_validation.NumericPasswordValidator',

},

]

# Internationalization

# https://docs.djangoproject.com/en/3.0/topics/i18n/

LANGUAGE_CODE = 'en-us'

TIME_ZONE = 'UTC'

USE_I18N = True

USE_L10N = True

USE_TZ = True

# Static files (CSS, JavaScript, Images)

# https://docs.djangoproject.com/en/3.0/howto/static-files/

STATIC_URL = '/static/'

STATICFILES_DIRS=[STATIC_DIR,]

MEDIA_ROOT=os.path.join(BASE_DIR,'static')

LOGIN_REDIRECT_URL='/afterlogin'

#for contact us give your gmail id and password

EMAIL_BACKEND ='django.core.mail.backends.smtp.EmailBackend'

EMAIL_HOST = ''

EMAIL_USE_TLS = True

EMAIL_PORT = 587 # port 587 pairs with EMAIL_USE_TLS (STARTTLS); port 465 would need EMAIL_USE_SSL instead

EMAIL_HOST_USER = 'from@gmail.com' # this email will be used to send emails

EMAIL_HOST_PASSWORD = 'xyz' # host email password required

# now sign in with your host gmail account in your browser

# open following link and turn it ON

# https://myaccount.google.com/lesssecureapps

# otherwise you will get SMTPAuthenticationError at /contactus

# this process is required because google blocks apps authentication by default

EMAIL_RECEIVING_USER = ['to@gmail.com'] # email on which you will receive messages sent from website

Next, save it and create a new file named Dockerfile.

FROM python:3.8.13-buster

WORKDIR /opt/ecommerce-app

ADD ./ /opt/
ADD ./docker-entrypoint.sh /

# install mysqlclient
RUN apt update -y \
    && apt install libmariadb-dev -y

# Install pipenv and required python packages
RUN pip3 install pipenv && \
    pipenv install && chmod +x /docker-entrypoint.sh

EXPOSE 8000/tcp
EXPOSE 8000/udp

ENTRYPOINT ["/docker-entrypoint.sh"]

Finally, create a docker-entrypoint.sh:

#!/bin/bash
pipenv run python3 manage.py runserver 0.0.0.0:8000

Save it, then build the image with Docker:

docker build -t <your-container-registry-endpoint> .

Note: I assume that you have basic familiarity with the Django application structure, so we can keep the story short. 😁😁
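Going back to settings.py: reading os.environ[...] directly raises a bare KeyError when a variable is missing. As a hedged sketch (not part of the sample app), the helpers below give a clearer error message and allow defaults. The helper names env, env_bool, and database_settings, the DJANGO_DEBUG variable, and the 3306 default are all assumptions made for illustration.

```python
import os


def env(name, default=None):
    """Return an environment variable, or fail with a clear message if it is unset."""
    value = os.environ.get(name, default)
    if value is None:
        raise RuntimeError(f"Missing required environment variable: {name}")
    return value


def env_bool(name, default=False):
    """Interpret common truthy strings ("1", "true", "yes", "on") as True."""
    raw = os.environ.get(name)
    if raw is None:
        return default
    return raw.strip().lower() in {"1", "true", "yes", "on"}


def database_settings():
    """Build the 'default' entry of Django's DATABASES dict from the environment."""
    return {
        "ENGINE": "django.db.backends.mysql",
        "NAME": env("DATABASE_NAME"),
        "USER": env("DATABASE_USER"),
        "PASSWORD": env("DATABASE_PASSWORD"),
        "HOST": env("DATABASE_HOST"),
        "PORT": env("DATABASE_PORT", "3306"),  # assumed default MySQL port
    }
```

In settings.py you could then write DATABASES = {'default': database_settings()} and DEBUG = env_bool('DJANGO_DEBUG'), so the same image can run with debug turned off in production.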

Populate containerized application to helm chart

Next, we will package our application as a Helm chart. First, create a Helm chart:

helm create ecommerce-app

Next, modify the Helm chart. Let's start with templates/deployment.yaml:

apiVersion: apps/v1

kind: Deployment

metadata:

name: {{ include "ecommerce-app.fullname" . }}

labels:

{{- include "ecommerce-app.labels" . | nindent 4 }}

spec:

{{- if not .Values.autoscaling.enabled }}

replicas: {{ .Values.replicaCount }}

{{- end }}

selector:

matchLabels:

{{- include "ecommerce-app.selectorLabels" . | nindent 6 }}

template:

metadata:

{{- with .Values.podAnnotations }}

annotations:

{{- toYaml . | nindent 8 }}

{{- end }}

labels:

{{- include "ecommerce-app.selectorLabels" . | nindent 8 }}

spec:

imagePullSecrets:

- name: {{ include "ecommerce-app.fullname" . }}

serviceAccountName: {{ include "ecommerce-app.serviceAccountName" . }}

securityContext:

{{- toYaml .Values.podSecurityContext | nindent 8 }}

containers:

- name: {{ .Chart.Name }}

securityContext:

{{- toYaml .Values.securityContext | nindent 12 }}

image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"

imagePullPolicy: {{ .Values.image.pullPolicy }}

env:

{{- toYaml .Values.deployment.env | nindent 12}}

{{- if .Values.persistentVolume.enabled }}

volumeMounts:

- name: {{ include "ecommerce-app.fullname" . }}-uploads

mountPath: /opt/ecommerce-app/static/product_image

{{- end }}

ports:

- name: http

containerPort: {{ .Values.deployment.containerPort }}

protocol: TCP

resources:

{{- toYaml .Values.resources | nindent 12 }}

{{- if .Values.persistentVolume.enabled }}

volumes:

- name: {{ include "ecommerce-app.fullname" . }}-uploads

persistentVolumeClaim:

claimName: {{ include "ecommerce-app.fullname" . }}-pvc

{{- end }}

{{- with .Values.nodeSelector }}

nodeSelector:

{{- toYaml . | nindent 8 }}

{{- end }}

{{- with .Values.affinity }}

affinity:

{{- toYaml . | nindent 8 }}

{{- end }}

{{- with .Values.tolerations }}

tolerations:

{{- toYaml . | nindent 8 }}

{{- end }}

Next, create a PersistentVolume and PersistentVolumeClaim so our uploaded files will not be lost if the pod gets reprovisioned.

pv.yaml:

{{- if .Values.persistentVolume.enabled -}}

{{- $fullName := include "ecommerce-app.fullname" . -}}

apiVersion: v1

kind: PersistentVolume

metadata:

name: {{ $fullName }}

labels:

{{- include "ecommerce-app.labels" . | nindent 4 }}

spec:

capacity:

storage: {{ .Values.persistentVolume.storageSize }}

volumeMode: {{ .Values.persistentVolume.volumeMode }}

{{- with .Values.persistentVolume.accessModes }}

accessModes:

{{- toYaml . | nindent 4 }}

{{- end }}

persistentVolumeReclaimPolicy: {{ .Values.persistentVolume.persistentVolumeReclaimPolicy }}

storageClassName: {{ .Values.persistentVolume.storageClassName }}

hostPath:

path: {{ .Values.persistentVolume.hostPath.path }}

{{- end }}

pvc.yaml

{{- if .Values.persistentVolume.enabled -}}

{{- $fullName := include "ecommerce-app.fullname" . -}}

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: {{ $fullName }}-pvc

labels:

{{- include "ecommerce-app.labels" . | nindent 4 }}

spec:

{{- with .Values.persistentVolume.accessModes }}

accessModes:

{{- toYaml . | nindent 4 }}

{{- end }}

storageClassName: {{ .Values.persistentVolume.storageClassName }}

resources:

requests:

storage: {{ .Values.persistentVolume.storageSize }}

{{- end }}

Save it. Then create createuser-job.yaml and migratedata-job.yaml to automatically migrate (seed) the MySQL database during deployment and to create the Django admin user.

createuser-job.yaml

{{- if .Values.createUser.enabled -}}

{{- $superuser_username := .Values.createUser.username -}}

{{- $superuser_password := .Values.createUser.password -}}

{{- $superuser_email := .Values.createUser.userEmail -}}

apiVersion: batch/v1

kind: Job

metadata:

name: {{ include "ecommerce-app.fullname" . }}-create-superuser-job

spec:

backoffLimit: 1

ttlSecondsAfterFinished: 10

template:

metadata:

labels:

identifier: ""

spec:

imagePullSecrets:

- name: {{ include "ecommerce-app.fullname" . }}

containers:

- name: data-migration

image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"

command: ["/bin/sh","-c"]

args:

- >

/bin/bash <<EOF

export DJANGO_SUPERUSER_USERNAME="{{ $superuser_username }}"

export DJANGO_SUPERUSER_PASSWORD="{{ $superuser_password }}"

pipenv run python3 manage.py createsuperuser --noinput --email "{{ $superuser_email }}"

EOF

env:

{{- toYaml .Values.deployment.env | nindent 12 }}

restartPolicy: Never

{{- end }}

migratedata-job.yaml

{{- if .Values.migrateData.enabled -}}

apiVersion: batch/v1

kind: Job

metadata:

name: {{ include "ecommerce-app.fullname" . }}

spec:

backoffLimit: 2

ttlSecondsAfterFinished: 10

template:

metadata:

labels:

identifier: ""

spec:

imagePullSecrets:

- name: {{ include "ecommerce-app.fullname" . }}

containers:

- name: data-migration

image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"

command: ["/bin/sh","-c"]

args:

- >

/bin/bash <<EOF

pipenv run python3 manage.py makemigrations

pipenv run python3 manage.py migrate

EOF

env:

{{- toYaml .Values.deployment.env | nindent 12 }}

restartPolicy: Never

{{- end }}

Next, create ecommerce-app-ingressroute.yaml inside the templates folder of your Helm chart.

{{- $targetHost := .Values.traefik.host -}}

apiVersion: traefik.containo.us/v1alpha1

kind: IngressRoute

metadata:

name: {{ include "ecommerce-app.fullname" . }}

spec:

{{- with .Values.traefik.entrypoints }}

entryPoints:

{{- toYaml . | nindent 4 }}

{{- end }}

routes:

- match: Host(`{{ $targetHost }}`)

kind: Rule

services:

- name: {{ include "ecommerce-app.fullname" . }}

port: {{ .Values.service.port }}

Finally, here is the values.yaml file that holds all of our values.

# Default values for ecommerce-app.

# This is a YAML-formatted file.

# Declare variables to be passed into your templates.

replicaCount: 2

image:

repository: registry.gitlab.com/redopsbay/my-devops-journey

pullPolicy: Always

# Overrides the image tag whose default is the chart appVersion.

tag: "latest"

dockerSecret: ""

nameOverride: ""

fullnameOverride: ""

deployment:

env:

- name: DATABASE_NAME

value: "ecommerce-app"

- name: DATABASE_USER

value: "ecommerce"

- name: DATABASE_PASSWORD

value: "ecommerce-secure-password"

- name: DATABASE_HOST

value: "mysql-server.mysql.svc.cluster.local"

- name: DATABASE_PORT

value: "3306"

containerPort: 8000

traefik:

host: ecommerce-app.gitops.codes

entrypoints:

- web

- websecure

serviceAccount:

# Specifies whether a service account should be created

create: false

# Annotations to add to the service account

annotations: {}

# The name of the service account to use.

# If not set and create is true, a name is generated using the fullname template

name: ""

podAnnotations: {}

podSecurityContext: {}

# fsGroup: 2000

securityContext: {}

# capabilities:

# drop:

# - ALL

# readOnlyRootFilesystem: true

# runAsNonRoot: true

# runAsUser: 1000

service:

type: ClusterIP

port: 80

ingress:

enabled: false

className: "nginx"

annotations: {}

# kubernetes.io/ingress.class: nginx

# kubernetes.io/tls-acme: "true"

hosts:

- host: ecommerce-app.gitops.codes

paths:

- path: /

pathType: ImplementationSpecific

tls: []

# - secretName: chart-example-tls

# hosts:

# - chart-example.local

resources: {}

# We usually recommend not to specify default resources and to leave this as a conscious

# choice for the user. This also increases chances charts run on environments with little

# resources, such as Minikube. If you do want to specify resources, uncomment the following

# lines, adjust them as necessary, and remove the curly braces after 'resources:'.

#limits:

# cpu: 200m

# memory: 200Mi

#requests:

# cpu: 150m

# memory: 150Mi

autoscaling:

enabled: true

minReplicas: 2

maxReplicas: 100

targetCPUUtilizationPercentage: 50

# targetMemoryUtilizationPercentage: 80

nodeSelector: {}

tolerations: []

affinity: {}

# Specify values for Persistent Volume

persistentVolume:

# Specify whether persistent volume should be enabled

enabled: true

# Specify storageClassName

storageClassName: "ecommerce-app"

# Specify volumeMode defaults to Filesystem

volumeMode: Filesystem

# Specify the accessModes for this pv

accessModes:

- ReadWriteMany

persistentVolumeReclaimPolicy: Retain

storageSize: 5Gi

hostPath:

path: /data/ecommerce-app/

createUser:

enabled: false

userEmail: "alfredvalderrama@gitops.codes"

username: "admin"

password: "admin"

migrateData:

enabled: true

That's it. Your containerized application is now able to run on Kubernetes!!!
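The deployment.env list in values.yaml uses the Kubernetes env shape: a list of name/value pairs where every value is a string, which is why the port is written as "3306" rather than 3306. A minimal sketch, assuming you keep your settings in a plain Python dict (the to_k8s_env helper is hypothetical), of producing that shape:

```python
def to_k8s_env(settings):
    """Convert a {NAME: value} dict into a Kubernetes-style env list.

    Every value is converted to a string, because container env values
    must be strings (an integer such as 3306 would be rejected).
    """
    return [{"name": key, "value": str(value)} for key, value in settings.items()]


if __name__ == "__main__":
    # Hypothetical settings, mirroring the keys used in values.yaml.
    env_list = to_k8s_env({
        "DATABASE_NAME": "ecommerce-app",
        "DATABASE_HOST": "mysql-server.mysql.svc.cluster.local",
        "DATABASE_PORT": 3306,
    })
    for entry in env_list:
        print(entry)
```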

GitLab CI/CD Pipeline setup via .gitlab-ci.yml

We will now start setting up our pipeline. First, we have to determine the stages and how many environments our application will go through before reaching the live production environment. As a common standard, there are three environments:

Common environment workloads:

- development

- staging

- production

Let's now create a .gitlab-ci.yml file. I will paste the complete file to keep the story short. Refer to the comments.

# Required Variables to properly setup each environment

variables:

TARGET_IMAGE: "registry.gitlab.com/redopsbay/my-devops-journey"

RAW_KUBECONFIG: ""

DEV_HOST: "ecommerce-app-dev.gitops.codes"

QA_HOST: "ecommerce-app-qa.gitops.codes"

PROD_HOST: "ecommerce-app.gitops.codes"

TARGET_IMAGE_TAG: "latest"

CHART: "${CI_PROJECT_DIR}/.helm/ecommerce-app"

HOST: ""

TARGET_ENV: ""

USER_EMAIL: ""

DJANGO_USERNAME: ""

DJANGO_PASSWORD: ""

DJANGODB_SEED: ""

## My Default Container tool containing helm and kubectl binaries etc.

default:

image: alfredvalderrama/container-tools:latest

stages:

- bootstrap

- install

- build

- dev

- staging

- production

- deploy

- linter

# Set the KUBECONFIG based on commit branch / environment etc.

'setEnv':

stage: bootstrap

rules:

- changes:

- ".gitlab-ci.yml"

when: never

before_script:

- |

case $CI_COMMIT_BRANCH in

"dev")

RAW_KUBECONFIG=${DEV_KUBECONFIG}

TARGET_ENV="dev"

export RELEASE_CYCLE="alpha"

HOST="${DEV_HOST}"

;;

"staging")

RAW_KUBECONFIG=${QA_KUBECONFIG}

TARGET_ENV="staging"

export RELEASE_CYCLE="beta"

HOST="${QA_HOST}"

;;

"master" )

RAW_KUBECONFIG=${PROD_KUBECONFIG}

TARGET_ENV="prod"

HOST="${PROD_HOST}"

export RELEASE_CYCLE="stable"

;;

esac

- export TARGET_IMAGE_TAG="${RELEASE_CYCLE}-$(cat release.txt)"

- mkdir -p ~/.kube || echo "Nothing to create..."

- echo ${RAW_KUBECONFIG} | base64 -d > ~/.kube/config

- chmod 0600 ~/.kube/config

script:

- echo "[bootstrap] Setting up required environment variables...."

## Build image only for installation

'Build Image For Provisioning':

image:

name: gcr.io/kaniko-project/executor:debug

entrypoint: [""]

stage: install

rules:

- changes:

- ".gitlab-ci.yml"

when: never

- if: $PROVISION == 'true'

when: manual

tags:

- dev

- staging

- sandbox

- prod

extends:

- 'setEnv'

script:

- mkdir -p /kaniko/.docker

- |

cat << EOF > /kaniko/.docker/config.json

{

"auths": {

"registry.gitlab.com": {

"username": "${REGISTRY_USER}",

"password": "${REGISTRY_PASSWORD}"

}

}

}

EOF

- |

/kaniko/executor \

--context "${CI_PROJECT_DIR}/" \

--dockerfile "${CI_PROJECT_DIR}/Dockerfile" \

--destination "${TARGET_IMAGE}:${TARGET_IMAGE_TAG}" \

--cache=true

## Provision the environment. Since you provision the environment for the first time.

# Django needs to migrate the data and also create a superuser/administrator account.

'Provision':

image:

name: alfredvalderrama/container-tools:latest

stage: install

rules:

- changes:

- ".gitlab-ci.yml"

when: never

- if: $PROVISION == 'true'

when: manual

needs:

- "Build Image For Provisioning"

tags:

- dev

- staging

- sandbox

- prod

extends:

- 'setEnv'

script:

- |

helm upgrade --install ecommerce-app-${TARGET_ENV} ${CHART} \

-n ${TARGET_ENV} \

--create-namespace \

--set createUser.enabled="true" \

--set createUser.userEmail="${USER_EMAIL}" \

--set createUser.username="${DJANGO_USERNAME}" \

--set createUser.password="${DJANGO_PASSWORD}" \

--set migrateData.enabled="${DJANGODB_SEED}" \

--set traefik.host="${HOST}" \

--set dockerSecret="${REGISTRY_CREDENTIAL}" \

--set deployment.database_name="${DJANGO_DB}" \

--set deployment.database_user="${DJANGO_DBUSER}" \

--set deployment.database_password="${DJANGO_DBPASS}" \

--set deployment.database_host="${DJANGO_DBHOST}" \

--set image.tag="${TARGET_IMAGE_TAG}"

## Execute this job to validate a valid helm chart.

'Linter':

image:

name: alfredvalderrama/container-tools:latest

stage: linter

rules:

- changes:

- ".gitlab-ci.yml"

when: never

- if: $CI_PIPELINE_SOURCE == "merge_request_event" && $PROVISION != 'true'

when: always

extends:

- 'setEnv'

tags:

- dev

- staging

- sandbox

- prod

script:

- echo "[$CI_COMMIT_BRANCH] Displaying incoming changes when creating a django user..."

- |

helm upgrade --install ecommerce-app-${TARGET_ENV} ${CHART} \

-n ${TARGET_ENV} \

--create-namespace \

--set createUser.enabled="true" \

--set createUser.userEmail="${USER_EMAIL}" \

--set createUser.username="${DJANGO_USERNAME}" \

--set createUser.password="${DJANGO_PASSWORD}" \

--set migrateData.enabled="${DJANGODB_SEED}" \

--set traefik.host="${HOST}" \

--set dockerSecret="${REGISTRY_CREDENTIAL}" \

--set deployment.database_name="${DJANGO_DB}" \

--set deployment.database_user="${DJANGO_DBUSER}" \

--set deployment.database_password="${DJANGO_DBPASS}" \

--set image.tag="${TARGET_IMAGE_TAG}" \

--set deployment.database_host="${DJANGO_DBHOST}" --dry-run

- echo "[$CI_COMMIT_BRANCH] Displaying incoming changes for normal deployment...."

- |

helm upgrade --install ecommerce-app-${TARGET_ENV} ${CHART} \

-n ${TARGET_ENV} \

--create-namespace \

--set migrateData.enabled="${DJANGODB_SEED}" \

--set traefik.host="${HOST}" \

--set dockerSecret="${REGISTRY_CREDENTIAL}" \

--set deployment.database_name="${DJANGO_DB}" \

--set deployment.database_user="${DJANGO_DBUSER}" \

--set deployment.database_password="${DJANGO_DBPASS}" \

--set image.tag="${TARGET_IMAGE_TAG}" \

--set deployment.database_host="${DJANGO_DBHOST}" --dry-run

## Build Docker image

'Build':

image:

name: gcr.io/kaniko-project/executor:debug

entrypoint: [""]

rules:

- changes:

- ".gitlab-ci.yml"

when: never

- if: $CI_COMMIT_BRANCH == "master" && $PROVISION != 'true'

- if: $CI_COMMIT_BRANCH == "dev" && $PROVISION != 'true'

- if: $CI_COMMIT_BRANCH == "staging" && $PROVISION != 'true'

when: always

stage: build

tags:

- dev

- staging

- sandbox

- prod

extends:

- 'setEnv'

script:

- mkdir -p /kaniko/.docker

- |

cat << EOF > /kaniko/.docker/config.json

{

"auths": {

"registry.gitlab.com": {

"username": "${REGISTRY_USER}",

"password": "${REGISTRY_PASSWORD}"

}

}

}

EOF

- |

/kaniko/executor \

--context "${CI_PROJECT_DIR}/" \

--dockerfile "${CI_PROJECT_DIR}/Dockerfile" \

--destination "${TARGET_IMAGE}:${TARGET_IMAGE_TAG}" \

--cache=true

## Deploy Dev Environment with Helm Chart

'Deploy Dev':

image: alfredvalderrama/container-tools:latest

stage: dev

rules:

- changes:

- ".gitlab-ci.yml"

when: never

- if: $CI_COMMIT_BRANCH == "dev" && $PROVISION != 'true'

when: always

extends:

- 'setEnv'

tags:

- dev

- staging

- sandbox

- prod

script:

- echo "[DEV] Deploying DEV application"

- |

helm upgrade --install ecommerce-app-${TARGET_ENV} ${CHART} \

-n ${TARGET_ENV} \

--create-namespace \

--set migrateData.enabled="${DJANGODB_SEED}" \

--set traefik.host="${HOST}" \

--set dockerSecret="${REGISTRY_CREDENTIAL}" \

--set deployment.database_name="${DJANGO_DB}" \

--set deployment.database_user="${DJANGO_DBUSER}" \

--set deployment.database_password="${DJANGO_DBPASS}" \

--set deployment.database_host="${DJANGO_DBHOST}" \

--set image.tag="${TARGET_IMAGE_TAG}"

- kubectl rollout status deployment/ecommerce-app-${TARGET_ENV} -n ${TARGET_ENV}

## Deploy Staging Environment with Helm Chart

'Deploy Staging':

image: alfredvalderrama/container-tools:latest

stage: staging

rules:

- changes:

- ".gitlab-ci.yml"

when: never

- if: $CI_COMMIT_BRANCH == "staging" && $PROVISION != 'true'

when: always

extends:

- 'setEnv'

tags:

- dev

- staging

- sandbox

- prod

script:

- echo "[STAGING] Deploying STAGING application"

- |

helm upgrade --install ecommerce-app-${TARGET_ENV} ${CHART} \

-n ${TARGET_ENV} \

--create-namespace \

--set migrateData.enabled="${DJANGODB_SEED}" \

--set traefik.host="${HOST}" \

--set dockerSecret="${REGISTRY_CREDENTIAL}" \

--set deployment.database_name="${DJANGO_DB}" \

--set deployment.database_user="${DJANGO_DBUSER}" \

--set deployment.database_password="${DJANGO_DBPASS}" \

--set deployment.database_host="${DJANGO_DBHOST}" \

--set image.tag="${TARGET_IMAGE_TAG}"

- kubectl rollout status deployment/ecommerce-app-${TARGET_ENV} -n ${TARGET_ENV}

## Deploy Production Environment with Helm Chart

'Deploy Production':

image: alfredvalderrama/container-tools:latest

stage: production

rules:

- changes:

- ".gitlab-ci.yml"

when: never

- if: $CI_COMMIT_BRANCH == "master" && $PROVISION != 'true'

when: always

extends:

- 'setEnv'

tags:

- dev

- staging

- sandbox

- prod

script:

- echo "[PROD] Deploying PROD application"

- |

helm upgrade --install ecommerce-app-${TARGET_ENV} ${CHART} \

-n ${TARGET_ENV} \

--create-namespace \

--set migrateData.enabled="${DJANGODB_SEED}" \

--set traefik.host="${HOST}" \

--set dockerSecret="${REGISTRY_CREDENTIAL}" \

--set deployment.database_name="${DJANGO_DB}" \

--set deployment.database_user="${DJANGO_DBUSER}" \

--set deployment.database_password="${DJANGO_DBPASS}" \

--set deployment.database_host="${DJANGO_DBHOST}" \

--set image.tag="${TARGET_IMAGE_TAG}"

- kubectl rollout status deployment/ecommerce-app-${TARGET_ENV} -n ${TARGET_ENV}

The workflow of this pipeline is simple.

- First, manual intervention is needed when you want to provision an environment for the first time.

- The pipeline executes when a change is committed to the dev, staging, or master branch.

- Next, the pipeline runs a linter job when a merge request is detected.

- The image tag is determined by the release.txt file, which contains the semantic version of the release. You could use other approaches, such as basing the release version on Git tags, but for now I only want the release.txt file.
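The branch-to-environment logic in the setEnv job can be sketched in Python as follows. This is only an illustration of the mapping, not something the pipeline runs; the resolve function is hypothetical, and it assumes the staging branch deploys to its own staging environment.

```python
# Branch -> (target environment, release cycle), following the case statement in setEnv.
# Assumption: the staging branch targets a separate "staging" environment.
BRANCH_MAP = {
    "dev": ("dev", "alpha"),
    "staging": ("staging", "beta"),
    "master": ("prod", "stable"),
}


def resolve(branch, version):
    """Return the target environment, release cycle, and image tag for a branch.

    `version` is the content of release.txt (a semantic version string);
    surrounding whitespace such as a trailing newline is stripped.
    """
    if branch not in BRANCH_MAP:
        raise ValueError(f"No environment is mapped to branch: {branch}")
    target_env, cycle = BRANCH_MAP[branch]
    return {
        "target_env": target_env,
        "release_cycle": cycle,
        "image_tag": f"{cycle}-{version.strip()}",
    }


if __name__ == "__main__":
    print(resolve("dev", "1.4.0\n"))
```

With release.txt containing 1.4.0, a commit on dev would produce the tag alpha-1.4.0, and the same version promoted to master would be tagged stable-1.4.0.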

After successfully creating the .gitlab-ci.yml file, you have a complete production-grade CI/CD pipeline integrated with issue tracking!!!

Demonstration

Finally, we are at the demo part. I will make a change to my code, such as just changing the release.txt file.

Note: We will not go deeper into the Django app. The focus here is that we have a real working pipeline.

Now that the changes have been committed, the pipeline has started.

As you can see on my minikube server, the gitlab-runner is now executing the jobs.

Now, as you can see, the deployment is running and replacing the old pods with the newly built Docker image. ❤❤

That's all!!! The flow is the same for the staging and prod environments. You can easily transition your JIRA issues by including the issue ID in your Git commit message, and GitLab will automatically finish the job.

Some key sorcery for starting a DevOps career

- Make it simple!

- Carefully plan your pipeline from the start, so you don't have to recreate it from scratch.

- Make it portable, readable, and reusable!

- Learn how to read other people's code, so you can understand an infrastructure just by looking at its architecture and code.

- Be the entire IT department, so you are the LeBron James of your team.
