Normally I'm the last proponent of collecting metrics. The reason is: metrics don't tell you anything. And anything that tells you nothing is an absolute waste of time setting up. However, alerts tell you a lot. If you know that something bad is happening then you can do something about it.

The difference between alerts and metrics is knowing what's important. If you aren't collecting metrics yet, the first thing to do is decide what is a problem and what isn't. Far too often I feel like I'm answering the question:

How do I know if the compute utilization is above 90%?

The answer is: it doesn't matter, because if you knew, what would you do with that information? Almost always the answer is "I don't know" or "my director told me it was important".

⚠ So what is important

That's probably the hardest question to answer with a singular point. So for the sake of this article to make it concrete and relevant, let me share what's important for us.

Up-time requirements

We've been at an inflection point within Rhosys for a couple of years now. For Authress and Standup & Prosper we run highly reliable services. These have to be up at least 4 nines, and often we contract out for SLAs at 5 nines. But the actual up-time isn't what's relevant anymore, because, as most reliability experts know, your service can be up but still returning a 5XX here and there. This is what's known as partial degradation. You may think one 5XX isn't a problem. However, with millions of requests per day, these add up to a non-trivial amount. Even if it is just one 5XX per day, it absolutely is important if you don't know why it happened. It's one thing to ignore an error because you know why, it's quite another to ignore it because you don't.

Further, even returning 4XXs is often a concern for us. Too many 4XXs could tell us something is wrong. From a product standpoint, a 4XX means that we did something wrong in our design, because one of our users should never get to the point where they get back a 4XX. If they get back a 4XX, that means they were confused about our API or the data they are looking at. This is critically important information. Further, a 4XX could mean we broke something in a subtle way. Something that used to return a 2XX now unintentionally returning a 4XX means that we broke at least one of our users' implementations. This is very, very bad.

So, actually what we want to know is:

Are there more 400s now than there should be?

A simple example is when a customer of ours calls our API and forgets to URL-encode the path, which usually means they accidentally called the wrong endpoint. For instance, if the userId had a / in it then:

- Route: /users/{userId}/data

- Incorrect Endpoint: /users/tenant1/user001/data

- Correct Endpoint: /users/tenant1%2Fuser001/data

When this happens we could tell the caller 404, but that's actually the wrong thing to do. It's the right error code, but it conveys the wrong message. The caller will think there is no data for that user, even when there is. That's because the normal execution of the endpoint returns 404 when there is no data associated with the user or the user doesn't exist. Instead, when we detect this, we return 422: "Hey, you called a weird endpoint, did you mean to call this other one?"
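To make the encoding issue concrete, here is a minimal Python sketch. It is not our production code: the function names and the matching heuristic are hypothetical, and it only covers the single /users/{userId}/data route from the example above.

```python
from typing import Optional
from urllib.parse import quote


def build_path(user_id: str) -> str:
    """Client side: encode the user ID so an embedded "/" becomes %2F."""
    # safe="" matters: by default quote() leaves "/" unencoded.
    return f"/users/{quote(user_id, safe='')}/data"


def early_status(raw_path: str) -> Optional[int]:
    """Server side (hypothetical heuristic) for the /users/{userId}/data route.

    Returns None when the path matches the route and the normal handler should
    run, 422 when it looks like an unencoded user ID, and 404 otherwise.
    """
    segments = raw_path.strip("/").split("/")
    looks_like_route = segments[0] == "users" and segments[-1] == "data"
    if looks_like_route and len(segments) == 3:
        return None
    if looks_like_route and len(segments) > 3:
        return 422  # extra segments: probably a "/" inside the user ID
    return 404
```

With this sketch, build_path("tenant1/user001") produces the correct endpoint, while the unencoded path is flagged with a 422 instead of falling through to a misleading 404.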

A great Developer Experience (DX) means returning the right result when the user asked for something in the wrong way. If we know there is a problem, we can already return the right answer, we don't need to complain about it. However, sometimes that's dangerous. Sometimes the developer thought they were calling a different endpoint, so we have to know when to guess and when to return a 422.

In reality, when we ask Are there more 400s than there should be, we are looking to do anomaly detection on some metrics. If this is an issue we know to look at the recent code released and see when the problem started to happen.

This is the epitome of using anomaly detection. Are the requests we are getting at this moment what we expect them to be, or is there something unexpected and different happening.
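Stripped of the ML, the question can be sketched in a few lines of Python. This is a deliberately naive baseline (mean and standard deviation over recent periods), not what any AWS service does internally; the function name, the sample counts, and the default of 3 deviations are all made up for illustration.

```python
from statistics import mean, stdev
from typing import Sequence


def is_anomalous(history: Sequence[float], current: float,
                 deviations: float = 3.0) -> bool:
    """Flag `current` when it falls outside mean +/- deviations * stdev."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    baseline = mean(history)
    # Floor the spread so a perfectly flat history does not flag tiny changes.
    spread = max(stdev(history), 1.0)
    return abs(current - baseline) > deviations * spread


# Hypothetical 4XX counts per period for one endpoint.
recent_4xx_counts = [12, 9, 11, 10, 13, 10, 11, 12]
print(is_anomalous(recent_4xx_counts, 12))  # within the usual range
print(is_anomalous(recent_4xx_counts, 40))  # a spike in 4XXs
```

Note that a sudden drop to zero is flagged too, which matters just as much as a spike: no 4XXs at all can mean no traffic at all.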

To answer this question, finally we know we need some metrics. So let's take a look at our possible options.

🗳 The metric-service candidates

We use AWS heavily, and luckily AWS has some potential solutions, including DevOps Guru, Lookout for Metrics, and CloudWatch anomaly detection alarms.

So we decided to try some of these out and see if any one of them can support our needs.

🗸 The Verdict

All terrible. So we had the most interest in using DevOps Guru, the problem is that it just never finds anything. It just can't review our logs to find problems. The one case it is useful is for RDS queries and to determine if you need an index. What happens when you fix all your indexes? Then what?

After turning on Guru for a few weeks, we found nothing*. Okay, almost nothing, we found a smattering of warnings regarding the not-so-latest version of some resources being used, or permissions that aren't what AWS thinks they should be. But other than that, it was useless. You can imagine for an inexperienced team, having DevOps Guru enabled will help you about as much as Dependabot does at discovering actual problems in your GitHub repos. The surprise however, is that it is cheap.

DevOps Guru - cheap but worthless.

Then we took a look at AWS Lookout for Metrics. It promises some sort of advanced anomaly detection on your metrics. AWS Lookout is actually used for other things, not primarily metrics, so this was a surprise. And it seemed great, exactly what we were looking for. And when you look at the price, it appears reasonable at $0.75 / metric. We only plan on having a few metrics, right? So that shouldn't be a problem. Let's put this one in our back pocket while we investigate the CloudWatch anomaly detection alarms.

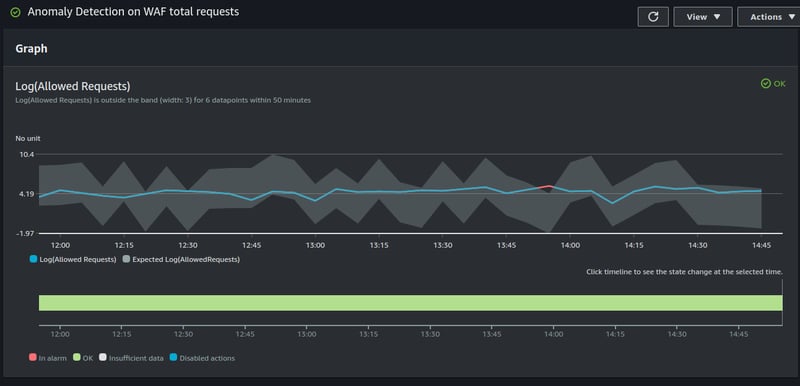

At the time of writing this article we already knew something about anomaly detection using CloudWatch Alarms. The reason is we have anomaly detection set on some of our AWS WAF (Web Application Firewalls). Here's an example of that anomaly detection:

We can see there is a little bit of red here where the results were unexpected. This looks a bit cool, although it doesn't work out of the box. Most of the time we ended up with the dreaded alarm flapping sending out alerts at all hours of the day.

To really help make this alarm useful we did three things:

- A lot of trial and error with the period and the number of datapoints needed to trigger the alarm

- Tuning the number of deviations outside the norm that counts as an issue: is a change of 1 => 2 a problem, or only 1 => 3?

- Use logarithm-based volume

Now, while those numbers on the left aren't exactly the Log(total requests), they are something like that. And this graph is the result. See logarithms are great here, because the anomaly detection will throw an error as soon as it's outside of a band. And that band is magic, you've got no control over that band for the most part. (You can't choose how to make it think, but you can choose how thick it is)

We don't really care whether there are 2k rps instead of 1.5k rps for a time window, but we do care if there are 3k rps or 0 rps. So the logarithm really makes more sense here. Differences in magnitude are what matter.
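Here is a small Python sketch of why the logarithm helps. It only illustrates the idea of a fixed-width band on log-scaled volume; the band width of 0.2 and the function names are assumptions, and the real CloudWatch band is computed by AWS, not by a fixed threshold like this.

```python
import math


def log_volume(requests_per_second: float) -> float:
    """Log-scaled volume; the +1 keeps 0 rps defined (log10(1) == 0)."""
    return math.log10(requests_per_second + 1)


def outside_band(baseline_rps: float, current_rps: float,
                 width: float = 0.2) -> bool:
    """True when the log-scaled volume moves more than `width` from baseline."""
    return abs(log_volume(current_rps) - log_volume(baseline_rps)) > width


# Against a 1.5k rps baseline: 2k stays inside the band, 3k and 0 do not.
for rps in (2000, 3000, 0):
    print(rps, outside_band(1500, rps))
```

The same band width also behaves sensibly at a tenth of the traffic (150 rps to 200 rps stays inside, 150 rps to 300 rps does not), which a fixed absolute threshold would not.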

So now that we know the technology does what we want, we can start.

⏵ Time to start creating metrics

The WAF anomaly detection alarm looks great. It isn't perfect, but hopefully AWS will teach the ML over time what is reasonable, and let's pray that it works. (I don't have much confidence in that.) But at least it is a pretty good starting point. And since we are going to be creating metrics, we can reevaluate the success afterwards and potentially switch to AWS Lookout for Metrics if everything looks good.

💰 $90k

Now that's a huge bill. It turns out we must have done something wrong, because according to AWS CloudWatch Billing and our calculation we'll probably end up paying $90k this month on metrics.

Let's quickly review, we attempted to log APM metrics (aka Application Performance Monitoring) using CloudWatch metrics. That means for each endpoint we wanted to log:

- The response status code - 200, 404, etc.

- The customer account ID - acc-001

- The HTTP Method - GET, PUT, POST

- The Route - /v1/users/{userId}

That's one metric, with these four dimensions, and at $0.30 / custom metric / month, we assumed that means $0.30 / month. However, that is a lie.

AWS CloudWatch Metrics charges you not by metric, but by each unique combination of dimension values. That means that:

- GET /v1/users/{userId} returning a 200 for customer 001

- DELETE /v1/users/{userId} returning a 200 for customer 002

Those are two different metrics. A quick calculation tells us:

- ~17 response codes used per endpoint (we heavily use, 200, 201, 202, 400, 401, 403, 404, 405, 409, 412, 421, 422, 500, 501, 502, 503, 504)

- ~5 http verbs

- ~100 endpoints

Since metrics are saved for ~15 months, using even one of these status codes in the last 15 months will add to your bill. But this math shows us the real cost: 17 × 5 × 100 = 8,500 possible metrics per customer, which at $0.30 each comes to ~$2,550 per customer per month.

If you only had 100 customers for your SaaS, that's going to cost you $255,000 per month. And we have a lot more customers than that. Thankfully we did some testing first before releasing this solution.
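The arithmetic is short enough to write down. This Python sketch just multiplies out the numbers from the list above; it assumes the worst case where every combination of status code, verb, endpoint, and customer actually gets recorded, and the constant and function names are made up for illustration.

```python
# $0.30 / custom metric / month, kept in cents to avoid float rounding.
PRICE_CENTS_PER_METRIC = 30

STATUS_CODES = [200, 201, 202, 400, 401, 403, 404, 405, 409,
                412, 421, 422, 500, 501, 502, 503, 504]
HTTP_VERBS = 5
ENDPOINTS = 100


def billable_metrics(customers: int) -> int:
    """Every unique combination of dimension values is billed as its own metric."""
    return len(STATUS_CODES) * HTTP_VERBS * ENDPOINTS * customers


def monthly_cost_usd(customers: int) -> float:
    return billable_metrics(customers) * PRICE_CENTS_PER_METRIC / 100


print(billable_metrics(1))    # 8500 metrics for a single customer
print(monthly_cost_usd(1))    # 2550.0
print(monthly_cost_usd(100))  # 255000.0
```

The customer ID is the dimension that hurts: every other number is bounded by the API design, but this one grows with the business.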

I don't know how anyone uses this solution, but it isn't going to work for us. We aren't going to pay this ridiculous extortion to use the metrics service, we'll find something else. Worse still, that means that AWS Lookout for Metrics is also not an option, because it would cost us another 75% of that per month as well, so let's just call it $5k per customer per month. Now, while I don't mind shelling out $5k per customer for a real solution to help us keep our 5-nines SLAs, it's going to have to do a lot more than just keep a database of metrics.

We are going to have to look somewhere else.

🚑 SaaS to the rescue?

I'm sure someone out there is saying "we use ________" (insert favorite SaaS solution here), but the truth is none of them support what we need.

Datadog and NewRelic were long eliminated from our allowed list because they resorted to malicious marketing phone calls directly to our personal phone numbers multiple times. That's disgusting. Even if we did allow one of those to be picked, they are really expensive.

What's worse, all the other SaaS solutions that provide APM fail in one of these ways:

- the UI/UX is not just bad, it's terrible

- they don't work with serverless

- they are more expensive than Datadog, and I don't even know how that is possible

But wait, didn't AWS release some managed service for metrics...

𓁗 Enter AWS Athena

AWS Athena isn't a solution, it's just a query on top of S3, so when we say "use AWS Athena" what we really mean is "stick your data in S3". But actually we mean:

Stick your data in S3, but do it in a very specific and painstaking way. It's so complicated and difficult that AWS wrote a whole second service to take the data in S3 and put it back in S3 differently.

That service is called Glue. We don't want to do this, we don't want something crawling our data and attempting to reconfigure it, it just doesn't make sense. We already know the data at the time of consumption. We get a timespan with some data, and we write back that timespan. Since we already know the answer, using a second service dedicated to creating timeseries doesn't make sense. (It does absolutely make sense if we had non-timeseries data, and needed to convert it to timeseries for querying, but we do not).

The real problem here however, is that every service we have would need to figure out how to write this special format to S3 so that it could be queried. Fuck.
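To give a feel for what "this special format" means, here is a minimal Python sketch of the kind of Hive-style partitioned key layout that Athena can use to prune partitions. The prefix, the partition names, and the file name are all hypothetical, and this is only the naming part: every service would still have to batch, serialize, and upload the data itself.

```python
from datetime import datetime, timezone


def partition_key(prefix: str, service: str, ts: datetime) -> str:
    """Build a Hive-style partitioned S3 key (key=value path segments)."""
    ts = ts.astimezone(timezone.utc)  # partition on UTC, whatever the caller passes
    return (
        f"{prefix}/service={service}"
        f"/year={ts:%Y}/month={ts:%m}/day={ts:%d}/hour={ts:%H}"
        f"/metrics.json"
    )


example = partition_key(
    "metrics", "api", datetime(2024, 1, 5, 13, 30, tzinfo=timezone.utc)
)
print(example)
```

Getting every team to agree on and correctly implement even this much is exactly the kind of ownership cost we wanted to avoid.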

While we could build out a Timeseries-to-S3 Service, I'd rather not. The Total Cost of Ownership (TCO) of owning services is really really high. We knew this already before we built our own statistics platform and deprecated it, we don't want to do it again.

So Athena was out. Sorry.

🔥 Enter Prometheus

And no, I'm not talking about Elasticsearch. That doesn't scale, and it isn't really managed. It's a huge pain. It's like taking a time machine back to the days of on-prem DBAs, except these DBAs work at AWS and are less accessible.

The solution is that AWS runs a managed Grafana + Prometheus solution. The true SaaS is Grafana Cloud, but AWS has a managed version, and of course these are also two different AWS services.

Apparently Prometheus is a metrics solution. It keeps track of metrics and its pricing is:

We know we aren't going to have a lot of samples (aka API requests to Prometheus), so let's focus on the storage...

$0.03/GB-Mo

Looking at a metric storage line as status code + verb + path + customer ID we get ~200B, which works out to roughly $1e-7 per request (request + storage). For $100 / month that would afford us 847,891,077 API requests per month. (This assumes the default retention time of ~150 days.) Let's call it 1B requests per month, that's a sustained ~400 rps. Now, while that is a fraction of where we are at, the pricing for this feels so much better. We pay only for what we use, and the amortized cost per customer also makes a lot more sense.
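For anyone who wants to check the numbers, here is a small Python sketch of the arithmetic. It assumes a 30-day month, 1 GB = 10^9 bytes, and ~150 days rounded to 5 billing months; the function names are made up. It only derives the storage part of the per-request cost, and reads the all-in cost back from the $100 and 847,891,077 figures above.

```python
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # assumption: 30-day month
STORAGE_USD_PER_GB_MONTH = 0.03        # $0.03/GB-Mo
BYTES_PER_SAMPLE = 200                 # ~200B per metric line
RETENTION_MONTHS = 5                   # ~150 days


def storage_cost_per_request() -> float:
    """Storage cost of one ~200B sample kept for the whole retention window."""
    gigabytes = BYTES_PER_SAMPLE / 1e9
    return gigabytes * STORAGE_USD_PER_GB_MONTH * RETENTION_MONTHS


def sustained_rps(requests_per_month: float) -> float:
    return requests_per_month / SECONDS_PER_MONTH


# All-in cost per request implied by the figures in the text.
all_in_cost_per_request = 100 / 847_891_077

print(storage_cost_per_request())  # storage share of the cost
print(all_in_cost_per_request)     # roughly 1e-7
print(sustained_rps(1e9))          # a bit under 400 rps
```

Storage turns out to be the smaller share of that ~$1e-7; the rest is the per-request charge.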

So we are definitely going to use Prometheus it seems.

For how we did that exactly, check out Part 2: AWS Advanced: Serverless Prometheus in Action

If you liked this article come join our Community and discuss this and other security related topics!
