If you aim to write fast Javascript avoid intermediate arrays when transforming data or making an algorithm. This could happen both with imperative and functional code. Always ask yourself a question while writing code - are there any intermediate arrays I could avoid.

Often I see a common pattern filter + map:

const arr = Array.from({length: 100}, () => ({num: Math.random()}));

arr.filter(n => n.num > .5).map(n => n.num * 2);

Sometimes the chain is quite long, hosting several filter/map/sort in various configurations.

Replace the chain with Array#reduce() which in mostly all cases will give you better performance over any chain of other methods, since you remove the array created by Array#filter():

arr.reduce((r, n) => (n.num > .5 && r.push(n.num * 2), r), []);

@tracygjg asked in a comment why not to use:

arr.reduce((r, n) => n.num > .5 ? [...r, n.num * 2] : r, []);

Thank @tracygjg for this one, that's another great example where you create an intermediate array, moreover an array is created for every element in the result array!

So basically each time we want to add an element to the result, a bigger array is created thus the bigger the source array the slower the solution.

Moreover the spread syntax is used which should be avoided too if you want a performant solution (my next post is about that). Let's benchmark all the 3 options:

const $chunk = Array.from({length: 10}, () => ({num: Math.random()}));

const $input = [];

// @benchmark filter + map

$input.filter(n => n.num > .5).map(n => n.num * 2);

// @benchmark reduce

$input.reduce((r, n) => (n.num > .5 && r.push(n.num * 2), r), []);

// @benchmark Tracy Gilmore

$input.reduce((r, n) => n.num > .5 ? [...r, n.num * 2] : r, []);

` Chrome/124

-------------------------------------------------------------------------------------------

> n=10 | n=100 | n=1000 | n=10000

reduce ■ 1.00x x10m 355 | ■ 1.00x x1m 155 | ■ 1.00x x100k 263 | ■ 1.00x x10k 431

filter + map 1.88x x10m 669 | 1.74x x1m 269 | 1.41x x100k 370 | 1.55x x1k 67

Tracy Gilmore 3.32x x1m 118 | 20.13x x100k 312 | 196.20x x1k 516 | 893.27x x10 385

------------------------------------------------------------------------------------------- `

` Firefox/126

-------------------------------------------------------------------------------------------

> n=10 | n=100 | n=1000 | n=10000

reduce ■ 1.00x x10m 647 | ■ 1.00x x1m 433 | ■ 1.00x x100k 390 | ■ 1.00x x10k 470

filter + map 1.41x x1m 91 | 1.27x x100k 55 | 2.51x x10k 98 | 1.32x x10k 620

Tracy Gilmore 1.90x x1m 123 | 9.70x x10k 42 | 175.64x x1k 685 | 359.57x x10 169

------------------------------------------------------------------------------------------- `

As you can see with 10000 array items we could have a hundreds of times slower solution if we don't care enough about avoiding intermediate arrays.

In the next post we will discuss how to avoid intermediate arrays while parsing strings.

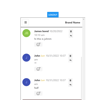

Top comments (6)

Alexander,

You are absolutely correct - no argument. Although, I do wonder if creating intermediate arrays is such a bad thing.

1) JS has a garbage collection mechanism to free up the memory.

2) Mutating the same array, even by appending, can have a downside on performance.

That said, if performance is a major concern, is JS the best language?

On the backend there's freedom of choice, but in a browser we have JS only afaik. My concern isn't about memory but rather CPU usage, modern websites became too heavy for low/mid mobile devices, also complex SPAs suffer on desktops even. That's why in my opinion we should write optimized JS code from the beginning.

Regarding mutating arrays - in most cases it's faster than creating a new copy 🤷♂️

Garbage collection is far from free if you want to avoid glitching and stalls when the GC runs.

Agreed, using a garbage collector is not cost free.

Hi Alexander,

Was there some reason you used,

instead of,

? Also, how would the alternative affect the results?

yes, it would affect in a negative way, you create a lot of intermediate arrays again (a new array for each element in the original array), I've included your example into my post and benchmarked, thank you a lot for that. If you feel something wrong about it, please notify me.