Keras provides a powerful abstraction for recurrent layers such as RNN, GRU and LSTM for Natural Language Processing. When I first started learning about them from the documentation, I couldn't clearly understand how to shape the input data, how the various attributes of the layers affect the outputs, and how to compose these layers with the provided abstraction.

Having learned it through experimentation, I wanted to share my understanding of the API with visualizations so that it helps anyone else having trouble.

Single Output

Let's take a simple example of encoding the meaning of a whole sentence, "I am Groot", using an RNN layer in Keras.

Credits: Marvel Studios


To use this sentence in an RNN, we first need to convert it into numeric form. We could use one-hot encoding, pretrained word vectors, or word embeddings learned from scratch. For simplicity, let's assume we used some word embedding to convert each word into 2 numbers.

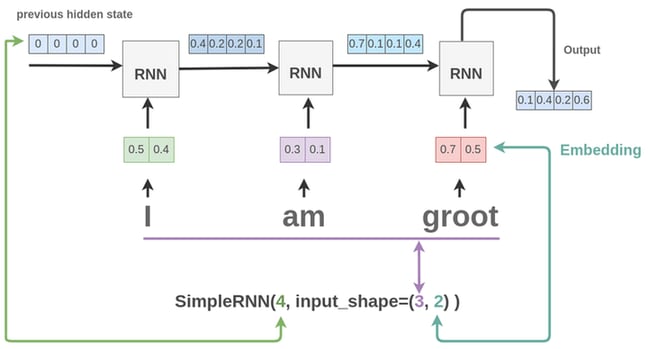
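As a rough sketch of this step, the snippet below looks up a vector of 2 numbers for each word and stacks them into the (batch, time-steps, features) array that a Keras recurrent layer expects. The vocabulary and the embedding values are made up for illustration; in practice they would come from pretrained vectors or a learned embedding.

```python
import numpy as np

# Hypothetical toy embedding table: one row of 2 numbers per word.
# The values are invented for illustration only.
vocab = {"i": 0, "am": 1, "groot": 2}
embedding_table = np.array([
    [0.1, 0.2],   # "i"
    [0.3, 0.4],   # "am"
    [0.5, 0.6],   # "groot"
], dtype="float32")

sentence = "I am Groot"
token_ids = [vocab[word] for word in sentence.lower().split()]

# Look up each word: 3 time-steps, 2 features per step.
embedded = embedding_table[token_ids]

# Recurrent layers expect a leading batch dimension.
batch = embedded[np.newaxis, ...]

print(embedded.shape)  # (3, 2)
print(batch.shape)     # (1, 3, 2)
```

The trailing `(3, 2)` of the batch shape is what gets passed as `input_shape` to the layer.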

Now, to pass these words into an RNN, we treat each word as a time-step and the embedding as its features. Let's build an RNN layer to pass these into:

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import SimpleRNN

model = Sequential()

model.add(SimpleRNN(4, input_shape=(3, 2)))

Here is what the parameters mean and why they were set this way:

- input_shape=(3, 2):

  - We have 3 words: I, am, groot. So the number of time-steps is 3. The RNN block unfolds 3 times, so we see 3 blocks in the figure.

  - For each word, we pass the word embedding of size 2 to the network.

- SimpleRNN(4, ...):

  - This means we have 4 units in the hidden layer.

  - So, in the figure, we see how a hidden state of size 4 is passed between the RNN blocks.

  - For the first block, there is no previous output, so the previous hidden state is set to [0, 0, 0, 0].
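To make the unfolding concrete, here is a minimal NumPy sketch of what a simple RNN computes at each time-step: the new hidden state is the tanh of the current input times an input weight matrix, plus the previous hidden state times a recurrent weight matrix, plus a bias. The weights are random placeholders rather than anything Keras would produce, and the names `W_x`, `W_h` and `b` are my own.

```python
import numpy as np

rng = np.random.default_rng(0)

time_steps, features, units = 3, 2, 4

# One sentence: 3 words, each a vector of 2 numbers (random stand-ins).
x = rng.normal(size=(time_steps, features))

# Randomly initialised parameters, named here for illustration.
W_x = rng.normal(size=(features, units))  # input -> hidden
W_h = rng.normal(size=(units, units))     # previous hidden -> hidden
b = np.zeros(units)

# No previous output exists for the first block, so start from zeros.
h = np.zeros(units)

for t in range(time_steps):
    # The same weights are reused at every time-step.
    h = np.tanh(x[t] @ W_x + h @ W_h + b)

print(h.shape)  # (4,)

# Parameter count for this setup: 2*4 + 4*4 + 4 = 28
n_params = W_x.size + W_h.size + b.size
print(n_params)  # 28
```

The loop runs 3 times, once per word, which corresponds to the 3 unfolded blocks in the figure.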

Thus, for a whole sentence, we get a vector of size 4 as output from the RNN layer, as shown in the figure. You can verify this by printing the shape of the output from the layer.

import tensorflow as tf

from tensorflow.keras.layers import SimpleRNN

x = tf.random.normal((1, 3, 2))

layer = SimpleRNN(4, input_shape=(3, 2))

output = layer(x)

print(output.shape)

# (1, 4)

As seen, we create a random batch of input data with 1 sentence having 3 words and each word having an embedding of size 2. After passing it through the SimpleRNN layer, we get back a representation of size 4 for that one sentence.

This can be combined with a Dense layer to build an architecture for something like sentiment analysis or text classification.

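from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, SimpleRNN

model = Sequential()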

model.add(SimpleRNN(4, input_shape=(3, 2)))

model.add(Dense(1))

Multiple Output

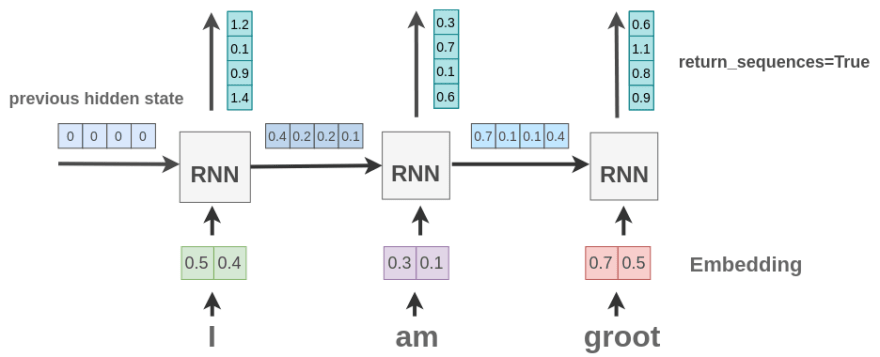

Keras provides a return_sequences parameter to control the output from the RNN layer. If we set it to True, the output from every unfolded RNN cell is returned instead of only the output from the last cell.

model = Sequential()

model.add(SimpleRNN(4, input_shape=(3, 2), return_sequences=True))

As seen above, we get an output vector of size 4 for each word in the sentence.

This can be verified with the code below, where we send one sentence with 3 words and an embedding of size 2 for each word. The layer gives us back 3 outputs, one vector of size 4 for each word.

import tensorflow as tf

from tensorflow.keras.layers import SimpleRNN

x = tf.random.normal((1, 3, 2))

layer = SimpleRNN(4, input_shape=(3, 2), return_sequences=True)

output = layer(x)

print(output.shape)

# (1, 3, 4)
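The relationship between the two modes can be sketched in plain NumPy. The `simple_rnn` helper below is a hypothetical stand-in for the layer, not Keras code: it keeps every hidden state when `return_sequences` is true and only the last one otherwise. With the same weights, the last time-step of the full sequence matches the single output.

```python
import numpy as np

def simple_rnn(x, W_x, W_h, b, return_sequences=False):
    """Toy forward pass of a simple RNN over a batch.

    x has shape (batch, time_steps, features).
    """
    batch, time_steps, _ = x.shape
    units = W_h.shape[0]
    h = np.zeros((batch, units))  # initial hidden state
    states = []
    for t in range(time_steps):
        h = np.tanh(x[:, t, :] @ W_x + h @ W_h + b)
        states.append(h)
    if return_sequences:
        # One hidden state per time-step: (batch, time_steps, units)
        return np.stack(states, axis=1)
    # Only the final hidden state: (batch, units)
    return h

rng = np.random.default_rng(0)
x = rng.normal(size=(1, 3, 2))
W_x = rng.normal(size=(2, 4))
W_h = rng.normal(size=(4, 4))
b = np.zeros(4)

all_steps = simple_rnn(x, W_x, W_h, b, return_sequences=True)
last_step = simple_rnn(x, W_x, W_h, b, return_sequences=False)

print(all_steps.shape)  # (1, 3, 4)
print(last_step.shape)  # (1, 4)
print(np.allclose(all_steps[:, -1, :], last_step))  # True
```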

TimeDistributed Layer

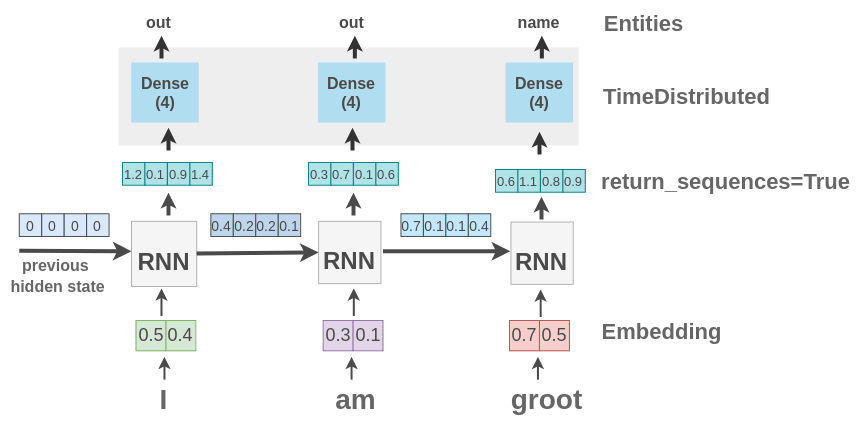

Suppose we want to recognize entities in a text. For example, in our text "I am Groot", we want to identify "Groot" as a name.

In the previous section, we saw how to get an output for each word in the sentence. Now we need some way to classify the output vector from the RNN cell for each word. For simple cases such as text classification, we use a Dense() layer with softmax activation as the last layer.

Similarly, we can apply a Dense() layer to multiple outputs from the RNN layer through a wrapper layer called TimeDistributed(). It applies the Dense layer to each output and gives us class probability scores for the entities.

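from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, SimpleRNN, TimeDistributed

model = Sequential()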

model.add(SimpleRNN(4, input_shape=(3, 2), return_sequences=True))

model.add(TimeDistributed(Dense(4, activation='softmax')))

As seen, we take a 3-word sentence and classify the RNN output for each word into 4 classes using a Dense layer. These classes can be entities such as name, person, location, etc.
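Conceptually, wrapping a Dense layer in TimeDistributed means the same weights are applied to the output at every time-step. The NumPy sketch below illustrates that idea with random stand-in values for the RNN outputs and the Dense weights; it is not how Keras implements the wrapper internally.

```python
import numpy as np

def softmax(z):
    # Subtract the max for numerical stability.
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(0)

# Stand-in for the RNN output with return_sequences=True:
# 1 sentence, 3 words, hidden vector of size 4 per word.
rnn_outputs = rng.normal(size=(1, 3, 4))

# A single set of Dense weights mapping 4 hidden units to 4 classes.
W = rng.normal(size=(4, 4))
b = np.zeros(4)

# Apply the same Dense + softmax to each time-step separately.
per_word = [softmax(rnn_outputs[:, t, :] @ W + b) for t in range(3)]
probs = np.stack(per_word, axis=1)

print(probs.shape)  # (1, 3, 4)
print(np.allclose(probs.sum(axis=-1), 1.0))  # True
```

Each of the 3 words ends up with its own probability distribution over the 4 classes.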

Stacking Layers

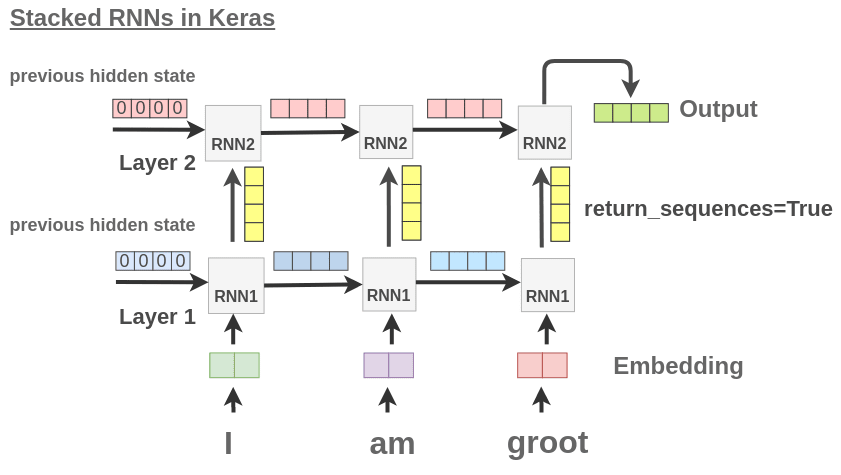

We can also stack multiple recurrent layers one after another in Keras.

model = Sequential()

model.add(SimpleRNN(4, input_shape=(3, 2), return_sequences=True))

model.add(SimpleRNN(4))

The figure above illustrates the behavior of this code.

Insight: Why do we usually set return_sequences to True for all layers except the final?

Since the second layer needs an input at every time-step from the first layer, we set return_sequences=True for the first SimpleRNN layer. For the second layer, we usually set it to False if we are only doing text classification. If our task is NER prediction, we can set it to True in the final layer as well.
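The shape requirement can be illustrated with a small NumPy sketch. The `rnn_layer` helper is hypothetical and uses random weights; it only mimics the shapes a recurrent layer consumes and produces. The first layer must emit a 3D array of (batch, time-steps, units) because the second layer iterates over time-steps.

```python
import numpy as np

rng = np.random.default_rng(0)

def rnn_layer(x, units, return_sequences=False):
    """Toy recurrent layer with random weights; x must be 3D."""
    if x.ndim != 3:
        raise ValueError(
            f"expected 3D input (batch, time, features), got {x.ndim}D"
        )
    batch, time_steps, features = x.shape
    W_x = rng.normal(size=(features, units))
    W_h = rng.normal(size=(units, units))
    h = np.zeros((batch, units))
    states = []
    for t in range(time_steps):
        h = np.tanh(x[:, t, :] @ W_x + h @ W_h)
        states.append(h)
    return np.stack(states, axis=1) if return_sequences else h

x = rng.normal(size=(1, 3, 2))

# The first layer keeps every time-step so the second has a sequence to read.
first = rnn_layer(x, units=4, return_sequences=True)
second = rnn_layer(first, units=4)
print(first.shape)   # (1, 3, 4)
print(second.shape)  # (1, 4)

# Without return_sequences, the first output is 2D and cannot be stacked on.
try:
    rnn_layer(rnn_layer(x, units=4), units=4)
    stacking_failed = False
except ValueError:
    stacking_failed = True
print(stacking_failed)  # True
```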


