ETL Jobs

ETL stands for extract, transform, and load. And this is the most common paradigm for combining data from various systems into a single database, data store, or warehouse for legacy storage or analytics.

Extraction

Extraction is the process of retrieving data from one or more sources. Following data retrieval, ETL is to compute work that loads the data into a staging area and prepares it for the next phase.

Transformation

Transformation is the process of mapping, reformatting and other operations to prepare data for consumption.

Loading

Loading involves successfully inserting the transformed data into the target database, data store or data warehouse.

All of this work is processed in what the business intelligent developers call an ETL job.

AWS Glue

AWS Glue is the key service that integrates data over a data lake, data warehouse, and purpose-built data stores which simplifies data movements from multiple sources

AWS Glue Studio

AWS Glue Studio is an easy-to-use graphical interface for creating, running, and monitoring AWS Glue extract, transform, and load (ETL) jobs.

Olive wants to purchase a finger ring with her birth stone.

The details of Birth stones and ring prices are stored in two DynamoDB tables

Now, We are going to create an ETL job using AWS Glue Studio which inner join two DynamoDB tables Birthstone and RingsTable on condition RingsTable.RingStoneName = Birthstone.Stone Name

After that, apply a Query on the result table to find the ring stone and its price matching to Olive's Birth Month and then save the result to the s3 bucket named "gemstonejobresult"

We use git for the source code management so finally we are going to create a remote git repository on AWS Code Commit and push the job to the git repository

Prerequisites

- Two tables created using AWS DynamoDB Service: Birthstone, RingsTable

(Recipe: https://dev.to/aws-builders/cook-a-recipe-with-aws-dynamo-db-table-1kn1)

- S3 Bucket to store the result - gemstonejobresult

- A git repository on AWS Code commit - gemstone_job_repository

- An IAM role named AWSGlueServiceRoledemo- with permission to your Amazon S3 sources, targets, temporary directory, scripts, and any libraries used by the job.

Lets set up the Prerequisites first

1 : DynamoDB Tables

Here, I have created two Tables named "Birthstone" and "Birthstone" and some items to them

Make sure to enable PITR on both the tables

The Table schema details provided below

Table Data

2: S3 Bucket for Storing the Query Result

- Navigate to S3 console

Click Create Bucket button

You will be navigated to bucket creation page

Specify a unique name to the bucket, Leave the rest of the fields to default values

- Scroll down and click create bucket button

- You will be navigated back to bucket list page on S3 Console and the newly created bucket will be listed here

3: A git repository on AWS Code commit

AWS Code commit

- AWS CodeCommit is a fully-managed source control service that makes it easy for companies to host secure and highly scalable private Git repositories.

- CodeCommit eliminates the need to operate your own source control system or worry about scaling its infrastructure.

Repository

- In CodeCommit, the fundamental version control object is a repository.

- It's where you keep your project's code and files safe. It also keeps track of your project's history, from the first commit to the most recent changes.

- You can collaborate on a project by sharing your repository with other users.

Before you can push changes to a CodeCommit repository, you must configure an IAM user in your Amazon Web Services account, or set up access for federated access or temporary credentials.

DO NOT USE ROOT USER

- Navigate to code commit console

- Click Create Repository button to create a git repository for storing our code

- Specify the repository name, I am naming it as "gemstone_job_repository" and click create repository button

Lets Create a Readme.md file and commit it to the repository, Click Create file button

Add some data to the file and save the file as ReadMe.md

The file will be saved to the main branch

Specify the Author name,Email address and commit message (Though optional, its a good practice to provide commit message)

Click the button commit changes

Branches in git

Branches can be used to develop new features, save a specific version of your project from a specific commit, and much more.

When you create your first commit in aws code commit console , a default branch main is created for you

Now, we have created a repository on aws console , made a first commit to it by saving a ReadMe file to the main branch

4: IAM role

- Navigate to IAM Console,

- Click on Roles link on Left Pane

- Click Create Role button to create a role

- Select AWS Service as Trusted entity type

- Select glue option from Use cases for other services dropdown

- Add the below permissions to the role and click Next button

- Specify a role name "AWSGlueServiceRoledemo" and Click Create Role button

Create an ETL job using AWS Glue Studio to inner join DynamoDB Tables

- Navigate to AWS Glue Studio from aws console

- Click Jobs from Left pane

- You will be navigated to Jobs page, where you can create ETL Jobs

By default, Visual with source and target option would be selected

Select Source as DynamoDB and Target as Amazon S3 in Create job

Click Create button

- An untitled job with the source as DynamoDB and target as Amazon S3 will be created for you. And You will be navigated to the page of untitled job where you will be able to edit the configuratons

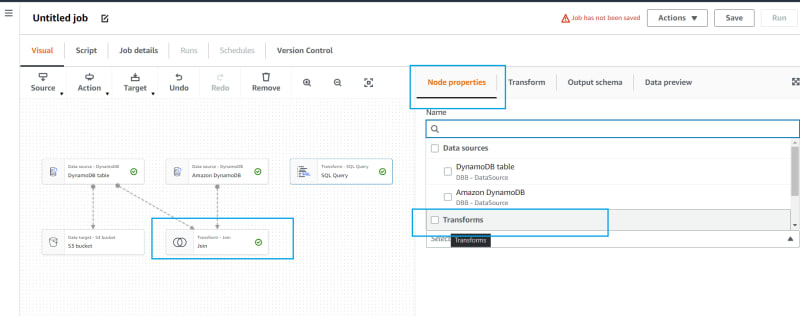

Visual Tab

- First You will be on the Visual tab

Customers can create data integration jobs using the Amazon Glue API from a JSON object that displays a visual step-by-step workflow thanks to the API offered by AWS Glue.

Clients can then work with these jobs using the visual editor in Amazon Glue Studio.

Now we are going to create a job which reads data from two dynamodb tables BirthStone table and Ring table.

We are going to inner join these two tables based on name attribute

and apply a query to find birtstone suitable for Olive and its priceFirst we will add the required Source blocks, Action blocks and Target Blocks

-

Store the Query result in S3 bucket

-

Nodes Required:

- Two DataSource DynamoDB (RingsTable, Birthstone)

- Transfrom Join (For applying inner join)

- SQL Query ( For querying the result table)

- Data target - S3 Bucket

-

Nodes Required:

Click on the Source and add one more DynamoDB table

Remove the ApplyMapping Block, if its already there, since we don't need it for this job

(Click on the ApplyMapping Block and Click Remove)From the Action, Select Join and Add it

From the Action, Select SQL Query and Add it

S3 bucket will be there on the flow as target , leave it as it is

Now, Lets Configure the flow

Source Nodes

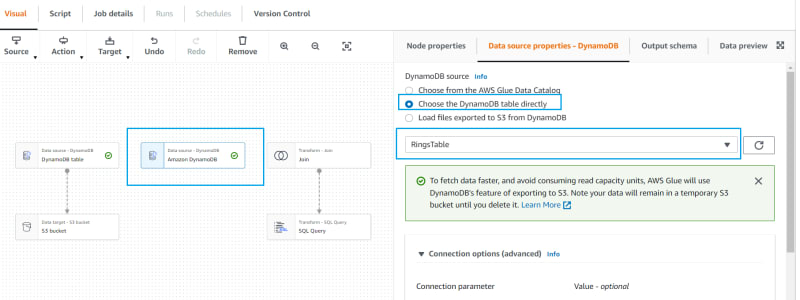

- Click on One of the Data Source DynamoDB nodes,

- On the Right pane, configure the node properties as shown in the picture below

Select the option Choose the Dynamodb Table directly radio button for DynamoDB source

Select the Birthstone table from the Dropdown, Make sure PITR is enabled for the Birth stone table , if not the table will not be populated on the dropdown, (If you do not see the table in the dropdown, Go to the Dynamodb service, enable PITR for the Birthstone table and Ring Table. Then Refresh this page to get the tables on this dropdown)

Click on the second DynamoTable Data source node,

On the Right pane, configure the node properties as shown in the picture below

- Now, We have configured the Source Nodes successfully

Action Nodes

-

- Click on the Transform join node

- On the Right pane, Click on the Node Properties Tab

- Select the two DynamoDB tables as Node Parents

- Click on the Transform Tab, Make sure Inner join is selected as Join Type, Click Add Condition button

- Specify the inner join condition as shown in the image below

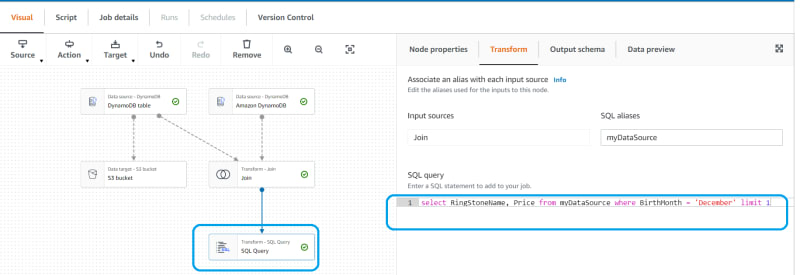

- Click on Transform SQL Query node

- On the Right pane, Click on the Node Properties Tab

- Select Transform Check box from the Node Parents dropdown list, You can see Join node getting selected

Click on the Transform Tab to specify the query on the Inner joined Table

As You see here, Inner Joined table is alised as myDataSource

Now Lets Write the SQL Query to generate the Birth Stone name and Price of the Birst stone matching to olive's Birth Month

- Olive's Birth Month is December since she is born on a christmas day , Lets Create a query to get the Birth Stone gem and its price matching to the month

select RingStoneName, Price from myDataSource where BirthMonth = 'December' limit 1

Yes, Now we have configured Transform nodes successfully

Target Node

- We need to store the query result in the s3 bucket

Click on the Data target - S3 bucket node

On the Right pane, Click on the Node Properties Tab and Tick SQL Query checkbox

- Click on Data Target Properies - S3 Tab

- Configure it as shown in the below image

- As you see, Format should be Parquet

The bucket location should be selected from s3 so that a Parquet file with the result data will be saved on the S3 bucket once the job execution is completed successfully

After all the configurations, The Visual Tab will be seen as below image

Script Tab

- Based on the Job Configuration, Python script will be generated on the Script tab

Job details

Now, Navigate to Job details tab to specify the job details

By default, Job name would be Untitled, Specify a job name in the Name Field.

Specify a description as well

Select the AWSGlueServiceRole-demo which we already created as a part of prerequisites

Leave the remaining to default settings

Click Save button to save the Job

Version Control Tab

Navigate to Version Control Tab

Here, You need to do the git configurations

Select AWS Code commit option for git service

In the Repository Configuration section, choose the git repository that we created in aws code commit (Refer Prerequisites)

Choose the branch as main

Click Save button on the top right

Click the Actions Button, Choose Push to repository option

The job will be pushed to the repository and a JSON file will be saved on to the code repository.

Navigate to aws code commit and verify if files are saved on to the repository

Executing the ETL Job and Verifying the result in S3 bucket

Once you have saved the job, its time to Run the job

- Click the Run button on the Top Right pane

- You will be getting the following message

- Navigate to Runs Tab to see the job status

Once the Run status becomes succeeded, Navigate to S3 Console and click on the gemstonejobresult bucket

You will be able to see the result saved as a parquet file

- Download the Parquet file and save the file locally by clicking the Download button

- Open the website https://www.parquet-viewer.com/ to read results from parquet file

- Now You will be able to view the result

Reading the Parquet File object using S3 select

Alternatively, You can Query the Parquet file with S3 select

Navigate to S3 Console and click on the gemstonejobresult bucket

Click on the Parquet file name link where the result is saved

Now, Click the Object Actions button and from the list, select the Query With S3 Select and give a click

You will be navigated to Query with S3 Select Page, Now by default Apache Parquet will be chosen in input settings, Keep it as it is

You can choose the Format in Output settings.

S3 Select allows you to specify how the query results are serialized in the output. You can choose formats like JSON or CSV. This determines how the data will be structured in the output files.

- Lets choose JSON here so that the result of the query will be displayed in JSON Format

- We are going to read the Parquet File using the below Select Query which is displayed in the query editor by default.

SELECT * FROM s3object s LIMIT 5

- Now Click Run SQL query button to execute the query

- Once the Query is executed successfully, You will be able to view the result in JSON format (The format chosen in Output settings)

- You can download the result by clicking the Download results button

Yaay , We found it , Olive needs to purchase a Turquoise ring and its price is 612$

And that was Olive’s story.

Challenge Task

Set up an ETL job by following the above instructions

Find out the month corresponding to the Birth stone which is priced highest

Post a screenshot of the answer in comment section

Top comments (1)

Awesome