What is Robotic Data Automation (RDA)

Robotic Data Automation (RDA) is a new paradigm to help automate data integration and data preparation activities involved in dealing with machine data for Analytics and AI/Machine Learning applications. RDA is not just a framework, but also includes a set of technologies and product capabilities that help implement the data automation.

RDA enables enterprises to operationalize machine data at scale to drive AI & analytics-driven decisions.

RDA has broad applicability within the enterprise realm, and to begin with, CloudFabrix took the RDA framework and applied it to solve AIOps problems – to help simplify and accelerate AIOps implementations and make them more open and extensible.

RDA automates repetitive data integration, cleaning, verification, shaping, enrichment, and transformation activities using data bots that are invoked in succession in “no-code” data workflows or pipelines. RDA makes it easy to move data in and out of AIOps systems, thereby simplifying and accelerating AIOps implementations that would otherwise depend on numerous manual data integrations and professional services activities.

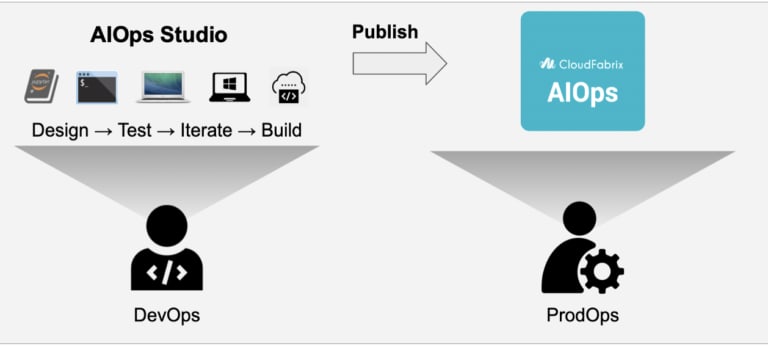

To work with RDA workflows, CloudFabrix provides a collaborative integrated development environment (IDE) called AIOps Studio, which is an integral part of all CloudFabrix solutions. Using AIOps Studio, developers, services teams, and implementation personnel can design, author, and iterate on data workflows, then publish the finalized workflows to production systems, where they take effect.

Why Is RDA Needed?

Artificial Intelligence for IT Operations (AIOps) requires processing vast amounts of data obtained from various hybrid IT data sources that are spread across on-premises, cloud, and edge environments. This data comes in various formats and delivery modes. Additionally, the results and outcomes of such data processing also need to be exchanged with other tools in the IT ecosystem (e.g., ITSM, closed-loop automation, collaboration tools, and BI/reporting tools).

All of this requires integrating, ingesting, preparing, verifying, cleaning, transforming, shaping, analyzing, and moving data in and out of AIOps systems in an efficient, reusable, and scalable manner. These essential tasks are often overlooked in AIOps implementations, which causes significant delays and increases the cost of AIOps projects.

Challenges

The key challenges in data preparation and data integration activities when implementing AIOps projects include:

- Different data formats (text/binary/json/XML/CSV), data delivery modes (streaming, batch, bulk, notifications), programmatic interfaces (APIs/Web development/Webhooks/Queries/CLIs)

- Complex data preparation activities involving integrity checks, cleaning, transforming, and shaping the data (aggregating/filtering/sorting)

- Raw data often lacks application or service context, requiring real-time data enrichment bringing in context from external systems

- Implementing data workflows requires specialized programming/data science skills

- Changes in source or destination systems require rewriting/updating connectors

Traditional Approach of Data Handling in AIOps:

In the traditional approach, AIOps vendors provide a set of out-of-the-box integrations. Once you connect the AIOps software to your data sources, you are largely at the mercy of the vendor for how your data is used and processed to produce results and outcomes.

- Black box approach of data acquisition, processing, and integration

- Use cases and scenarios limited to what the platform supports

- Integrations mostly predefined/hard coded limiting reuse

- Complex scripting modules or cookbooks requiring specialized programming skills (JavaScript, Python, etc.)

- Difficult to bring in external integrations for intermittent data processing (ex: enrichment)

- Difficult to access data in a programmatic way for complementary functions (ex: data access for scripting, reporting, dashboarding, automation etc.)

These are all inhibitors to effective AIOps implementations because they add delays and costs (manual data prep/handling activities).

Need of the Hour: Robotic Data Automation for AIOps

Robotic Data Automation (RDA), a key enabler for AIOps 2.0

RDA automates DataOps, similar to how RPA automated business processes. RDA is an integral part of an AIOps solution, providing augmented data preparation and integration capabilities. RDA is both a data automation framework and a toolkit to accelerate and simplify all data handling in AIOps implementations.

Highlights

- Implement No-code Data Pipelines using Data bots

- Native AI/ML bots

- CFXQL – Uniform Query Language

- Inline Data Mapping

- Data Integrity Checks

- Data masking, redaction, and encryption

- Data Shaping: Aggregation/Filtering/Sorting

- Data Extraction/Metrics Harvesting

- Synthetic Data generation
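
To make the masking and redaction highlight concrete, here is a minimal Python sketch of what such a data-protection step might do to a single record. It is not RDA's implementation: the function names, the field names (`account_id`, `message`, `user`), and the salt are all hypothetical, and the last helper uses a salted one-way hash (pseudonymization) as a simple stand-in rather than real encryption.

```python
import hashlib
import re

# Simple pattern for things that look like email addresses (illustrative only).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def mask_value(value: str, visible: int = 4) -> str:
    """Replace all but the last `visible` characters with '*'."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


def redact_emails(text: str) -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_RE.sub("[REDACTED]", text)


def pseudonymize(value: str, salt: str) -> str:
    """Salted SHA-256 digest: values stay joinable but are no longer readable.

    This is hashing, not encryption; it cannot be reversed with a key.
    """
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()[:16]


def protect_record(record: dict, salt: str = "demo-salt") -> dict:
    """Apply all three protections to a copy of a (hypothetical) log record."""
    out = dict(record)
    out["account_id"] = mask_value(record["account_id"])
    out["message"] = redact_emails(record["message"])
    out["user"] = pseudonymize(record["user"], salt)
    return out


record = {
    "account_id": "1234567890",
    "message": "Password reset requested by bob@example.com",
    "user": "bob",
}
print(protect_record(record))
```

In a no-code pipeline, each of these helpers would correspond to a configured bot rather than hand-written code; the sketch only shows the kind of transformation involved.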

Benefits

- Simplify and Accelerate AIOps implementations

- Reduce time, effort, and costs tied to data prep and integrations

- Suitable for DevOps/ProdOps personnel (no need of data scientist skills)

Example Use Cases and Scenarios

- Log Clustering: Ingest app logs from cloud and on-prem, run ML models to cluster logs, push results to Kibana/CFX dashboards

- CMDB Synchronization: Take latest asset inventory from CFX and push it to CMDB.

- E-Bonding of tickets from partner/subsidiary ITSM to customer’s ITSM (Ex: BMC incidents to ServiceNow)

- Incident NLP Classification: Ingest tickets from ServiceNow, do NLP classification with OpenAI (GPT-3) and enrich tickets back in ServiceNow

- Anomaly Detection: From Prometheus (or any monitoring tool), get historical CPU usage data for a node (Hourly). Apply regression and send a message on Slack with a list of anomalies as an attachment.

- Ticket Clustering: Take last 24-hrs incidents from ServiceNow, apply clustering on tickets and push results to a new dataset for visualization in CFX dashboard.

- Change Detection: Take baseline inventory of AWS EC2 VMs and compare against current state to highlight unplanned changes.
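
As an illustration of the Change Detection scenario, the following Python sketch compares a saved baseline inventory against the current state and reports additions, removals, and modified fields. It is a plain-Python approximation under stated assumptions, not an RDA bot: the `detect_changes` function, the `InstanceId` key, and the sample rows are all hypothetical.

```python
def detect_changes(baseline: list, current: list, key: str = "InstanceId") -> dict:
    """Compare two inventories (lists of dicts) keyed by `key`.

    Returns the keys that were added, the keys that were removed, and, for
    keys present in both, the names of the fields whose values differ.
    """
    base = {row[key]: row for row in baseline}
    curr = {row[key]: row for row in current}

    added = sorted(curr.keys() - base.keys())
    removed = sorted(base.keys() - curr.keys())

    modified = {}
    for k in sorted(base.keys() & curr.keys()):
        fields = sorted(
            f for f in base[k].keys() | curr[k].keys()
            if base[k].get(f) != curr[k].get(f)
        )
        if fields:
            modified[k] = fields

    return {"added": added, "removed": removed, "modified": modified}


# Hypothetical sample data standing in for saved and live EC2 inventories.
baseline = [
    {"InstanceId": "i-1", "InstanceType": "t3.micro", "State": "running"},
    {"InstanceId": "i-2", "InstanceType": "t3.small", "State": "running"},
]
current = [
    {"InstanceId": "i-1", "InstanceType": "t3.large", "State": "running"},
    {"InstanceId": "i-3", "InstanceType": "t3.micro", "State": "running"},
]
print(detect_changes(baseline, current))
```

Whether a reported change is "unplanned" would still need to be decided by cross-checking against change tickets, which this sketch does not attempt.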

How it Works

CloudFabrix AIOps platform and all its products (Operational Intelligence, Asset Intelligence, Observability-In-a-Box) are pre-packaged with RDA. Additionally, RDA is also provided as a standalone tool and as a hosted offering to help iterate, author, and publish data workflows.

To begin with, RDA provides many out-of-the-box data integrations (called extensions), which are written in Python. Customers can use extensions to connect to their data sources and access them through tags or datasets. All DataOps activities, such as performing integrity checks, cleaning, transforming, and shaping the data (aggregating/filtering/sorting), can be performed with data bots invoked in a pipeline. A pipeline is essentially a series of data processing steps that typically take data from a source, do some processing and transformation, and send the data to a sink. A unique aspect is that these are “No-Code” pipelines: you do not have to know Python or JavaScript to implement them, because a pipeline is expressed as configuration in text that resembles natural language.
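
The source-to-bots-to-sink idea described above can be sketched in a few lines of Python. This is only a conceptual model, assuming each bot is a function from a list of rows to a list of rows; the bot names (`check_integrity`, `filter_rows`, `aggregate_count`, `sort_rows`), the `run_pipeline` helper, and the sample alert rows are hypothetical and do not correspond to RDA's actual bots.

```python
def check_integrity(required):
    """Bot factory: drop rows that are missing any required field."""
    def bot(rows):
        return [r for r in rows if all(r.get(f) not in (None, "") for f in required)]
    return bot


def filter_rows(predicate):
    """Bot factory: keep only rows for which predicate(row) is true."""
    def bot(rows):
        return [r for r in rows if predicate(r)]
    return bot


def aggregate_count(field):
    """Bot factory: count rows per distinct value of `field`."""
    def bot(rows):
        counts = {}
        for r in rows:
            counts[r[field]] = counts.get(r[field], 0) + 1
        return [{field: value, "count": n} for value, n in counts.items()]
    return bot


def sort_rows(field, reverse=False):
    """Bot factory: sort rows by `field`."""
    def bot(rows):
        return sorted(rows, key=lambda r: r[field], reverse=reverse)
    return bot


def run_pipeline(source, bots, sink):
    """Read rows from the source, apply each bot in order, send the result to the sink."""
    data = list(source)
    for bot in bots:
        data = bot(data)
    sink(data)
    return data


# Hypothetical alert rows standing in for a real data source.
alerts = [
    {"host": "web-1", "severity": "critical"},
    {"host": "web-2", "severity": "info"},
    {"host": "", "severity": "critical"},
    {"host": "db-1", "severity": "critical"},
    {"host": "db-1", "severity": "warning"},
]
run_pipeline(
    alerts,
    [
        check_integrity(["host", "severity"]),
        filter_rows(lambda r: r["severity"] != "info"),
        aggregate_count("severity"),
        sort_rows("count", reverse=True),
    ],
    sink=print,
)
```

The point of the no-code approach is that the list of bots and their parameters is written as configuration text instead of Python, while the execution model stays roughly this simple.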

The following is an example of a data workflow that captures an AWS EC2 inventory baseline:

    @c:simple-loop loop_var = "aws-prod:us-east-1,aws-dev:ap-south-1,aws-dev:us-west-2"
    --> *${loop_var}:ec2:instances
    --> @dm:save name = "temp-aws-instances-${loop_var}"
    --> @c:new-block
    --> @dm:concat names = "temp-aws-instances-.*"
    --> @dm:selectcolumns include = 'InstanceId|InstanceType|State.Name$|LaunchTime|^Placement.AvailabilityZone$'
    --> @dm:fixcolumns
    --> @dm:save name = 'all-aws-instances-Mar-25'

Fig: AIOps Studio: Visualization of Data Pipeline execution
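
For readers more comfortable with code, the EC2 baseline pipeline above can be read roughly as the following Python sketch. Several things here are assumptions rather than documented RDA behavior: `fetch_instances` is a hypothetical stub that returns canned rows instead of calling AWS, the `include` expression is assumed to be applied as a regular-expression search against each column name, and `fixcolumns` is assumed to normalize column names (here, replacing dots with underscores).

```python
import re

# Column filter copied from the pipeline's selectcolumns step.
INCLUDE = r"InstanceId|InstanceType|State.Name$|LaunchTime|^Placement.AvailabilityZone$"


def fetch_instances(source: str) -> list:
    """Hypothetical stand-in for the '*<source>:ec2:instances' step (canned rows)."""
    return [{
        "InstanceId": "i-" + source.replace(":", "-"),
        "InstanceType": "t3.micro",
        "ImageId": "ami-example",
        "State.Name": "running",
        "State.Code": 16,
        "LaunchTime": "2021-03-25T00:00:00Z",
        "Placement.AvailabilityZone": source.split(":")[1] + "a",
        "Placement.Tenancy": "default",
    }]


def build_baseline(sources: str, store: dict) -> list:
    """Mimic the pipeline: loop, save, concat, select columns, fix columns, save."""
    # Loop block: save one temporary dataset per source.
    for source in sources.split(","):
        store["temp-aws-instances-" + source] = fetch_instances(source)

    # New block: concatenate every dataset whose name matches the pattern.
    rows = [
        row
        for name in sorted(store)
        if re.fullmatch(r"temp-aws-instances-.*", name)
        for row in store[name]
    ]

    # selectcolumns: keep only the columns whose names match the expression.
    rows = [{c: v for c, v in row.items() if re.search(INCLUDE, c)} for row in rows]

    # fixcolumns (assumed behavior): replace dots in column names.
    rows = [{c.replace(".", "_"): v for c, v in row.items()} for row in rows]

    store["all-aws-instances-Mar-25"] = rows
    return rows


datasets = {}
for row in build_baseline(
    "aws-prod:us-east-1,aws-dev:ap-south-1,aws-dev:us-west-2", datasets
):
    print(row)
```

The comparison shows what the no-code form saves: the looping, dataset bookkeeping, and column handling are each a single pipeline line.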

The AIOps product includes many prebuilt pipelines that provide a turnkey experience for all core AIOps use cases and scenarios, so customers can integrate their tools and get to work right away. Whenever customizations are needed, either to address a new use case or scenario or to change how data transformations or enrichments are done, customers can use the RDA utility to iterate and experiment with pipelines. Once the pipelines function as expected, customers can publish them to the AIOps platform for deployment into production. The resulting datasets can be easily visualized in CFX dashboards.

This process is similar to how developers use integrated development environments (IDEs) to write code, iterate, compile, and build images, which are then pushed to a runtime environment. In the same way, RDA provides a collaborative Jupyter-style notebook authoring tool and a workflow visualization tool with which pipelines can be built or customized; once finalized, they can be pushed to the production AIOps platform. For workflow authoring, RDA is also available as a standalone tool that can be deployed in a customer’s own environment, or it can be used as a service within CloudFabrix’s cloud-hosted environment.

Fig: AIOps Studio provides IDE-like capabilities for DataOps

Getting Started – AIOps Studio

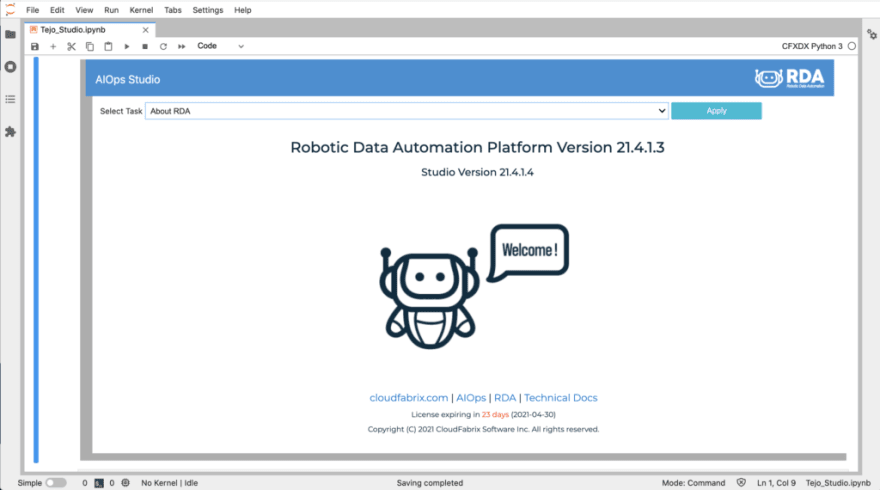

It is easy to get started with RDA using AIOps Studio, a visual IDE for authoring and publishing data pipelines. A few installation options are available:

- Install on top of Docker

- AWS image

- Hosted service

Once you bring up an RDA installation using one of the options above, you can launch AIOps Studio and perform nearly all data operations from the studio, which provides an intuitive user interface.

Fig: AIOps Studio Welcome Page

AIOps Studio Tasks

The following are some of the tasks that can be performed from AIOps Studio:

- Add a New Pipeline

- Edit a Pipeline

- Execute a Pipeline

- Pipeline Syntax Verification

- Inspect Pipeline Data Results at Each Stage

- Visualize and Debug Pipeline Execution

- Publish Pipeline to Production App

- Explore Data Automation Bots

- Explore API models for Data Automation Bots

- Explore Datasets

- Administration: Manage Bot Sources

- Administration: Check Connectivity for Configured Sources

- Administration: View Plugins

- Administration: Add New Plugin (coming soon)

- Show Inline Help

- More …

Anyone interested in trying out RDA can sign up for the interest list here: https://www.roboticdata.ai/signup/
