MongoDB is one of the most popular databases in the modern web world. This is because it is based on a simple idea: since we send data as JSON over the web, why not store it in the same form in a database?

This definitely makes the job easier and faster for developers. But storing data in the same form as we send it over the network is not necessarily optimal from a database point of view. Database creators implement various solutions (sometimes very smart ones) based on certain assumptions. If we ignore them, we will not use the full potential of their product.

To shed some light on this interesting topic, in this series of articles I will show how we can reduce database size and improve its performance just by using the right structures and proper data types. From now on, I will publish a new post on this topic every Monday. If you find this topic interesting, please let me know by like/unicorn, so I know it's worth continuing.

Introduction

I was tuning one of our services in order to speed up some MongoDB queries. Incidentally, my attention was caught by the size of one of the collections, which contains archived objects and is therefore rarely used. Unfortunately, I wasn't able to reduce the size of the documents stored there, but I started to wonder: is it possible to store the same data in a more compact way? Mongo stores JSON-like documents, a format that allows many different ways of expressing similar data, so there seems to be room for improvement.

It is worth asking: why make such an effort in the era of Big Data and unlimited resources? There are several reasons.

First of all, resources are not unlimited: in the end we have physical drives that cost money to buy, replace, maintain, and power.

Secondly, the less data is stored, the less time it takes to access it. Moreover, less data means that more of it fits into cache, so subsequent accesses can be much faster, often by an order of magnitude.

I decided to do some experiments and check how the model design affects the size of database files.

Tools

I used a local MongoDB Community Edition 4.4 installation and I initially tested collections containing 1 million and 10 million documents. One of the variants contained up to 100 million, but the results were proportional (nearly linear). Therefore, in the end I decided to stop at 1M collections, because loading the data was simply much faster.

Having access to local database files, I could easily check the size of the files storing individual collections. However, it turned out to be unnecessary, because the same data can be obtained with the command:

db.getCollection('COLLECTION_NAME').stats()

The following fields are expressed in bytes:

- size: total size of all documents in the collection, before compression,
- avgObjSize: average document size, before compression,
- storageSize: file size on disk, i.e. the value after the data is compressed; the same value is returned by the ls command,
- freeStorageSize: size of unused space allocated for the data; Mongo does not increase the file size byte-by-byte, but allocates a percentage of the current file size, and this value indicates how much data will still fit into the file.

To present the results I used the value (storageSize - freeStorageSize), which indicates the actual space occupied by the data.
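As a minimal sketch of that calculation, the helper below takes an object shaped like the result of stats() and returns the occupied bytes. The function name occupiedBytes and the sample numbers are made up for illustration; they are not measurements from my collections.

```javascript
// Hypothetical helper: bytes actually occupied by the data,
// computed from an object shaped like the result of
// db.getCollection('COLLECTION_NAME').stats().
function occupiedBytes(stats) {
  // Treat a missing freeStorageSize as zero free space.
  const free = stats.freeStorageSize ?? 0;
  return stats.storageSize - free;
}

// Made-up sample values, only to show the arithmetic.
const exampleStats = {
  size: 1000,
  avgObjSize: 10,
  storageSize: 600,
  freeStorageSize: 100,
};

console.log(occupiedBytes(exampleStats)); // 600 - 100 = 500
```

In the shell the same function can be fed directly with the stats() result of a real collection.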

My local MongoDB instance had compression enabled. Not every storage engine has this option enabled by default, so when you start your own analysis it is worth checking how it is configured in your particular case.
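One way to check this, sketched below, is to look at the creation string that WiredTiger collections report in their stats() output and read the block_compressor setting from it. The helper name blockCompressor is my own, and the sample creation string is abbreviated and illustrative rather than copied from a real server.

```javascript
// Sketch: read the block compressor from a collection's stats() output.
// Assumes a WiredTiger collection, whose stats expose wiredTiger.creationString.
function blockCompressor(stats) {
  const creation = stats.wiredTiger?.creationString ?? "";
  const match = /(?:^|,)block_compressor=([^,]*)/.exec(creation);
  if (!match) return null; // setting not reported, e.g. a different storage engine
  // An empty value is treated here as "no compression".
  return match[1] === "" ? "none" : match[1];
}

// Abbreviated, illustrative creation string, not output from a real server.
const sampleStats = {
  wiredTiger: {
    creationString: "allocation_size=4KB,block_compressor=snappy,checksum=on",
  },
};

console.log(blockCompressor(sampleStats)); // snappy
```

In the shell, passing db.getCollection('COLLECTION_NAME').stats() instead of sampleStats should show the compressor configured for that collection.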

Tip 1: ID field type

To begin with, I decided to check ID fields. Not the collection primary key, mind you: it is of type 'ObjectId' by default and generally shouldn't be changed. I decided to focus on user and offer identifiers which, although numerical, are often saved as String in Mongo. I believe this is partly due to the contract of our services: identifiers in JSON most often come as Strings, and in this form they are later stored in our databases.

Let's start with some theory: a number of type int32 in Mongo has a fixed size of 4 bytes. The same number written as a String has a size that depends on the number of digits; additionally, it contains the length of the text (4 bytes) and a terminator character (1 byte). For example, the text "0" takes 6 bytes (4 + 1 + 1) and "1234567890" takes 15 bytes (4 + 10 + 1), not counting the field name and type byte that every field carries regardless of its type. So in theory we should get interesting results, but what does it look like in reality?
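The arithmetic can be sketched in a few lines of JavaScript. The sizes follow the layout described in the BSON specification (length prefix, UTF-8 bytes, terminator; each document also has its own length header, a type byte and name per field, and a closing byte). The helper names are illustrative, and the numbers are uncompressed sizes, not what ends up on disk.

```javascript
// Sketch of BSON size arithmetic for the two kinds of identifier.
// Helper names are illustrative; sizes are uncompressed.
const utf8 = new TextEncoder();

// An int32 value always occupies 4 bytes.
function int32ValueSize() {
  return 4;
}

// A string value: 4-byte length prefix + UTF-8 bytes + 1-byte terminator.
function stringValueSize(text) {
  return 4 + utf8.encode(text).length + 1;
}

// A single-field document: 4-byte total length, 1 type byte,
// the field name plus its terminator, the value, and a closing 0x00 byte.
function singleFieldDocSize(fieldName, valueSize) {
  return 4 + 1 + utf8.encode(fieldName).length + 1 + valueSize + 1;
}

for (const id of [1, 1234567890]) {
  const asInt = singleFieldDocSize("_id", int32ValueSize());
  const asString = singleFieldDocSize("_id", stringValueSize(String(id)));
  console.log(`${id}: int32 doc = ${asInt} B, string doc = ${asString} B`);
}
// 1: int32 doc = 14 B, string doc = 16 B
// 1234567890: int32 doc = 14 B, string doc = 25 B
```

The int32 document stays at 14 bytes no matter how large the identifier is, while the String document grows by one byte per digit.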

I prepared 2 collections, one million documents each, containing the following documents:

{ "_id" : 1 }

and

{ "_id" : "1" }

The values of identifiers were consecutive natural numbers. Here is the comparison of results:

As you can see, the difference is significant: the size on disk decreased by half. It is also worth noting that my data is synthetic and the identifiers start from 1. The advantage of a numerical identifier over a String grows with the number of digits, so the benefits should be even greater for real-life data.

Encouraged by this success, I decided to check whether the field type had any influence on the size of an index created on it. Here, unfortunately, I was disappointed: the sizes were similar. The likely reason is that MongoDB does not store raw field values in an index: it converts keys to its own compact, compressed internal representation, so physically both indexes end up looking much alike. Still, maybe at least searching by index on a numerical field is faster?

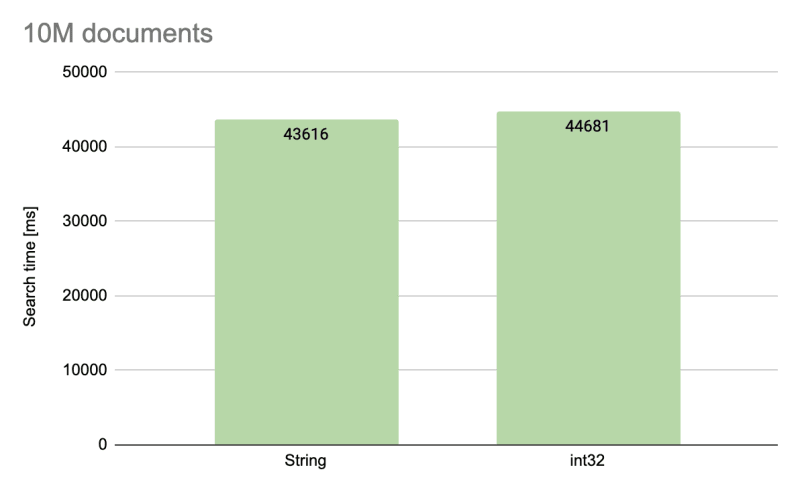

I compared lookups of 1 million and 10 million documents by indexed key, in random order but the same order for both collections. Again, a missed shot: both tests gave similar results. So the conclusion is this: it is worth using numerical identifiers because they require less disk space, but we will not get additional benefits associated with indexes on these fields.
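For anyone who wants to repeat such a test, one possible way to get a "random but identical" lookup order is a seeded shuffle, sketched below. This is not necessarily how my own test was written: the small seeded generator (mulberry32), the function names, and the collection names in the comments are all assumptions made for illustration.

```javascript
// Small seeded pseudo-random generator (mulberry32), so the order is reproducible.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Fisher-Yates shuffle of the identifiers 1..count, driven by the seeded generator.
function shuffledIds(count, seed) {
  const rand = mulberry32(seed);
  const ids = Array.from({ length: count }, (_, i) => i + 1);
  for (let i = ids.length - 1; i > 0; i--) {
    const j = Math.floor(rand() * (i + 1));
    [ids[i], ids[j]] = [ids[j], ids[i]];
  }
  return ids;
}

// The same seed yields the same order for both collections.
const numericKeys = shuffledIds(10, 42);
const stringKeys = shuffledIds(10, 42).map(String);
console.log(numericKeys, stringKeys);

// In the shell, each key would then be looked up in turn, e.g. (hypothetical names):
//   db.getCollection('ids_int').findOne({ _id: numericKeys[0] })
//   db.getCollection('ids_string').findOne({ _id: stringKeys[0] })
```

Fixing the seed removes one source of noise: any remaining difference in timings cannot come from one collection being queried in a luckier order than the other.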

That's it for today. In the next entry we will check whether it is better to use flat or nested JSON structures.

