Last week we had a client who wanted to create a ChatGPT AI chatbot based upon YouTube transcripts. We have written about WFM Labs before, but we never published the actual Hyperlambda required to split the transcripts into smaller training snippets.

In this article we'll therefore show you the code required to load these transcripts and turn them into high-quality, factual training data for a chatbot.

If you missed out on the previous article, you can watch the YouTube video below where I demonstrate how it works.

The code

/*
 * This snippet will import all files recursively
 * found in the '/etc/transcripts/' folder, break these into
 * training snippets, by invoking OpenAI to extract important facts
 * and opinions discussed in the transcript, creating (more) high
 * quality data (hopefully) from podcast and video transcripts.
 *
 * The process will first chop up each file into maximum 4,000 tokens,
 * making sure it gets complete sentences, by splitting the transcript
 * on individual sentences (. separated), and then send this data
 * to OpenAI, asking it to extract the important parts.
 *
 * When OpenAI returns, it will use the return value from OpenAI as
 * a training snippet, and insert it into the database as such.
 *
 * When the process is done, it will delete all files found in the
 * '/etc/transcripts/' folder to avoid importing these again.
 *
 * Since this process will probably take more than 60 seconds,
 * we need to run it on a background thread to avoid CloudFlare
 * timeouts.
 */
fork

   // Making sure we catch exceptions and that we are able to notify user.
   try

      // Massage value used to extract interesting information from transcript.
      .massage:@"Extract the important facts and opinions discussed in the following transcript and return as a title and content separated by a new line character"

      // The machine learning type you're importing into.
      .ml-type:WHATEVER-ML-TYPE-HERE

      // Basic logging.
      log.info:Started importing transcripts from /etc/transcripts/ folder.

      // Contains all our training snippets to be imported.
      .data

      // Listing all files in transcripts folder.
      io.file.list-recursively:/etc/transcripts/

      // Counting files.
      .count:int:0

      // Loops through all files.
      for-each:x:@io.file.list-recursively/*

         // Making sure this is a .txt or .md file.
         if
            or
               strings.ends-with:x:@.dp/#
                  .:.txt
               strings.ends-with:x:@.dp/#
                  .:.md
            .lambda

               // Incrementing file count.
               math.increment:x:@.count

               // Loading file.
               io.file.load:x:@.dp/#

               // Creating prompt from filename.
               strings.split:x:@.dp/#
                  .:/
               .prompt
               set-value:x:@.prompt
                  get-value:x:@strings.split/0/-

               // Adding training snippet to above [.data] section.
               unwrap:x:+/*/*/*
               add:x:@.data
                  .
                     .
                        prompt:x:@.prompt

               // Splitting file content into individual sentences.
               strings.split:x:@io.file.load
                  .:"."

               // Looping through all sentences from above split operation.
               while
                  exists:x:@strings.split/0
                  .lambda

                     // Contains temporary completion.
                     .completion:

                     /*
                      * Making sure our completion never exceeds ~4000 tokens.
                      *
                      * Notice, since a video/podcast transcript typically
                      * contains a lot of 'Welcome to' and 'Here we have', etc
                      * we can safely create original snippets of ~4,000 tokens,
                      * since these will probably be summarized by OpenAI down
                      * to 500 to 1,500 tokens.
                      */
                     while
                        and
                           exists:x:@strings.split/0
                           lt
                              openai.tokenize:x:@.completion
                              .:int:4000
                        .lambda

                           // Adding currently iterated sentence to above temporary [.completion].
                           set-value:x:@.completion
                              strings.concat
                                 get-value:x:@.completion
                                 get-value:x:@strings.split/0
                                 .:"."
                           remove-nodes:x:@strings.split/0

                     // Adding completion to last prompt created.
                     unwrap:x:+/*/*
                     add:x:@.data/0/-/*/prompt
                        .
                           completion:x:@.completion

      // Basic logging.
      get-count:x:@.data/**/completion
      log.info:Done breaking all files into training snippets according to token size.
         files:x:@.count
         segments:x:@get-count

      // Retrieving token used to invoke OpenAI.
      .token
      set-value:x:@.token
         strings.concat
            .:"Bearer "
            get-first-value
               get-value:x:@.arguments/*/api_key
               config.get:"magic:openai:key"

      /*
       * Now we have a bunch of prompts, each having a bunch of associated
       * completions, at which point we loop through all of these, and create
       * training snippets for each of these, but before we insert the actual
       * training snippets, we invoke ChatGPT to summarize each, such that we
       * get high quality training snippets.
       */
      for-each:x:@.data/*

         // Basic logging.
         get-count:x:@.dp/#/*/prompt/*/completion
         log.info:Handling transcript file
            file:x:@.dp/#/*/prompt
            segments:x:@get-count

         // Looping through each completion below entity.
         for-each:x:@.dp/#/*/prompt/*/completion

            /*
             * Creating temporary buffer consisting of prompt + completion
             * that we're sending to ChatGPT asking it to summarize the most
             * important facts from the transcript.
             */
            .buffer
            set-value:x:@.buffer
               strings.concat
                  get-value:x:@for-each/@.dp/#/*/prompt
                  .:"\r\n"
                  .:"\r\n"
                  get-value:x:@.dp/#

            /*
             * Invoking OpenAI to create summary of currently iterated
             * transcript snippet.
             */
            unwrap:x:+/**
            http.post:"https://api.openai.com/v1/chat/completions"
               convert:bool:true
               headers
                  Authorization:x:@.token
                  Content-Type:application/json
               payload
                  model:gpt-4
                  max_tokens:int:2000
                  temperature:decimal:0.3
                  messages
                     .
                        role:system
                        content:x:@.massage
                     .
                        role:user
                        content:x:@.buffer

            // Sanity checking above invocation.
            if
               not
                  and
                     mte:x:@http.post
                        .:int:200
                     lt:x:@http.post
                        .:int:300
               .lambda

                  // Oops, error.
                  lambda2hyper:x:@http.post
                  log.error:Something went wrong while invoking OpenAI
                     message:x:@http.post/*/content/*/error/*/message
                     status:x:@http.post
                     error:x:@lambda2hyper
                     prompt:x:@for-each/@.dp/#/*/prompt
                     completion:x:@.dp/#

            // Splitting prompt and completion.
            strings.split:x:@http.post/*/content/*/choices/0/*/message/*/content
               .:"\n"
            .prompt
            .completion
            set-value:x:@.prompt
               strings.trim:x:@strings.split/0
            remove-nodes:x:@strings.split/0
            set-value:x:@.completion
               strings.join:x:@strings.split/*
                  .:"\n"
            set-value:x:@.completion
               strings.trim:x:@.completion

            /*
             * OpenAI relatively consistently returns 'Title:'
             * and 'Content:' that we remove since these just create noise.
             */
            set-value:x:@.prompt
               strings.replace:x:@.prompt
                  .:"Title:"
            set-value:x:@.completion
               strings.replace:x:@.completion
                  .:"Content:"
            set-value:x:@.completion
               strings.trim:x:@.completion
            set-value:x:@.prompt
               strings.trim:x:@.prompt

            // Inserting into ml_training_snippets.
            data.connect:magic
               data.execute:"insert into ml_training_snippets (type, prompt, completion, meta) values (@type, @prompt, @completion, 'transcript')"
                  @type:x:@.ml-type
                  @prompt:x:@.prompt
                  @completion:x:@.completion

      // Signaling frontend and logging that we're done.
      get-count:x:@.data/**/completion
      log.info:Transcripts successfully imported.
         training-snippets:x:@get-count
      sockets.signal:magic.backend.message
         roles:root
         args
            message:Transcripts successfully imported
            type:info

      /*
       * Deleting all files from transcripts folder
       * to avoid importing these again.
       */
      for-each:x:@io.file.list-recursively/*
         io.file.delete:x:@.dp/#

   .catch

      // Oops!
      log.error:Something went wrong while we tried to import transcripts
         exception:x:@.arguments/*/message
      sockets.signal:magic.backend.message
         roles:root
         args
            message:Oops, something went wrong. Check your log for details
            type:error
return
   result:@"You will be notified when the process is done.
You can leave this page if you do not want to wait."

How it works

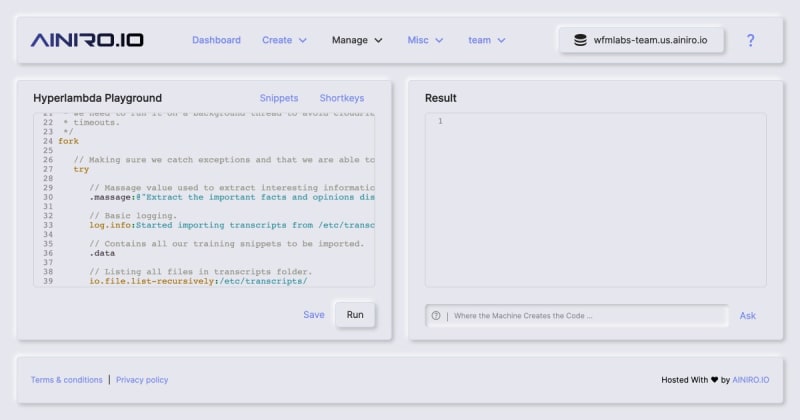

To execute the above code you need to login to your cloudlet and go to your Hyperlambda Playground. It should resemble the following.

When you click "Run" the process will start. Notice, make sure you've create a folder called "/etc/transcripts/" first, and that you've uploaded your transcript .txt files into it first.
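If you prefer to create the folder from the Playground rather than from Hyper IDE, something like the following should work. This is a minimal sketch that assumes the standard [io.folder.exists] and [io.folder.create] slots are available in your cloudlet; it is not part of the import script itself.

```hyperlambda
// Creating the transcripts folder, but only if it doesn't already exist.
if
   not
      io.folder.exists:/etc/transcripts/
   .lambda
      io.folder.create:/etc/transcripts/
```

You still need to upload your transcript files into the folder before running the import.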

The process will probably take several minutes, and it will invoke ChatGPT many times, once for each text snippet it inserts into your machine learning model. However, the process executes on a background thread, so you can leave the page while it does its magic.

The above Hyperlambda will chop up each text file found in your "/etc/transcripts/" folder into multiple snippets of text, each roughly 4,000 OpenAI tokens in size. The inner loop keeps adding whole sentences until the snippet reaches 4,000 tokens, so a snippet can overshoot that limit by one sentence. Then it will invoke OpenAI's API using gpt-4 as its model, and instruct ChatGPT to do the following.

Extract the important facts and opinions discussed in the following transcript and return as a title and content separated by a new line character

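As I explain in the above YouTube video, the last part of the above sentence ("return as a title and content separated by a new line character") is crucial and you should not edit it, because the script treats the first line of the response as the prompt and everything after it as the completion. If the above doesn't give you good results, you can modify the rest of the [.massage] value until you're getting the results you need.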
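To see why the title-and-content format matters, here is a small sketch that runs the same splitting steps as the script on a hard-coded response. The [.response] value is made up for illustration; a real response comes from the [http.post] invocation.

```hyperlambda
// Hypothetical response, shaped the way the system message asks for.
.response:"Title: Forecasting basics\nContent: Forecasts drive staffing decisions.\nShrinkage should be tracked."

// Splitting the response into individual lines.
strings.split:x:@.response
   .:"\n"
.prompt
.completion

// The first line becomes the prompt.
set-value:x:@.prompt
   strings.trim:x:@strings.split/0
remove-nodes:x:@strings.split/0

// Everything that remains becomes the completion.
set-value:x:@.completion
   strings.join:x:@strings.split/*
      .:"\n"
```

After this, [.prompt] should hold the "Title: ..." line and [.completion] the remaining lines, which is the point where the script strips the "Title:" and "Content:" labels. If the model does not put the title on its own first line, the split puts the wrong text into the prompt.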

Once you're done, you can just save the snippet for later, allowing you to easily reproduce the process in the future. Notice, the above Hyperlambda will delete all files after importing them, so there's no need to go back into Hyper IDE and delete the files.

Future improvements

The Hyperlambda script will fail if, for instance, there's an error while invoking ChatGPT, at which point your model will end up in an indeterminate state. In future versions we might want to improve upon the script, creating "atomic commits" or something. However, that's an exercise for later.

If you want to try a ChatGPT chatbot which has some of its content based upon such YouTube transcripts, you can find WFM Labs here.
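One possible direction, sketched under assumptions rather than tested against the script: collect the summarized snippets in a buffer node first, then insert all of them inside a single database transaction. The [.snippets] buffer and its sample content are hypothetical, and the sketch assumes the [data.transaction.create] and [data.transaction.commit] slots behave as in Magic's data adapters, where a transaction that is never committed is rolled back.

```hyperlambda
// The machine learning type you're importing into.
.ml-type:WHATEVER-ML-TYPE-HERE

// Hypothetical buffer, filled during the OpenAI loop instead of inserting directly.
.snippets
   .
      prompt:Forecasting basics
      completion:Forecasts drive staffing decisions.

// Inserting everything in one transaction, such that a failure leaves the model untouched.
data.connect:magic
   data.transaction.create
      for-each:x:@.snippets/*
         data.execute:"insert into ml_training_snippets (type, prompt, completion, meta) values (@type, @prompt, @completion, 'transcript')"
            @type:x:@.ml-type
            @prompt:x:@.dp/#/*/prompt
            @completion:x:@.dp/#/*/completion
      data.transaction.commit
```

Buffering first keeps the transaction short, since the slow OpenAI invocations happen before the database connection is opened.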