💡 This is a very long article. It is so because Kubernetes pulls many computing concepts along before one can do anything with it. I broke those concepts down as much as possible in this article, but because of the length of this article, I'd encourage the readers to read it three times.

The first read should be a quick scan to get a general idea of what the article is about. Come back to it at some later point in time for a second read, this time, a slower, more careful read. Then, come back again at a later point in time for a more engaged form of learning. This time, you would take notes, Google unclear terms, ask questions in the comment section, and try out the examples.

What is Kubernetes

Kubernetes is a container orchestrator. Just what exactly does that mean?

In the previous article, we learned about containers and their role in the deployment/management of web applications.

In modern software engineering, we often find more than one web service communicating with each other over a network to serve a single purpose. For example, an e-commerce website would have a recommendation-service that recommends products to users based on their preferences, a cart-service that takes care of items in the user's cart, a payment-gateway-service that integrates multiple payment services, etc. Services that work in coordination as described above are called Distributed Systems, but you might be more familiar with the buzzword Microservices.

Microservices are distributed systems made up of highly specialized web services. They are usually deployed in containers because containers are lightweight and scale easily. Learn why containers are lightweight in the previous article.

When deploying a group of services, a container orchestrator becomes critical. An orchestrator ensures that the deployed services adhere to the configuration specified during deployment. A developer (or a platform engineer) provides a configuration file that describes the desired state of the distributed system. In plain language, the configuration usually looks something like this:

| Question | Answer |
|---|---|
| What are you trying to configure? | A deployment |
| How can I find the executable for the app? | Look for a docker image called auth-service:latest on this machine and run it |
| How many CPUs do you want to assign to this app? | 1 |
| How much memory do you want to assign to the app? | 1Gi |

The platform engineer or developer then submits that configuration to the container orchestrator. Once received, the orchestrator constantly compares the configuration to the current state of your app(s). If there is any difference between the two, the orchestrator automatically adjusts the state of your services to match the developer's desired state.
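
The compare-and-adjust cycle described above can be sketched in a few lines of Python. This is only a toy model under my own assumptions: `DesiredState` and `reconcile` are hypothetical names, and a real orchestrator tracks far more than an image name and a replica count.

```python
from dataclasses import dataclass


@dataclass
class DesiredState:
    """What the submitted configuration asks for (hypothetical, simplified)."""
    image: str
    replicas: int


def reconcile(desired: DesiredState, running: list) -> list:
    """Return the actions needed to make the running instances match `desired`.

    `running` holds the image name of every instance that is currently alive.
    """
    matching = [image for image in running if image == desired.image]
    stale = [image for image in running if image != desired.image]

    # Anything running the wrong image has to go.
    actions = [f"stop {image}" for image in stale]

    # Then start or stop instances until the replica count matches.
    missing = desired.replicas - len(matching)
    if missing > 0:
        actions += [f"start {desired.image}"] * missing
    elif missing < 0:
        actions += [f"stop {desired.image}"] * (-missing)
    return actions


desired = DesiredState(image="auth-service:latest", replicas=2)

# One instance crashed, so only one is left: the loop asks for one more.
print(reconcile(desired, ["auth-service:latest"]))
# The current state already matches the desired state: nothing to do.
print(reconcile(desired, ["auth-service:latest", "auth-service:latest"]))
```

An orchestrator effectively runs a comparison like this over and over, which is why a crashed service comes back without anyone touching it.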

Let's learn those concepts by associating them with concepts we already know

In the previous article in this series, I drew a parallel comparison between a program and a container. As a reminder, a program that is running is called a process, and an image that is running is a container. Let's continue with that analogy.

| Processes | Kubernetes |
|---|---|
| At any moment, there are hundreds of processes running on your system. To get a snapshot of all the processes loaded into your computer's memory right now, run ps -A. | At any point in a distributed system, there is more than one container running in your deployment. |
| Most modern laptop CPUs have between 2 and 8 cores, which means they can only execute instructions from 2 to 8 processes at once. To cater to all the processes in memory, a component of the Operating System kernel called the Scheduler ensures that all processes take turns executing a bit of their instructions in fractions of a second. The schedulers (usually more than one) decide which process gets the CPU's attention at any time. | A large cloud platform can have millions of machines that it configures to act like one or multiple supercomputers. Orchestrators work like OS schedulers in the cloud, assigning your services to a suitable machine while making sure they use the right amount of memory, CPU, and disk space. |
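
To make the scheduler analogy concrete, here is a minimal sketch of placing a service on a machine with enough spare capacity. It uses a plain first-fit rule that I chose for illustration; the `Machine` class, the `assign` function, and the machine names are all hypothetical, and the real Kubernetes scheduler weighs many more factors than this.

```python
from dataclasses import dataclass


@dataclass
class Machine:
    name: str
    free_cpu: float      # spare CPU, in cores
    free_memory_mi: int  # spare memory, in mebibytes


def assign(machines, cpu, memory_mi):
    """Place a service on the first machine that has enough free CPU and memory.

    Returns the chosen machine's name, or None when nothing fits.
    """
    for machine in machines:
        if machine.free_cpu >= cpu and machine.free_memory_mi >= memory_mi:
            # Reserve the resources so later services see the reduced capacity.
            machine.free_cpu -= cpu
            machine.free_memory_mi -= memory_mi
            return machine.name
    return None


machines = [Machine("machine-a", 1.0, 512), Machine("machine-b", 4.0, 4096)]

print(assign(machines, cpu=1, memory_mi=1024))  # machine-b (machine-a lacks memory)
print(assign(machines, cpu=1, memory_mi=256))   # machine-a
print(assign(machines, cpu=8, memory_mi=256))   # None (no machine has 8 free cores)
```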

Essential Kubernetes Components/Terminologies

Workload

A workload is an application (usually a web service) running in Kubernetes. From this point onward, we refer to applications running inside Kubernetes as workloads.

Pod

Techies love to wrap things, and Kubernetes is no exception. Kubernetes developers, at some point, decided that containers were not enough; they needed to wrap them in something else called a Pod. Whenever you deploy a workload on Kubernetes, Kubernetes puts that workload (i.e., the container your application is running in) inside a pod. So, in Kubernetes, the smallest unit of a deployment is a pod, not a container.

Let's associate pods with what we already know

An application running on an operating system is called a process. When you run that program in a Docker environment, it becomes a container. When you run that container in Kubernetes, it is wrapped in a Pod.

⚠️ It is possible to run multiple containers inside a single pod, just as it is possible to run more than one application inside a single container. However, for 99% of use cases, you should only have one application running in a container and one container running in a pod. We will discuss the exceptions as we advance in this series.

Like many techies, you're probably asking why. Isn't container isolation enough? Are pods not redundant? No, Pods are not redundant. I'll answer this entirely in the Whys section of this article.

Node

Node is Kubernetes' lingo for a physical or virtual machine. Pods run on nodes (obviously). Before now, I said workloads run in Kubernetes, but the right way to phrase that is "workloads run on nodes." From now on, when talking about computers, machines, or virtual machines in the context of Kubernetes, we will refer to them as nodes.

Cluster

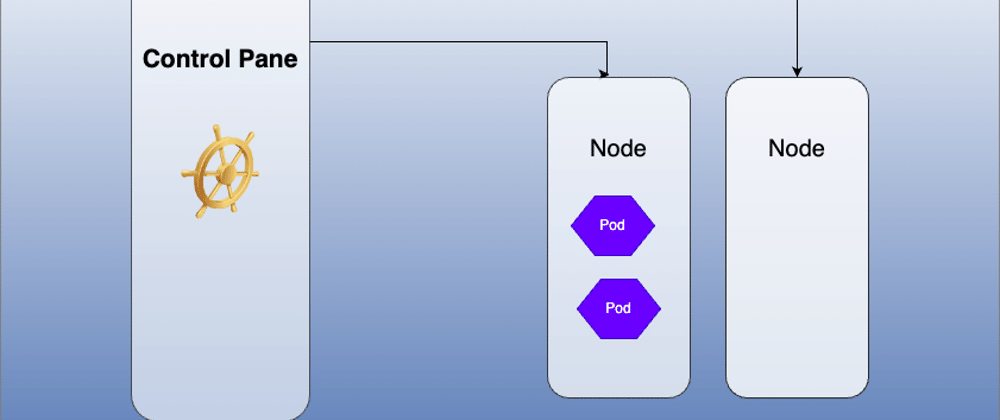

A cluster is a group of Nodes available for your workload(s). A cluster contains at least one master node and one or more worker nodes. The master node in Kubernetes lingo is called the Control Plane, while the worker nodes are simply called nodes.

The Control Plane is the node responsible for assigning workloads to other Nodes. It is the component of Kubernetes that best fits the OS scheduler analogy. But wait! Does this not suggest that Kubernetes requires at least two machines to work correctly? How are people able to run it on their laptops? In production, yes, Kubernetes requires at least one Control Plane Node and one Worker Node. That said, for experimental purposes and local development, it is possible to set up a single-node Kubernetes cluster where a single node serves as both the Control Plane and the Worker.

Now, let's learn that concept from bottom to top.

A cluster contains nodes -> a node runs one or more pods -> a pod runs containers (most of the time, one container)
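
If it helps to see that nesting as data, here is a small Python sketch. The classes are purely illustrative and are not Kubernetes' real object model; the node and pod names are made up.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    image: str


@dataclass
class Pod:
    name: str
    containers: list = field(default_factory=list)


@dataclass
class Node:
    name: str
    pods: list = field(default_factory=list)


@dataclass
class Cluster:
    nodes: list = field(default_factory=list)

    def images(self):
        """Walk cluster -> node -> pod -> container and collect every image."""
        return [
            container.image
            for node in self.nodes
            for pod in node.pods
            for container in pod.containers
        ]


# A single-node cluster running one pod that wraps one container.
cluster = Cluster(nodes=[
    Node(name="my-laptop", pods=[
        Pod(name="node-echo-pod", containers=[Container(image="node-echo:latest")]),
    ]),
])

print(cluster.images())  # ['node-echo:latest']
```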

The Whys

Are Containers not Enough Isolation? Why Do We Need Pods?

As redundant as they may look, pods serve a purpose. A pod controls how a container can be discovered within a node. One problem it solves is clashing port bindings on a node. If you bind a container's internal port to a port on the host machine and then try to bind another container's port to that same host port, you'll get an error. Pods solve this problem because each pod in a cluster is assigned its own unique IP address at creation time. Two pods on the same node can therefore listen on the same port number, and other pods reach each one through its own IP address.
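
Here is a toy simulation of that port clash. It does not open real sockets; `Host` is a hypothetical class that only remembers which ports are taken on one IP address, and the IP addresses are made up.

```python
class Host:
    """Anything with one IP address and its own set of ports: a machine or a pod."""

    def __init__(self, ip):
        self.ip = ip
        self.bound_ports = {}

    def bind(self, port, app):
        # A port can only be bound once per IP address.
        if port in self.bound_ports:
            raise OSError(f"{self.ip}:{port} is already used by {self.bound_ports[port]}")
        self.bound_ports[port] = app
        return f"{self.ip}:{port}"


# Two containers fighting over the same port on one host machine.
machine = Host("192.168.1.10")
print(machine.bind(5001, "echo-1"))
try:
    machine.bind(5001, "echo-2")
except OSError as error:
    print("clash:", error)

# Two pods, each with its own IP address, can both use port 5001.
pod_a = Host("10.1.0.11")
pod_b = Host("10.1.0.12")
print(pod_a.bind(5001, "echo-1"))
print(pod_b.bind(5001, "echo-2"))
```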

But why do I need Kubernetes?

That is a very valid question. After all, there are simpler alternatives like Google Cloud Run, Heroku, AWS Lambda, and many more. I'll answer with a quote from Eskil Steenberg:

"In the beginning, you always want results; in the end, all you've always wanted is control."

When building a product, you want to get to the market quickly and beat your competition. Beyond that stage, things can get very expensive with all these serverless tools. Even Amazon had to move one of its applications off serverless recently.

Beyond scheduling your pods, Kubernetes abstracts the underlying infrastructure and exposes it to you as plain resources (memory, disk, and CPU). This gives you the illusion that all your web services are running on one humongous machine, and you can assign resources to each application simply by specifying the amount of memory, CPU, and disk space it needs. This is the kind of control required by scaleups and enterprises.
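
Those amounts are written as short quantity strings, such as the 1Gi and 256Mi that appear in this article's configs. The sketch below shows what the most common suffixes mean. The helper names are hypothetical, and it deliberately handles only a small subset of the notation (Ki, Mi, Gi for memory, and the m "millicore" suffix for CPU, as in 500m).

```python
# Binary suffixes: each step is a factor of 1024.
MEMORY_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def memory_to_bytes(quantity: str) -> int:
    """Convert a memory quantity such as '256Mi' into bytes."""
    for suffix, factor in MEMORY_UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # no suffix: already a plain number of bytes


def cpu_to_cores(quantity: str) -> float:
    """Convert a CPU quantity such as '500m' (millicores) or '1' into cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


print(memory_to_bytes("1Gi"))    # 1073741824
print(memory_to_bytes("256Mi"))  # 268435456
print(cpu_to_cores("500m"))      # 0.5
print(cpu_to_cores("1"))         # 1.0
```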

But I Only Have A Portfolio Website. Why should I use Kubernetes?

Please don't unless you enjoy pain 🙊.

Why is it so complicated?

Well, it is, and it's not. If you ask a regular developer, it probably is. But think of a system admin who has had to provision virtual machines manually, supervise port mappings, sit and watch system logs to figure out when something is wrong with one of the numerous applications running on their servers, and manually restart applications on VMs when they crash. To that person, and to me, Kubernetes is not that complicated.

Don't get me wrong, I'm not insinuating that Kubernetes is easy, but it's not rocket science; it's learnable. Programming was complex, but you learned it; Kubernetes is no different.

The How

How to Set up Kubernetes On Your Computer System

I'm currently on a MacBook, so I'll speak quickly about setup on macOS. If you need step-by-step instructions on how to set it up on Windows or Linux, drop a comment, and I'm sure either I or other members of the community will help out.

Step 1: Download and install Docker Desktop for Mac. Follow the instructions here

Step 2: Once you start Docker Desktop, go to Settings. Under Settings, click on the Kubernetes tab. In this tab, you'll see a check box labeled "Enable Kubernetes". Click on the check box and then on the Apply & Restart button.

Step 3: Confirm that you have Kubernetes by running the command kubectl cluster-info. You should see an output that looks like the screenshot below.

Let's get a simple application to work with

In the previous article, we ran a simple echo server written in Node.js inside a container. We will continue from where that post stopped.

Step 1: Clone the repository, cd into the docker-and-k8s-from-localhost-to-prod directory. Duplicate the folder called node-echo and rename the duplicate to k8s-node-echo and cd into it.

Step 2: Build the application as a docker image

docker build -f Dockerfile . -t node-echo

Run the image in a Kubernetes node

Step 1: Create a Kubernetes Deployment Config

Kubernetes accepts configuration in YAML or JSON format. I'll choose YAML because it's the most popular choice in the community. Besides, it allows me to add comments to explain my configuration, so it is a favorable choice for tutorials like this.

Create a file called node-echo-deployment.yaml and add the following config to it. It's preferable to type it out yourself. I have added an explanation to each part of the config.

# The Kubernetes API version; needed because the Kubernetes API is constantly revised
apiVersion: apps/v1
# The Kubernetes object that we are defining with this configuration. In this case, it's a Deployment
# A Deployment is a Kubernetes _Object_ that stores the desired state of pods
kind: Deployment
metadata:
  # We are just giving a human-readable name to this deployment here
  name: node-echo-deployment
spec:
  # We are telling Kubernetes that we only want one instance of our workload running at a time.
  # If this instance crashes for any reason, Kubernetes will create a new instance of our workload
  replicas: 1
  # The next 7 lines assign metadata (labels) to our pods so we can easily query them in the cluster. More on these later in the series
  selector:
    matchLabels:
      app: node-echo
  template:
    metadata:
      labels:
        app: node-echo
    # From here downward, we are describing to Kubernetes how to run our web app
    spec:
      # We are telling Kubernetes to find the docker image "node-echo:latest" and
      # run it as a container. The container should also be named node-echo
      containers:
        - name: node-echo
          image: node-echo:latest
          # Next, we are telling Kubernetes to look for the node-echo:latest image on our machine first.
          # If it isn't present on our local machine, then Kubernetes will go look for it on the
          # internet
          imagePullPolicy: IfNotPresent
          # The next 4 lines define the limit of the resources that Kubernetes should make available to our app
          resources:
            limits:
              cpu: 1
              memory: 256Mi
          # We are telling Kubernetes the ports that our workload will be using
          ports:
            # We are giving the port 5001 a name. Similar to declaring a variable
            - name: node-echo-port
              containerPort: 5001
          livenessProbe:
            httpGet:
              path: /
              port: node-echo-port
          # In the 4 lines above, we told Kubernetes to check port 5001 at path / in the pod to confirm that
          # the application is still running properly. If the endpoint keeps returning a status code
          # outside the 200-399 range, Kubernetes restarts the container
          readinessProbe:
            httpGet:
              path: /
              port: node-echo-port
          # The 4 lines above define the endpoint that Kubernetes checks to determine that
          # the pod is ready to receive traffic
          startupProbe: # configuration for endpoints
            httpGet:
              path: /
              port: node-echo-port
          # The 4 lines above are used by Kubernetes to determine if our application has started

Comparing this config with the table in the opening section of this article might clarify it even further.
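
The three probes in the config all judge the HTTP response the same way: Kubernetes treats a status code from 200 up to (but not including) 400 as success and anything else as a failure. Here is a rough sketch of how a liveness check could decide on a restart. The function names are hypothetical; the threshold of 3 consecutive failures mirrors the documented default for failureThreshold, which the config above does not override.

```python
def probe_succeeded(status_code: int) -> bool:
    """An HTTP probe passes when the status code is in the 200-399 range."""
    return 200 <= status_code < 400


def should_restart(status_codes, failure_threshold=3):
    """Return True once `failure_threshold` probes in a row have failed."""
    consecutive_failures = 0
    for code in status_codes:
        if probe_succeeded(code):
            consecutive_failures = 0  # one success resets the streak
        else:
            consecutive_failures += 1
        if consecutive_failures >= failure_threshold:
            return True
    return False


print(probe_succeeded(302))                  # True (redirects count as success)
print(should_restart([200, 500, 200, 500]))  # False (never 3 failures in a row)
print(should_restart([200, 500, 503, 500]))  # True
```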

Step 2: Submit That Config To Kubernetes

Run kubectl apply -f node-echo-deployment.yaml where the -f flag specifies the file containing your config. If the file has no syntax error, you should get a message that says "deployment.apps/node-echo-deployment created"
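
Since Kubernetes also accepts JSON, the same deployment can be generated from code instead of typed by hand. The sketch below rebuilds the config above as a Python dictionary and prints it as JSON. The `http_probe` helper and the idea of saving the output as node-echo-deployment.json are my own assumptions, not part of the original repository.

```python
import json

PORT_NAME = "node-echo-port"


def http_probe():
    """All three probes in this article hit the same path on the same named port."""
    return {"httpGet": {"path": "/", "port": PORT_NAME}}


deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "node-echo-deployment"},
    "spec": {
        "replicas": 1,
        # The selector must match the labels given to the pod template below.
        "selector": {"matchLabels": {"app": "node-echo"}},
        "template": {
            "metadata": {"labels": {"app": "node-echo"}},
            "spec": {
                "containers": [
                    {
                        "name": "node-echo",
                        "image": "node-echo:latest",
                        "imagePullPolicy": "IfNotPresent",
                        "resources": {"limits": {"cpu": 1, "memory": "256Mi"}},
                        "ports": [{"name": PORT_NAME, "containerPort": 5001}],
                        "livenessProbe": http_probe(),
                        "readinessProbe": http_probe(),
                        "startupProbe": http_probe(),
                    }
                ]
            },
        },
    },
}

manifest = json.dumps(deployment, indent=2)
print(manifest)
```

If you save that output to a file (say, node-echo-deployment.json), kubectl apply -f should accept it the same way it accepts the YAML version.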

Step 3: Confirm The Created Deployment

To check that your deployments are running, run kubectl get deployments. The output should look similar to the screenshot below.

You can also see your running pods by running the following command: kubectl get pods. Your output should also look like the screenshot below.

Step 4: Test our running workload

We know that our application is listening on port 5001, so if we run

curl -d 'hello' localhost:5001/

Our server should respond with "hello", right? No. This is because a Kubernetes cluster's network is isolated from the outside world by default. Only from inside the pod can we hit that endpoint. What a bummer! I know, right 🙂? We can't tell users to always enter a pod before they can use our service.

We can fix this by creating another Kubernetes object called a Service. Oh no. 🤦‍♂️ Not another concept, right? Hold on a minute. We are almost done. This is the last concept you have to learn in this article.

Step 5: Create a Kubernetes Service

A Kubernetes service defines networking between pods and how pod networks are exposed to the internet. There is more to it, but for now, in development, we need the following configuration.

Create a node-echo-service.yaml file and add the following config.

# Specifying which version of the Kubernetes API we are using
apiVersion: v1
# Telling Kubernetes that we are configuring a Service
kind: Service
# Assigning some metadata to the service
metadata:
  # We are assigning a name to the service here. We are calling it
  # node-echo-service
  name: node-echo-service
# From here below, we are specifying the configuration of the Service
spec:
  # There are different types of services.
  # For demo purposes, we stick to NodePort. Node+Port or Machine+Port.
  # With this config, we are telling Kubernetes that we would like to bind the Pod's port to a port on the Node running our Pod
  type: NodePort
  selector:
    # We are specifying the app that we are configuring this service for
    # If you go back to the node-echo-deployment.yaml file, you'd remember that we defined
    # this app metadata over there. It has proved to be useful when defining other
    # Objects for our deployment
    app: node-echo
  # Define port mapping
  ports:
    # The port that this service would listen on
    - port: 80
      # The Pod's port to which the Kubernetes service would forward requests.
      # This is usually the port that your workload binds to
      targetPort: 5001
      # The port on your Node that the service binds to
      nodePort: 30001

Step 6: Submit the Kubernetes service config

Run kubectl apply -f node-echo-service.yaml. If your configuration is fine, you should get the following message: service/node-echo-service created (or configured, if you are re-applying a changed file)

Now we can communicate with our service on localhost:30001/

curl -d "Hurray!! Now I can talk to my service in Kubernetes" localhost:30001/
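
To see why that request now reaches our app, here is a toy model of what the Service does: it picks the pods whose labels match its selector and forwards traffic from the node port to the target port. This is only an illustration; the `matches` and `route` functions, the pod names, and the IP addresses are made up, and real Services involve more machinery than a dictionary lookup.

```python
def matches(selector, labels):
    """A pod is selected when it carries every label the selector asks for."""
    return all(labels.get(key) == value for key, value in selector.items())


service = {
    "selector": {"app": "node-echo"},
    "ports": [{"port": 80, "targetPort": 5001, "nodePort": 30001}],
}

pods = [
    {"name": "node-echo-pod", "ip": "10.1.0.11", "labels": {"app": "node-echo"}},
    {"name": "other-pod", "ip": "10.1.0.12", "labels": {"app": "something-else"}},
]


def route(service, pods, node_port):
    """Return the pod endpoints (ip:port) that a request to `node_port` can reach."""
    for mapping in service["ports"]:
        if mapping["nodePort"] == node_port:
            return [
                f"{pod['ip']}:{mapping['targetPort']}"
                for pod in pods
                if matches(service["selector"], pod["labels"])
            ]
    return []  # nothing is exposed on that node port


print(route(service, pods, 30001))  # ['10.1.0.11:5001']
print(route(service, pods, 5001))   # [] (5001 is not exposed on the node)
```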

To see the whole codebase and the configuration files in one place, check out this repository.

Conclusion

In this article, we learned the main pillars of Kubernetes: Cluster -> Node -> Services -> Deployment -> Pods -> Containers. We discussed the relevance of Kubernetes and why it can benefit a developer to learn it.

It is OK if not everything has sunk in yet. Kubernetes is best learned by trying stuff in it, running into issues, and fixing them.

In the next part of this series, we will go in-depth on services and deploy our node-echo to a cloud provider.

If you learned something, leave a reaction, and do not hesitate to correct me if I have mixed up certain explanations.

See you on the next one.
