Well, the clock speed of consumer CPUs has been stuck at a few GHz for some years now. The obvious way to get more processing power was to put multiple CPU cores on the same chip, which makes more compute cycles available in total.

This is an excellent approach if you want to run a lot of programs that are not CPU-intensive. The OS scheduler works like a charm and distributes the individual tasks over the available CPU cores. But let’s say we have just one job and wish to distribute it over multiple cores. This is where the design of the program and its algorithm comes into the picture.

Go was designed for multitasking. So much importance was given to it that, to start a concurrent task in Go, you just need to prefix a function call with the go keyword. Take a look at the example below:

package main

import (

"fmt"

"time"

)

func main() {

for i := 1; i <= 3; i++ {

go func(n int) { // start a new goroutine for each value of i

fmt.Printf("i : %d\n", n)

}(i)

}

time.Sleep(5 * time.Millisecond) // sleep for 5 milliseconds so the goroutines get a chance to run

}

Output:

i : 3

i : 1

i : 2

Three concurrent tasks are created, and the user has no control over which one will execute first, so the order of the output can change from run to run. Each of these tasks is called a goroutine.

If you don’t add a sleep timer, you probably won’t get any output, because main returns (and the program exits) without waiting for the goroutines to complete. But relying on a sleep timer isn’t a good idea, because you can’t predict how long the goroutines will take to run. A better way is to use sync.WaitGroup.
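As a minimal sketch of the sync.WaitGroup approach, the earlier loop can be rewritten so that the program waits for exactly as long as the goroutines need. The helper name runTasks and the counter it returns are made up for this illustration; only sync.WaitGroup and sync.Mutex come from the standard library.

```go
package main

import (
	"fmt"
	"sync"
)

// runTasks (a hypothetical helper) starts n goroutines and blocks until
// all of them have finished. It returns how many goroutines actually ran.
func runTasks(n int) int {
	var (
		wg   sync.WaitGroup
		mu   sync.Mutex
		done int
	)
	for i := 1; i <= n; i++ {
		wg.Add(1) // register one more goroutine to wait for
		go func(id int) {
			defer wg.Done() // signal completion when this goroutine returns
			fmt.Printf("i : %d\n", id)
			mu.Lock() // protect the shared counter
			done++
			mu.Unlock()
		}(i)
	}
	wg.Wait() // block until Done has been called n times
	return done
}

func main() {
	fmt.Println("goroutines finished:", runTasks(3))
}
```

The order of the "i : ..." lines can still vary between runs, but the final line is only printed after all three goroutines have completed, with no sleep timer involved.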

Goroutines Behind the Curtain

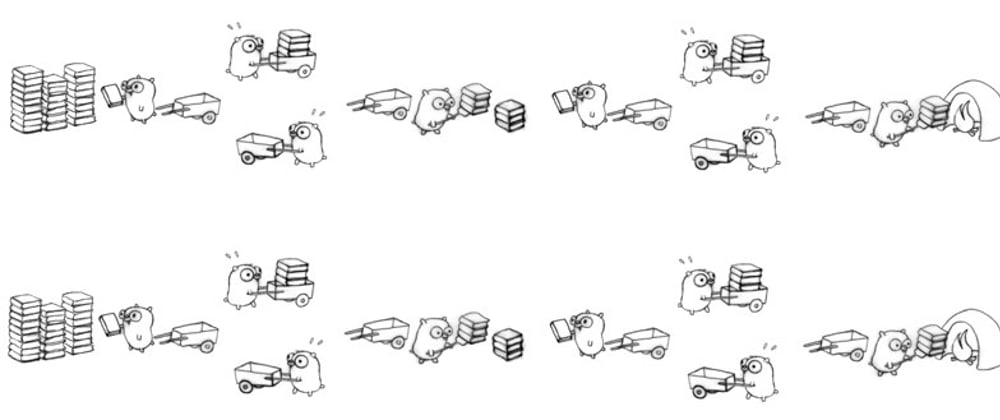

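The Go scheduler works with two kinds of run queues: a GRQ (Global Run Queue) and one LRQ (Local Run Queue) per logical processor. Each logical processor is paired with an OS thread, which the operating system runs on a CPU core. Goroutines that have not yet been assigned to a logical processor wait in the GRQ. The scheduler moves them from the GRQ into an LRQ, where they are picked up and executed by the thread attached to that logical processor. Interleaving goroutines within each queue gives concurrency, and having more than one logical processor gives parallelism.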
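To see how many logical processors (and therefore, in the model described above, how many local run queues) the scheduler has to work with on your machine, you can ask the runtime package. This is only a small sketch; the helper name schedulerInfo is made up for this example, while runtime.NumCPU, runtime.GOMAXPROCS and runtime.NumGoroutine are standard library functions.

```go
package main

import (
	"fmt"
	"runtime"
)

// schedulerInfo (a hypothetical helper) reports the number of logical CPUs
// usable by the process and the number of logical processors the Go
// scheduler may use to run goroutines at the same time.
func schedulerInfo() (cores, procs int) {
	cores = runtime.NumCPU()
	procs = runtime.GOMAXPROCS(0) // an argument of 0 only queries the current value
	return cores, procs
}

func main() {
	cores, procs := schedulerInfo()
	fmt.Printf("logical CPUs: %d, GOMAXPROCS: %d\n", cores, procs)
	fmt.Println("goroutines currently alive:", runtime.NumGoroutine())
}
```

The numbers depend on the machine and on the GOMAXPROCS environment variable, so no fixed output is shown here.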

If you are from the distributed systems world like me, doesn’t the Go scheduler feel a lot like the YARN scheduler in Hadoop?

Special Cases (which are NOT so Special)

System calls (like I/O operations) are special cases where the execution of a goroutine is suspended until the required operation is completed. If the goroutine stayed in the LRQ until the operation completed, a lot of CPU cycles would be wasted, so a pretty decent solution is applied. Take the example of a single LRQ.

Say goroutine G7 encounters a syscall. Instead of waiting in the LRQ, it’s sent to a place where it can stay until the syscall is over. It's a parking space for goroutines that are blocked on one of the following:

- Sending and receiving on a channel

- Network I/O

- System Call

- Timers

- Mutexes

Once ready for execution, G7 is sent to the LRQ again.
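The channel case is the easiest one to try out yourself. In the sketch below, a goroutine (standing in for G7) blocks on a channel receive, stays parked while main is busy with something else, and becomes runnable again as soon as a value is sent. The helper name waitForResult and the channel names are made up for this illustration.

```go
package main

import (
	"fmt"
	"time"
)

// waitForResult (a hypothetical helper) starts a goroutine that blocks on a
// channel receive. While blocked it does not occupy a run queue slot; it is
// made runnable again when a value arrives on the channel.
func waitForResult() string {
	// Both channels are unbuffered, so a receive blocks until a matching send.
	input := make(chan int)
	output := make(chan string)

	go func() {
		n := <-input // the goroutine is parked here until main sends
		output <- fmt.Sprintf("G7 resumed with %d", n)
	}()

	// main does some other work while the goroutine is parked.
	time.Sleep(10 * time.Millisecond)

	input <- 42     // wakes up the parked goroutine
	return <-output // main itself waits here for the reply
}

func main() {
	fmt.Println(waitForResult()) // prints "G7 resumed with 42"
}
```

The sleep here only simulates main being busy. The program is correct without it, because the channel operations themselves do the waiting.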
