Emojis are a way to convey nonverbal cues. These cues have become an essential part of online chat, product reviews, brand sentiment, and more. This has also led to a growing body of data science research dedicated to emoji-driven storytelling.

With advancements in computer vision and deep learning, it is now possible to detect human emotions from images. In this deep learning project, we will classify human facial expressions to filter and map corresponding emojis or avatars.

About the Dataset:

The facial expression recognition dataset (FER2013) consists of 48x48 pixel grayscale face images. The faces are centered and occupy roughly the same amount of space in each image. Each image is labeled with one of the following seven emotion categories:

0:angry

1:disgust

2:fear

3:happy

4:sad

5:surprise

6:neutral
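The category list above can be written as a small lookup table. The sketch below is illustrative only: `FER_LABELS` and `decode_prediction` are hypothetical names, and it assumes the model outputs one score per class in the same 0 to 6 order as the list.

```python
# Label indices as listed above for the FER2013 dataset.
FER_LABELS = {
    0: "angry",
    1: "disgust",
    2: "fear",
    3: "happy",
    4: "sad",
    5: "surprise",
    6: "neutral",
}


def decode_prediction(scores):
    """Return (label, score) for the highest-scoring class.

    `scores` is assumed to be a sequence of seven numbers, one per class.
    """
    if len(scores) != len(FER_LABELS):
        raise ValueError(f"expected {len(FER_LABELS)} scores, got {len(scores)}")
    best = max(range(len(scores)), key=lambda i: scores[i])
    return FER_LABELS[best], scores[best]


print(decode_prediction([0.05, 0.0, 0.05, 0.7, 0.1, 0.05, 0.05]))  # ('happy', 0.7)
```

If the images are loaded from class-named folders, the index order may differ from this list (folder names are typically sorted alphabetically), so it is worth checking the mapping your loader reports before decoding.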

Facial Emotion Recognition using CNN:

In the steps below, we will build a convolutional neural network (CNN) architecture and train the model on the FER2013 dataset to recognize emotions from images.

Make a file train.py and follow the steps:

- Imports:

- Initialize the training and validation generators:

- Build the convolution network architecture:

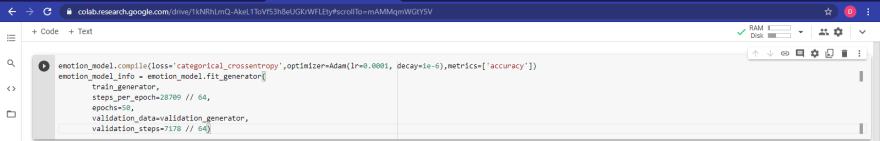
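Since the screenshot is hard to read, here is a minimal Keras sketch of a CNN for 48x48 grayscale input with seven output classes. The layer counts, filter sizes, and dropout rates are assumptions chosen for illustration and may not match the architecture in the image. The helper `flattened_features` (a hypothetical name) traces how the image size shrinks through the layers.

```python
NUM_CLASSES = 7
INPUT_SHAPE = (48, 48, 1)  # height, width, one grayscale channel


def conv_out(size, kernel=3):
    """Side length after an unpadded ('valid') stride-1 convolution."""
    return size - kernel + 1


def pool_out(size, pool=2):
    """Side length after non-overlapping max pooling."""
    return size // pool


def flattened_features():
    """Trace the spatial size through the layers used in build_model()."""
    s = INPUT_SHAPE[0]
    s = conv_out(s)   # Conv2D(32):  48 -> 46
    s = conv_out(s)   # Conv2D(64):  46 -> 44
    s = pool_out(s)   # MaxPooling:  44 -> 22
    s = conv_out(s)   # Conv2D(128): 22 -> 20
    s = pool_out(s)   # MaxPooling:  20 -> 10
    s = conv_out(s)   # Conv2D(128): 10 -> 8
    s = pool_out(s)   # MaxPooling:   8 -> 4
    return s * s * 128  # size of the vector fed to the dense layers


def build_model():
    """Build the (assumed) CNN; requires TensorFlow to be installed."""
    # Imported here so the shape helpers above work without TensorFlow.
    from tensorflow.keras import layers, models

    return models.Sequential([
        layers.Input(shape=INPUT_SHAPE),
        layers.Conv2D(32, (3, 3), activation="relu"),
        layers.Conv2D(64, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        layers.Dropout(0.25),
        layers.Conv2D(128, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        layers.Conv2D(128, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        layers.Dropout(0.25),
        layers.Flatten(),
        layers.Dense(1024, activation="relu"),
        layers.Dropout(0.5),
        layers.Dense(NUM_CLASSES, activation="softmax"),
    ])
```

Calling `build_model().summary()` should list the same shapes that `flattened_features` computes by hand, which is a quick way to confirm the input size is wired correctly.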

- Compile and train the model:

- Save the model weights:

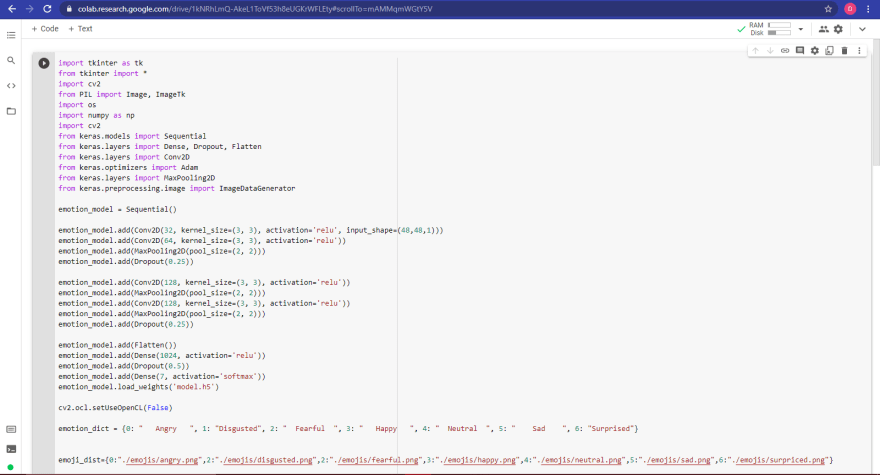

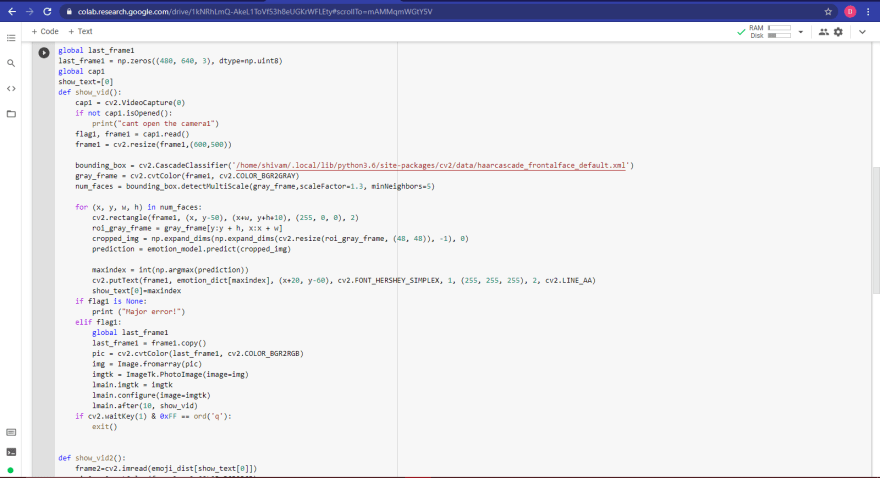
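The generator, compile, train, and save steps can be sketched roughly as follows. This is not the code from the screenshots: the directory names `data/train` and `data/test`, the batch size, learning rate, epoch count, and weights file name are all assumptions, and `ImageDataGenerator` is treated as a legacy API in newer Keras releases.

```python
BATCH_SIZE = 64       # assumed batch size
IMG_SIZE = (48, 48)   # FER2013 image size


def steps_per_epoch(num_images, batch_size=BATCH_SIZE):
    """Number of full batches in one pass over the data."""
    return num_images // batch_size


def make_generators(train_dir="data/train", val_dir="data/test"):
    """Create training and validation generators from class-named folders.

    The directory paths are hypothetical; each is assumed to contain one
    sub-folder per emotion.
    """
    from tensorflow.keras.preprocessing.image import ImageDataGenerator

    rescale = ImageDataGenerator(rescale=1.0 / 255)  # scale pixels to [0, 1]
    common = dict(
        target_size=IMG_SIZE,
        batch_size=BATCH_SIZE,
        color_mode="grayscale",
        class_mode="categorical",
    )
    train_gen = rescale.flow_from_directory(train_dir, **common)
    val_gen = rescale.flow_from_directory(val_dir, **common)
    return train_gen, val_gen


def train(model, epochs=50, weights_path="emotion_model.weights.h5"):
    """Compile, fit, and save the weights of a Keras model passed in by the caller."""
    from tensorflow.keras.optimizers import Adam

    train_gen, val_gen = make_generators()
    # class_indices shows which folder name was assigned to which index.
    print(train_gen.class_indices)

    model.compile(
        loss="categorical_crossentropy",
        optimizer=Adam(learning_rate=1e-4),
        metrics=["accuracy"],
    )
    history = model.fit(
        train_gen,
        steps_per_epoch=steps_per_epoch(train_gen.samples),
        epochs=epochs,
        validation_data=val_gen,
        validation_steps=steps_per_epoch(val_gen.samples),
    )
    model.save_weights(weights_path)
    return history
```

Note that `flow_from_directory` assigns class indices from the sorted folder names, so the index order can differ from the dataset's numeric labels. Printing `class_indices` once, as above, avoids mislabeling predictions later.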

- Using OpenCV's Haar cascade XML file, detect the bounding boxes of faces in the webcam feed and predict the emotions:
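A rough sketch of this detection and prediction loop is shown below. It assumes the `opencv-python` package (which bundles the frontal-face cascade under `cv2.data.haarcascades`), a trained Keras model passed in as `model`, and a `labels` list in the same order as the model's outputs. Picking only the largest face with `largest_face` is a simplification introduced here, not something the article specifies.

```python
def largest_face(faces):
    """Return the (x, y, w, h) box with the largest area, or None if empty."""
    faces = list(faces)
    if not faces:
        return None
    return max(faces, key=lambda box: box[2] * box[3])


def run_webcam_demo(model, labels):
    """Detect a face in each webcam frame and overlay the predicted emotion.

    Press 'q' in the preview window to stop.
    """
    # Imported here so largest_face() can be used without OpenCV installed.
    import cv2
    import numpy as np

    detector = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml"
    )
    cap = cv2.VideoCapture(0)  # default webcam
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            faces = detector.detectMultiScale(gray, scaleFactor=1.3, minNeighbors=5)
            box = largest_face(faces)
            if box is not None:
                x, y, w, h = (int(v) for v in box)
                # Crop the face, resize to the model's input size, scale to [0, 1].
                roi = cv2.resize(gray[y:y + h, x:x + w], (48, 48))
                batch = roi.astype("float32")[np.newaxis, :, :, np.newaxis] / 255.0
                scores = model.predict(batch, verbose=0)[0]
                label = labels[int(np.argmax(scores))]
                cv2.rectangle(frame, (x, y), (x + w, y + h), (255, 0, 0), 2)
                cv2.putText(frame, label, (x, y - 10),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.9, (255, 255, 255), 2)
            cv2.imshow("Emotion", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()
```

The pixel scaling here (dividing by 255) is assumed to match whatever preprocessing was used during training; if the two differ, predictions will be unreliable.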

Code for GUI and mapping with emojis:

Create a folder named emojis and save the emojis corresponding to each of the seven emotions in the dataset.

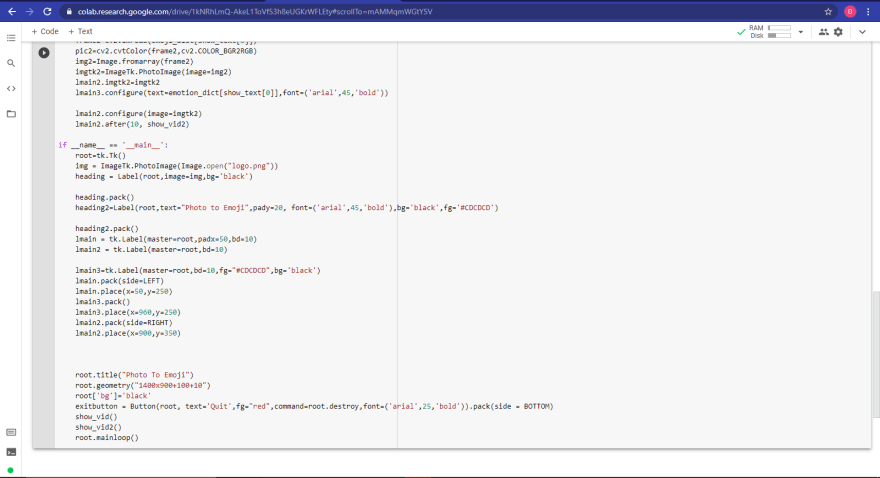

Paste the code below into gui.py and run the file.
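As the original GUI code is not reproduced in text form, the following is only a minimal sketch of the emotion-to-emoji mapping with a bare Tkinter window. The file naming scheme (one PNG per emotion, named after the label) and the helper names `emoji_path`, `missing_emojis`, and `show_emoji` are assumptions for illustration.

```python
from pathlib import Path

EMOJI_DIR = Path("emojis")  # folder created in the step above
# Assumed naming scheme: emojis/angry.png, emojis/happy.png, and so on.
EMOTIONS = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral"]


def emoji_path(label, emoji_dir=EMOJI_DIR):
    """Return the image path for an emotion label."""
    if label not in EMOTIONS:
        raise ValueError(f"unknown emotion: {label!r}")
    return Path(emoji_dir) / f"{label}.png"


def missing_emojis(emoji_dir=EMOJI_DIR):
    """List the emotions that have no image file yet."""
    return [label for label in EMOTIONS if not emoji_path(label, emoji_dir).is_file()]


def show_emoji(label):
    """Open a small window showing the emoji for one emotion."""
    import tkinter as tk

    root = tk.Tk()
    root.title(f"Detected emotion: {label}")
    # PhotoImage reads PNG files with Tk 8.6 or later.
    image = tk.PhotoImage(file=str(emoji_path(label)))
    tk.Label(root, image=image).pack()
    tk.Label(root, text=label, font=("Arial", 24)).pack()
    root.mainloop()
```

Running `missing_emojis()` before starting the GUI is a quick check that all seven images are in place; `show_emoji("happy")` then displays a single emoji for a given prediction.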

Summary:

In this deep learning project for beginners, we built a convolutional neural network to recognize facial emotions. We trained the model on the FER2013 dataset and then mapped the predicted emotions to the corresponding emojis or avatars.

Using OpenCV’s Haar cascade XML file, we get the bounding boxes of the faces in the webcam feed. We then feed these face regions to the trained model for classification.
