I have been working on a trivia app to revise software engineering concepts, since I am currently preparing for interviews.

The idea was simple: I used the OpenAI API to generate a question with 4 options, a correct answer, and a short explanation of it.

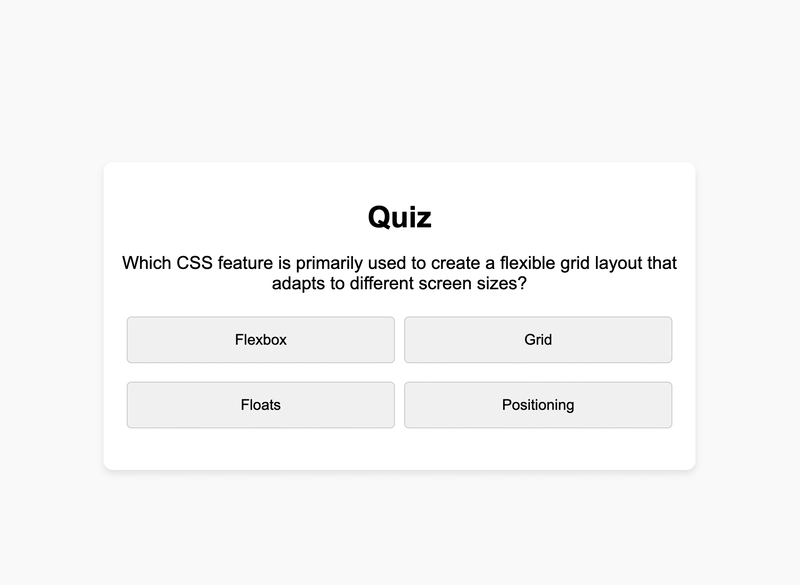

I displayed this in a form where you can then practice by clicking on the right option. A simple project.

The only problem was that OpenAI kept sending the same response.

I tried tweaking the prompt to tell it not to repeat the same question, but that didn't seem to work.

Finally this is what I had to do -

Topics - I made an array of topics, picked one at random for each request, and sent it with the prompt. With that change, OpenAI started giving new responses. I'm not saying questions never repeat anymore, but it is much, much better.
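A rough sketch of that topic-picking step might look like the following. The topic list, the function names `pickRandomTopic` and `buildMessages`, and the shortened prompt text are illustrative assumptions, not the app's actual code.

```javascript
// Hypothetical topic list; the real app's topics are not shown in the post.
const topics = ['data structures', 'operating systems', 'databases', 'networking'];

// Pick one topic uniformly at random.
// `random` is injectable so the choice can be made deterministic in tests.
function pickRandomTopic(list, random = Math.random) {
  if (list.length === 0) {
    throw new Error('topic list must not be empty');
  }
  return list[Math.floor(random() * list.length)];
}

// Build the messages array, interpolating the chosen topic into the system prompt.
// The prompt text is abbreviated here for illustration.
function buildMessages(topic) {
  return [
    {
      role: 'system',
      content: `You are a helpful assistant who is creating a quiz application related to ${topic} in general.`,
    },
    {
      role: 'user',
      content: 'Create a quiz question with 4 options and one correct answer.',
    },
  ];
}

const topic = pickRandomTopic(topics);
const messages = buildMessages(topic);
console.log(messages[0].content);
```

Because the topic changes between requests, the prompt text itself changes, which is probably why the responses stop being identical.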

Here is the prompt -

    {
      role: 'system',
      content: `You are a helpful assistant who is creating a quiz application related to ${topic} in general. Try not to repeat the topics from previous questions.`,
    },
    {
      role: 'user',
      content: `Create a quiz question with 4 options and one correct answer. The question should be related to technology. It should have 4 options. It should have 1 correct option. It should also have a small description or explanation of the answer. The response will be in the form of a JSON object with the following structure: {
        question: "What is the capital of France?",
        options: [
          "Paris",
          "London",
          "Berlin",
          "Madrid"
        ],
        answer: "Paris",
        explanation: "Paris is the capital of France."
      } Do not include any other text or explanation.`,
    }
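Since the prompt asks for a JSON object, the reply has to be parsed before it can be shown in the form. The post does not show that step, so this is only a minimal sketch under assumptions: `parseQuizResponse` is a hypothetical helper, and stripping a Markdown code fence is a guess at how a reply might be wrapped.

```javascript
// Parse and validate the model's reply against the structure requested in the prompt.
// Returns the quiz object, or throws an Error describing what is wrong.
function parseQuizResponse(raw) {
  // Assumption: the reply may be wrapped in a Markdown code fence, so strip one if present.
  const text = raw
    .trim()
    .replace(/^`{3}(?:json)?\s*/i, '')
    .replace(/\s*`{3}$/, '');

  const quiz = JSON.parse(text); // throws SyntaxError if the reply is not valid JSON

  if (quiz === null || typeof quiz !== 'object') {
    throw new Error('expected a JSON object');
  }
  if (typeof quiz.question !== 'string' || quiz.question.length === 0) {
    throw new Error('missing question');
  }
  if (!Array.isArray(quiz.options) || quiz.options.length !== 4) {
    throw new Error('expected exactly 4 options');
  }
  if (!quiz.options.includes(quiz.answer)) {
    throw new Error('answer must be one of the options');
  }
  if (typeof quiz.explanation !== 'string') {
    throw new Error('missing explanation');
  }
  return quiz;
}

// Usage with a reply shaped like the example in the prompt.
const sample =
  '{"question":"What is the capital of France?","options":["Paris","London","Berlin","Madrid"],"answer":"Paris","explanation":"Paris is the capital of France."}';
const quiz = parseQuizResponse(sample);
console.log(quiz.answer); // Paris
```

Validating the shape up front means a malformed reply can be retried instead of breaking the form.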

If you guys want to check out the page here you go - https://express-quiz-app-4qqz.onrender.com/

I am constantly trying to improve it as a hobby.

Thank you if you have read this far. Have a good day!

Top comments (1)

I’ve noticed that the OpenAI API sometimes returns the same response when you send the same prompt multiple times. I recently found a few practical ways to handle this while exploring Revise With Me and thought I’d share:

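Adjust the Temperature: A higher temperature parameter encourages more varied responses, while lower values make outputs more deterministic. The Chat Completions API accepts values from 0 to 2 and defaults to 1, so a value such as 0.7–1.0 only adds variety if the request was previously using a lower setting.

Use Top-p Sampling: The top_p parameter restricts sampling to the most likely tokens whose combined probability reaches p. Values closer to 1 (the default) let the model choose from a broader set of possible outputs.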

Slightly Reword Prompts: Even minor changes or adding context can produce different results.

Use System Prompts or Instructions: Giving the model extra guidance can change the style and content of responses.
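As a sketch of the temperature suggestion, the request body could set the parameter explicitly. The helper names `buildRequestBody` and `requestQuiz` and the model name are assumptions for illustration; the endpoint and the `temperature` field come from the OpenAI Chat Completions API. A `top_p` field could be added the same way, though OpenAI's documentation generally suggests adjusting either temperature or top_p, not both.

```javascript
// Build a Chat Completions request body with an explicit sampling temperature.
// The model name is an assumption; use whichever model the app is configured for.
function buildRequestBody(messages, { temperature = 1, model = 'gpt-4o-mini' } = {}) {
  if (temperature < 0 || temperature > 2) {
    throw new RangeError('temperature must be between 0 and 2');
  }
  return { model, messages, temperature };
}

// Send the request using the built-in fetch (Node 18+ or a browser).
// Defined here for illustration only; it is not called in this snippet.
async function requestQuiz(messages, apiKey, options) {
  const response = await fetch('https://api.openai.com/v1/chat/completions', {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify(buildRequestBody(messages, options)),
  });
  if (!response.ok) {
    throw new Error(`OpenAI API request failed with status ${response.status}`);
  }
  const data = await response.json();
  return data.choices[0].message.content;
}

// Usage: build a body with a higher-than-default temperature.
const body = buildRequestBody(
  [{ role: 'user', content: 'Create a quiz question.' }],
  { temperature: 1.2 }
);
console.log(body.temperature); // 1.2
```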

Has anyone else experienced this issue? What methods have worked best for you to get varied responses from OpenAI?