Swagger files (also known as the OpenAPI Specification) are one of the most popular ways of documenting APIs, and Excel sheets provide a simple way of writing structured data. Anybody can write data in an Excel sheet, irrespective of their programming skills. vREST NG (an enterprise-ready application for automated API testing) combines the power of both to make your API testing experience more seamless. This approach is known as Data Driven Testing.

Data Driven Testing is an approach in which test data is written separately from the test logic or script.
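To make this separation concrete, here is a minimal, tool-agnostic Python sketch. It is not how vREST NG is implemented. The test data sits in a CSV string (in practice, a CSV file), and the test logic is a single function executed once per row. The column names and `fake_create_contact`, which stands in for a real API call, are hypothetical.

```python
import csv
import io

# Test data lives apart from the test logic; in practice this would be a CSV file.
TEST_DATA = """iterationSummary,name,expectedStatusCode
Valid name,Alice,200
Empty name,,400
"""


def fake_create_contact(name: str) -> int:
    """Hypothetical stand-in for the API under test: returns an HTTP status code."""
    return 400 if not name or len(name) > 35 else 200


def run_iteration(row: dict) -> bool:
    """The test logic: written once, executed for every data row."""
    actual = fake_create_contact(row["name"])
    return actual == int(row["expectedStatusCode"])


def run_all(csv_text: str) -> dict:
    """Run one iteration per CSV row, keyed by the row's iterationSummary."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return {row["iterationSummary"]: run_iteration(row) for row in rows}


if __name__ == "__main__":
    for summary, passed in run_all(TEST_DATA).items():
        print(f"{summary}: {'PASS' if passed else 'FAIL'}")
```

Adding a new test condition then means adding a CSV row; the function itself does not change.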

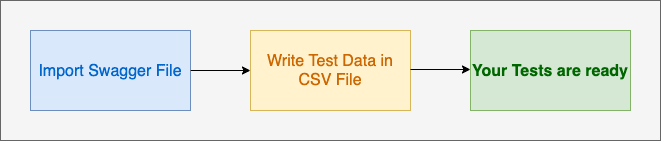

So, this is how the process looks like:

vREST NG uses Swagger files to generate all of the test logic and sample test data CSV files. It then reads the test data from the CSV files, iterates over their rows, and runs the iterations one by one. In this post, we will look at the following in detail:

- How to generate test cases from Swagger files.

- How to feed test data to the generated test cases through an Excel sheet.

How to perform Data Driven API Testing in vREST NG

To illustrate the process, I will use a sample test application, a contacts application that provides CRUD APIs. I will guide you through the following steps:

- Set up the test application

- Download and Install vREST NG Application

- Perform Data Driven API Testing in vREST NG

Set Up the Test Application

You may skip this step if you want to follow the instructions for your own test application.

Otherwise, download the sample test application from this repository link. You will also need to install the following to set up this sample test application:

- NodeJS (Tested with v10.16.2)

- MongoDB (Tested with v3.0.15)

To set up the application, follow the instructions in the repository's README file.

Download and Install vREST NG Application

Now, download the application from the vREST NG website and install it. Installation is straightforward, but if you need OS-specific instructions, you may follow this guide link.

After installation, start the vREST NG application and choose the vREST NG Pro version when prompted.

Next, set up a project by dragging any empty directory from your file system into the vREST NG workspace area. vREST NG will treat it as a project and store all the tests in that directory. For more information on setting up a project, please read this guide link.

For a quick start, if you don't want to follow the whole process and just want to see the end result, you may download this project directory and add it to the vREST NG application directly.

Performing Data Driven API Testing in vREST NG

vREST NG provides a quick three-step process to perform data driven API testing:

(a) Import the Swagger File

(b) Write Test Data in CSV Files

(c) [Optional] Setup Environment

Now, we will see these steps in detail:

(a) Import the Swagger File

To import the Swagger file, click the Importer button in the top-left corner of the vREST NG application.

An import dialog window will open. In this dialog window:

- Select "Swagger" as the Import Source.

- Tick the "Generate Data Driven Tests" option. If this option is ticked, the vREST NG Importer will generate data driven test cases for each API spec available in the Swagger file.

- Provide the Swagger file. For this demonstration, we will use the Swagger file from the test application repository: Download Swagger File

The dialog window will look something like this. Now, click the Import button to proceed.
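Conceptually, with Generate Data Driven Tests ticked, the importer walks every operation in the Swagger file, creates one test case per operation, groups the cases into suites by tag, and derives CSV columns from each operation's inputs. The Python sketch below approximates that idea on a tiny, hypothetical Swagger 2.0 document; vREST NG's actual generator and column layout may differ. The iterationSummary, expectedBody and expectedSchema columns are named in this post, while expectedStatusCode is an assumed name.

```python
from collections import defaultdict

HTTP_METHODS = {"get", "post", "put", "patch", "delete", "head", "options"}

# Hypothetical, heavily trimmed Swagger 2.0 document for a contacts API.
SPEC = {
    "swagger": "2.0",
    "paths": {
        "/contacts": {
            "post": {
                "tags": ["contacts"],
                "operationId": "createContact",
                "parameters": [
                    {"name": "body", "in": "body",
                     "schema": {"type": "object",
                                "properties": {"name": {"type": "string"},
                                               "email": {"type": "string"}}}}
                ],
            },
            "get": {"tags": ["contacts"], "operationId": "listContacts",
                    "parameters": [{"name": "limit", "in": "query"}]},
        },
        "/contacts/{id}": {
            "delete": {"tags": ["contacts"], "operationId": "deleteContact",
                       "parameters": [{"name": "id", "in": "path"}]},
        },
    },
}

# expectedStatusCode is an assumed column name; the other two appear in the post.
EXPECTATION_COLUMNS = ["expectedStatusCode", "expectedBody", "expectedSchema"]


def csv_columns(operation: dict) -> list:
    """Derive one CSV column per request input of an operation."""
    columns = ["iterationSummary"]
    for param in operation.get("parameters", []):
        if param.get("in") == "body":
            # Expand inline body-schema properties into individual columns.
            columns.extend(list(param.get("schema", {}).get("properties", {})))
        else:
            columns.append(param["name"])
    return columns + EXPECTATION_COLUMNS


def generate_suites(spec: dict) -> dict:
    """Create one test case per operation and group the cases into suites by tag."""
    suites = defaultdict(list)
    for path, methods in spec["paths"].items():
        for method, operation in methods.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            case = {"name": f"{method.upper()} {path}",
                    "csvColumns": csv_columns(operation)}
            for tag in operation.get("tags", ["default"]):
                suites[tag].append(case)
    return dict(suites)
```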

The import process has done the following things so far:

- It has generated a test case for each API spec available in the Swagger (OpenAPI) file, and a test suite for each tag available in the Swagger file.

-

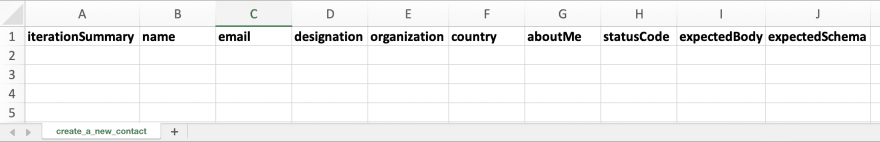

It has automatically created the sample CSV files against each test case with desired columns according to your swagger file as shown in the following image.

We will discuss in detail on how you may fill this excel sheet later in this post.

- The generated CSV files are also automatically linked to their test cases, as shown in the following image.

Before every execution, the test case reads the data from the linked CSV file, converts it into JSON format, and stores it in a variable named data. The test case then iterates over this data and runs the iterations. So, if you make a change in the CSV file, just run the test case again: it always picks up the latest state of the CSV file, so there is no need to import again and again.

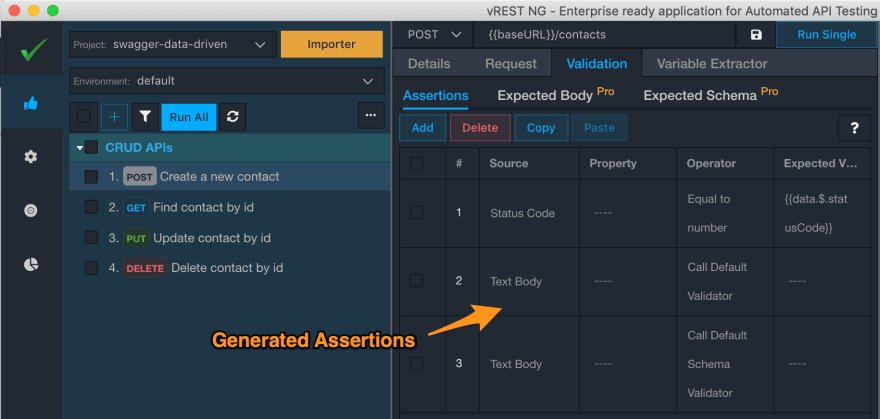

- It has automatically inserted variables into the API request parameters according to the API definitions in the Swagger file. The values of these variables are picked up automatically from the linked CSV file.

- It has automatically added the response validation logic as well. In the following image, the Status Code assertion validates the status code of the API response, the Text Body with Default Validator assertion compares the expected response body with the actual response body, and the Text Body with Default Schema Validator assertion validates the API response against a JSON schema.

The expected status code, the expected response body, and the expected schema name are all picked up from the linked CSV file.

- It has imported all the Swagger schema definitions into the Schemas section of the Configuration tab.

You may refer to these schema definitions in the Expected Schema tab, as discussed earlier. In the CSV file, you just need to specify the respective schema name for each test iteration in the expectedSchema column.
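The three generated assertions can be approximated in plain Python to show what each CSV column drives. This is a sketch under assumptions, not vREST NG's validator: the body comparison here is JSON equality, the schema check supports only a tiny subset of JSON Schema (type, required, properties), and the schema names `Contact` and `Error` as well as the expectedStatusCode column name are hypothetical. A blank expectedBody is treated as a mismatch, which matches the failing first run described later in this post. In practice, a complete validator such as the `jsonschema` package would replace `matches_schema`.

```python
import json

# Hypothetical registry standing in for the Schemas section of the Configuration tab.
SCHEMAS = {
    "Contact": {"type": "object", "required": ["name"],
                "properties": {"name": {"type": "string"}, "email": {"type": "string"}}},
    "Error": {"type": "object", "required": ["message"],
              "properties": {"message": {"type": "string"}}},
}

JSON_TYPES = {"object": dict, "array": list, "string": str,
              "number": (int, float), "integer": int, "boolean": bool}


def matches_schema(value, schema: dict) -> bool:
    """Tiny subset of JSON Schema: checks type, required and nested properties only."""
    expected_type = JSON_TYPES.get(schema.get("type"))
    if expected_type and not isinstance(value, expected_type):
        return False
    if isinstance(value, bool) and schema.get("type") in ("number", "integer"):
        return False  # bool is a subclass of int in Python
    if isinstance(value, dict):
        if any(key not in value for key in schema.get("required", [])):
            return False
        for key, sub_schema in schema.get("properties", {}).items():
            if key in value and not matches_schema(value[key], sub_schema):
                return False
    return True


def validate_iteration(row: dict, status: int, body_text: str) -> list:
    """Return the names of the assertions that failed for one CSV row."""
    failures = []
    if status != int(row["expectedStatusCode"]):
        failures.append("statusCode")
    actual = json.loads(body_text)
    expected_body = row.get("expectedBody", "")
    # A blank expectedBody counts as a mismatch until it is filled in.
    if not expected_body or json.loads(expected_body) != actual:
        failures.append("body")
    schema_name = row.get("expectedSchema")
    if schema_name and not matches_schema(actual, SCHEMAS[schema_name]):
        failures.append("schema")
    return failures
```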

(b) Write Test Data in CSV Files

We have already seen the data file generated by the import process. Here is the generated file again for the Create Contact API:

In this sample file, you may add test data for various iterations of the Create Contact API. In the iterationSummary column, provide a meaningful summary for each iteration; this summary will show up in the Results tab of the vREST NG application. You will need to fill in this test data yourself, or you may generate it through any external script.

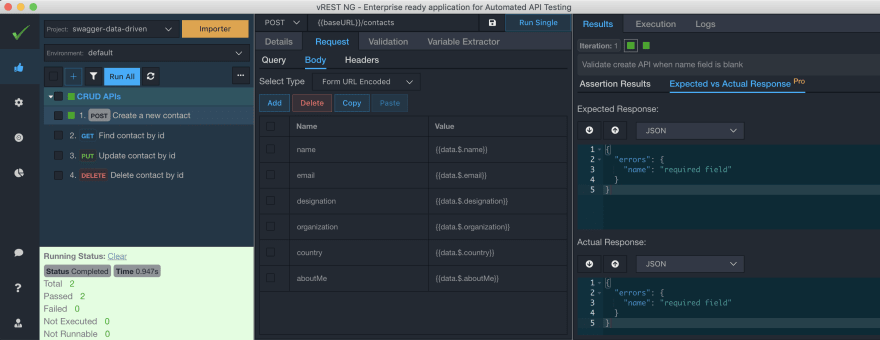
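Since the test data is just a CSV file, an external script can produce it. Here is a minimal Python sketch using the standard `csv` module. The column list is assumed to mirror the generated Create Contact file; `name`, `email` and `expectedStatusCode` are illustrative column names, and the generated values are hypothetical.

```python
import csv
import io
from pathlib import Path

# Assumed column layout; check it against the header of your generated CSV file.
COLUMNS = ["iterationSummary", "name", "email",
           "expectedStatusCode", "expectedBody", "expectedSchema"]


def build_rows(count: int) -> list:
    """Generate `count` hypothetical valid-contact iterations."""
    return [{"iterationSummary": f"Create contact #{i}",
             "name": f"Contact {i}",
             "email": f"contact{i}@example.com",
             "expectedStatusCode": "200"}
            for i in range(1, count + 1)]


def render_test_data(rows: list) -> str:
    """Serialise rows as CSV text; columns missing from a row are left blank."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, restval="", lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def write_test_data(path: Path, rows: list) -> None:
    """Overwrite the linked CSV file with freshly generated iterations."""
    path.write_text(render_test_data(rows), encoding="utf-8")
```

For example, `write_test_data(Path("create_contact.csv"), build_rows(10))` would overwrite a (hypothetically named) data file with ten iterations, which the linked test case would pick up on its next run.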

Now, let's add some test iterations in the linked CSV file.

With the above CSV file, we are checking two test conditions of our Create Contact API:

- When the name field is empty

- When the name field is longer than the 35-character limit.

In the above CSV file, we have intentionally left the expectedBody column blank. We don't need to fill in this column ourselves, because vREST NG can generate its value for us.
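The two conditions above are classic boundary-value cases, so their rows can also be generated rather than typed. Below is a small Python sketch, assuming the 35-character limit stated above; the optional exact-limit row additionally assumes that a 35-character name is accepted, which this post does not state. expectedBody is left blank on purpose, so that vREST NG can fill it in later.

```python
MAX_NAME_LENGTH = 35  # limit stated for the Create Contact API


def name_length_iterations(limit: int = MAX_NAME_LENGTH,
                           include_boundary: bool = False) -> list:
    """Build CSV rows for the name-field conditions; expectedBody stays blank on purpose."""
    cases = [("Name is empty", ""),
             (f"Name longer than {limit} characters", "a" * (limit + 1))]
    if include_boundary:
        # Assumes a name of exactly `limit` characters is still accepted.
        cases.append((f"Name of exactly {limit} characters", "a" * limit))
    return [{"iterationSummary": summary, "name": name, "expectedBody": ""}
            for summary, name in cases]
```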

Before executing the test case, we need to configure the baseURL variable for the test application in the Configuration tab like this:

In this section, you can configure your various environments, such as prod, dev, and staging, along with their settings, using variables.
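Conceptually, the environment settings and the CSV row together supply the values for the variables in a request. The Python sketch below shows one way such resolution could work. The `{{name}}` placeholder syntax, the environment URLs and the rule that row values override environment values are assumptions for illustration; only the baseURL variable name comes from this post.

```python
import re

# Hypothetical environment configurations; only the baseURL name comes from the post.
ENVIRONMENTS = {
    "dev": {"baseURL": "http://localhost:3000"},
    "staging": {"baseURL": "https://staging.example.com"},
    "prod": {"baseURL": "https://api.example.com"},
}

# The {{name}} placeholder syntax is an assumption made for this sketch.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def resolve(template: str, env_name: str, row: dict) -> str:
    """Fill placeholders; CSV row values take precedence over environment values."""
    variables = {**ENVIRONMENTS[env_name], **row}

    def lookup(match: re.Match) -> str:
        key = match.group(1)
        if key not in variables:
            raise KeyError(f"undefined variable: {key}")
        return str(variables[key])

    return PLACEHOLDER.sub(lookup, template)
```

Switching from dev to prod then changes only the environment name passed in, while the test logic and the CSV data stay the same.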

Now, let's execute this test in the vREST NG application. Both iterations fail because the expected response body doesn't match the actual response body, as shown in the following image:

Now, click the "Copy Actual to Expected" button for each iteration. vREST NG will copy the actual response body directly into the expectedBody column of the linked CSV file.

If you look at the CSV file again after this operation, you can see that vREST NG has filled in the expectedBody column for you, as shown in the following image.

Note: If you have opened this CSV file in Microsoft Excel, you will need to close and reopen it to see the changes. Some code editors, e.g. VS Code, automatically detect changes on the file system and reflect them in real time.
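The effect of "Copy Actual to Expected" on the file can be mimicked with a few lines of Python. This sketch is an assumption about the effect, not vREST NG's code: it rewrites one data row's expectedBody cell with the actual response body and keeps all other cells unchanged. Note how the `csv` module wraps the JSON in quotes and doubles its inner quotes, one reason it helps to let a tool fill this column rather than typing it by hand.

```python
import csv
import io


def copy_actual_to_expected(csv_text: str, row_index: int, actual_body: str) -> str:
    """Return new CSV text with actual_body stored in the expectedBody cell of one data row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    fieldnames = reader.fieldnames  # header row, preserved as-is
    rows = list(reader)
    rows[row_index]["expectedBody"] = actual_body  # row_index counts data rows from 0
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()
```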

Now, if you execute the test again, you can see that the test iterations are now passing.

You may also see the expected vs actual response for the selected test iteration:

You may also see the execution details of the selected iteration in the Execution tab:

In this way, you can execute test iterations for an API via a CSV file: just add iterations to your CSV file and run the test in the vREST NG application directly, with no need to import again. It all just works seamlessly, which can considerably increase your testing efficiency and productivity.

(c) [Optional] Setup Environment

For the generated tests, you may also need to set up the initial application or database state before executing them, so that you can perform regression testing in an automated way. Some ways of setting up the initial state are:

- Restoring the database state from backups

- Executing an external command or script

- Invoking a REST API to set up the initial state

Now, let's see how you can execute an external command to restore the database state from a backup. Suppose that, for our sample test application, we have already taken a database dump and would like to restore it before executing the test case. As we are using MongoDB for our sample test application, we can use the mongorestore command for this purpose.

You may specify the command as shown in the following image:

The above command restores the database from the backup that already exists in the dumps folder of the vREST NG project directory.

Note: Make sure the mongorestore command is in your PATH; otherwise, you will need to provide the full path to the command.
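If you ever want to run the same restore outside vREST NG, for example from a CI script, a minimal Python equivalent could look like this. It is a sketch: `--drop` is a real mongorestore option that drops each collection before restoring it, but whether the command used in this post passes it is not shown, and the `dumps` folder layout is taken from the text above. The PATH check mirrors the note above.

```python
import shutil
import subprocess
from pathlib import Path


def build_restore_command(dump_dir: Path, executable: str = "mongorestore") -> list:
    """Build the mongorestore invocation; --drop replaces existing collections."""
    return [executable, "--drop", str(dump_dir)]


def restore_database(project_dir: Path, executable: str = "mongorestore") -> None:
    """Restore the dump stored in <project_dir>/dumps before a test run."""
    # Fail early with a clear message if the binary is neither on PATH nor a valid full path.
    resolved = shutil.which(executable)
    if resolved is None:
        raise FileNotFoundError(f"{executable} not found on PATH; pass its full path instead")
    cmd = build_restore_command(project_dir / "dumps", resolved)
    subprocess.run(cmd, check=True)  # raises CalledProcessError on a non-zero exit code
```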

I would like to hear everybody's feedback on whether you find this approach useful for your API testing needs. Do like this post if you find it helpful. Feel free to contact me if you need any help or if you want to use the vREST NG Pro version for free.

Top comments (3)

This is indeed a very "enterprisy" way of testing. I'm pretty sure that this tool...

Managers will love it!

But then a perfectly tested API goes into integration testing and, boom, fails instantly, because your tool only does the most "boring" tests. What do I mean by that?

So I'm afraid that this tool generates a lot of manual work (-> writing the CSV files), but isn't worth the effort, because it is destined to miss those errors that are likely to occur, e.g. wrong character encoding, json format errors, concurrency, load tests, connections not being closed / timeouts, memory leaks, ...

But I have to admit that I've built pretty similar tools for testing. They were not as beautiful as yours, not as generic, and definitely not suited to be released as a product on their own. Now my opinion of data driven testing is that it should be renamed to data testing, because that's the only thing being tested thoroughly.

As long as one doesn't expect that a data driven testing tool helps in making an API production-ready I guess it's okay.

Hi Thorsten,

Thanks for the detailed feedback. It was really enlightening to see how end users actually perceive my post.

Few points for clarification:

I do not agree with the statement that the tool generates a lot of manual work for writing the CSV files; please correct me if I am wrong. Rather, it saves time by separating the test logic from the test data. It even opens up the possibility for the end user to generate those CSV files through an external script.

vREST NG is suitable for validating cases like wrong character encoding, JSON format errors, etc. As of now, it is not possible to validate concurrency, load, etc., because load testing is not supported yet, but vREST NG is architected to handle this case as well.

I agree with @Thorsten Hirsch.

I don't want to be mean or disrespectful, but this sounds to me like a tool mainly targeted at managers (test cases for everything). The downside of this whole solution is its effectiveness. All of it can be achieved more effectively with Schemathesis, without the need to manually create CSV files! As a bonus, you can use it either as a CLI or programmatically.