title: [Gemini][MCP] Using Gemini on Cline to call MCP functions

published: false

date: 2025-03-22 00:00:00 UTC

tags:

canonical_url: https://www.evanlin.com/til-cline-gemini-mcp/

---

## Foreword

MCP has been a hot topic lately, but most discussions of it center on Anthropic's Claude or other language models. This article covers the basic principles of MCP and shows how to call MCP Servers with Google Gemini. I hope it helps you organize what you know about the topic.

## What is _MCP_ (Model Context Protocol)

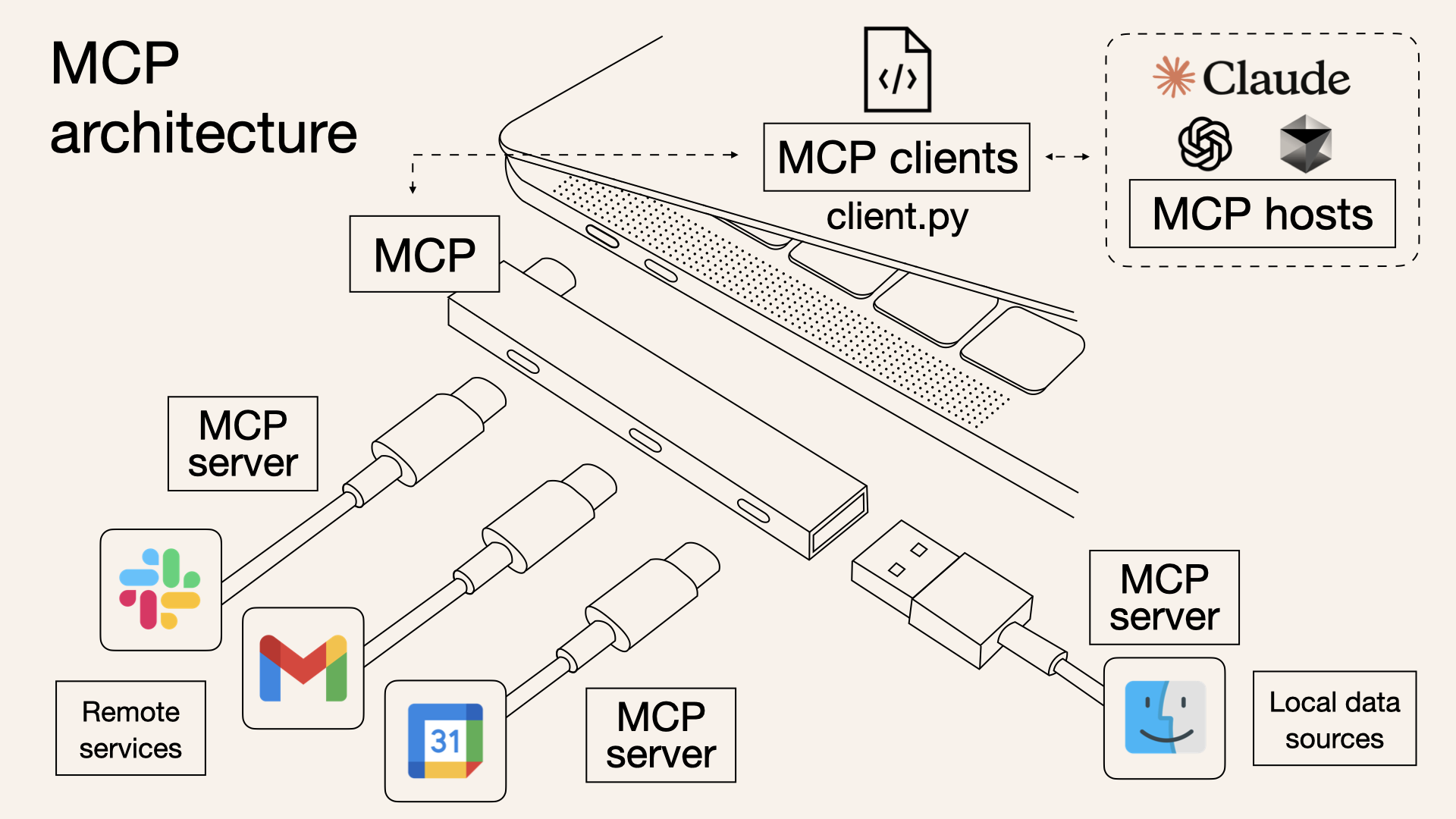

[According to Anthropic's documentation](https://docs.anthropic.com/zh-TW/docs/agents-and-tools/mcp): MCP is an open protocol for standardizing how applications provide context to large language models (LLMs). You can think of MCP as the USB-C interface for AI applications. Just as USB-C provides a standardized way for your devices to connect to various peripherals and accessories, MCP provides a standardized way for AI models to connect to different data sources and tools.

Here is also an architecture diagram from a YouTube video, [https://www.youtube.com/watch?v=McNRkd5CxFY&t=17s](https://www.youtube.com/watch?v=McNRkd5CxFY&t=17s), which makes MCP easier to understand.

(Image source [技术爬爬虾 TechShrimp](https://www.youtube.com/@Tech_Shrimp) :[What is MCP? What are the technical principles? Understand everything about MCP in one video. Windows system configuration MCP, Cursor, Cline uses MCP](https://www.youtube.com/watch?v=McNRkd5CxFY&t=17s))

As the diagram shows, MCP lets AI clients (commonly ChatGPT, Claude, Gemini, and so on) directly "operate" these services.

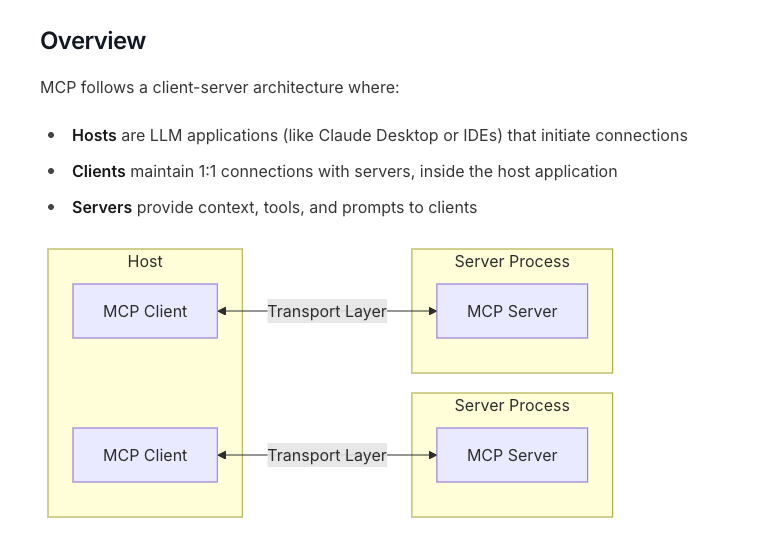

### Architecture Diagram in MCP Services

(Architecture Diagram: [MCP Core architecture](https://modelcontextprotocol.io/docs/concepts/architecture))

This diagram clearly describes MCP's client-server architecture, so I will go over the roles once more here.

- **MCP Host**: An application that uses MCP services, such as Cline, Windsurf, or Claude Desktop.

- **MCP Server**: This has been introduced in many places, so I won't go into detail here; example code will come later. It exposes external functions through a common protocol, so every MCP Host can use them in the same way.

- **MCP Client**: Once a Host enables a particular MCP Server, the Host creates a corresponding client for it, and the server's description is written into the prompt. Details follow later.
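The relationship between the three roles can be sketched in a few lines of Python. This is only a toy model, not the real MCP SDK: the class names `McpHost`, `McpClient`, and `McpServer` are hypothetical, and the one thing it tries to show is that the Host keeps one client per enabled server.

```python
from dataclasses import dataclass, field


@dataclass
class McpServer:
    """A program that exposes a named set of tools (hypothetical model)."""
    name: str
    tools: list[str]


@dataclass
class McpClient:
    """Lives inside the Host and talks to exactly one server."""
    server: McpServer

    def list_tools(self) -> list[str]:
        return list(self.server.tools)


@dataclass
class McpHost:
    """The application (for example an IDE plugin) that owns the clients."""
    clients: dict[str, McpClient] = field(default_factory=dict)

    def enable(self, server: McpServer) -> None:
        # Enabling a server creates one client dedicated to it.
        self.clients[server.name] = McpClient(server)

    def all_tools(self) -> dict[str, list[str]]:
        return {name: client.list_tools() for name, client in self.clients.items()}


host = McpHost()
host.enable(McpServer("time", ["get_current_time", "convert_time"]))
print(host.all_tools())
```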

## MCP Operation Details

For complete details, refer to the video referenced below. I have adapted its content to Google Gemini. Besides replacing the DeepSeek model used in the video, this also makes the whole setup a better fit for applications with information security requirements.

##### Reference Video:

[技术爬爬虾 TechShrimp](https://www.youtube.com/@Tech_Shrimp) [How does MCP connect to large models? Grabbing AI prompts, disassembling the underlying principles of MCP](https://www.youtube.com/watch?v=wiLQgCDzp44)

### Establish an AI API Gateway through CloudFlare

(To view these details, you need to use OpenRouter or an OpenAI-compatible provider.)

- Create a [Cloudflare account](https://dash.cloudflare.com/)

- Create AI -> API Gateway

- Select OpenRouter as the provider, and remember to [create an account on OpenRouter](https://openrouter.ai/)

To view the MCP communication details, you have to go through OpenAI or OpenRouter. The [video](https://www.youtube.com/watch?v=wiLQgCDzp44) has the complete tutorial, so I will paste only the relevant details here.

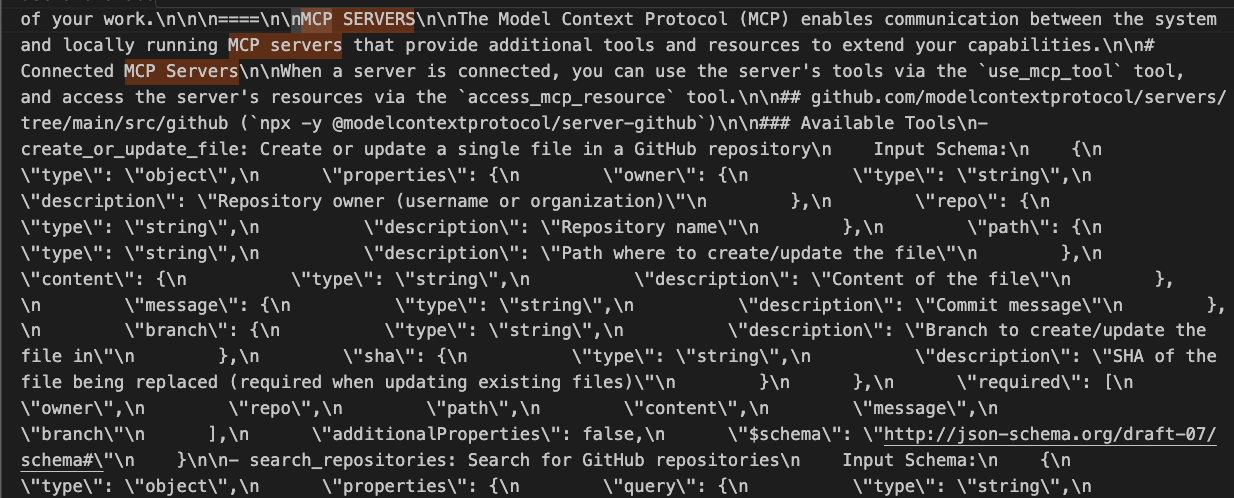

The screenshot above shows the MCP communication mechanism, captured through Cloudflare:

- Every installed MCP Server, along with the functions it provides, is sent to the model as part of the prompt.

- What each MCP Server can do is written into the prompt (the picture shows the GitHub Repository MCP Server).

This point is very important and comes up again in the section on the difference between MCP and Function Call.
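To make "tools are written into the prompt" concrete, here is a minimal sketch of how a Host might turn server and tool descriptions into prompt text. The `SERVERS` data, its field names, and the `render_tools_prompt` helper are all assumptions made for illustration; the actual prompt a Host such as Cline sends is longer and formatted differently.

```python
import json

# Hypothetical tool metadata, shaped roughly like what a server could report.
SERVERS = {
    "time": [
        {
            "name": "get_current_time",
            "description": "Get the current time in a given timezone",
            "input_schema": {"timezone": "string"},
        },
        {
            "name": "convert_time",
            "description": "Convert a time between two timezones",
            "input_schema": {
                "source_timezone": "string",
                "time": "string",
                "target_timezone": "string",
            },
        },
    ]
}


def render_tools_prompt(servers: dict) -> str:
    """Flatten every server and tool into plain text for the system prompt."""
    lines = ["You can use the following MCP servers and tools:"]
    for server_name, tools in servers.items():
        lines.append(f"## Server: {server_name}")
        for tool in tools:
            lines.append(f"- {tool['name']}: {tool['description']}")
            lines.append(f"  Input: {json.dumps(tool['input_schema'])}")
    return "\n".join(lines)


prompt = render_tools_prompt(SERVERS)
print(prompt)
```

Because the result is ordinary text, any model that can read a prompt can see which tools exist, whether or not it has native tool support.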

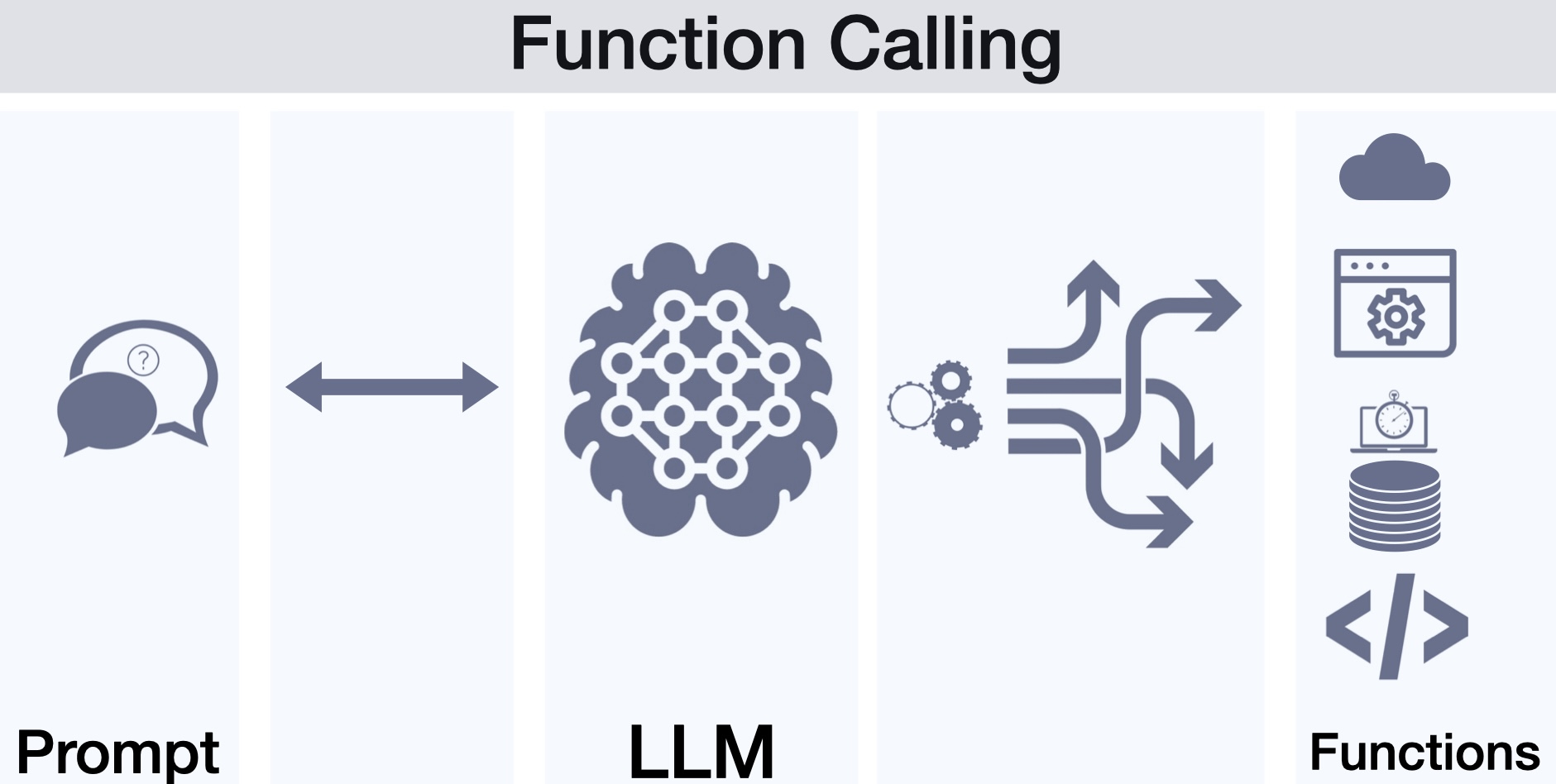

### The Difference Between MCP and Function Call

Before MCP, LLMs relied on Function Calling to decide how to call tools, which requires additional support from the model. Although the major LLM providers (OpenAI, Google, and Anthropic) all support Function Calling, writing a separate Function Calling app for each tool is still a headache.

This is why MCP is popular. It turns what used to be a one-off Function Calling app into a shared mechanism: each vendor can write its own MCP Server, and the same service becomes available to more people.

And, very importantly:

- **MCP allows Models that cannot use Function Calling to use MCP Servers to create applications similar to Function Calling**

The model used in the [技术爬爬虾 TechShrimp](https://www.youtube.com/@Tech_Shrimp) video [How does MCP connect to large models? Grabbing AI prompts, disassembling the underlying principles of MCP](https://www.youtube.com/watch?v=wiLQgCDzp44) is DeepSeekChat, which does not support Function Calling, yet it can still interact with MCP Servers.
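The reason this works without native Function Calling is that the Host only needs the model to write its tool request as plain text, which the Host then parses. Below is a minimal sketch of that parsing step. The XML-like tag layout is modeled on the style Hosts like Cline describe in their prompts, but the exact tag names and the `parse_tool_call` helper should be read as assumptions, not as Cline's actual implementation.

```python
import json
import re

# Assumed text format for a tool request embedded in the model's reply.
TOOL_CALL_RE = re.compile(
    r"<use_mcp_tool>\s*"
    r"<server_name>(?P<server>.*?)</server_name>\s*"
    r"<tool_name>(?P<tool>.*?)</tool_name>\s*"
    r"<arguments>(?P<args>.*?)</arguments>\s*"
    r"</use_mcp_tool>",
    re.DOTALL,
)


def parse_tool_call(model_output: str):
    """Return the requested tool call as a dict, or None if there is none."""
    match = TOOL_CALL_RE.search(model_output)
    if match is None:
        return None
    return {
        "server": match.group("server").strip(),
        "tool": match.group("tool").strip(),
        "arguments": json.loads(match.group("args")),
    }


reply = """I will look up the time.
<use_mcp_tool>
<server_name>time</server_name>
<tool_name>get_current_time</tool_name>
<arguments>{"timezone": "Asia/Taipei"}</arguments>
</use_mcp_tool>"""

call = parse_tool_call(reply)
print(call)
```

With native Function Calling, the provider's API returns a structured tool call for you; here the structure is recovered from text, so the model only has to follow the format described in the prompt.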

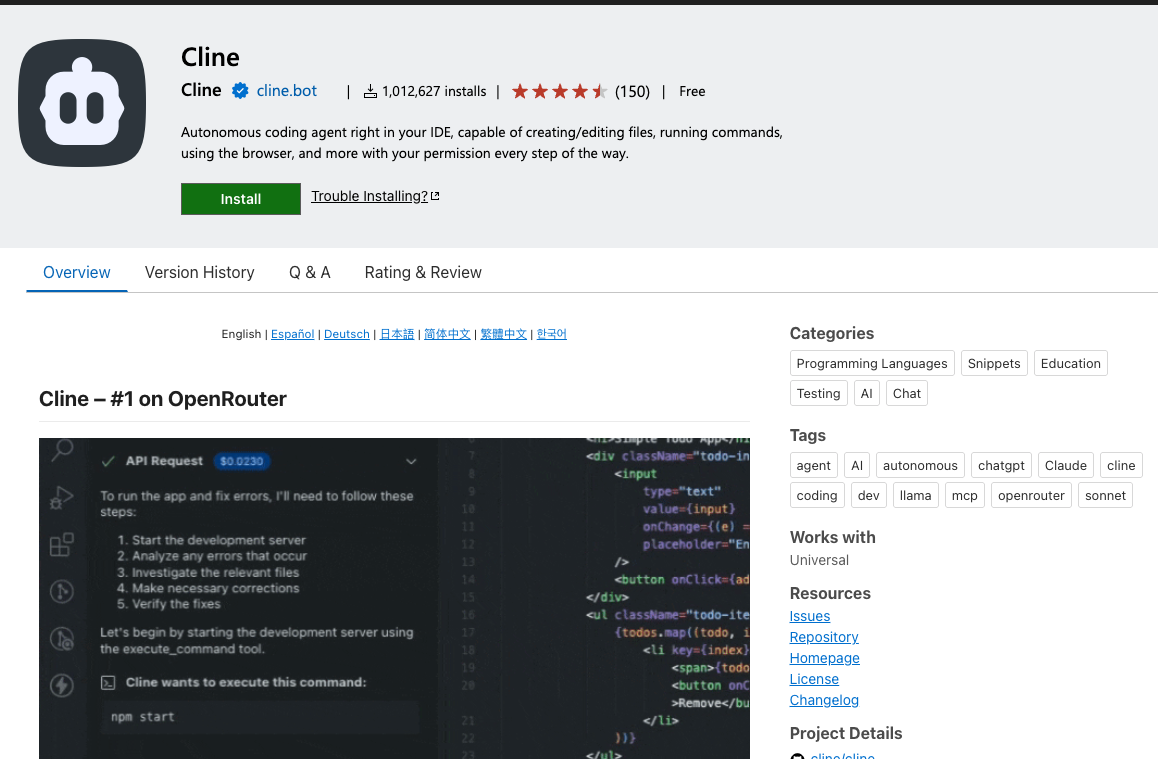

## Using Cline as an MCP host

(Reference: [Cline Plugin](https://marketplace.visualstudio.com/items?itemName=saoudrizwan.claude-dev))

Cline is a VS Code plugin, one of many similar AI IDE plugins available today. Because Cline has built-in support for communicating with MCP Servers and ships with a built-in list of recommended servers, developers can connect to MCP Servers quickly. I highly recommend Cline as your first tool for understanding MCP.

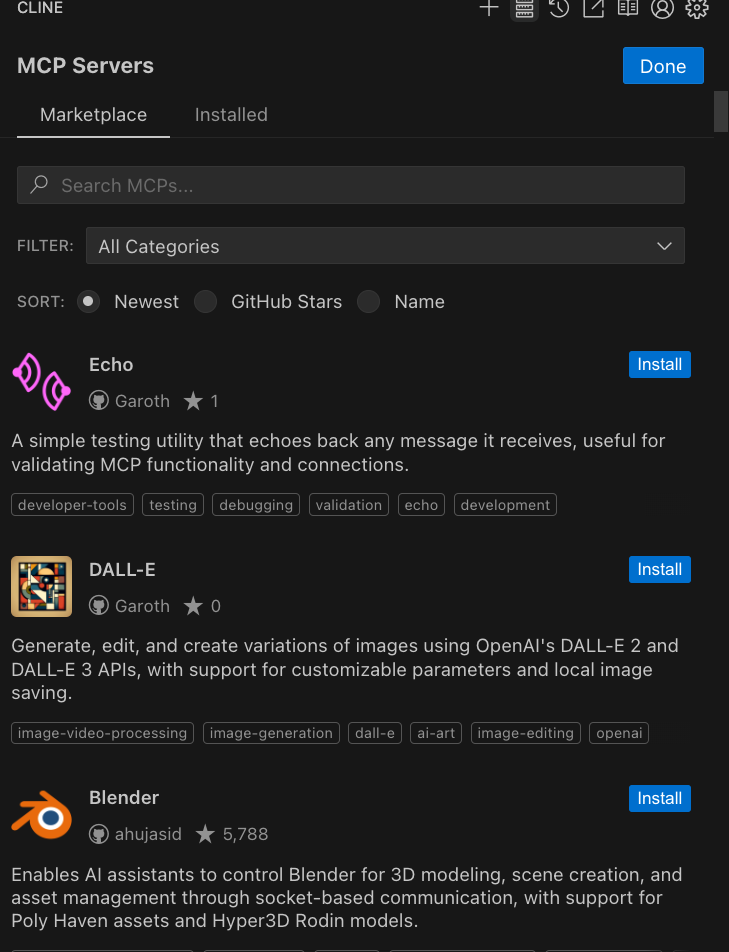

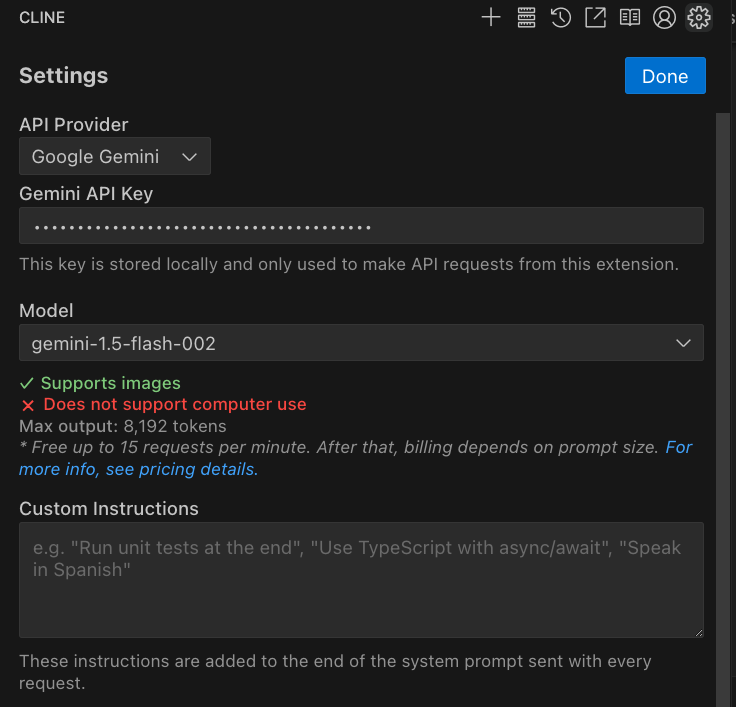

### Setting up Cline to use MCP Servers

- Install the [Cline Plugin](https://marketplace.visualstudio.com/items?itemName=saoudrizwan.claude-dev)

- Select the MCP Servers you need

- Set up your model. This article uses Google Gemini with the more economical `Gemini-1.5-flash`.

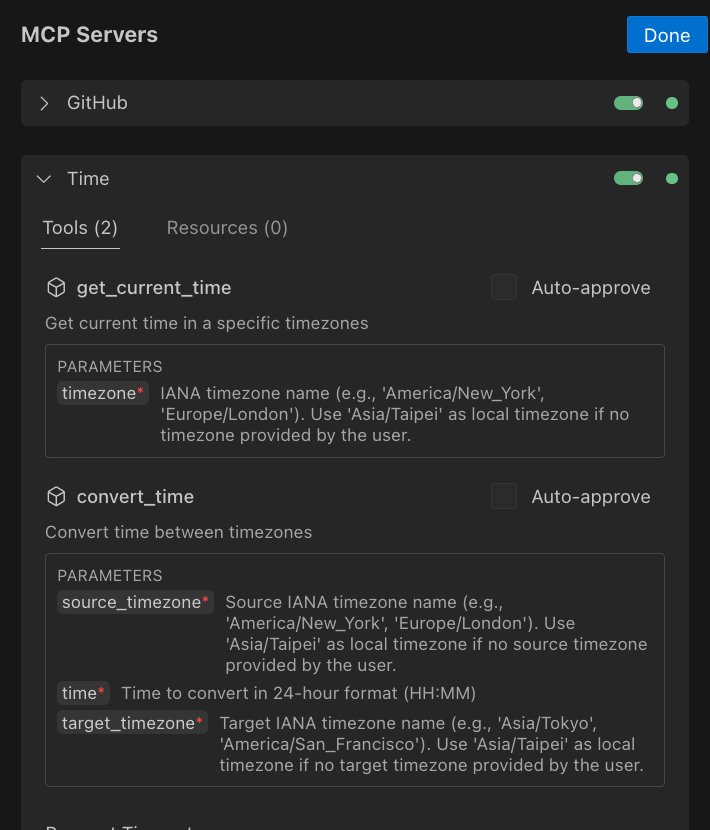

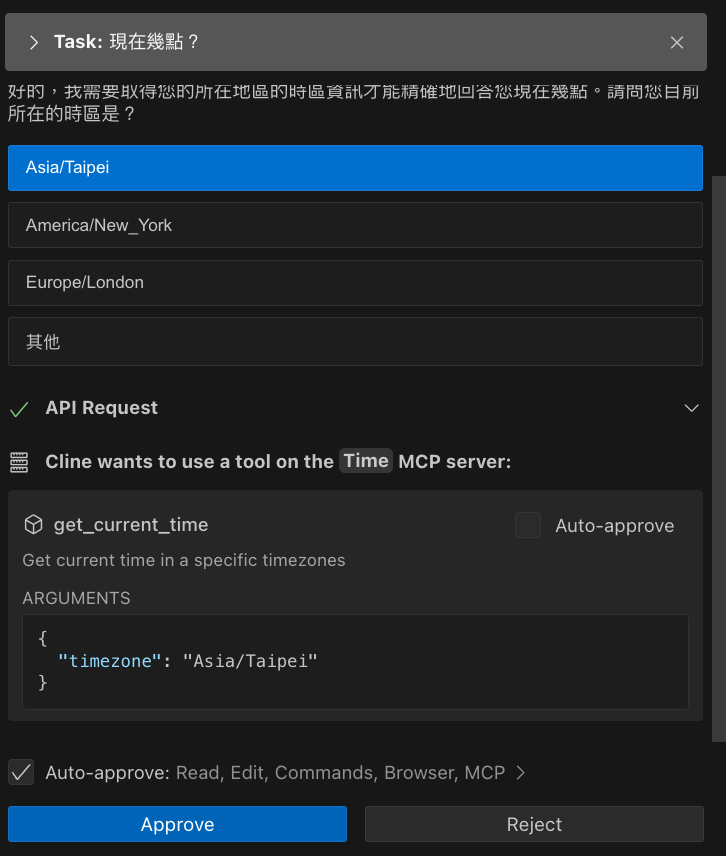

I recommend first installing the safest and simplest one, the "[Time MCP Server](https://github.com/modelcontextprotocol/servers/tree/main/src/time)"; you can also see what it offers from the Cline interface.
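If you prefer to see what the installation amounts to, the sketch below builds a server entry of the kind a Host stores in its MCP settings file. The `mcpServers` layout and the `uvx mcp-server-time` command follow the pattern shown in the Time server's README as I understand it; the `time_server_config` helper is hypothetical, and the exact file name and location used by Cline are not shown here, so check them in your own installation.

```python
import json


def time_server_config(local_timezone=None):
    """Build an MCP settings entry for the Time server (assumed layout)."""
    args = ["mcp-server-time"]
    if local_timezone:
        # Optional flag so the server does not have to guess your timezone.
        args.append(f"--local-timezone={local_timezone}")
    return {"mcpServers": {"time": {"command": "uvx", "args": args}}}


config = time_server_config("Asia/Taipei")
print(json.dumps(config, indent=2))
```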

The Cline interface shows that the "[Time MCP Server](https://github.com/modelcontextprotocol/servers/tree/main/src/time)" supports two functions:

- `get_current_time`

- `convert_time`
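Under the hood, a Host calls one of these functions by sending a JSON-RPC 2.0 message with the method `tools/call` to the server. The sketch below only builds such a message; it does not open a connection. The `tools_call_request` helper is hypothetical, and the `timezone` argument name is my assumption about the Time server's input schema.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests carry an id for matching replies


def tools_call_request(tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request that asks a server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


request = tools_call_request("get_current_time", {"timezone": "Asia/Taipei"})
print(json.dumps(request, indent=2))
```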

Now, if you ask `What time is it?`:

- It may first ask you for your region.

- It then decides to run the MCP Server (and asks for your consent).

- After you agree, it tells you the current time.

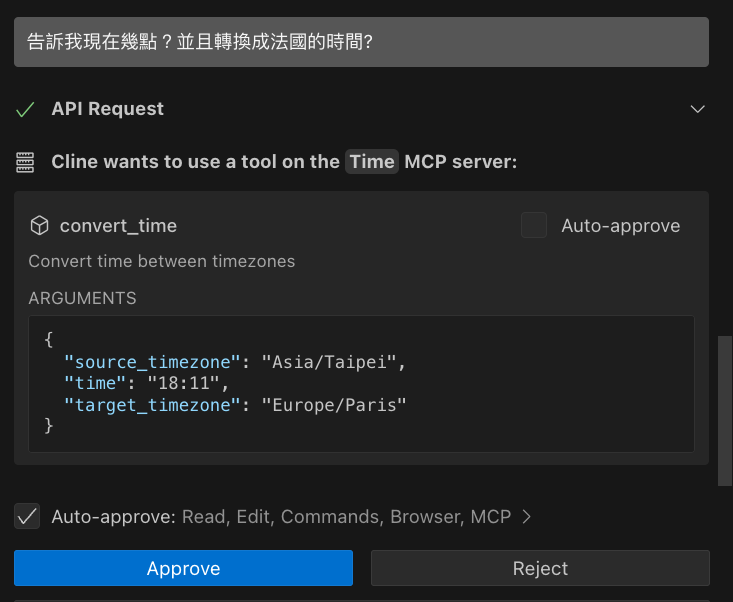

If you change the question to `Tell me what time it is now? And convert it to French time?`

it then calls the conversion function, which again requires your consent. Once the call completes, it can finish the task.

## Future Development:

This article gave a brief introduction and used Cline with the Gemini 1.5-flash model to call an MCP Server for a time query. The next article will show how to write an MCP Server and share some useful applications.

## References:

- [MCP Core architecture](https://modelcontextprotocol.io/docs/concepts/architecture)

- [技术爬爬虾 TechShrimp](https://www.youtube.com/@Tech_Shrimp) :[What is MCP? What are the technical principles? Understand everything about MCP in one video. Windows system configuration MCP, Cursor, Cline uses MCP](https://www.youtube.com/watch?v=McNRkd5CxFY&t=17s)

- [高見龍: What is MCP? Can it be eaten?](https://www.youtube.com/watch?v=cdBRAVYZKFo)
