Lately I've been wondering what's keeping AI from reaching human-level intelligence, so I finally scoured the web for anybody else's take on the subject. I found fewer recent results relevant to current advancements in AI than I expected. I guess that makes sense: there's little foreseeable business in an AI that mimics a human brain, and there's also an underlying fear of machines gaining sentience and ending humanity as we know it. That aside, I did find an article with some interesting insights on the topic.

https://interestingengineering.com/a-new-type-of-ai-has-been-created-inspired-by-the-human-brain

This article talks about a new model for processing AI sensory input that views all the separate parts of an image together, rather than breaking the image down into symbolic components and interpreting them separately. It's an interesting concept, but I honestly didn't realize until now that this couldn't already be done, or at least not cost-efficiently. Plus, this model is still much more business-oriented than aimed at representing the human mind.

But generally, a lot still separates a human brain and an artificial intelligence, on both a macro level and a low level. I'm genuinely surprised that there aren't more attempts at even simpler brain analogies, like a Freudian or Jungian style of intelligence. I'm sure somebody has attempted to simulate this as a project somewhere, but the sources that Google and other search engines deem relevant are sparse on information, repeat old news, or aren't really about drawing a connection between human and machine.

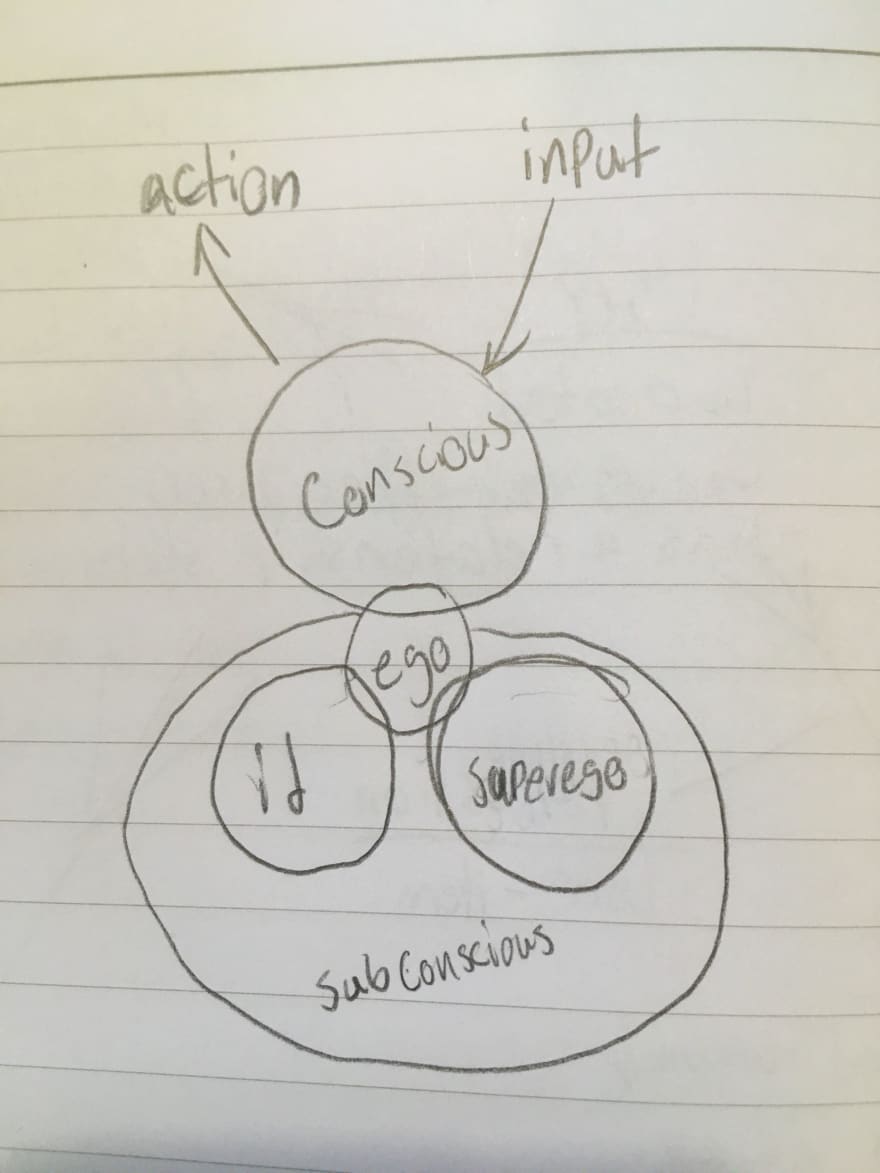

For example, a Freudian AI could have a conscious, interactive side that receives input, forms responses, and handles basic thinking and processing, while a subconscious side stores reinforced habits, built from patterns it has experienced, that influence the thinking and reacting stages of the mental process. The subconscious would then hold three primary components centered around self-preservation, representing the ego, id, and superego: the id and superego each feed their own motives into the decision-making process, and the ego assigns priorities and weighs the options to best fulfill those motives. What could then make this AI more human is the ability to rush a decision without fully thinking it through, trading a confident response for a more timely answer to a difficult choice, which is something humans do regularly. However, the computer's speed would still make it an inhumanly fast decision maker, as long as it can quantify opposing motivations and choose the one that aligns with its priorities.
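To make the ego's weighing step a bit more concrete, here is a minimal sketch in Python. Everything in it is an assumption of mine for illustration: the `Motive` class, the `ego_decide` function, the numeric urgencies and weights, and the idea of modeling a "rushed" decision as only looking at the first few motives that come to mind. It is one possible reading of the idea, not an established model.

```python
from dataclasses import dataclass


@dataclass
class Motive:
    source: str     # which part proposed it: "id" or "superego" (hypothetical labels)
    action: str     # what the motive wants the agent to do
    urgency: float  # made-up strength of the drive, from 0.0 to 1.0


def ego_decide(motives, weights, time_budget):
    """Weigh motives and pick an action.

    time_budget is how many motives the ego has time to consider.
    A small budget models a rushed decision: faster, but less confident.
    Returns (chosen action, confidence between 0.0 and 1.0).
    """
    if not motives:
        raise ValueError("the ego needs at least one motive to choose from")
    # A rushed ego only looks at the first few motives, in the order they arrived.
    considered = motives[:max(1, time_budget)]
    # Score each motive by its urgency, scaled by how much this personality
    # favors the part that proposed it.
    best = max(considered, key=lambda m: weights[m.source] * m.urgency)
    # Confidence is simply the fraction of the options that were examined.
    confidence = len(considered) / len(motives)
    return best.action, confidence


# Illustrative personality: the id is favored over the superego.
weights = {"id": 0.6, "superego": 0.4}
motives = [
    Motive("superego", "stay with the group", 0.6),
    Motive("id", "go find food", 0.9),
]

careful = ego_decide(motives, weights, time_budget=2)
rushed = ego_decide(motives, weights, time_budget=1)
print(careful)  # all motives considered
print(rushed)   # only the first motive considered
```

With these numbers the careful decision picks the id's motive with full confidence, while the rushed one settles for the first option it saw at half the confidence. Changing the `weights` dictionary is a crude stand-in for personality.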

A big question about this abstract model would be something along the lines of "How are these various motivations, stemming from different needs, determined and given value?" I think a feasible move would be to start with a base goal for each of the id and superego, for example "constantly gather resources for immediate consumption" and "be part of a large group", and expand from there with more detail. With these basic directions, the AI's goals would be to gather the means of survival, like food and water, and to stick with a large group for protection. The id is generally prioritized over the superego, but that would vary from person to person as part of their personality.

This person would seek food and water, but stick with the crowd if none is available. If someone intrudes on resources that another person claims ownership of and is saving for the future, the owner should have an anger reaction and fight the thief. From here, the thievery and the consequent fighting threaten the group's stability. The thief could learn from the fight and refrain from stealing in the future, or other group members could exile the thief, either to prevent further fighting or because they worry that they'll be robbed next. Maybe they'll even exile the victim for inciting violence. Converting actions like thievery and violence into data that can be weighed comes down to drawing an association between the theft and a threat to survival. That depends on a person dynamically interpreting someone else taking their food as an action that must be resisted in order to survive. They also need to prioritize resisting above staying loyal to the group, because the thief is technically a part of that group. This could be a simple priority queue that is aware of changing circumstances.
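As a rough sketch of what a priority queue that is "aware of changing circumstances" might look like, the Python below re-scores the goals every time the situation changes and then pops the most pressing one. The goal names, the `context` keys (`food_available`, `food_stolen`), and all of the scores are hypothetical values I picked to match the scenario above, not anything derived from psychology.

```python
import heapq


def rank_goals(context):
    """Build a priority queue of goals for the current situation.

    context is a dict of hypothetical flags describing the world.
    Scores are illustrative: a higher score means a more pressing goal.
    """
    scores = {
        "gather resources": 0.6,  # id-style base goal
        "stay with group": 0.4,   # superego-style base goal
    }
    if not context.get("food_available", True):
        # Nothing to gather right now, so the group becomes the better option.
        scores["gather resources"] = 0.2
    if context.get("food_stolen", False):
        # Theft is read as a threat to survival and outranks group loyalty.
        scores["defend resources"] = 0.9
    # heapq is a min-heap, so negate the scores to pop the highest first.
    heap = [(-score, goal) for goal, score in scores.items()]
    heapq.heapify(heap)
    return heap


def next_goal(context):
    """Return the single most pressing goal for the given situation."""
    _, goal = heapq.heappop(rank_goals(context))
    return goal


print(next_goal({}))                         # normal day
print(next_goal({"food_available": False}))  # no food around
print(next_goal({"food_stolen": True}))      # someone took the stash
```

Rebuilding the queue on every change is the simplest way to keep it in sync with the world. A longer-lived agent would probably update individual priorities in place instead, but that is beyond this sketch.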

There may also need to be a basic language that simplifies interpreting objects the AI has no previous knowledge of, based on different stimuli, such as bright coloring on a creature signifying danger.

This system would still be a very simplistic model of a simplified psychological concept, and not very accurate considering it's based on a largely discredited view of psychology. The other end of the spectrum would be to model a network of neurons and simulate the actions of the neurons interacting and forming thoughts out of pathways and neurotransmitters. This concept should eventually turn out a much more recognizable brain intelligence, but the cost of simulating 100 billion neurons and all their individually moving parts is pretty far out of the realm of possibility and practicality for the vast majority of computers.
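For a sense of scale, here is a back-of-the-envelope estimate in Python. Only the 100 billion neuron count comes from the paragraph above. The 1,000 connections per neuron and the 4 bytes per connection are assumptions I chose to keep the numbers round, and the estimate covers nothing but storing one number per connection, ignoring neurotransmitters, timing, and every other moving part.

```python
NEURONS = 100_000_000_000     # the 100 billion figure used above
SYNAPSES_PER_NEURON = 1_000   # assumption: a deliberately low, round number
BYTES_PER_SYNAPSE = 4         # assumption: one 32-bit value per connection


def synapse_memory_bytes(neurons, synapses_per_neuron, bytes_per_synapse):
    """Memory needed to hold one stored value for every connection."""
    return neurons * synapses_per_neuron * bytes_per_synapse


total = synapse_memory_bytes(NEURONS, SYNAPSES_PER_NEURON, BYTES_PER_SYNAPSE)
print(f"{total / 1e12:.0f} TB just to store one number per connection")
```

Even under these generous assumptions the result is 400 TB of memory before a single neuron has fired, which is why this end of the spectrum is out of reach for ordinary hardware.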

The short of it is that, given the lack of material, general interest in "human AI" seems surprisingly low. That's strange to me, given how many people wonder how the brain works themselves, and I would love to see a more professional, or at least guided, approach to the topic with more to show for it.
