The Jetstreams

Cloud operators now provide two completely different classes of service to customers:

- Self-Service, VMs, Operating System Templates

  - Generally mature; some private cloud operators are still smoothing out their CMPs (cloud management platforms) and the like, but it works as intended from a customer perspective

  - Bringup is automated

  - Operating System level configuration is usually automated

  - Application-level configuration is not always automated or managed as code

  - The Cloud Provider typically holds responsibility for a widget working

- Containers, Service Definitions, no GUI

  - Kubernetes fits squarely here, but other services exist

  - Not the most customer-friendly, and still nascent

  - The Application Owner has to hold responsibility for a widget working

Infrastructure Engineers as Agents of Change

The industry cannot transition responsibility for "stuff working" to creative types (Web Developers, App Developers). Have you ever heard "it's the network"? How about "This must be a "?

This is a call for help. Once the current trends with automation and reliability engineering slow down (the first class of service above), the second kind of automation is going to necessitate leveraging infrastructure expertise elsewhere. Services like Kubernetes require a "distribution" of sorts, yet there's nobody to blame when something fails to deploy.

Why This Matters

NSX-T's 3.2 release has provided a ton of goodies, with an emphasis on centralized management and provisioning. We're starting to see tools that will potentially support multiple inbound declarative interfaces to achieve similar types of work, and NSX Data Center Manager has all the right moving parts to provide that.

NSX ALB's Controller interface provides comprehensive self-service and troubleshooting information, and a "Lite" service portal.

NSX Data Center + ALB presents a really unique value set, with one provisioning point for all services, in addition to the previously provided Layer 3 fabric integration. It's good to see this type of write-many implementation.

Let's try it out!

First, let's cover some prerequisites (detailed list here: https://docs.vmware.com/en/VMware-NSX-T-Data-Center/3.2/installation/GUID-3D1F107D-15C0-423B-8E79-68498B757779.html):

- Greenfield Deployment only. This doesn't allow you to pick up existing NSX ALB installations

- NSX ALB version 20.1.7+ or 21.1.2+ OVA

- NSX Data Center 3.2.0+

- NSX ALB and NSX Data Center controllers must exist on the same subnet

- NSX Data Center and NSX ALB clusters should have a vIP configured

- Third-party CAs are not supported either
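The version and subnet requirements above are easy to check before starting the wizard. The following is a minimal sketch, assuming you already know the version strings and controller addresses; the function names and the management CIDR parameter are hypothetical, and treating release trains newer than 21.1 as acceptable is my reading of "21.1.2+", not something the documentation excerpt confirms.

```python
import ipaddress


def parse_version(text):
    """Turn '21.1.2' into (21, 1, 2), padding short versions with zeros."""
    parts = [int(part) for part in text.split(".")]
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts)


def alb_version_ok(version):
    """NSX ALB must be 20.1.7+ on the 20.1 train, or 21.1.2+ otherwise."""
    v = parse_version(version)
    if v[:2] == (20, 1):
        return v >= (20, 1, 7)
    # Assumption: anything at or above 21.1.2 qualifies.
    return v >= (21, 1, 2)


def nsx_version_ok(version):
    """NSX Data Center must be 3.2.0 or newer."""
    return parse_version(version) >= (3, 2, 0)


def same_subnet(addresses, cidr):
    """True when every controller address falls inside the given subnet."""
    network = ipaddress.ip_network(cidr, strict=False)
    return all(ipaddress.ip_address(addr) in network for addr in addresses)


def check_prerequisites(alb_version, nsx_version, controller_ips, mgmt_cidr):
    """Return a per-requirement pass/fail map for the checks above."""
    return {
        "alb_version": alb_version_ok(alb_version),
        "nsx_version": nsx_version_ok(nsx_version),
        "same_subnet": same_subnet(controller_ips, mgmt_cidr),
    }
```

Calling `check_prerequisites("21.1.2", "3.2.0", ["10.0.0.10", "10.0.0.20"], "10.0.0.0/24")` returns a dictionary with one boolean per requirement, which makes it obvious which prerequisite is the blocker. The greenfield, cluster vIP, and CA requirements still need a manual check.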

Once these are met, the usual prerequisites also matter.

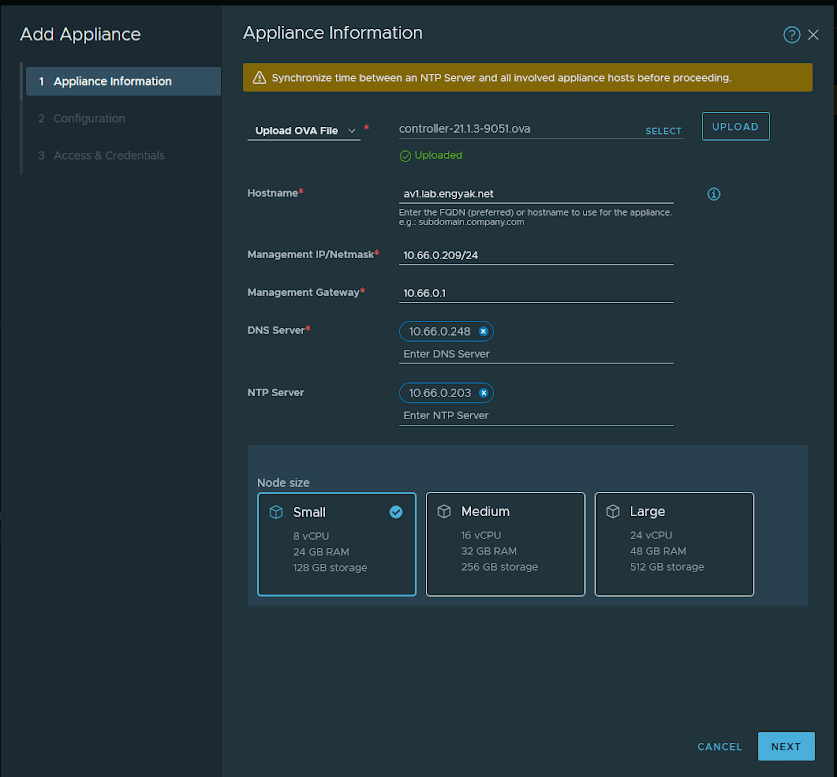

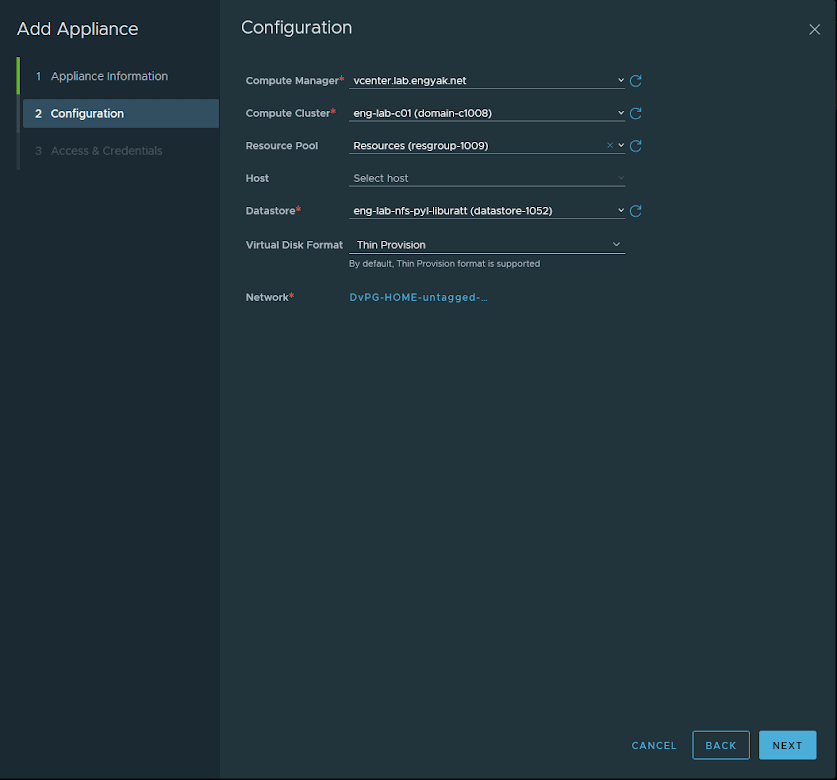

Deployment is extremely straightforward, and managed under System -> Appliances. This wizard will require you to upload the OVA, so get that rolling before filling out any forms:

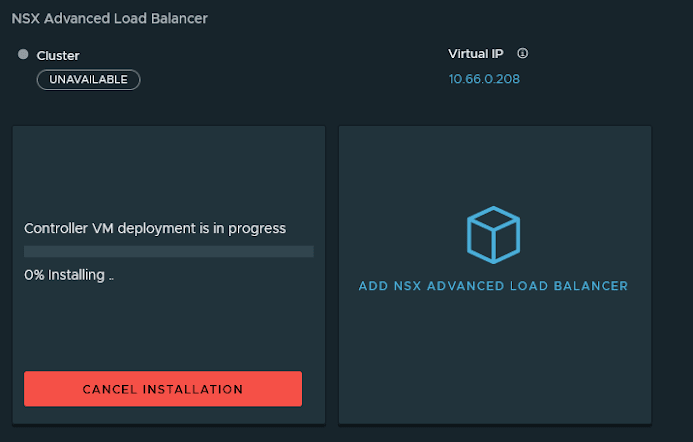

The NSX Manager will take care of the VM deployment for you. Interestingly enough, this could eventually let us get rid of tools like pyVmomi and deploy everything with Ansible/Terraform.

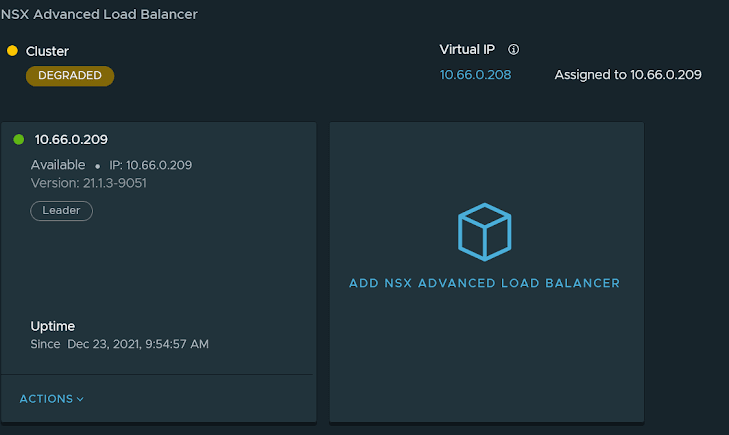

Once it's done deploying the first appliance, it'll report a "Degraded" state until 3 controllers are deployed.

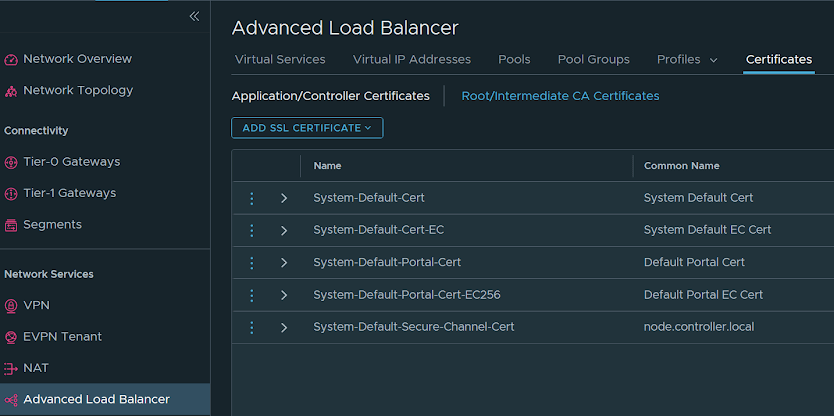

Once installed, the NSX ALB objects should appear under Networking -> Advanced Load Balancer.

At this point, the integration works in one direction (NSX Data Center -> NSX ALB), but not the other (NSX ALB -> NSX Data Center). The next step is to configure an NSX-T cloud. I've covered the procedure for configuring an NSX-T cloud here: https://blog.engyak.co/2021/09/vmware-nsx-alb-avi-networks-and-nsx-t.html

Note: Using a non-default CA certificate for NSX ALB here will break the integration. Reverting to the default certificate restores it, and there doesn't appear to be an obvious way to use a different certificate yet. A Principal Identity is formed for the connection between systems, indicating that the feature is just not fully exposed to users yet.

Viewing Services

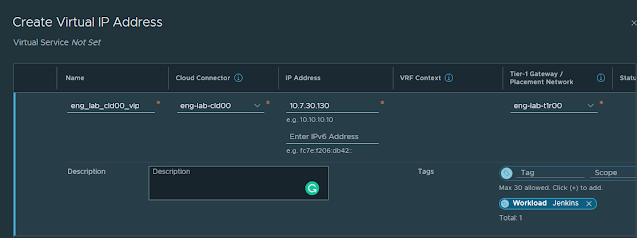

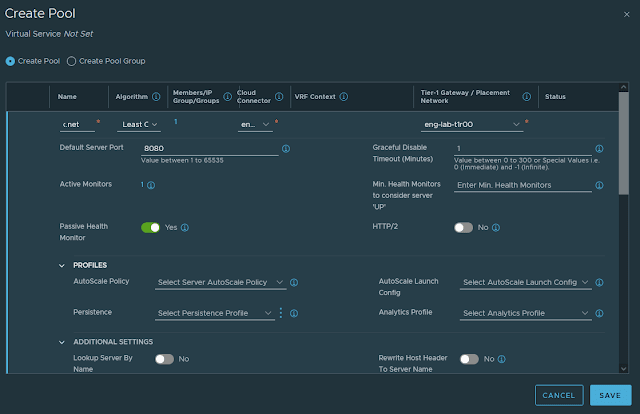

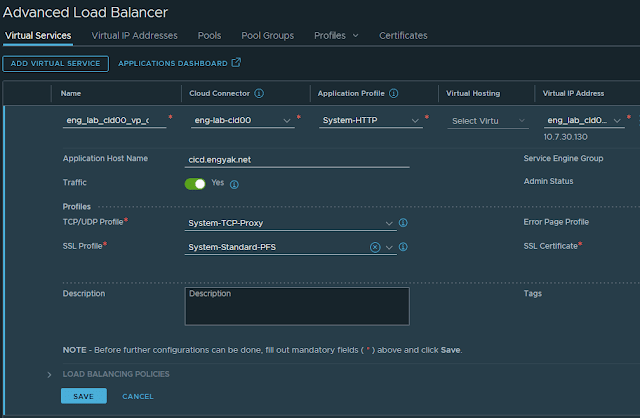

A cursory review of the new ALB section indicates that existing vIPs created via the ALB GUI don't appear there, but the inverse is true: objects created from NSX Manager do show up in ALB. Let's try and build one for Jenkins! The constructs are essentially the same as with the ALB UI, but the process is considerably simpler:

First, create a vIP

Finally, we will create the virtual service. Note: nullPointerException seems to mean that the SE Group is incorrect, and may need to be manually resolved on the ALB controller.
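The same two objects can, in principle, be expressed declaratively. Below is a rough sketch that only builds the request paths and bodies for a vIP and a virtual service, without sending anything. The `/policy/api/v1/infra/alb-*` paths and the field names (`vip`, `services`, `vsvip_path`, `pool_path`) are assumptions modeled on how NSX ALB names these objects, and the IDs, the pool, and the address are hypothetical, so verify everything against the NSX Policy API reference for your release before using it.

```python
# Assumed NSX Policy API prefix; confirm against the API reference.
POLICY_BASE = "/policy/api/v1/infra"


def build_vsvip(vip_id, address):
    """Return (path, body) for a vIP object holding one IPv4 address."""
    path = f"{POLICY_BASE}/alb-vs-vips/{vip_id}"
    body = {
        "display_name": vip_id,
        # Field layout is an assumption mirroring the ALB VsVip object.
        "vip": [{"vip_id": "1", "ip_address": {"addr": address, "type": "V4"}}],
    }
    return path, body


def build_virtual_service(vs_id, vip_id, pool_id, port=443, ssl=True):
    """Return (path, body) for a virtual service bound to a vIP and a pool."""
    path = f"{POLICY_BASE}/alb-virtual-services/{vs_id}"
    body = {
        "display_name": vs_id,
        "services": [{"port": port, "enable_ssl": ssl}],
        # Policy objects reference each other by path (assumed field names).
        "vsvip_path": f"/infra/alb-vs-vips/{vip_id}",
        "pool_path": f"/infra/alb-pools/{pool_id}",
    }
    return path, body
```

For the Jenkins example, `build_vsvip("jenkins-vip", "192.0.2.10")` followed by `build_virtual_service("jenkins-vs", "jenkins-vip", "jenkins-pool")` yields two path/body pairs that could be sent in that order with any HTTP client. Keeping the builders free of network calls makes them easy to review and to keep in version control.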

Unlike most VMware products, NSX Data Center seems to handle multi-write (changes from BOTH the ALB and the Manager) fairly well.

That's it!

Footnote: Custom TLS profiles can only be used via the API. I am building a method to manage that here: https://github.com/ngschmidt/python-restify/tree/main/nsx-t/profiles/tls
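To give a feel for what an API-managed TLS profile might look like, here is a small sketch that builds a profile body. It is not the method from the linked repository; the `alb-ssl-profiles` path and the `accepted_versions` / `accepted_ciphers` field names are assumptions based on the NSX ALB SSL profile object, and the profile name and cipher string are only illustrative.

```python
# Assumed NSX Policy API prefix; confirm against the API reference.
POLICY_BASE = "/policy/api/v1/infra"

# Illustrative OpenSSL-style cipher list, not a recommendation.
DEFAULT_CIPHERS = "ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384"


def build_tls_profile(profile_id, versions=("SSL_VERSION_TLS1_2",),
                      ciphers=DEFAULT_CIPHERS):
    """Return (path, body) for a TLS profile limited to the given versions."""
    if not versions:
        raise ValueError("at least one TLS version is required")
    path = f"{POLICY_BASE}/alb-ssl-profiles/{profile_id}"
    body = {
        "display_name": profile_id,
        # Each accepted version is wrapped in an object (assumed layout).
        "accepted_versions": [{"type": version} for version in versions],
        "accepted_ciphers": ciphers,
    }
    return path, body
```

`build_tls_profile("tls12-only")` returns a path and body that could be kept alongside other profiles as code and pushed with any HTTP client once the field names are confirmed.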
