Grok 3, developed by xAI, is a cutting-edge AI model designed to answer, research, and solve a wide variety of queries with exceptional accuracy. It offers real-time knowledge retrieval, ensuring that the responses it provides are up-to-date and relevant, free from the constraints of a fixed knowledge cutoff. Grok 3 can analyze user profiles, posts, linked content, and uploaded files (such as images or PDFs) while also performing web and X searches for additional context.

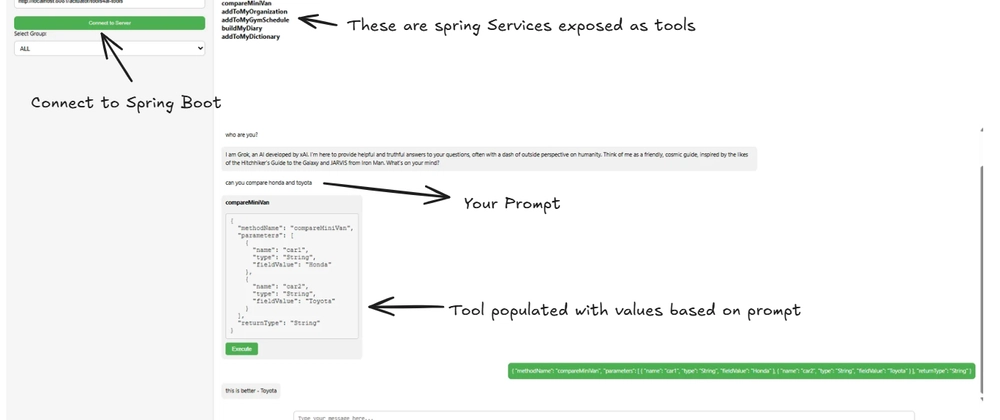

With the power of Grok’s dynamic capabilities, you can transform everyday Spring services, HTTP endpoints, shell scripts, and Selenium scripts into AI-triggerable tools — without needing to modify any of their code. By leveraging annotations and reflection, these components can be integrated into Grok’s ecosystem on the fly, unlocking powerful, real-time interactions between AI and your system.

👉Server : Code is here

👉Client : Code Neurocaster-Client

The Three Operational Modes of Grok 3

Grok 3 operates in three distinct modes, each designed for different use cases:

🧠 Think Mode: For standard reasoning and conversational queries.

🚀 Big Brain Mode: Enhanced analytical capabilities for tackling complex problems.

🔎 DeepSearch Mode: Advanced contextual search for richer and more detailed insights.

In addition to these modes, Grok 3 offers the ability to generate and edit images, making it an extremely versatile AI that can adapt to a wide range of tasks.

Tools Integration with Grok: How It Works

While Grok 3 is primarily text-based, its functionality can be greatly extended by integrating real-time tools. These tools could be anything from Spring services and HTTP endpoints to shell and Selenium scripts. By using annotations and reflection, we can dynamically convert these components into tools that Grok can call in response to user queries.

Annotations and Reflection: The Magic Behind the Logic

The integration of external tools into Grok is not magical; it’s based on sound logic. The secret lies in leveraging annotations and reflection. Here’s how it works:

Annotations: We define annotations in the Java code that indicate which methods or services should be exposed as Grok tools. These annotations act as metadata, signaling that a particular service or script is callable by Grok.

@Agent Annotation: This is a class-level annotation that marks the class as an AI agent. Methods in this class annotated with @Action are added to the prediction list of actions; methods that are not annotated with @Action are not added. (@Agent is the new name for @Predict since version 1.0.5.)

@Action Annotation: This is a method-level annotation. It marks the method that is actually triggered when the AI matches a prompt to it, and its attributes (such as the description) specify the action's behaviour. A class can have as many @Action methods as you want.

Reflection: Using Java's reflection capabilities, we can dynamically discover and invoke these annotated services, HTTP endpoints, shell scripts, or even Selenium scripts without any manual updates or reconfiguration. The methods and REST endpoints are converted to a JSON-RPC-like syntax, which is passed to Grok; Grok then decides which tool to call.
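To make the annotation-plus-reflection idea concrete, here is a minimal sketch of how a class-level and a method-level annotation can be discovered at runtime. The `DemoAgent` and `DemoAction` annotations, `WeatherService`, and `ActionScanner` are hypothetical stand-ins written for this illustration; they are not the library's own types.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a class-level "this is an agent" annotation.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.TYPE)
@interface DemoAgent {}

// Hypothetical stand-in for a method-level "this is an action" annotation.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface DemoAction {
    String description() default "";
}

@DemoAgent
class WeatherService {
    @DemoAction(description = "Get the weather for a city")
    public String getWeather(String city) {
        return "Sunny in " + city;
    }

    // Not annotated, so it is never offered as a tool.
    public String internalHelper() {
        return "hidden";
    }
}

final class ActionScanner {
    // Returns "methodName: description" for every annotated method of an agent class.
    static List<String> scan(Class<?> type) {
        List<String> actions = new ArrayList<>();
        if (!type.isAnnotationPresent(DemoAgent.class)) {
            return actions; // not an agent, nothing to expose
        }
        for (Method method : type.getDeclaredMethods()) {
            DemoAction action = method.getAnnotation(DemoAction.class);
            if (action != null) {
                actions.add(method.getName() + ": " + action.description());
            }
        }
        return actions;
    }

    public static void main(String[] args) {
        // prints [getWeather: Get the weather for a city]
        System.out.println(scan(WeatherService.class));
    }
}
```

Note that both annotations need `RetentionPolicy.RUNTIME`; without it they are discarded before reflection can see them.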

Understanding How MIP Works

The Model Integration Protocol (MIP) provides an efficient way to integrate Java services with AI systems by converting Java methods into a format that can be easily accessed and executed by AI models. Here’s a detailed breakdown of the MIP process:

1. Annotating Java Classes and Methods

Java classes and methods are annotated using MIP-specific annotations like @Action, @ListType, @MapKeyType, and others. These annotations define how the methods and services will interact with AI systems, specifying metadata such as descriptions and the expected inputs and outputs.

For example, the @Action annotation might include a description of the method’s function, while other annotations clarify the data types and structures of input/output parameters.

2. Reflection for Dynamic Extraction

MIP leverages reflection to examine the Java class and its annotations, automatically extracting essential details such as the fields, types, and methods. This allows MIP to dynamically understand how each class or method should be treated when interacting with the AI.
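As a rough illustration of this extraction step, the sketch below reads a method's parameter and return types through `java.lang.reflect`. `MiniVanDemo` and `SignatureReader` are hypothetical names used only here, and this is not a description of how MIP is implemented internally.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Parameter;
import java.util.ArrayList;
import java.util.List;

// Hypothetical service used only for this illustration.
class MiniVanDemo {
    public String compareMiniVan(String car1, String car2) {
        return "This is better - " + car2;
    }
}

final class SignatureReader {
    // Finds a declared method by name (the first match wins in this sketch).
    static Method find(Class<?> type, String methodName) {
        for (Method method : type.getDeclaredMethods()) {
            if (method.getName().equals(methodName)) {
                return method;
            }
        }
        throw new IllegalArgumentException("No method named " + methodName);
    }

    // Describes each parameter as "name:SimpleType".
    // Real parameter names are only available when the class is compiled
    // with the javac -parameters flag; otherwise they appear as arg0, arg1, ...
    static List<String> describeParameters(Method method) {
        List<String> described = new ArrayList<>();
        for (Parameter parameter : method.getParameters()) {
            described.add(parameter.getName() + ":" + parameter.getType().getSimpleName());
        }
        return described;
    }

    static String returnType(Method method) {
        return method.getReturnType().getSimpleName();
    }

    public static void main(String[] args) {
        Method method = find(MiniVanDemo.class, "compareMiniVan");
        System.out.println(describeParameters(method));
        System.out.println(returnType(method));
    }
}
```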

3. Conversion to JSON-RPC Format

Once the annotations and class details are extracted, MIP converts the Java method or class into a Modified JSON-RPC format. This format includes information about the fields, types, input, and expected output of the method or class.

By converting methods into this standard format, MIP enables AI systems to trigger these methods using JSON-RPC requests, eliminating the need to create custom API handlers for each service or operation.
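The conversion step could look roughly like the following sketch, which serializes a reflected method into a JSON descriptor. The key names mirror the example output shown in this article; the `DescriptorWriter` and `VanService` classes are hypothetical, and the hand-rolled string building (with no escaping) is a simplification, not the library's serializer.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Parameter;
import java.util.StringJoiner;

// Hypothetical service used only for this illustration.
class VanService {
    public String compareMiniVan(String car1, String car2) {
        return "This is better - " + car2;
    }
}

final class DescriptorWriter {
    // Builds a JSON descriptor for one method.
    // Assumes names and the description contain no characters that need JSON escaping.
    static String toDescriptor(Method method, String description) {
        StringJoiner params = new StringJoiner(", ", "[", "]");
        for (Parameter p : method.getParameters()) {
            // Parameter names are arg0, arg1, ... unless compiled with -parameters.
            params.add("{\"name\": \"" + p.getName() + "\", \"type\": \""
                    + p.getType().getSimpleName() + "\", \"fieldValue\": \"\"}");
        }
        return "{"
                + "\"actionType\": \"JAVAMETHOD\", "
                + "\"actionParameters\": {"
                + "\"methodName\": \"" + method.getName() + "\", "
                + "\"parameters\": " + params + ", "
                + "\"returnType\": \"" + method.getReturnType().getSimpleName() + "\"}, "
                + "\"actionClass\": \"" + method.getDeclaringClass().getName() + "\", "
                + "\"description\": \"" + description + "\", "
                + "\"actionName\": \"" + method.getName() + "\""
                + "}";
    }

    // Looks the method up by name and describes it.
    static String describe(Class<?> type, String methodName, String description) {
        for (Method method : type.getDeclaredMethods()) {
            if (method.getName().equals(methodName)) {
                return toDescriptor(method, description);
            }
        }
        throw new IllegalArgumentException("No method named " + methodName);
    }

    public static void main(String[] args) {
        System.out.println(describe(VanService.class, "compareMiniVan", "Compare two minivans"));
    }
}
```

In production code a JSON library would be a safer choice than string concatenation, since it handles escaping and nesting for you.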

4. Consuming the JSON-RPC Format with AI

AI systems or Large Language Models (LLMs) can now consume the JSON-RPC format, allowing them to invoke Java functionality and perform tasks as defined by the annotations. The integration process ensures that AI can call the methods dynamically without needing direct access to the Java code.
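On the receiving side, a request that names a method and supplies its argument values can be dispatched with reflection. The sketch below assumes the model has already returned a method name and a list of string arguments; `ActionInvoker` and `VanActions` are hypothetical names, and only `String` parameters are handled.

```java
import java.lang.reflect.Method;
import java.util.List;

// Hypothetical service used only for this illustration.
class VanActions {
    public String compareMiniVan(String car1, String car2) {
        return "This is better - " + car2;
    }
}

final class ActionInvoker {
    // Invokes the named method on the target, passing the arguments in order.
    // This sketch matches on name and argument count and supports String parameters only.
    static Object invoke(Object target, String methodName, List<String> args) {
        for (Method method : target.getClass().getDeclaredMethods()) {
            if (method.getName().equals(methodName)
                    && method.getParameterCount() == args.size()) {
                try {
                    return method.invoke(target, args.toArray());
                } catch (ReflectiveOperationException e) {
                    throw new IllegalStateException("Could not invoke " + methodName, e);
                }
            }
        }
        throw new IllegalArgumentException("Unknown action: " + methodName);
    }

    public static void main(String[] args) {
        // prints This is better - Odyssey
        System.out.println(invoke(new VanActions(), "compareMiniVan", List.of("Sienna", "Odyssey")));
    }
}
```

Because the caller only needs the method name and arguments, the model never touches the Java source; it only sees the descriptor and the result.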

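Example

Here’s an example of a Java class:

```java
// Annotation imports below assume the tools4ai package layout (com.t4a.annotations).
import com.t4a.annotations.Action;
import com.t4a.annotations.Agent;
import lombok.extern.java.Log;
import org.springframework.stereotype.Service;

@Service
@Log   // Lombok generates the static "log" field used below
@Agent // marks this class as an AI agent
public class CompareMiniVanService {

    public CompareMiniVanService() {
        log.info("Created Compare MiniVan Service");
    }

    // Exposed to the model as a callable action
    @Action(description = "Compare two minivans")
    public String compareMiniVan(String car1, String car2) {
        log.info(car2);
        log.info(car1);
        // Comparison logic goes here
        return "This is better - " + car2;
    }
}
```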

When processed by MIP, this class will be converted into the following Modified JSON-RPC format:

    {
      "actionType": "JAVAMETHOD",
      "actionParameters": {
        "methodName": "compareMiniVan",
        "parameters": [
          {
            "name": "car1",
            "type": "String",
            "fieldValue": ""
          },
          {
            "name": "car2",
            "type": "String",
            "fieldValue": ""
          }
        ],
        "returnType": "String"
      },
      "actionClass": "io.github.vishalmysore.service.CompareMiniVanService",
      "description": "Compare two minivans",
      "actionGroup": "No Group",
      "actionName": "compareMiniVan",
      "expanded": true
    }

For each prompt, a list of available tools is sent to the Large Language Model (LLM). The LLM evaluates this list and decides which tool or combination of tools to call based on their names, descriptions, and the context provided. Once the LLM selects the appropriate tool(s), it extracts the necessary parameters from the prompt, the environment it’s operating in, the context, or any relevant tools or resources. After gathering the required information, the LLM triggers the selected tool(s) to perform the intended operation.
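The selection loop described above can be sketched as follows. The `chooseTool` method is a deliberately naive stand-in for the LLM: it simply counts words shared between the prompt and each tool description, whereas a real model reasons over names, descriptions, and context. All class and method names here are hypothetical.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;
import java.util.function.Function;

final class ToolLoopDemo {
    // A tool as the model sees it: a description plus something to call.
    static final class Tool {
        final String description;
        final Function<String, String> handler;

        Tool(String description, Function<String, String> handler) {
            this.description = description;
            this.handler = handler;
        }
    }

    // Stand-in for the LLM: picks the tool whose description shares the most words with the prompt.
    static String chooseTool(String prompt, Map<String, Tool> tools) {
        String[] promptWords = prompt.toLowerCase().split("\\W+");
        String best = null;
        int bestScore = 0;
        for (Map.Entry<String, Tool> entry : tools.entrySet()) {
            Set<String> descriptionWords = new HashSet<>(
                    Arrays.asList(entry.getValue().description.toLowerCase().split("\\W+")));
            int score = 0;
            for (String word : promptWords) {
                if (descriptionWords.contains(word)) {
                    score++;
                }
            }
            if (score > bestScore) {
                bestScore = score;
                best = entry.getKey();
            }
        }
        return best; // null when no description shares a word with the prompt
    }

    // Sends the tool list to the "model", then triggers the selected tool.
    static String handle(String prompt, Map<String, Tool> tools) {
        String name = chooseTool(prompt, tools);
        if (name == null) {
            return "No matching tool";
        }
        return tools.get(name).handler.apply(prompt);
    }

    static Map<String, Tool> demoTools() {
        Map<String, Tool> tools = new LinkedHashMap<>();
        tools.put("compareMiniVan", new Tool("Compare two minivans", p -> "compareMiniVan called"));
        tools.put("getWeather", new Tool("Get the weather for a city", p -> "getWeather called"));
        return tools;
    }

    public static void main(String[] args) {
        System.out.println(handle("Which minivans should I compare?", demoTools()));
    }
}
```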
