This was originally posted at the Accelerate Delivery blog

QA in Engineering

A software engineering team's goal is to maximize the throughput and stability of effort put into a product. I'd like to examine where Quality Assurance (QA) fits into this goal. To calibrate, Wikipedia describes QA as:

a means of monitoring the software engineering processes and methods used to ensure quality

In my experience, a designated role performs QA: the QA engineer. This person checks that developers have coded what they intended. QA engineers verify that the developers' effort matches design's and product management's expectations. Here's a diagram showing a simplified delivery flow for a new feature, and where QA fits:

As mentioned, engineering's goal is to maximize throughput and stability. What do we mean by maximizing stability? We can substitute "expected behavior" for "stability". We want to maximize the expected behavior from our product. This expected behavior can cover a wide spectrum. On one end of the spectrum, stability is ensuring operational needs. For example, stability can mean that your product scales quickly and reliably in a high-traffic scenario. On the other end of the spectrum, stability is ensuring UI requests. For example, stability can mean that the blue button has the color and typography that design intended.

The QA engineer's domain is stability. Adding a QA verification step to your delivery pipeline has an impact. What is the trade-off for this stability?

The Good

First, QA engineers improve quality if they perform their job. Developers make mistakes. Let me write it again because it's a fact and it's fine. Developers make mistakes. QA distributes the burden of catching mistakes. That burden is already shared by the developer who wrote the code, the peer reviewers, and the accepting product manager. QA verification is an extra check.

Second, a QA engineer's perspective is a beneficial addition. Developers want to get code working. To do this they generally focus on the happy path. Peer reviewers focus a little less on the happy path, but generally empathize with the developer. QA engineers try to break the code. This is their unique perspective. The code is a black box to them. It's not a well-placed design pattern, the latest JavaScript library, or a clever algorithm. It's a thing they interact with. They want that interaction to be predictable under pressure.

Finally, trust is improved with a QA step in the delivery pipeline. The analogies I hear from product managers are:

- You keep a beat cop on the street to prevent crime

- You put a backstop behind the pitcher

- You have a goalie as a last line of defense

- You can drive 120 mph and get where you're going faster, but the accidents will be worse.

These analogies are bad abstractions (What if we don't need "beat cops" anymore? What if it's more like "asking the pitcher to pitch slower than 50mph"? What if machines let us drive 120mph safely?), but their intention is clear. We have a higher degree of trust if we lower our tolerance for risk. If we abide by the rules, we can expect fewer radical actors performing untrustworthy actions. If we submit our work to QA before it's shipped, then we've done our due diligence.

The Bad

Let me preface this section with caveats. My experience is with outsourced QA engineers in another time zone. Also, I'm speaking to a web development delivery pipeline. Please note these caveats! They make a huge difference when discussing a QA engineer's role. Adjust the following points to your own situation; it's likely different, but related.

Cost

There's a financial cost for QA engineers. Compare this cost against the price of a bad build making it to production. For some products the price of a bad build is higher than for others. In web development, rolling back a bad build is easy. However, even in web development, a bad build can be expensive. QA engineers require money. Ask yourself if it's worth the cost.

Throughput

Throughput will suffer any time you add a step in your delivery pipeline. Our QA team is seven hours ahead of our dev team. This is a bad split. It means that any work we finish has no hope of verification on the same day. Here are the approximate cycle time additions when a developer creates a pull request that QA needs to verify:

- +1 day:
  - Day 1: no issues found, merge PR

- +2 days:
  - Day 1: QA has questions, we answer
  - Day 2: QA approves, merge PR

- +X days:
  - Day 1: QA finds issues, dev updates
  - Day 2: QA reviews, finds issues
  - Day X: repeat until PR merge

Waiting at least one day for QA on every issue is a high price to pay.
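To make the arithmetic concrete, here is a minimal Python sketch of the scenarios above. It assumes every QA round trip costs one full working day because of the time zone split. The function name `added_cycle_days` and its parameters are hypothetical, used only for illustration.

```python
def added_cycle_days(qa_rounds: int, days_per_round: int = 1) -> int:
    """Days added to cycle time when each QA round trip costs a full day.

    qa_rounds is the number of times QA looks at the pull request before
    approving it (assumption: one look per working day).
    """
    if qa_rounds < 1:
        raise ValueError("QA must review the pull request at least once")
    return qa_rounds * days_per_round


# The three scenarios from the list above
print(added_cycle_days(1))  # no issues found
print(added_cycle_days(2))  # QA has questions, then approves
print(added_cycle_days(4))  # one possible "+X": three rounds of fixes first
```

The point of the sketch is that the cost is linear in the number of round trips, so the floor of one day applies even to the most trivial change.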

Stability

At PBS, for the pbs.org product, we ran an experiment. We had no designated QA role for two months. Of the 202 requests we delivered in that time, we flagged <5% as quality issues. These quality issues were trivial by engineers' and product managers' standards. I translated "trivial" to mean that they were easy to remedy and did not impact the mission. A few examples are:

- Pressing spacebar on Firefox did not play/pause video

- We displayed a "Donation" screen instead of a "Related Videos" screen at the end of a subset of videos

- A carousel display unexpectedly reverted to a previous iteration

These were not system outages or recovery events (in the MTTR sense). With a mature, reliable automated test suite, we could increase throughput with little impact on stability.

The Mitigations

How do you compromise on throughput while increasing stability?

Selective QA

We don't have to have a verification step for every change. There are cases where QA is more valuable:

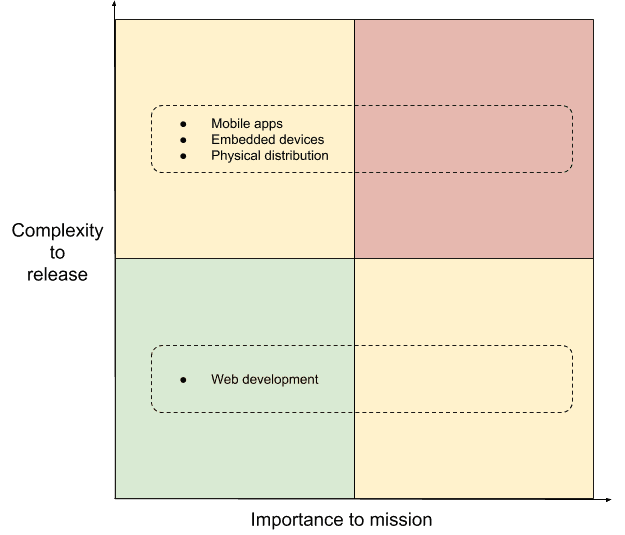

This diagram shows risk quadrants as mission importance and deployment complexity increase. There is a green, low-risk quadrant for delivery without QA. There are yellow caution areas where you should use your discretion. Finally, the red quadrant illustrates high-risk, high-value work that benefits from QA.
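One way to read the quadrants is as a simple decision rule. The sketch below assumes each axis is reduced to a yes/no judgment, which is coarser than the diagram. The names `Risk` and `qa_risk` are hypothetical.

```python
from enum import Enum


class Risk(Enum):
    GREEN = "deliver without QA"
    YELLOW = "use your discretion"
    RED = "send through QA"


def qa_risk(mission_critical: bool, complex_deploy: bool) -> Risk:
    """Map a change onto the risk quadrants described above."""
    if mission_critical and complex_deploy:
        return Risk.RED  # high on both axes
    if mission_critical or complex_deploy:
        return Risk.YELLOW  # high on exactly one axis
    return Risk.GREEN  # low on both axes


# Example: a copy tweak on a low-traffic page with a routine deploy
print(qa_risk(mission_critical=False, complex_deploy=False).value)
```

In practice the two judgments are a team call, not something a function can decide. The value of writing the rule down is that the default path (green) skips the verification step.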

Move QA into peer review

You can collapse the peer review and verification steps into one. Have QA engineers verify a developer's work on the pull request. This still impacts Cycle Time. The upside is that it allows us to continue trunk-based development. When we merge the pull request it's ready for deployment. There's no need for valueless release management via git cherry-picking. QA has verified the work and trunk is in a deployable state.

Quality Auditor

Change the point where QA verifies quality. The QA engineer monitors the product in production. They create issues at the beginning of the delivery pipeline. This is in contrast to a verification step in the middle of the pipeline. The benefit of the approach is that QA doesn't affect Cycle Time. Developers and QA engineers don't get bogged down in hashing out edge cases mid-development. This keeps work-in-progress to a minimum. However, production stability may decrease. The hope is that auditors will catch issues and maintain quality over the long term.

Automate 🤖

Write and trust your tests. Software developers become responsible for coding their QA companion. We'll make mistakes. The goal is to never make the same mistake twice.
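As an illustration of "never make the same mistake twice", here is a minimal regression-test sketch in Python. The `Player` class is a hypothetical stand-in, not the pbs.org player, and the tests are written as plain functions that a runner such as pytest would collect. The idea is only that each escaped bug gets a test that pins the expected behavior.

```python
class Player:
    """Hypothetical stand-in for a video player (not the real product code)."""

    def __init__(self) -> None:
        self.playing = False

    def handle_key(self, key: str) -> None:
        # Spacebar toggles play/pause; every other key is ignored here.
        if key == " ":
            self.playing = not self.playing


def test_spacebar_toggles_playback() -> None:
    # Regression test for a bug like "spacebar did not play/pause video".
    player = Player()
    player.handle_key(" ")
    assert player.playing
    player.handle_key(" ")
    assert not player.playing


def test_other_keys_do_not_toggle_playback() -> None:
    player = Player()
    player.handle_key("a")
    assert not player.playing
```

A browser-specific bug would need a browser-level test to reproduce faithfully. This sketch only shows the shape of the habit: reproduce the mistake in a test, fix it, and keep the test.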

Self Reflection

Examine where your delivery process could improve. Do you have an increasing backlog of features? Try increasing your throughput. Do you have an increasing backlog of bugs? Try increasing your stability. In addition, examine your company's road map. Is your team accomplishing long-term plans? Of your 2018 "big ideas", how many were achieved? If plans are unrealized, ask yourself if ambition is worth the price of safety. Quoting Niccolò Machiavelli's The Prince:

All courses of action are risky, so prudence is not in avoiding danger (it's impossible), but calculating risk and acting decisively. Make mistakes of ambition and not mistakes of sloth. Develop the strength to do bold things, not the strength to suffer.
