The anatomy of a livestream

Livestreaming: you turn on your camera, connect it to your software, and suddenly anyone around the world can see you through your camera! It almost seems like magic, but in reality there is a lot of heavy lifting occurring as your video traverses the internet to your viewers.

Have you ever wondered how livestreaming video works? In this post, we’ll walk through the steps that occur at api.video to create, encode and distribute your live video stream to a global audience.

Basics of a Live Stream

When writing this article, I planned to describe a livestream from api.video as a “pipe” that collects video from the streamer and delivers it to viewers. As I drew the diagram below, it started out like a pipe, but it became more complex as the stream split off into thousands of smaller connections that deliver the livestream to each viewer.

As I looked at the diagram (perhaps I squinted a bit), I realised that this diagram more closely resembles a motor neuron than a pipe:

A motor neuron receives an impulse from the brain at its dendrites. These impulses are combined into one message, sent along the axon, and then split among the axon terminals, which transmit it to the muscles. (A huge thank you to my daughter Grace, who has taken biology a lot more recently than I have.)

Livestream as Neuron

When you create a livestream at api.video, you are creating the 'neuron' that transmits video from your lens, modifies the signal to send out, and then passes the signal through the internet to your viewers. In your body, the brain sends a signal to the neuron with chemicals called neurotransmitters to kick off the signalling process.

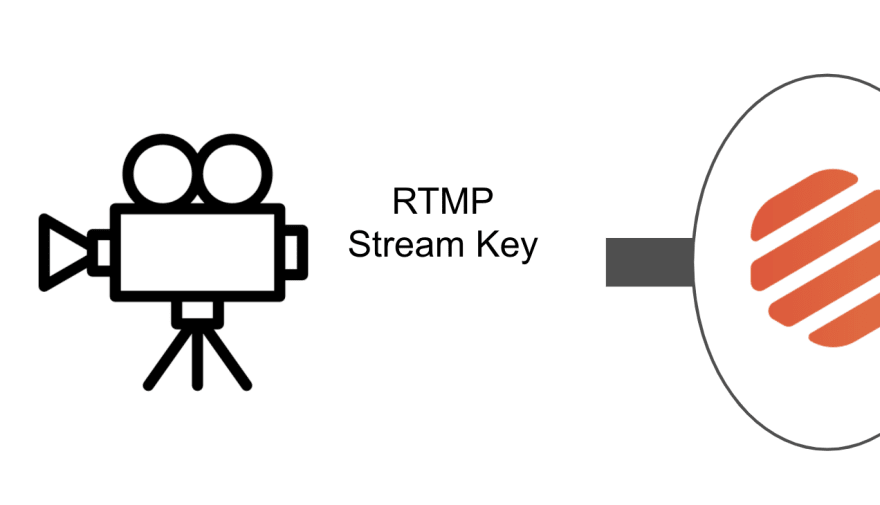

The "brain" of your livestream is your camera plus the video processing software on your computer. We have tutorials on using OBS, Zoom, and a web browser as the brains that connect to your stream. Your 'neurotransmitter' is a streamKey plus the RTMP URL (rtmp://broadcast.api.video/s), which together start the transmission of your video.
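
Most encoders ask for the server URL and the stream key in separate fields and combine them when they publish. The sketch below shows that combination, assuming the common `<server>/<streamKey>` convention; the helper name `publish_url` and the sample key are hypothetical, so check your encoder's settings for how it actually joins the two.

```python
from urllib.parse import urlparse

RTMP_SERVER = "rtmp://broadcast.api.video/s"


def publish_url(stream_key: str, server: str = RTMP_SERVER) -> str:
    """Join an RTMP server URL and a stream key (assumed <server>/<key> convention)."""
    if not stream_key or "/" in stream_key:
        raise ValueError("stream key must be a non-empty string without '/'")
    if urlparse(server).scheme not in ("rtmp", "rtmps"):
        raise ValueError(f"expected an RTMP server URL, got {server!r}")
    return server.rstrip("/") + "/" + stream_key


if __name__ == "__main__":
    # Hypothetical key: use the streamKey from your own livestream instead.
    print(publish_url("my-stream-key"))
```

Treat the stream key like a password: anyone who has it can broadcast into your livestream.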

Once you have connected your camera to the livestream, the video travels across the internet to api.video, is transcoded, and then travels as a single signal across the internet to a CDN. At the CDN, each segment of the livestream is cached and sent on to each individual viewer.

When you create a livestream, you receive a link (and an iframe) for the “other end” of the stream, where your many viewers can watch it. The link will look something like this: https://embed.api.video/live/li400mYKSgQ6xs7taUeSaEKr
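
If you ever need to build the embed yourself, an iframe is just an HTML element whose `src` is the player link. This is a minimal sketch: the `embed_iframe` helper, the width and height, and the `allowfullscreen` attribute are illustrative choices, not necessarily what api.video's generated iframe contains.

```python
from html import escape


def embed_iframe(player_url: str, width: int = 640, height: int = 360) -> str:
    """Build an <iframe> tag that embeds a player URL (illustrative attributes only)."""
    if not player_url.startswith("https://"):
        raise ValueError("player URL should be an https:// URL")
    src = escape(player_url, quote=True)  # guard against quotes breaking the attribute
    return (
        f'<iframe src="{src}" width="{width}" height="{height}" '
        f'frameborder="0" allowfullscreen></iframe>'
    )


print(embed_iframe("https://embed.api.video/live/li400mYKSgQ6xs7taUeSaEKr"))
```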

Just as your muscles receive signals from the same neurons over and over to contract and relax, you can reuse your livestream. The great part of reusing your livestream is that your settings stay the same, both in your recording setup AND for your viewers.

Digging deeper

For most of you, that’s all you want to know: hook up the pieces, and the video will stream. Like a signal in a neuron, the data travels along the expected path, and it works without any conscious effort. But let’s look a bit deeper into how the livestream works.

Connecting to Broadcast

The connection between your camera and the api.video livestream is the streamKey and the RTMP url. RTMP stands for “Real Time Messaging Protocol” and was initially used by Macromedia/Adobe for (insert time warp sound) Flash Video.

Flash? I thought Adobe was completely stopping support of Flash in 2020. Yes, they are, but RTMP and Flash video are used in many live streaming applications, if only to deliver video from the recorder to the media server. It is popular in these setups because it has VERY low latency. Once it arrives at the media server, it must then be transcoded into a more widely supported format before final video delivery, as support for Flash in browsers no longer exists.

If your video recording output is not RTMP, you are probably using a tool like FFmpeg to transcode your video into FLV (literally Flash Video) to send to the server.
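
As an example, this sketch builds an FFmpeg command that pushes a local file to an RTMP endpoint as FLV. It assumes FFmpeg is installed with libx264 support, and that the publish URL is the RTMP URL with your stream key appended. The file name and key are placeholders. The flags are standard FFmpeg options: `-re` reads the input at its native frame rate, the way a live source would arrive, and `-f flv` selects the Flash Video container that RTMP carries.

```python
import shlex


def ffmpeg_rtmp_command(input_path: str, publish_url: str) -> list[str]:
    """Build an FFmpeg argument list that streams a file to an RTMP endpoint as FLV."""
    return [
        "ffmpeg",
        "-re",                   # read input in real time, like a live camera feed
        "-i", input_path,
        "-c:v", "libx264",       # encode video as H.264
        "-preset", "veryfast",   # trade some compression for lower CPU use
        "-c:a", "aac",           # encode audio as AAC
        "-f", "flv",             # wrap in the Flash Video container sent over RTMP
        publish_url,
    ]


if __name__ == "__main__":
    cmd = ffmpeg_rtmp_command("input.mp4", "rtmp://broadcast.api.video/s/YOUR_STREAM_KEY")
    print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` would start the stream, provided `ffmpeg` is on your PATH and the key is valid.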

Transcoding

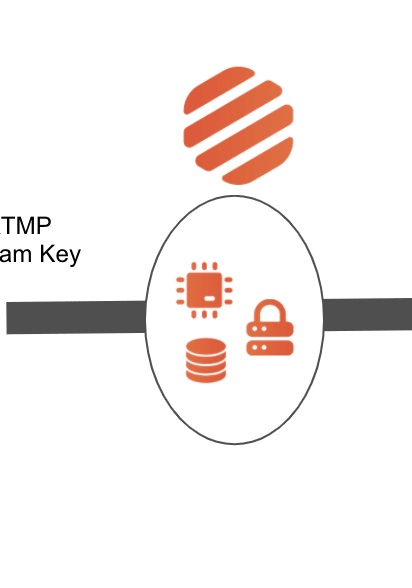

Your video leaves your camera and heads to api.video. When it arrives at the api.video servers, our live transcoders begin transforming the Flash Video into an HLS (HTTP Live Streaming) stream.

An HLS stream consists of several renditions at different sizes and bitrates to ensure proper delivery of your stream to end users. Additionally, each livestream is cut into 2-second segments of video.
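
To make the different sizes and bitrates concrete: in HLS, a master playlist lists each rendition with its bandwidth and resolution, and the player chooses among them. The sketch below writes such a playlist. The rendition ladder (resolutions, bitrates, and playlist paths) is a hypothetical example, not api.video's actual ladder.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str        # hypothetical playlist directory, e.g. "720p"
    width: int
    height: int
    bandwidth: int   # peak bits per second, as the HLS BANDWIDTH attribute expects


# Hypothetical ladder, for illustration only.
LADDER = [
    Rendition("1080p", 1920, 1080, 5_000_000),
    Rendition("720p", 1280, 720, 2_800_000),
    Rendition("480p", 854, 480, 1_400_000),
    Rendition("240p", 426, 240, 400_000),
]


def master_playlist(renditions: list[Rendition]) -> str:
    """Build an HLS master playlist pointing at one media playlist per rendition."""
    lines = ["#EXTM3U"]
    for r in renditions:
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={r.bandwidth},RESOLUTION={r.width}x{r.height}"
        )
        lines.append(f"{r.name}/index.m3u8")
    return "\n".join(lines) + "\n"


print(master_playlist(LADDER))
```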

Did you know that if you stream 4K video to us, we’ll output a 4K stream to your viewers?

Each stream has a manifest file that lists its most recent segments. At the time of this writing (October 2020), each manifest lists the 3 newest segments, or about 6 seconds of video. The manifest and the corresponding video segments are quickly transmitted to the many endpoints that are watching the video. As you might imagine, a new manifest file is produced every few seconds, and it must travel from the servers to each player in addition to all of the video segments.
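
Here is a minimal sketch of how a live media playlist slides forward, assuming 2-second segments and a 3-segment window. Each time a new segment is produced, the oldest one drops off and `#EXT-X-MEDIA-SEQUENCE` advances, which is why players keep re-fetching the manifest. The `segment_N.ts` naming scheme is hypothetical.

```python
SEGMENT_SECONDS = 2
WINDOW = 3


def live_media_playlist(latest_seq: int, window: int = WINDOW,
                        seg_seconds: int = SEGMENT_SECONDS) -> str:
    """Return a live HLS media playlist listing the `window` newest segments."""
    first = max(0, latest_seq - window + 1)
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{seg_seconds}",
        f"#EXT-X-MEDIA-SEQUENCE:{first}",   # sequence number of the first listed segment
    ]
    for seq in range(first, latest_seq + 1):
        lines.append(f"#EXTINF:{seg_seconds:.1f},")
        lines.append(f"segment_{seq}.ts")   # hypothetical naming scheme
    # No #EXT-X-ENDLIST tag: its absence tells the player the stream is still live.
    return "\n".join(lines) + "\n"


print(live_media_playlist(latest_seq=10))
```

Three 2-second segments is also the shortest window RFC 8216 allows for a live playlist with a 2-second target duration, since a live playlist must not be shorter than three target durations.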

Internet to the CDN

Once created, the video segments are requested by your users all around the world. The segments leave api.video, and travel through the internet to a Content Distribution Network (CDN) node. At the CDN, the segments are cached so that other viewers in that region do not need to go all the way back to the home server for the segments. From the CDN, the video continues the "last mile" to your viewers, where they can see the video.
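
A toy model of the edge caching described above: the first request for a segment in a region is a cache miss that goes back to the origin, and later requests are served from the edge. `fetch_from_origin` is a hypothetical stand-in for the HTTP request back to api.video. The short TTL for manifests and long TTL for segments is an assumption about typical live-HLS caching, not api.video's documented CDN configuration.

```python
import time
from typing import Callable


class EdgeCache:
    """Toy CDN edge node: caches responses by path with a per-entry TTL."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes],
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch_from_origin
        self._clock = clock
        self._store: dict[str, tuple[float, bytes]] = {}  # path -> (expires_at, body)
        self.origin_requests = 0

    @staticmethod
    def ttl_for(path: str) -> float:
        # Manifests change every segment, so cache them briefly; segments never change.
        return 1.0 if path.endswith(".m3u8") else 300.0

    def get(self, path: str) -> bytes:
        now = self._clock()
        cached = self._store.get(path)
        if cached is not None and cached[0] > now:
            return cached[1]                  # cache hit: served from the edge
        body = self._fetch(path)              # cache miss: go back to the origin
        self.origin_requests += 1
        self._store[path] = (now + self.ttl_for(path), body)
        return body


if __name__ == "__main__":
    edge = EdgeCache(lambda path: f"bytes of {path}".encode())
    for _ in range(1000):                     # 1,000 viewers in one region
        edge.get("/live/720p/segment_42.ts")
    print(edge.origin_requests)               # only the first request reached the origin
```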

Viewing

At the end of the api.video livestream, you use the provided player URL or iframe on your webpage. This url allows the video to leave the api.video 'neuron' and appear on your webpage or mobile app for your viewers to consume the video.

Player feedback

Unlike a neuron, where information travels just one way, the connection between api.video and the player is not a one-way pipe. As the player receives the manifest files, it can request different video bitrates to keep playback smooth if network connectivity changes.
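
A minimal sketch of the kind of decision a player makes: measure recent download throughput, then pick the highest rendition whose bitrate fits under that throughput with some headroom. Real players use more elaborate heuristics. The 0.8 safety factor, the `choose_bitrate` name, and the bitrate list here are assumptions for illustration.

```python
def choose_bitrate(available_bps: list[int], measured_throughput_bps: float,
                   safety_factor: float = 0.8) -> int:
    """Pick the highest bitrate that fits within a fraction of measured throughput.

    Falls back to the lowest bitrate when even that exceeds the budget.
    """
    if not available_bps:
        raise ValueError("no renditions to choose from")
    budget = measured_throughput_bps * safety_factor
    fitting = [b for b in available_bps if b <= budget]
    return max(fitting) if fitting else min(available_bps)


LADDER_BPS = [400_000, 1_400_000, 2_800_000, 5_000_000]  # hypothetical ladder

print(choose_bitrate(LADDER_BPS, 4_000_000))  # budget 3.2 Mbps -> 2800000
print(choose_bitrate(LADDER_BPS, 300_000))    # too slow for anything -> 400000
```

Leaving headroom below the measured throughput makes the player less likely to stall when bandwidth dips between manifest refreshes.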

Rewind

The manifest file lists the 3 newest segments of the video for playback, but communication also flows from the player back to the server. If your users “rewind” the live stream, the player can request a manifest and segments for parts of the video that are in the “past.” This means viewers can watch earlier parts of the live video (up to 5 minutes behind the live point) in our DVR mode.
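
Because every segment is 2 seconds long, rewinding is mostly arithmetic. A 5-minute DVR window holds 300 / 2 = 150 segments, and a rewind of N seconds lands N / 2 segments behind the live edge. This is a minimal sketch that assumes those two numbers and HLS-style sequence numbers. The function name `segment_for_rewind` is hypothetical.

```python
SEGMENT_SECONDS = 2
DVR_WINDOW_SECONDS = 5 * 60                            # 5 minutes of rewind
DVR_SEGMENTS = DVR_WINDOW_SECONDS // SEGMENT_SECONDS   # 150 segments


def segment_for_rewind(live_seq: int, rewind_seconds: float) -> int:
    """Return the sequence number to play after rewinding from the live edge.

    The rewind is clamped to the DVR window, so the player never asks for
    segments that have already been discarded.
    """
    if rewind_seconds < 0:
        raise ValueError("rewind must be non-negative")
    segments_back = min(int(rewind_seconds // SEGMENT_SECONDS), DVR_SEGMENTS)
    return max(0, live_seq - segments_back)


print(DVR_SEGMENTS)                     # 150
print(segment_for_rewind(1000, 30))     # 15 segments back -> 985
print(segment_for_rewind(1000, 3600))   # clamped to 150 back -> 850
```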

Conclusion

A neuron fires information from the brain to the muscles instantaneously and efficiently, thousands of times a second. Similarly, a livestream carries data from the streamer, through api.video, to your viewers in a fast, seamless, and efficient way.
