Video is one of the most popular forms of media today, with billions of hours of video content being watched every day. But have you ever wondered how all of this video content is compressed and decompressed? Or what the difference is between a codec and a container? Understanding these concepts is crucial for anyone who works with video, whether you’re a content creator, marketer, or just someone who enjoys watching videos online. In this article, we’ll take a deep dive into the world of video formats, codecs, and containers. We’ll explore the most popular codecs used today, such as H.264, H.265, and VP9, and discuss how they work to compress and decompress video files. We’ll also examine some of the most popular video formats used today, such as MP4, AVI, and MOV. By the end of this article, you’ll have a better understanding of how video files work and why it’s important to understand these concepts.

With the rise of video-sharing platforms like YouTube, Vimeo, and TikTok, more and more people are uploading videos to the internet every day. Understanding codecs and compression is essential for creating high-quality videos that can be easily shared and viewed on different devices and platforms. Similarly, understanding container formats is important for ensuring that your videos are compatible with different software applications and operating systems.

By using the right codecs and container formats, you can create videos that are optimized for different use cases. For example, if you’re creating a video for streaming over the internet, you’ll want to use a codec that provides good compression while maintaining high-quality video playback. On the other hand, if you’re creating a video for archival purposes, you may want to use a codec that provides lossless compression to preserve the original quality of the video.

Now that we’ve established the importance of codecs and containers in digital video, let’s dive a little deeper into video encoding and the different types of codecs that are commonly used.

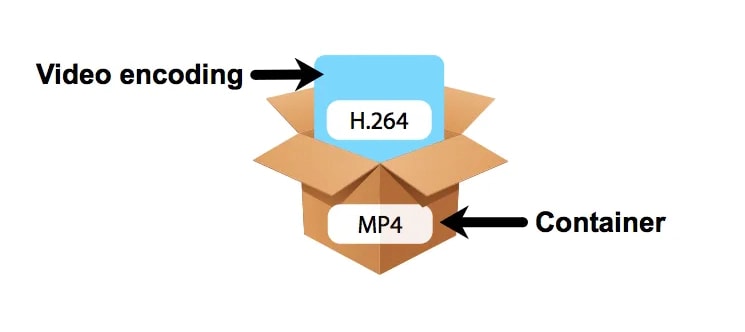

Encoding is the process of exporting digital video into a format and specification suitable for playback by a user. Every video file involves two elements: a codec and a container. A codec (short for coder-decoder, or compression-decompression) is an algorithm that compresses the video data to reduce its size and decompresses it for playback. A container is a file format that holds the compressed video data along with other information such as audio tracks, subtitles, and metadata.

Imagine a shipping container filled with packages of many types. In this analogy, the shipping container is the container format, and the codec is the tool that creates the packages and places them in the container. The container format determines how the compressed video data is stored and organized within the file.

Deep dive into compression-decompression algorithms

Codecs compress video data using algorithms that analyze it and remove redundant or barely perceptible information while preserving as much visible quality as possible. During compression, the codec identifies areas that can be represented more compactly without noticeably affecting the overall quality of the video, such as regions that barely change from one frame to the next. It then stores only the data required to recreate the video.

When decompressing the video data, the codec reverses this process: it reads the compressed data and reconstructs the frames, exactly in the case of a lossless codec or as a close approximation in the case of a lossy one. Decoding happens in real time during playback, which is part of what makes it possible to stream high-quality video without waiting for the whole file to download.

There are two main types of codecs: lossy and lossless. Lossy codecs use compression algorithms that discard some of the original video data to reduce the file size. Lossless codecs, on the other hand, use compression algorithms that preserve all of the original video data while still reducing the file size.
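The difference between the two can be sketched with a toy example. This is only an illustration, not how a real video codec works: it assumes a made-up "frame" of noisy 8-bit samples, uses `zlib` as a stand-in lossless compressor, and models lossy compression as simple quantization (the `STEP` value is an arbitrary choice).

```python
import random
import zlib

# Toy "frame": 8-bit samples around 100 with a little random noise.
rng = random.Random(0)
frame = bytes(100 + rng.randrange(8) for _ in range(4096))

# Lossless: the decompressed bytes are identical to the original.
packed = zlib.compress(frame)
restored = zlib.decompress(packed)

# Lossy (toy model): round each sample down to a multiple of STEP
# before compressing. The discarded detail cannot be recovered.
STEP = 8
quantized = bytes((s // STEP) * STEP for s in frame)
lossy_packed = zlib.compress(quantized)
lossy_restored = zlib.decompress(lossy_packed)

max_error = max(abs(a - b) for a, b in zip(frame, lossy_restored))

print("lossless identical:", restored == frame)
print("lossy identical:   ", lossy_restored == frame)
print("sizes (original, lossless, lossy):",
      len(frame), len(packed), len(lossy_packed))
print("largest per-sample error after lossy round trip:", max_error)
```

The lossy version ends up smaller because throwing away the noisy low-order detail leaves the compressor with far more repetition to exploit, at the cost of a small error in every sample.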

There are many different lossy video codecs available today, each with its own strengths and weaknesses. Some of the most popular codecs include H.264, H.265 (also known as HEVC), VP9, and AV1. H.264 is currently the most widely used codec and is supported by most devices and platforms. It provides good compression while maintaining high-quality video playback.

Let's take H.264 as an example and explain how its algorithms actually work:

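H.264 uses block-oriented motion compensation. It divides each frame into small blocks called macroblocks (16×16 pixels, which can be split into smaller partitions) and, for each block, searches previously decoded frames for an area that looks similar. Instead of storing the block's pixels again, the encoder stores a motion vector pointing to that area plus the (usually small) difference between the prediction and the actual block. By predicting blocks from their neighbors in time, H.264 can compress the video data far more efficiently than by coding every frame on its own.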
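The core idea of block matching can be sketched in a few lines. This is a simplified illustration rather than H.264's actual search: it assumes tiny grayscale frames stored as lists of lists, a 4×4 block, an exhaustive search window, and the sum of absolute differences (SAD) as the matching cost. The helper names are hypothetical.

```python
def make_frame(top, left, height=16, width=16, size=4):
    """Black frame with one textured size x size square at (top, left)."""
    frame = [[0] * width for _ in range(height)]
    for r in range(size):
        for c in range(size):
            frame[top + r][left + c] = 50 + 10 * r + c
    return frame


def sad(prev, cur, py, px, cy, cx, size):
    """Sum of absolute differences between two size x size blocks."""
    return sum(
        abs(prev[py + r][px + c] - cur[cy + r][cx + c])
        for r in range(size)
        for c in range(size)
    )


def find_motion_vector(prev, cur, top, left, size=4, search=4):
    """Return (cost, dy, dx) of the best match in `prev` for a block of `cur`."""
    height, width = len(prev), len(prev[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            py, px = top + dy, left + dx
            # Skip candidate blocks that would fall outside the frame.
            if 0 <= py <= height - size and 0 <= px <= width - size:
                cost = sad(prev, cur, py, px, top, left, size)
                if best is None or cost < best[0]:
                    best = (cost, dy, dx)
    return best


previous_frame = make_frame(top=4, left=4)
current_frame = make_frame(top=5, left=7)  # square moved 1 down, 3 right

# Vector from the current block back to its match in the previous frame.
print(find_motion_vector(previous_frame, current_frame, top=5, left=7))
```

A cost of zero means the block can be described entirely by its motion vector; in real footage the match is rarely perfect, so the encoder also stores the leftover difference.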

In a little more detail:

After prediction, the remaining data still has to be written out as compactly as possible, which is the job of entropy coding. H.264 offers two entropy coders: CAVLC (context-adaptive variable-length coding) and the more efficient CABAC (context-adaptive binary arithmetic coding). CABAC compresses the symbols that describe each frame. These are not raw pixel values but syntax elements such as quantized transform coefficients, motion vector differences, and prediction modes, each converted into a sequence of binary decisions. CABAC uses adaptive probability estimation to adjust the probability of each decision based on the context in which it appears. As more data is coded, the estimates better reflect the actual distribution of symbols in the stream, which allows CABAC to achieve better compression than entropy coders that use fixed probabilities.
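To see why adaptive probabilities help, here is a minimal sketch. It is not CABAC itself, which uses many context models, a table-driven probability state machine, and an arithmetic coder. It only assumes a single binary model with simple counts and compares the ideal code length (the sum of -log2 p over all symbols) with and without adaptation.

```python
import math


def ideal_code_length(bits, adaptive):
    """Ideal total code length, in bits, for a sequence of 0/1 symbols."""
    zeros, ones = 1, 1  # one pseudo-count each, so the first estimate is 0.5
    total = 0.0
    for b in bits:
        p_one = ones / (zeros + ones) if adaptive else 0.5
        p = p_one if b else 1.0 - p_one
        total += -math.log2(p)
        # Update the counts so later estimates follow the observed data.
        if b:
            ones += 1
        else:
            zeros += 1
    return total


# A skewed source: nine zeros for every one.
symbols = [1 if i % 10 == 0 else 0 for i in range(1000)]

fixed_bits = ideal_code_length(symbols, adaptive=False)
adaptive_bits = ideal_code_length(symbols, adaptive=True)

print(f"fixed p=0.5: {fixed_bits:.0f} bits")
print(f"adaptive:    {adaptive_bits:.0f} bits")
```

With a fixed probability of 0.5 every symbol costs exactly one bit, while the adaptive model quickly learns that zeros are common and spends far less than one bit on each of them.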

The same process occurs in reverse when decoding an H.264 video.

H.265 is a newer lossy codec that provides even better compression than H.264 but requires more processing power to encode and decode. VP9 is an open, royalty-free codec developed by Google that targets roughly the same compression efficiency as H.265, so it needs less bandwidth than H.264 for comparable quality. AV1 is a newer royalty-free codec that provides even better compression than VP9, but hardware support for it is not yet as widespread across devices and platforms.

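Lossless codecs, on the other hand, retain all of the original data after decompression. Examples include FFV1 and HuffYUV. Production codecs such as Apple ProRes are often grouped with them, but ProRes is technically lossy: it is designed to be visually lossless at very high bitrates. Either way, these codecs can't compress video enough for live web streaming. In contrast, a lossy delivery codec compresses a file by permanently discarding data, starting with whatever is redundant or least noticeable.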

Lossless compression is also used where it is important that the original and the decompressed data be identical, or where deviations from the original would be harmful, as in the production stage of videos and movies.

Video formats: not just .mp4

Video formats are more than just a file’s extension, like the .mp4 in Video.mp4. A video file is a whole package of data: the video stream, the audio stream, and any metadata included with the file. All of this data is read by a video player to present the content during playback.

The video stream includes the data necessary for motion video playback, while the audio stream includes any data related to sound. Metadata is any data outside of video and audio, including bitrate, resolution, subtitles, device type, and date of creation.
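One way to see that a format is defined by its contents rather than its extension is to look at how an MP4 file is laid out. MP4 follows the ISO base media file format, in which the file is a sequence of "boxes", each starting with a 4-byte big-endian size and a 4-byte type. The sketch below walks the top-level boxes; the `sample` bytes are synthetic and built only for illustration, and the helper names are hypothetical.

```python
import struct


def top_level_boxes(data):
    """Yield (type, size) for each top-level box in an MP4-style byte string."""
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack_from(">I4s", data, offset)
        header = 8
        if size == 1:
            # A size of 1 means a 64-bit size follows the type field.
            (size,) = struct.unpack_from(">Q", data, offset + 8)
            header = 16
        elif size == 0:
            # A size of 0 means the box runs to the end of the data.
            size = len(data) - offset
        if size < header:
            break  # malformed box: stop instead of looping forever
        yield box_type.decode("ascii", "replace"), size
        offset += size


def make_box(box_type, payload):
    """Build a box with a 32-bit size header (illustration only)."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


# Synthetic data: file type box, empty movie box, 16 bytes of media data.
sample = (
    make_box(b"ftyp", b"isom" + b"\x00\x00\x02\x00" + b"isommp41")
    + make_box(b"moov", b"")
    + make_box(b"mdat", b"\x00" * 16)
)

for box_type, size in top_level_boxes(sample):
    print(box_type, size)
```

In a real file, `ftyp` identifies the format variant, `moov` holds the metadata and track descriptions, and `mdat` holds the compressed audio and video samples.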

In addition to standard metadata fields, MP4 files can also contain Extensible Metadata Platform (XMP) metadata. XMP metadata is a standard for embedding metadata in digital files that was developed by Adobe Systems. It allows you to add custom metadata fields to your video files that can be used to store additional information about the video.

Some examples of XMP metadata fields that can be added to MP4 files include camera settings, location data, and copyright information. This information can be useful for video production and editing. For example, if you are working on a video project that includes footage from multiple cameras, you can use XMP metadata to keep track of which camera was used for each shot.

There are many different video formats available, each with its own advantages and disadvantages. Some of the most common video formats include:

- MP4: This is a popular format that is widely supported by most devices and platforms. It most commonly carries H.264 video, but it can also hold other codecs such as H.265 and AV1, and it stores both audio and video data.

- AVI: This format was developed by Microsoft and is widely used on Windows-based systems. It can hold a variety of codecs for video compression, including DivX and XviD.

- WMV: This format was developed by Microsoft and was commonly used for streaming video on the web in the Windows Media era. It uses the Windows Media Video codec for compression.

- MOV: This format was developed by Apple and is commonly used on Mac-based systems. It often carries H.264 or ProRes video and can store both audio and video data.

Choosing the right video format depends on your specific needs. If you want a format that is widely supported by most devices and platforms, including for video on the web, then MP4 is a good choice. If you are editing on a Mac, you will often work with MOV. AVI and WMV are mostly worth considering when you need compatibility with older Windows-based software or existing archives.

Bit depth and rate…

In video files, bit depth and color depth are two related concepts that are often confused with each other. As the terms are commonly used, bit depth refers to the number of bits used to represent each color channel (for example red, green, and blue) of a pixel. The higher the bit depth, the more shades each channel can represent. Color depth, on the other hand, refers to the total number of bits used for a whole pixel across all of its channels, and therefore to the number of colors that can be displayed. It is measured in bits per pixel (bpp).

For example, if a video file has a bit depth of 8 bits and a color depth of 24 bpp, it means that each of the three color channels of a pixel is stored in 8 bits (256 levels), and each pixel can display one of about 16.7 million colors (2^24).

In summary, bit depth refers to the number of bits used per color channel, while color depth refers to the total number of bits per pixel, which determines how many colors can be displayed in an image or video file.
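The arithmetic behind the 16.7 million figure is short enough to check directly. This sketch assumes bit depth is counted per color channel and that each pixel has three channels; the function names are made up for the example.

```python
def color_depth_bpp(bits_per_channel, channels=3):
    """Total bits per pixel for a given per-channel bit depth."""
    return bits_per_channel * channels


def color_count(bits_per_channel, channels=3):
    """Number of distinct colors a pixel can take."""
    return 2 ** color_depth_bpp(bits_per_channel, channels)


for depth in (8, 10):
    print(f"{depth}-bit: {color_depth_bpp(depth)} bpp, "
          f"{color_count(depth):,} colors")
```

Going from 8-bit to 10-bit video adds only two bits per channel, but it multiplies the number of representable colors by 64.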

Now on to bitrate. Bitrate is the amount of data used to represent each second of video, usually measured in megabits per second (Mbps). The higher the bitrate, the more data there is, which generally means a higher-quality video but also a larger file. When choosing a bitrate, it is important to consider the devices and connections your videos will be played on: high-bitrate streams need more processing power to encode and a faster internet connection to download for playback. There are two main types of bitrate control: CBR (constant bitrate), which holds the rate steady throughout the video, and VBR (variable bitrate), which spends more bits on complex scenes and fewer on simple ones.

For example, if you have a video with a bitrate of 10 Mbps (megabits per second), it means that 10 million bits are transmitted every second.
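Because bitrate is just bits per second, you can estimate file size from it. The sketch below assumes a constant bitrate, decimal units (1 Mbps = 1,000,000 bits per second, 1 MB = 1,000,000 bytes), and ignores audio and container overhead; the function name is hypothetical.

```python
def file_size_megabytes(bitrate_mbps, duration_seconds):
    """Approximate size in MB of a stream at a constant bitrate."""
    total_bits = bitrate_mbps * 1_000_000 * duration_seconds
    return total_bits / 8 / 1_000_000  # 8 bits per byte, then bytes to MB


# One minute of video at 10 Mbps.
print(file_size_megabytes(10, 60))  # 75.0
```

So every minute of 10 Mbps video adds roughly 75 MB, which is why halving the bitrate is the most direct way to halve the file size.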

A useful application for your new understanding of bitrate is making sure the videos you upload to a specific platform meet its minimum and maximum bitrate requirements. This matters when you choose export settings in programs such as Adobe's Premiere Pro or Apple's Final Cut Pro.

Here is a brief guide on the bitrates required for optimal streaming performance on Instagram, TikTok, and YouTube:

- Instagram: Instagram recommends a bitrate of 3-5 Mbps for optimal streaming performance.

- TikTok: TikTok recommends a bitrate of 2.5-3.5 Mbps for optimal streaming performance, with a maximum of 8 Mbps.

- YouTube: YouTube recommends a bitrate of 8 Mbps for 1080p video at 30 frames per second (fps) and 12 Mbps for 1080p video at 60 fps, with a maximum of 50 Mbps at 1080p 60 fps or 68 Mbps at 4K.

Please note that these are general recommendations and the actual bitrate required may vary depending on the video content and other factors.
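If you export often, a small check like the following can catch a mismatched setting before you upload. It is only a sketch: the ranges are copied from the list above and may be out of date, and the dictionary keys and function name are invented for this example.

```python
# Recommended bitrates in Mbps as (low, high), taken from the list above.
RECOMMENDED_MBPS = {
    "instagram": (3.0, 5.0),
    "tiktok": (2.5, 3.5),
    "youtube_1080p30": (8.0, 8.0),
    "youtube_1080p60": (12.0, 12.0),
}


def check_bitrate(platform, bitrate_mbps):
    """Return 'below', 'within', or 'above' the recommended range."""
    low, high = RECOMMENDED_MBPS[platform]
    if bitrate_mbps < low:
        return "below"
    if bitrate_mbps > high:
        return "above"
    return "within"


print(check_bitrate("instagram", 4.0))
print(check_bitrate("tiktok", 6.0))
print(check_bitrate("youtube_1080p60", 10.0))
```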

Playback Techniques for Video and Livestreaming

When on the viewing end of the video streaming pipeline there are a few things to take into account:

Video playback and livestreaming are two different methods of viewing video content. Video playback refers to the process of downloading a video file and playing it back on your device. This is the traditional method of watching videos online. Livestreaming, on the other hand, refers to the process of broadcasting live video content over the internet in real time. This allows viewers to watch events as they happen.

There are several formats available for live streaming. One popular format is HTTP Live Streaming (HLS), which uses adaptive bitrate (ABR) streaming to deliver high-quality video as a sequence of small HTTP-based file segments. The key to this format’s popularity is its use of HTTP, the protocol the web already runs on. This allows for reduced infrastructure costs, greater reach, and simple HTML5 player implementation.

Another popular format is Real-Time Messaging Protocol (RTMP), an originally proprietary protocol developed by Macromedia (later acquired by Adobe Systems) for streaming audio, video, and data over the internet between a server and a client.

A great feature of the HLS format is the M3U8 playlist file. The master playlist lists the available renditions of a stream along with the bandwidth each one needs, which allows the client or device to automatically select the best quality stream it can sustain at any time and to reduce playback buffering when bandwidth or CPU power is limited. This means that if your internet connection slows down or speeds up, the stream will automatically adjust to ensure that you have a smooth viewing experience.
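The selection logic can be sketched as follows. The playlist text is a made-up example in the standard master playlist layout (an `#EXT-X-STREAM-INF` line carrying a `BANDWIDTH` attribute, followed by the rendition's URI), and the rule of picking the highest rendition that fits the measured bandwidth is a simplifying assumption; real players also consider buffer level, screen size, and more.

```python
import re

# Hypothetical master playlist with three renditions.
MASTER_PLAYLIST = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
360p/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2500000,RESOLUTION=1280x720
720p/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080
1080p/index.m3u8
"""


def parse_variants(playlist):
    """Return (bandwidth_bps, uri) pairs sorted from lowest to highest."""
    lines = [ln.strip() for ln in playlist.splitlines() if ln.strip()]
    variants = []
    for i, line in enumerate(lines):
        if line.startswith("#EXT-X-STREAM-INF:") and i + 1 < len(lines):
            # Anchor on ':' or ',' so AVERAGE-BANDWIDTH is not matched.
            match = re.search(r"[:,]BANDWIDTH=(\d+)", line)
            if match:
                variants.append((int(match.group(1)), lines[i + 1]))
    return sorted(variants)


def pick_variant(variants, measured_bps):
    """Pick the best rendition that fits, or the lowest if none do."""
    affordable = [v for v in variants if v[0] <= measured_bps]
    return (affordable[-1] if affordable else variants[0])[1]


variants = parse_variants(MASTER_PLAYLIST)
for bandwidth in (500_000, 3_000_000, 10_000_000):
    print(bandwidth, "->", pick_variant(variants, bandwidth))
```

A player repeats this decision as it downloads each segment, which is how the stream steps down when the connection slows and back up when it recovers.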

Wow, it turns out that the ultimate guide to video is a bit more complex than anticipated 😅🙃.

Congratulations on understanding all the nuances of digital video! It’s no small feat to have a good grasp of such a complex topic. You should be proud of yourself!

If you’re looking for a way to simplify your video hosting, encoding, transcoding, and streaming pipeline, I recommend using api.video. This platform makes it easy to manage your online video needs. Upload your videos, encode them into multiple formats and resolutions, and stream them to your viewers all from one place. Plus, api.video offers a variety of features such as analytics and security options to help you get the most out of your video content.

Whether you’re a seasoned video professional or just starting out, api.video is the perfect solution for simplifying your video workflow.
