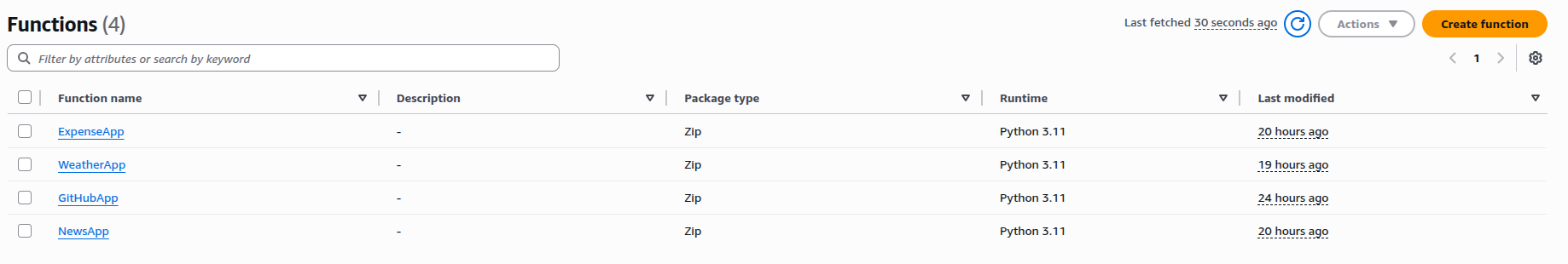

In my ongoing cloud journey, I decided to build a multi-functional serverless dashboard using AWS. The dashboard includes multiple applications like ExpenseApp, WeatherApp, NewsApp, and GitHubApp. Each of these apps runs serverlessly with AWS Lambda, uses API Gateway for routing, and stores data in DynamoDB. For easier deployment, I’ve implemented CI/CD pipelines using GitHub Actions, automating the entire process from code commit to deployment.

Architecture Diagram

Tech Stack Breakdown

Frontend:

- The dashboard's frontend, with its various apps, is built using TypeScript. Since I wanted to focus on the infrastructure, I used AI tools to handle this part for me.

Backend:

- AWS Lambda: The logic for each app (Expense, Weather, News, GitHub) runs on AWS Lambda functions, written in Python. I also used AI to generate the code for these functions.

- API Gateway: This service acts as the router, directing requests to the correct Lambda function based on the API path, such as /ExpenseApp.

- DynamoDB: I used this NoSQL database specifically for the ExpenseApp to store user data. To keep the project simple, I decided not to store API keys for the other apps here.

CI/CD:

- GitHub Actions: I automated the deployment of both the frontend and backend using GitHub Actions, ensuring that any changes are automatically pushed to AWS without any manual steps.

IAM Roles & Permissions

I created a dedicated IAM user to manage the various resources required by the dashboard. The user is limited to the resources necessary for each app. This user’s permissions include creating and updating Lambda functions, managing API Gateway configurations, accessing DynamoDB, and interacting with S3 for frontend assets. Scoping a dedicated IAM user to only these resources is a good security practice, as it follows the principle of least privilege.

Policy Example:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "iam:CreateRole",
            "iam:GetRole",
            "iam:AttachRolePolicy",
            "iam:ListAttachedRolePolicies",
            "iam:PassRole"
          ],
          "Resource": [
            "arn:aws:iam::123456789012:role/LambdaDynamoDBRole",
            "arn:aws:iam::123456789012:role/LambdaDynamoDBCloudWatchRole"
          ]
        },
        ...
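The same scoping idea applies to the DynamoDB access that the ExpenseApp needs. As a rough illustration (a sketch, not the project's actual policy), the Python below builds a statement limited to a single table. The table name comes from this post; the region `us-east-1`, the chosen list of actions, and the helper names are assumptions made for the example.

```python
import json


def dynamodb_table_statement(account_id: str, region: str, table_name: str) -> dict:
    """Build one IAM statement that allows item-level access to a single table."""
    return {
        "Effect": "Allow",
        # Assumed action list: enough for basic create/read/update/delete of items.
        "Action": [
            "dynamodb:GetItem",
            "dynamodb:PutItem",
            "dynamodb:UpdateItem",
            "dynamodb:DeleteItem",
            "dynamodb:Query",
        ],
        # Scope the statement to exactly one table instead of "*".
        "Resource": [f"arn:aws:dynamodb:{region}:{account_id}:table/{table_name}"],
    }


def build_policy(statements: list) -> str:
    """Wrap statements in a policy document and serialize it as JSON."""
    return json.dumps({"Version": "2012-10-17", "Statement": statements}, indent=2)


# Placeholder account ID (same as in the example above) and an assumed region.
policy_json = build_policy(
    [dynamodb_table_statement("123456789012", "us-east-1", "expenses-table")]
)
print(policy_json)
```

Keeping the resource ARN explicit makes it obvious at review time which table a role can touch.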

Database Design For ExpenseApp

For the ExpenseApp, I used DynamoDB as the database. I designed the table with the following schema:

- Table Name: expenses-table

- Partition Key: expenseId (Number)

- Sort Key: timestamp (Number)

- Attributes: description, amount, category, date, and timestamp.

I used DynamoDB On-Demand capacity mode because I only pay for the requests I actually make, which keeps costs minimal for a simple, low-traffic application like this one.
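To make the schema concrete, here is a minimal Python sketch of the table definition and of one item. The key names, attribute names, and table name come from the schema above; the helper names and the sample values are hypothetical. The dictionary uses the parameter names of DynamoDB's CreateTable operation, where `PAY_PER_REQUEST` selects on-demand capacity. The amount is stored as a `Decimal` because boto3's DynamoDB resource layer rejects Python floats.

```python
import time
from decimal import Decimal

TABLE_NAME = "expenses-table"


def table_definition() -> dict:
    """Keyword arguments for a CreateTable call that match the schema above."""
    return {
        "TableName": TABLE_NAME,
        "KeySchema": [
            {"AttributeName": "expenseId", "KeyType": "HASH"},   # partition key
            {"AttributeName": "timestamp", "KeyType": "RANGE"},  # sort key
        ],
        # Only key attributes are declared up front; the rest are schemaless.
        "AttributeDefinitions": [
            {"AttributeName": "expenseId", "AttributeType": "N"},
            {"AttributeName": "timestamp", "AttributeType": "N"},
        ],
        "BillingMode": "PAY_PER_REQUEST",  # on-demand capacity mode
    }


def make_expense_item(expense_id, description, amount, category, date, timestamp=None):
    """Build one item; amount goes through str() so Decimal keeps it exact."""
    return {
        "expenseId": expense_id,
        "timestamp": timestamp if timestamp is not None else int(time.time()),
        "description": description,
        "amount": Decimal(str(amount)),
        "category": category,
        "date": date,
    }


# Hypothetical sample values, for illustration only.
item = make_expense_item(1, "Coffee", "3.50", "Food", "2024-01-15", timestamp=1705312800)
print(item)
```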

Setting Up the Lambda Functions & API Gateway Integration

Each app (Expense, Weather, News, GitHub) has its own Lambda function. Here’s an overview of the integration and automation:

- Lambda Function Deployment:

  - Each app has its own set of Lambda functions, deployed automatically by a deployment script.

  - The functions are connected to the API Gateway endpoints /ExpenseApp, /WeatherApp, /NewsApp, and /GitHubApp.

- API Gateway Routes:

  - I used API Gateway as the main router for my dashboard and set up a dedicated path for each part of my app. For instance, I created a route called /ExpenseApp for the ExpenseApp, added a GET method to it, and linked it directly to my Lambda function. I used Lambda Proxy integration, which passes the entire request straight through to my code so it can handle it.

  - API Gateway Configuration Error: One challenge I encountered was an integration error between API Gateway and the Lambda functions, caused by a mismatch in the expected resource paths. The Lambda functions expected paths like /ExpenseApp, but the routes were defined under the root path /. This was frustrating because I could not get API Gateway to line up with my Lambda functions no matter how permissive I made my permissions. Once I adjusted the paths to match, everything worked.
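With Lambda Proxy integration, the function receives the request as an event dictionary and must return a dictionary with a status code, headers, and a string body. The sketch below shows that contract and why the path has to match. It is an illustration, not the project's handler: the empty `expenses` payload is a placeholder, and it assumes the event's `path` field arrives as `/ExpenseApp`.

```python
import json


def _response(status_code: int, payload: dict) -> dict:
    """Shape a reply the way Lambda Proxy integration expects it."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),  # body must be a string, not a dict
    }


def lambda_handler(event, context):
    """Entry point: API Gateway passes the whole request in `event`."""
    method = event.get("httpMethod")
    path = event.get("path")

    # A request routed from a different resource (for example the root "/")
    # never matches here, which mirrors the path mismatch described above.
    if path != "/ExpenseApp":
        return _response(404, {"error": f"no handler for {path}"})
    if method != "GET":
        return _response(405, {"error": f"{method} not allowed"})

    # Placeholder payload; a real handler would read from DynamoDB here.
    return _response(200, {"expenses": []})


print(lambda_handler({"httpMethod": "GET", "path": "/ExpenseApp"}, None))
```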


Handling CORS (Cross-Origin Resource Sharing)

Another frustrating issue I ran into was getting CORS (Cross-Origin Resource Sharing) to work. My dashboard's frontend had to talk to several backends, and the requests kept getting blocked. I thought I'd solved it by enabling CORS on the root resource of the API Gateway, but that wasn't enough. It turns out I had to go into every child resource (like /ExpenseApp and /WeatherApp) and enable it there too. And that was just the start. I also had to make sure my Lambda functions returned the correct Access-Control headers in their responses. The final challenge was doing all of this with the AWS CLI. I couldn't pass the headers on a single line because the shell kept mangling the quotes. To fix it, I had to create a separate JSON file just for the headers and reference that file in my CLI command. It was a tedious trial-and-error process.
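Both halves of that fix can be sketched in a few lines of Python. The first helper adds the Access-Control headers to a Lambda response; the second builds the header map for API Gateway and writes it to a JSON file, which sidesteps shell quoting. The header values (a wildcard origin, `Content-Type`, `GET,OPTIONS`), the function names, and the file name are assumptions for illustration, not the project's actual settings.

```python
import json

# Assumed header values; a real deployment would likely restrict the origin.
CORS_HEADERS = {
    "Access-Control-Allow-Origin": "*",
    "Access-Control-Allow-Headers": "Content-Type",
    "Access-Control-Allow-Methods": "GET,OPTIONS",
}


def with_cors(response: dict) -> dict:
    """Return a copy of a Lambda proxy response with the CORS headers merged in."""
    merged = dict(response)
    merged["headers"] = {**response.get("headers", {}), **CORS_HEADERS}
    return merged


def integration_response_parameters() -> dict:
    """Header map for an API Gateway integration response.

    Static values must be wrapped in single quotes, which is exactly the
    part that is awkward to type inline in a shell command.
    """
    return {
        f"method.response.header.{name}": f"'{value}'"
        for name, value in CORS_HEADERS.items()
    }


def write_parameters_file(path: str) -> None:
    """Write the header map to a JSON file for the CLI to read."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(integration_response_parameters(), handle, indent=2)


print(with_cors({"statusCode": 200, "body": "{}"}))
```

A file written this way can be handed to the AWS CLI with the `file://` prefix, for example as the value of `--response-parameters` on `aws apigateway put-integration-response`.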

CI/CD Pipeline with GitHub Actions

Backend CI/CD:

- GitHub Actions automates the deployment of Lambda functions whenever code is pushed to the repository.

Frontend CI/CD:

- The frontend is deployed to S3 buckets automatically whenever changes are committed. The GitHub Actions pipeline builds the frontend assets and uploads them to the appropriate S3 bucket for public access.
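The upload step can be pictured with a short Python sketch. This is an illustration of the idea rather than the workflow itself: it assumes the build output lives in a local directory, and the helper names are hypothetical. `upload_all` expects a boto3 S3 client and uses its `upload_file` method, setting a content type so browsers render the files correctly.

```python
import mimetypes
from pathlib import Path


def plan_uploads(build_dir):
    """Return (local_path, s3_key, content_type) for every file under build_dir."""
    root = Path(build_dir)
    plan = []
    for path in sorted(root.rglob("*")):
        if path.is_file():
            # Keys mirror the directory layout, always with forward slashes.
            key = path.relative_to(root).as_posix()
            content_type = mimetypes.guess_type(path.name)[0] or "application/octet-stream"
            plan.append((path, key, content_type))
    return plan


def upload_all(s3_client, bucket, build_dir):
    """Upload each planned file; s3_client is assumed to be boto3.client('s3')."""
    for path, key, content_type in plan_uploads(build_dir):
        s3_client.upload_file(
            str(path), bucket, key, ExtraArgs={"ContentType": content_type}
        )
```

Separating the plan from the upload keeps the file-walking logic testable without touching AWS.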

AWS CLI for Automation

After the completion of my dashboard, I wanted to challenge myself: how do I get all of this stuff onto AWS without touching the AWS console? Manually creating all those Lambda functions, IAM roles, and API Gateway endpoints would be a tedious and hectic process. That's when I used the AWS Command Line Interface (CLI) to automate the entire process.

I wrote a single deploy_infra.sh script to handle everything. The script is smart enough to check if a resource already exists before trying to create it. For each of my apps, the script first creates the necessary IAM roles and gives each app the basic permissions it needs. For the ExpenseApp, it adds extra access to DynamoDB.
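The check-before-create pattern looks roughly like this in Python. The script described above is a shell script driving the AWS CLI, so this is a translation of the idea, not its actual code. `ensure_role` is a hypothetical helper that expects a boto3 IAM client; the trust policy lets Lambda assume the role, and the attached policy is the AWS-managed basic execution policy for writing logs.

```python
import json

# Trust policy that allows the Lambda service to assume the role.
LAMBDA_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# AWS-managed policy granting CloudWatch Logs access to a function.
BASIC_EXECUTION_POLICY_ARN = (
    "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"
)


def ensure_role(iam_client, role_name: str) -> str:
    """Return the role's ARN, creating the role only if it does not exist yet."""
    try:
        return iam_client.get_role(RoleName=role_name)["Role"]["Arn"]
    except iam_client.exceptions.NoSuchEntityException:
        pass  # role is missing, fall through and create it

    created = iam_client.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(LAMBDA_TRUST_POLICY),
    )
    iam_client.attach_role_policy(
        RoleName=role_name, PolicyArn=BASIC_EXECUTION_POLICY_ARN
    )
    return created["Role"]["Arn"]
```

Calling `ensure_role` twice with the same name creates the role once and simply returns the existing ARN the second time, which is what makes a deploy script safe to re-run.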

Once the roles are set up, the script moves to function deployment. It takes my Python code, bundles it with any required libraries, and then uploads it to create a new Lambda function. It also pulls any private API keys from a separate file so I don't accidentally expose them.
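A Python sketch of those two steps, bundling and loading keys, might look like the following. It is an approximation under stated assumptions: the source directory is assumed to already contain the handler plus any installed libraries, and the key file is assumed to hold simple `KEY=VALUE` lines. Both helper names are hypothetical.

```python
import io
import zipfile
from pathlib import Path


def build_lambda_zip(source_dir) -> bytes:
    """Zip every file under source_dir (handler plus libraries) in memory."""
    root = Path(source_dir)
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(root.rglob("*")):
            if path.is_file():
                # Store paths relative to the root so the handler sits at the top level.
                archive.write(path, path.relative_to(root).as_posix())
    return buffer.getvalue()


def load_env_file(path) -> dict:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    variables = {}
    for line in Path(path).read_text(encoding="utf-8").splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)  # split once so values may contain "="
        variables[key.strip()] = value.strip()
    return variables
```

With boto3, the resulting bytes could be passed to the Lambda client's `create_function` as `Code={"ZipFile": ...}`, and the parsed keys as `Environment={"Variables": ...}`, which keeps the secrets out of the source code.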

The final, and perhaps most complex, part was automating API Gateway. The script not only creates a new API for each app but also builds out the routes, like /ExpenseApp and /WeatherApp. It connects these routes to their corresponding Lambda functions using the Lambda Proxy integration. And because CORS was such a pain to configure, I built a special function into the script to handle all of those headers and pre-flight OPTIONS requests automatically.
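The sequence of API Gateway calls can be sketched in Python as follows. This is a translation of the idea into boto3-style calls, not the shell script itself, and it assumes a REST API. `wire_route` is a hypothetical helper that expects a boto3 API Gateway client; the stage name `prod` is an assumption, and CORS setup is left out to keep the sketch short.

```python
def lambda_integration_uri(region: str, lambda_arn: str) -> str:
    """URI that API Gateway uses to invoke a Lambda function."""
    return (
        f"arn:aws:apigateway:{region}:lambda:path/2015-03-31/"
        f"functions/{lambda_arn}/invocations"
    )


def invoke_url(api_id: str, region: str, stage: str, path_part: str) -> str:
    """Public endpoint of a deployed route."""
    return f"https://{api_id}.execute-api.{region}.amazonaws.com/{stage}/{path_part}"


def wire_route(apigw_client, api_name, path_part, lambda_arn, region, stage="prod"):
    """Create an API, add one GET route, connect it to Lambda, and deploy."""
    api_id = apigw_client.create_rest_api(name=api_name)["id"]

    # Every new REST API starts with a root resource at "/".
    resources = apigw_client.get_resources(restApiId=api_id)["items"]
    root_id = next(item["id"] for item in resources if item["path"] == "/")

    # Create the child resource, e.g. /ExpenseApp, under the root.
    resource_id = apigw_client.create_resource(
        restApiId=api_id, parentId=root_id, pathPart=path_part
    )["id"]

    apigw_client.put_method(
        restApiId=api_id, resourceId=resource_id,
        httpMethod="GET", authorizationType="NONE",
    )
    # AWS_PROXY is Lambda Proxy integration; Lambda is always invoked via POST.
    apigw_client.put_integration(
        restApiId=api_id, resourceId=resource_id, httpMethod="GET",
        type="AWS_PROXY", integrationHttpMethod="POST",
        uri=lambda_integration_uri(region, lambda_arn),
    )
    apigw_client.create_deployment(restApiId=api_id, stageName=stage)
    return invoke_url(api_id, region, stage, path_part)
```

One step this sketch omits: the Lambda function also needs a resource-based permission that allows API Gateway to invoke it, otherwise the deployed route fails at call time.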

Finally, the script deploys the entire API and gives me the public endpoint for each app, so I can immediately start testing. This entire process, which would have taken me more than 15 minutes of manual clicking and configuring, is now done with a single command. It's a huge win for me and I learned so much when playing with it.

Website Image

Conclusion

This multi-app dashboard project gave me a good learning experience in designing and implementing serverless architectures with AWS. I was able to focus on cloud engineering aspects (like IAM roles, Lambda functions, and API Gateway), while using GitHub Actions to automate deployment processes. This project was a learning exercise and a portfolio piece to showcase my cloud engineering skills. Moving forward, I’m excited to explore Infrastructure-as-Code tools like AWS CDK or Terraform to further streamline the setup and deployment of serverless applications.
