If you utter the words “I call a Lambda function from another Lambda function” you might receive a bunch of raised eyebrows. It’s generally frowned upon for some good reasons, but as with most things, there are nuances to this discussion.

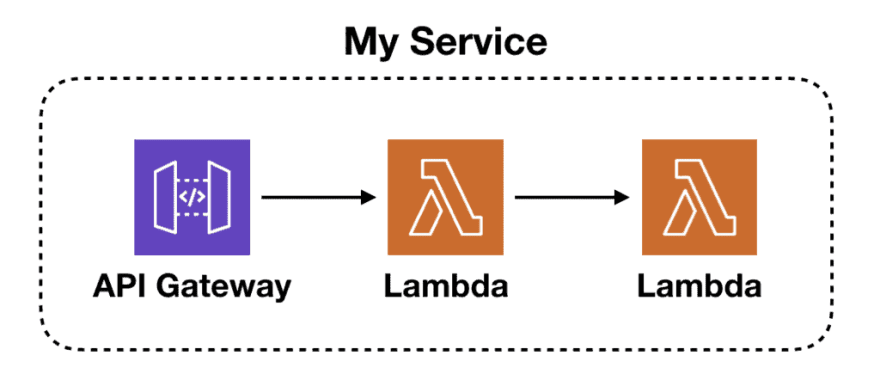

In most cases, a Lambda function is an implementation detail and shouldn’t be exposed as the system’s API. Instead, it should be fronted with something, such as API Gateway for HTTP APIs or an SNS topic for event processing systems. This allows you to make implementation changes later without impacting the external-facing contract of your system.

Maybe you started off with a single Lambda function, but as the system grows you decide to split the logic into multiple functions. Or maybe the throughput of your system has reached such a level that it makes more economical sense to move the code into ECS/EC2 instead. These changes shouldn’t affect how your consumers interact with your system.

Update 16/07/2020

Following some lively debate on Twitter, I sat down with Michael Hart and Jeremy Daly to discuss this topic of Lambda-to-Lambda invocations in more detail. I think it’s worth putting more nuance and context into this “why you shouldn’t” section.

Firstly, my advice here is not hard and fast. There are lots of exceptions and reasons to break from the mould if you understand the tradeoffs you’re making. But the most important thing to consider is the organizational environment you operate in.

Earlier, I said that you shouldn’t call Lambda functions across service boundaries. A more accurate description of my thinking would be “across ownership lines”, that is, calling Lambda functions that are owned by other teams. The two are usually equivalent in the companies where I have worked: the system is broken up into microservices, and teams own one or more microservices.

An important factor to consider is “how expensive is the communication channel between the two teams”. The bigger the company, the higher these costs tend to be. I’ve seen projects delayed for months or even years because of these cross-team dependencies. And the higher these costs are the more you need to put a stable interface between services. Having a more stable interface affords you more flexibility to make changes without breaking your contract with the other teams.

I don’t consider a Lambda function a stable interface because the caller needs to know its name, its region and its AWS account. That stops me from refactoring my functions without forcing the callers to change, which creates a dependency on another team whenever I want to refactor my service.

For example:

- renaming a function

- splitting a fat Lambda (e.g. one that handles all CRUD actions against one entity type) into single-purpose functions

- going multi-region and routing callers to the closest region

- moving the service to another account (e.g. if you’re migrating from a shared AWS account for all teams to a model where you have separate accounts per team)

In many of these cases, if there’s an API Gateway in front of my service, then, by and large, I can refactor my service without impacting my callers. I’m only talking about refactoring here, i.e. changes that don’t affect the contract with my callers.
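To make the coupling concrete, here is a minimal Python sketch. All of the names in it (the function names, the account IDs, the `api.example.com` URL) are hypothetical and only there for illustration. It shows that a direct caller has to reference the function's name, region and account (which together form its ARN), so the refactorings listed above change what the caller must know, whereas a caller behind an HTTP contract only depends on a URL.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class LambdaTarget:
    """Everything a direct caller has to know about the callee."""
    function_name: str
    region: str
    account_id: str

    @property
    def arn(self) -> str:
        # Standard Lambda function ARN layout.
        return (
            f"arn:aws:lambda:{self.region}:{self.account_id}"
            f":function:{self.function_name}"
        )


# Hypothetical values, for illustration only.
before = LambdaTarget("orders-service-get-order", "eu-west-1", "123456789012")

# Renaming the function or moving it to another account changes the
# identifier that every direct caller has to reference.
renamed = replace(before, function_name="orders-get-order-v2")
moved_account = replace(before, account_id="210987654321")

# A caller that goes through an HTTP API only depends on this
# (hypothetical) URL, which can stay the same across those refactorings.
STABLE_ENDPOINT = "https://api.example.com/orders/{order_id}"
```

In this sketch, `renamed.arn` and `moved_account.arn` both differ from `before.arn`, which is exactly the change a direct caller would be forced to pick up.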

If I’m making breaking contract changes then it’s unavoidable that my callers have to make changes too. I avoid these breaking changes like the plague, especially when the cost of cross-team coordination is high.

It’s also worth noting that while API Gateway can be a more stable interface for synchronous calls that cross the ownership line, the same logic can be applied to SNS/SQS/EventBridge for asynchronous calls between Lambda functions.

What if you’re operating in a small team (or on your own) and the cost of coordinating changes across teams is negligible? Then you might not need the flexibility a more stable interface can give you. In which case it’s not a problem to call a Lambda function directly across service boundaries. And what you lose in flexibility you gain in performance and cost efficiency as you cut out a layer from the execution path.

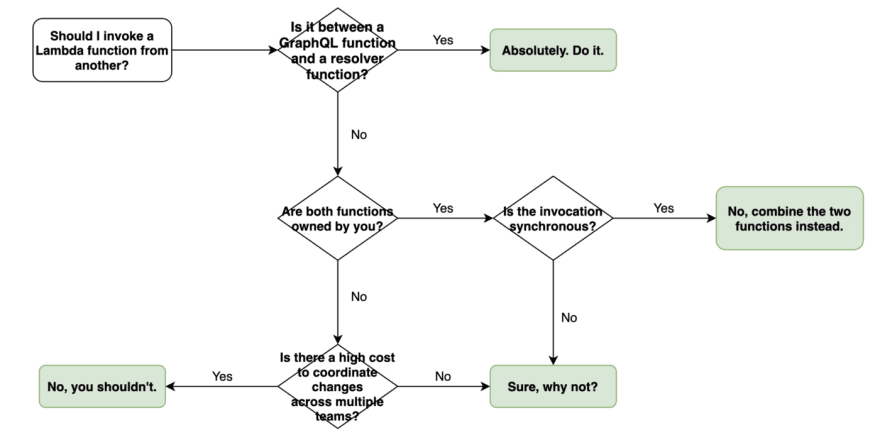

There are also other exceptions to keep in mind. For example, if you are running GraphQL in a Lambda function, then you do need to make synchronous calls to other resolver Lambda functions. Without this, you’d have to put the entire system into a very fat Lambda function! That’d likely cause far more problems for you than those synchronous Lambda calls.

I hope this clarification gives you a better understanding of when my advice above should be applied. Context is important.

p.s. big thanks to Michael Hart and Jeremy Daly for taking the time to discuss in detail with me! The flow chart at the end of the post has also been updated to reflect the extra nuances that have been discussed here.

But what if the caller and callee functions are both inside the same service? In which case, the whole “breaking the abstraction layer” thing is not an issue. Are Lambda-to-Lambda calls OK then?

It depends. There’s still an issue of efficiency to consider.

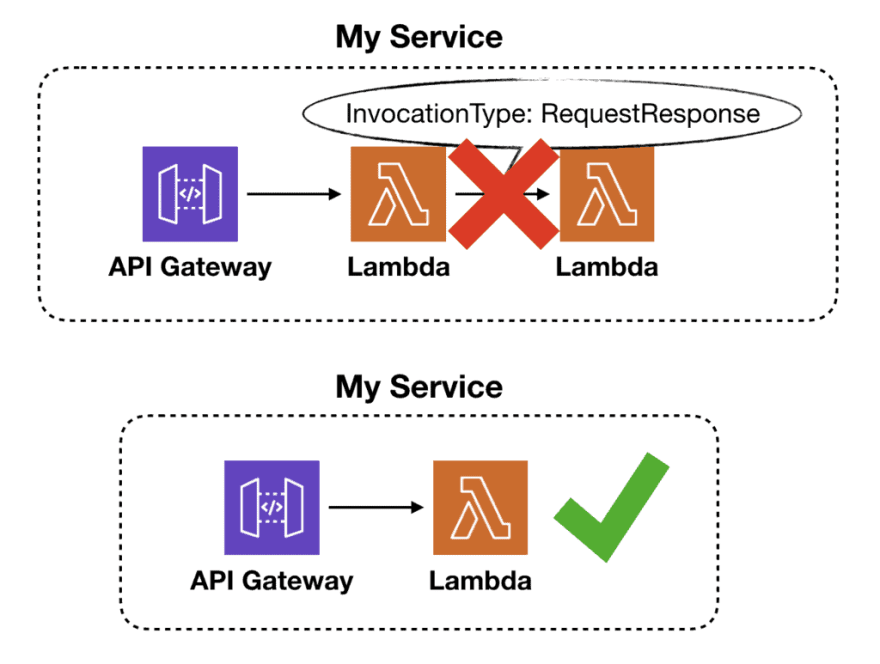

If you’re invoking another Lambda function synchronously (i.e. when InvocationType is RequestResponse) then you’re paying extra in both latency and cost:

- There is latency overhead for calling the 2nd function, especially when a cold start is involved. This impacts the end-to-end latency.

- The 2nd Lambda invocation carries an extra cost for the invocation request.

- Since Lambda durations are billed in 100ms blocks (at the time of writing), you will pay for the rounded-up time of both the caller and callee functions.

- You will pay for the idle wait time while the caller function waits for a response from the callee.

While the extra cost might be negligible in most cases, the extra latency is usually undesirable, especially for user-facing APIs.
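The billing points above can be worked through with a small Python sketch. It assumes the 100ms billing blocks described in this post, ignores network overhead and per-request charges, and uses made-up durations (30ms of work in the caller, 120ms in the callee) purely for illustration.

```python
import math

BILLING_BLOCK_MS = 100  # billing granularity described in this post


def billed_ms(duration_ms: float) -> int:
    """Round a duration up to the next billing block."""
    return math.ceil(duration_ms / BILLING_BLOCK_MS) * BILLING_BLOCK_MS


def sync_call_billed_ms(caller_own_ms: float, callee_ms: float) -> int:
    """Total billed time when the caller waits for the callee.

    The caller is billed for its own work plus the time it sits idle
    waiting, and the callee is billed separately for the same work.
    """
    caller_total_ms = caller_own_ms + callee_ms  # ignores network overhead
    return billed_ms(caller_total_ms) + billed_ms(callee_ms)


def combined_billed_ms(caller_own_ms: float, callee_ms: float) -> int:
    """Total billed time when both pieces of logic run in one function."""
    return billed_ms(caller_own_ms + callee_ms)


# Illustrative durations: 30ms of work in the caller, 120ms in the callee.
split = sync_call_billed_ms(30, 120)    # 200 (caller) + 200 (callee) = 400
combined = combined_billed_ms(30, 120)  # 150 rounded up to 200
```

Under these assumptions the split version is billed for twice as much time as the combined one, on top of the second invocation request.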

If you care about either, then you should combine the two functions into one. You can still achieve separation of concerns and modularity at the code level. You don’t have to split them into multiple Lambda functions.
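As a rough sketch of what that looks like, the Python handler below keeps two concerns in separate, independently testable functions and composes them with plain function calls. The order-processing domain, the function names and the event shape are all hypothetical.

```python
def validate_order(order: dict) -> dict:
    """Logic that might otherwise live in a separate 'validator' Lambda."""
    if not order.get("items"):
        raise ValueError("order must contain at least one item")
    return order


def price_order(order: dict) -> float:
    """Logic that might otherwise live in a separate 'pricing' Lambda."""
    return sum(item["price"] * item["quantity"] for item in order["items"])


def handler(event, context):
    """Single Lambda entry point: plain function calls, no extra invocation."""
    order = validate_order(event)
    return {"total": price_order(order)}
```

In a real project the two helpers would typically sit in their own modules, so the separation of concerns lives in the code layout rather than in the deployment.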

But, if the goal is to offload some work so the calling function can return earlier then I don’t see anything wrong with that. That is, assuming both functions are part of the same service.
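Here is a minimal sketch of that offloading pattern in Python. It assumes the client passed in behaves like boto3's Lambda client (e.g. `boto3.client("lambda")`). The `offload` helper and its parameter names are my own and not part of any library.

```python
import json
from typing import Any


def offload(lambda_client: Any, function_name: str, payload: dict) -> bool:
    """Fire-and-forget invocation of another Lambda function.

    With InvocationType="Event" the invoke call returns once Lambda has
    accepted the event, without waiting for the callee to run.
    """
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="Event",
        Payload=json.dumps(payload).encode("utf-8"),
    )
    # Lambda responds with status 202 when an asynchronous invocation
    # has been accepted.
    return response["StatusCode"] == 202
```

A caller could then do something like `offload(boto3.client("lambda"), "send-welcome-email", {"userId": "123"})` (function name and payload are hypothetical) and return to its own client straight away. Taking the client as a parameter is a design choice that keeps the helper easy to test with a stub.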

So, to answer the question posed by the title of this post: it depends! And to help you decide, here’s a decision tree for when you should consider calling a Lambda function directly from another.

Hi, my name is Yan Cui. I’m an AWS Serverless Hero and the author of Production-Ready Serverless. I specialise in rapidly transitioning teams to serverless and building production-ready services on AWS.


The post Are Lambda-to-Lambda calls really so bad? appeared first on theburningmonk.com.

Oldest comments (6)

Great article! The last approach, returning early and letting another Lambda handle the long-running task, is where the gold can be when it comes to improving the end-user experience.

This is neat. I don't disagree with the decision tree. 😀 A little feedback: Our Lambdas are probably going to get tinier. Possibly simple things like Math libraries may become a set of Lambda functions. A single Lambda can recall a computationally expensive function result from memory. The exact pain point - cost of the time rolled up - is nicking away at the cost advantage of one lambda invoking another lambda. Think about something extremely large like rehosting Unity engine on Lambda.

I once helped a client that was in a bit of a pickle. They outsourced work and got a horror story of Lambdas calling Lambdas back (I think a term needs to be coined for it: Lambda nest?).

The developer(s) could not get a Lambda inside the VPC to speak to RDS and to the public internet. So the developer decided to write all the database queries inside X amount of Lambdas, basically a single query per Lambda. Then the API GW Lambdas not in the VPC would call these "database Lambdas" directly using the SDK.

So I think it needs a sticker. Danger Zone: do not attempt Lambda-to-Lambda calls unless you absolutely know what you are doing.

It also relates to how small/big a Lambda function should be.

Maybe developers are confusing the idea of a "Lambda function" with their programming language's function statement. It's not the same. I think Lambda should be seen closer to a containerized app than to a tiny programming function.

100% what happened in this scenario.

Helpful summary in the decision tree! I agree mostly with your vision. In the decision process, I would also take into account Performance efficiency and Cost optimization:

For workloads that are highly sensitive to latency, adding an API Gateway or Load Balancer in front of every Lambda call might be detrimental. Especially if those Lambdas execute in under 100 or 200 ms, the relative impact of API latency may be too high.

An internal API adds a fixed cost to every Lambda invocation. We need to consider how much value that workload delivers to the organization. Especially if it's running several million times, the internal API might not make sense financially.