This post was originally written on my personal website, where the charts and plots are interactive and the plotting code is shown. If you miss that here, check out the original.

Short recap: Part 1

In part 1 of this series we looked at the first part of this project. This included:

- The data we are working with and what it looks like.

- The amount of listening done per year and per month.

- The amount of listening done per hour of day, also throughout the years.

- The amount of genres we have per song/artist.

We will continue from where we left off, diving deeper into the genres.

We'll load up the original JSON from Spotify, as well as the genres we created in part 1. We then combine them into comb, the combined dataframe. In genres.csv, we again see the 21 genre columns (numbered 0 to 20) for each song. The genres are collected from the artist, since songs themselves are not labeled with a genre. For more details, please have a look at part 1.

| | ts | ms_played | conn_country | master_metadata_track_name | master_metadata_album_artist_name | master_metadata_album_album_name | reason_start | reason_end | shuffle | skipped | ... | city | region | episode_name | episode_show_name | date | year | month | day | dow | hour |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 2013-10-09 20:24:30+00:00 | 15010 | NL | Wild for the Night (feat. Skrillex & Birdy Nam... | A$AP Rocky | LONG.LIVE.A$AP (Deluxe Version) | unknown | click-row | False | False | ... | NaN | NaN | NaN | NaN | 2013-10-09 | 2013 | 10 | 9 | 2 | 20 |

Genres retrieved from Spotify and the combined dataframe. We rename the genres columns from just a number 0-20 to 'genre_x' with x between 0 and 20, so they're easier to recognize.

comb consists of df + genres_df, with the genre columns at the end.

# genres retrieved through Spotify API
genres_df = pd.read_csv('genres.csv', low_memory=False)
genres_df = genres_df.rename(columns={str(x): f'genre_{x}' for x in range(21)})
comb = pd.concat([df, genres_df], axis=1)
comb.head(2)

| | ts | ms_played | conn_country | master_metadata_track_name | master_metadata_album_artist_name | master_metadata_album_album_name | reason_start | reason_end | shuffle | skipped | ... | genre_11 | genre_12 | genre_13 | genre_14 | genre_15 | genre_16 | genre_17 | genre_18 | genre_19 | genre_20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 2013-10-09 20:24:30+00:00 | 15010 | NL | Wild for the Night (feat. Skrillex & Birdy Nam... | A$AP Rocky | LONG.LIVE.A$AP (Deluxe Version) | unknown | click-row | False | False | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| 1 | 2013-10-09 20:19:20+00:00 | 68139 | NL | Buzzin' | OVERWERK | The Nthº | unknown | click-row | False | False | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

Top genres

In part 1, we have seen how many genres each song has and how their numbers are distributed. The next question then, naturally, is: What genres are they? So let's see!

For the following analyses, remember that if I play 10 songs by Kanye, Kanye's genres will be present 10 times.

To analyze the genres, I first create a dataframe that contains all of the genres and their counts. This will be handy in the near future.

top_genres = (
    genres_df.apply(pd.Series.value_counts)  # counts per genre, per genre column
    .sum(axis=1)                             # add the counts of all genre columns
    .sort_values(ascending=False)
    .reset_index()
    .rename(columns={'index': 'genre', 0: 'count'})
)
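As a cross-check, the same kind of count can be obtained by stacking all genre columns into one long Series and counting once. This is only a minimal sketch on a made-up three-row frame; `toy_genres` and its values are assumptions for illustration, not my real data.

```python
import pandas as pd

# Hypothetical miniature version of genres_df: one row per play,
# one column per genre slot, missing where an artist has fewer genres.
toy_genres = pd.DataFrame({
    'genre_0': ['hip hop', 'edm', 'hip hop'],
    'genre_1': ['rap', 'electro house', 'pop rap'],
    'genre_2': ['pop rap', None, None],
})

# stack() turns the wide frame into one long Series of genres;
# value_counts() then counts every genre once and ignores missing values.
toy_top_genres = (
    toy_genres.stack()
    .value_counts()
    .rename_axis('genre')
    .reset_index(name='count')
)
print(toy_top_genres)
```

Both routes should give the same totals. The stacked version just avoids building the intermediate table with one column of counts per genre slot.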

Then we can plot. Let's start with the total listens per genre.

No big surprises here. My main music tastes are hip hop and electronic music, mainly techno and drum and bass. However, for the latter two I mainly use YouTube, which hosts sets that Spotify does not have. So my Spotify is dominated by hip hop and its related genres, like rap and pop rap (whatever that is? Drake maybe?). I expect many hip hop songs are also tagged as pop, which would explain the high pop presence, even though I'm normally not much of a pop fan. Let's dive a bit deeper into this!

Top genres per song

As a next step, let's verify which genres coincide with which other genres. This will test our hypothesis that pop is used as a tag for hip hop, but will also in general provide us with a better feeling of what genres are related to which other genres.

For this, we loop over the rows and, for each genre present, put a 1 in that genre's column. We fill the empty cells with 0 and cast to np.int8, which uses 8 bits per value instead of the 64 bits of pandas' default int64, and thus saves some memory. Since we only want to represent a binary state (present or not present), we could also use a boolean. However, since we're doing arithmetic with it later, int8 will do. We only do this for the top 20 genres, which results in a dataframe with a column for each of them.

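# Assumption: top_genres_20 (not defined above) holds the 20 most played genres.
top_genres_20 = set(top_genres['genre'].head(20))

rows = []
for i, row in comb.loc[:, [f'genre_{x}' for x in range(21)]].iterrows():
    # Mark every top 20 genre that occurs in this row with a 1.
    new_row = {}
    for value in row.values:
        if value in top_genres_20:
            new_row[value] = 1
    rows.append(new_row)

# Genres that did not occur in a row are NaN; fill those with 0 and cast to int8.
genre_presence = pd.DataFrame(rows)
genre_presence = genre_presence.fillna(0).astype(np.int8)
genre_presence.head(2)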

| | hip hop | pop | pop rap | rap | edm | electro house | dance pop | tropical house | big room | brostep | bass trap | electronic trap | house | progressive electro house | progressive house | detroit hip hop | g funk | west coast rap | conscious hip hop | tech house |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 1 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
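For comparison, here is a sketch of how the same presence table could be built without looping over rows, and what int8 buys us in memory. The frame `toy` and the list `toy_top` are made-up stand-ins for the genre columns of comb and for the top 20 list.

```python
import numpy as np
import pandas as pd

# Hypothetical miniature of the genre columns in comb.
toy = pd.DataFrame({
    'genre_0': ['hip hop', 'edm', 'hip hop'],
    'genre_1': ['rap', 'electro house', None],
})
toy_top = ['hip hop', 'rap', 'edm']  # stand-in for the top 20 genres

# For each genre: does any genre slot in the row equal it? Stored as int8.
toy_presence = pd.DataFrame(
    {genre: toy.eq(genre).any(axis=1).astype(np.int8) for genre in toy_top}
)

# The same values stored as int64 take eight times the space.
bytes_int8 = toy_presence.memory_usage(index=False).sum()
bytes_int64 = toy_presence.astype(np.int64).memory_usage(index=False).sum()
print(toy_presence)
print(bytes_int8, bytes_int64)
```

On a table this small the difference is irrelevant, but it scales linearly with the number of plays and genres.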

Now that we have this data, we can do a correlation analysis of how often each genre coincides with each other genre. Because genre is a nominal data type, the standard Pearson correlation coefficient is not the obvious choice, so I wanted a metric that is meant for non-numeric values. I chose Kendall's tau for this, due to its simplicity. Normally, Kendall's tau is meant for ordinal values (variables that have an ordering). However, because we are working with a binary situation (a genre is either present or not) represented by 0 and 1, I think this should still work. One other thing to note is that Kendall's tau is symmetric: tau(a, b) is the same as tau(b, a).

Let's loop over all the combinations of the top 20 genres and compute their tau coefficient.

from scipy.stats import kendalltau
from itertools import product

rows = []
for genre_a, genre_b in product(genre_presence.columns.values, repeat=2):
    tau, p = kendalltau(genre_presence[genre_a].values, genre_presence[genre_b].values)
    rows.append({'genre_a': genre_a, 'genre_b': genre_b, 'tau': tau})

tau_values = pd.DataFrame(rows)
tau_values[:2]

| | genre_a | genre_b | tau |
|---|---|---|---|
| 0 | hip hop | hip hop | 1.000000 |
| 1 | hip hop | pop | -0.040954 |
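A quick sanity check of the symmetry claim, on two made-up 0/1 columns. It also shows a property worth knowing: for two binary columns, the tau-b variant that scipy computes by default works out to the same number as Pearson's r on the 0/1 coding (the phi coefficient). The arrays `a` and `b` are invented for this sketch.

```python
import numpy as np
from scipy.stats import kendalltau

# Two made-up presence columns (1 = genre present for that play).
a = np.array([1, 1, 1, 0, 0, 0, 1, 0])
b = np.array([1, 1, 0, 0, 0, 1, 1, 0])

tau_ab, _ = kendalltau(a, b)       # default variant is tau-b
tau_ba, _ = kendalltau(b, a)       # swapped arguments
pearson = np.corrcoef(a, b)[0, 1]  # Pearson's r on the same 0/1 data

print(tau_ab, tau_ba, pearson)
```

So for this particular binary setup the choice between the two coefficients should not change the picture.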

If we plot this nicely, we get the following overview of "correlations".

We can immediately see some interesting clusters. There is a strong tau between most of the electronic music genres, like edm, electro house, bass trap, big room, brostep and electronic trap. Then, looking at hip hop, we can see very strong coefficients with rap and pop rap, neither of which is a big surprise. My initial hypothesis that pop would be correlated with hip hop has been debunked, though. Pop seems to be more strongly related to edm and some other electronic genres, and has a negative tau with hip hop related genres, like hip hop (-0.29), pop rap (-0.28) and rap (-0.32).

In this overview, I think there are two interesting insights still:

- A strong coefficient between conscious hip hop and west coast rap. I did not really expect this, but it can likely be attributed to artists like Kendrick Lamar, who deal with social and political issues in their lyrics. Additionally, cities like Compton played a big role in west coast hip hop, and the music was often strongly tied to their social and economic situation (also for Kendrick Lamar).

- A strong coefficient between G-funk and Detroit hip hop. G-funk is a subgenre of hip hop that originated on the West Coast, while Detroit hip hop, as the name says, comes from Detroit. A strong coefficient between G-funk and west coast rap would have been less surprising. Interesting to see, but I won't dive deeper into these findings for now.

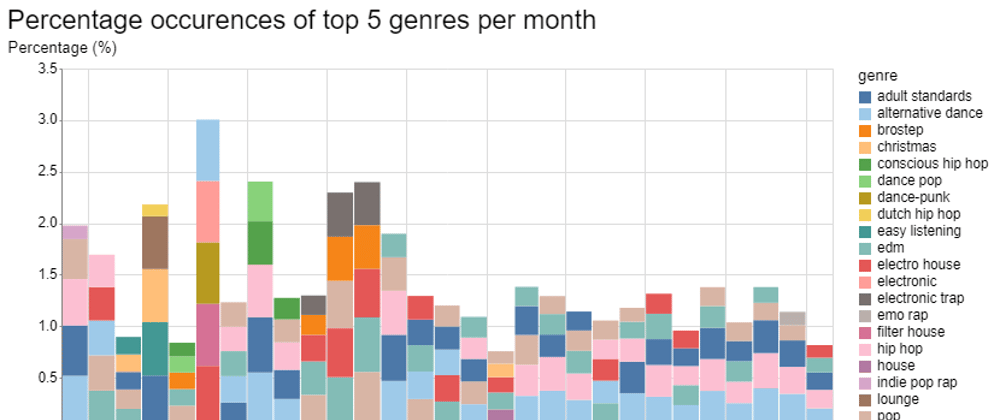

Monthly change in genres 📅

This is a very interesting analysis in my opinion, but also one of the more challenging ones. Given the data I had, I approached the problem in the following way.

- Count the frequency of each genre on a certain interval, monthly in this case.

- Divide these numbers by the total plays for those intervals, so we get a percentage of total plays of that month. This number tells us what share of the songs played that month had that genre. Because a song can have several genres, these percentages do not have to sum to one (they can, but they don't have to).

- Sort given these percentages and extract the monthly top 5.
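The three steps can be sketched end to end on a toy dataset. This is a compact variant using melt and groupby, not the loop-based code I actually used; the frame `plays` and its values are made up, and I take the top 2 instead of the top 5 to keep it small.

```python
import pandas as pd

# Hypothetical plays: one row per play, up to two genre slots.
plays = pd.DataFrame({
    'year':    [2016, 2016, 2016, 2016],
    'month':   [9, 9, 9, 10],
    'genre_0': ['rap', 'rap', 'edm', 'edm'],
    'genre_1': ['hip hop', None, None, 'pop'],
})

# Step 1: count how often each genre occurs per month.
long_df = plays.melt(id_vars=['year', 'month'], value_name='genre').dropna(subset=['genre'])
counts = long_df.groupby(['year', 'month', 'genre']).size().reset_index(name='count')

# Step 2: divide by the number of plays in that month.
plays_per_month = plays.groupby(['year', 'month']).size().reset_index(name='plays')
counts = counts.merge(plays_per_month, on=['year', 'month'])
counts['percentage'] = counts['count'] / counts['plays']

# Step 3: sort by percentage within each month and keep the top n (2 here).
top = (
    counts.sort_values(['year', 'month', 'percentage'], ascending=[True, True, False])
    .groupby(['year', 'month'])
    .head(2)
)
print(top[['year', 'month', 'genre', 'percentage']])
```

In the toy October there is a single play with two genres, so both genres get a percentage of 1.0. That is exactly why the percentages of a month do not have to sum to one.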

Step 1: count the frequency per interval. We don't do this just for the top n genres, but for all genres. This, naturally, results in a lot of columns and a very wide dataframe.

# Step 1. Count all genre occurrences per month.
from tqdm import tqdm

genre_columns = [f'genre_{x}' for x in range(21)]  # the genre columns are named 'genre_0' to 'genre_20'
counters_per_month = []
unique_years = comb.year.sort_values().unique()
unique_months = comb.month.sort_values().unique()

for year, month in tqdm(product(unique_years, unique_months), total=len(unique_years)*len(unique_months)):
    plays_in_month = comb.loc[(comb.year == year) & (comb.month == month)]
    if len(plays_in_month) > 0:
        counter = {'year': year, 'month': month}
        for i, row in plays_in_month.iterrows():
            # Empty genre slots (NaN) are counted as well and end up in a NaN column.
            for genre in row[genre_columns]:
                counter[genre] = counter.get(genre, 0) + 1
        counters_per_month.append(counter)

Put counters_per_month in a dataframe and calculate the total songs played per month.

counts_per_genre_per_month = pd.DataFrame(counters_per_month)

monthly_sum = df.groupby(['year', 'month']).size().reset_index().rename(columns={0: 'count'})

Step 2: We then normalize all genre counts by the number of songs played in that time period.

# 2. Normalize all genre counts by the number of songs played in that time period.
# Select all columns except the time columns
columns = counts_per_genre_per_month.columns.tolist()
columns.remove('year')
columns.remove('month')

for i, row in monthly_sum.iterrows():
    # Rows of counts_per_genre_per_month that belong to this year and month.
    in_month = (counts_per_genre_per_month.year == row.year) & (counts_per_genre_per_month.month == row.month)
    counts_per_genre_per_month.loc[in_month, columns] = counts_per_genre_per_month.loc[in_month, columns] / row['count']
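The row-by-row loop works, but the same normalization can also be written as a single aligned division. A sketch on toy numbers, assuming both frames share the (year, month) key; `toy_counts` and `toy_monthly_sum` are made up for illustration.

```python
import pandas as pd

# Hypothetical genre counts per month (wide) and total plays per month.
toy_counts = pd.DataFrame({
    'year': [2016, 2016], 'month': [9, 10],
    'rap': [50, 60], 'edm': [10, 100],
})
toy_monthly_sum = pd.DataFrame({
    'year': [2016, 2016], 'month': [9, 10], 'count': [100, 200],
})

# Index both by (year, month) so pandas aligns the rows on that key,
# then divide every genre column by the month's total in one go.
wide = toy_counts.set_index(['year', 'month'])
totals = toy_monthly_sum.set_index(['year', 'month'])['count']
toy_fractions = wide.div(totals, axis=0).reset_index()
print(toy_fractions)
```

Besides being shorter, this avoids repeating the boolean mask for every month.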

To get a cleaner visual, we remove all data from before September 2016.

counts_per_genre_per_month_filtered = counts_per_genre_per_month.loc[
    (counts_per_genre_per_month.year > 2016)
    | (
        (counts_per_genre_per_month.year == 2016)
        & (counts_per_genre_per_month.month > 8)
    )
]

We now have a dataframe with 863 columns, which corresponds to 861 different genres plus the year and month columns. For every genre, this dataframe holds the fraction of that month's plays in which it was present. Keep in mind that an artist/song generally has more than one genre, so the sum of these fractions is not 1. Our data now looks as follows:

| | year | month | east coast hip hop | hip hop | pop | pop rap | rap | trap music | NaN | catstep | ... | classical soprano | spanish hip hop | trap espanol | pop reggaeton | chinese hip hop | corrido | regional mexican pop | australian indigenous | witch house | ghettotech |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 16 | 2016 | 9 | 0.038760 | 0.449612 | 0.387597 | 0.488372 | 0.519380 | 0.069767 | 16.689922 | 0.007752 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| 17 | 2016 | 10 | 0.055409 | 0.313984 | 0.343008 | 0.279683 | 0.337731 | 0.036939 | 16.469657 | 0.026385 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

Step 3: Sort given these values and extract the top 5. Unfortunately, the data is not in a shape that we can do that (to my knowledge at least), so we need to transform it a bit further by moving from a wide to a long data format and filtering out some values.

Melting the dataframe results in a single row per percentage per genre per time unit. This makes it easier to plot with Altair. Furthermore, we create a datetime column from our year + month columns, which is also easier for Altair to work with.

counts_per_genre_per_month_melted = pd.melt(
    counts_per_genre_per_month_filtered,
    id_vars=['year', 'month'],
    value_vars=columns,
    var_name='genre',
    value_name='percentage'
)

counts_per_genre_per_month_melted['datetime'] = pd.to_datetime(
    counts_per_genre_per_month_melted.month.astype(str)
    + '-' + counts_per_genre_per_month_melted.year.astype(str),
    format='%m-%Y')

Drop rows where either the genre or percentage is NaN. This reduces the number of rows even more, so that taking the n largest later will be faster.

counts_per_genre_per_month_melted = counts_per_genre_per_month_melted.dropna(
    subset=['percentage', 'genre']
)

Which gives us:

| | year | month | genre | percentage | datetime |
|---|---|---|---|---|---|
| 0 | 2016 | 9 | east coast hip hop | 0.038760 | 2016-09-01 |
| 1 | 2016 | 10 | east coast hip hop | 0.055409 | 2016-10-01 |

This looks great! But there is one problem: we likely have way too many rows for Altair. We have almost 7k rows, while Altair's default maximum is 5k. Not too bad, but we still need to remove a bunch of rows. That is fine, since we're only interested in the top 5 of each month anyway. Using pandas' .groupby and .nlargest, we can extract this fairly easily. We extract the indices of the remaining rows and index into the melted dataframe, so that only the rows in the top 5 for each month are left.

top_genres_per_month_with_perc = counts_per_genre_per_month_melted.loc[
    (counts_per_genre_per_month_melted.groupby(['year', 'month'])
     .percentage.nlargest(5)
     .reset_index().level_2.values), :]

top_genres_per_month_with_perc.set_index(
    ['year', 'month']
).head(5)

| year | month | genre | percentage | datetime |
|---|---|---|---|---|
| 2016 | 9 | rap | 0.519380 | 2016-09-01 |
| 2016 | 9 | pop rap | 0.488372 | 2016-09-01 |
| 2016 | 9 | hip hop | 0.449612 | 2016-09-01 |
| 2016 | 9 | pop | 0.387597 | 2016-09-01 |
| 2016 | 9 | indie pop rap | 0.131783 | 2016-09-01 |

And we only have 145 rows left, so we can use it with Altair 😎.
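In case the level_2 in the snippet above looks like magic: nlargest on a grouped Series returns a Series whose index has the group keys plus the original row label as a third, unnamed level, which reset_index() turns into a column called level_2. A small sketch on made-up data (top 2 instead of top 5):

```python
import pandas as pd

# Hypothetical melted frame with one row per genre per month.
toy_melted = pd.DataFrame({
    'year': [2016] * 4,
    'month': [9, 9, 9, 10],
    'genre': ['rap', 'pop', 'edm', 'edm'],
    'percentage': [0.5, 0.4, 0.1, 0.3],
})

# Index of `largest` is (year, month, original row label).
largest = toy_melted.groupby(['year', 'month'])['percentage'].nlargest(2)

# The unnamed third level becomes the column 'level_2'.
row_labels = largest.reset_index()['level_2'].values

# Use the original row labels to pick the full rows from the melted frame.
toy_top5 = toy_melted.loc[row_labels]
print(toy_top5)
```

This only works as long as the melted dataframe still has its original, unnamed index, which is the case here.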

In the chart below, there is a lot going on. On the x-axis we have time, while on the y-axis we have the normalized percentages of the top 5 genres. This means that for each month, the percentages of the top 5 genres are rescaled so that they sum to 1. This might be hard to grasp, so I've put the non-normalized one next to this plot to make the difference clear. Some colors are used twice, but there is no color scheme available in Altair that supports more than 20 colors, so this will have to do for now 😉. You can hover over the bars to get details of those bars and click on legend items to highlight a genre.
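A minimal sketch of that normalization, on made-up numbers rather than the real chart data (and with a top 2 instead of a top 5): each percentage is divided by the sum of the percentages in its month.

```python
import pandas as pd

# Hypothetical top genres for two months.
toy_top = pd.DataFrame({
    'year': [2016, 2016, 2016, 2016],
    'month': [9, 9, 10, 10],
    'genre': ['rap', 'pop rap', 'edm', 'pop'],
    'percentage': [0.6, 0.2, 0.3, 0.3],
})

# transform('sum') repeats each month's total on every row of that month,
# so the division rescales the bars of a month to stack up to 1.
month_total = toy_top.groupby(['year', 'month'])['percentage'].transform('sum')
toy_top['normalized'] = toy_top['percentage'] / month_total
print(toy_top)
```

The normalized view shows the mix within the top genres, while the raw percentages show how dominant those genres were among all plays of that month.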

Top genres with percentages 📊

The following plot is very nice when it's interactive, so please check that one out on my own website.

There are definitely some interesting things in these plots. We can see some regulars that we also saw among the most listened genres in general, such as rap, edm and hip hop, so that's not a big surprise.

- Seasonal effects: What is quite interesting is to see when the very common genres are not dominating the chart, like in December of 2016. Both in November and December of 2016 we see I was in a very strong Christmas mood, with christmas covering 16% of songs in November and 51%(!) in December. The top genres in December are adult standards (whatever that may be), easy listening, christmas and lounge. Those definitely are in the same segment, so it's not surprising that those other genres appear alongside christmas in a heavy Christmas month. We do not see this seasonal effect in 2017 and 2018, but in those years my urge for Christmas music was just smaller, so this drop is explainable. Instead of christmas, in December of 2018 emo rap is in my top 5 genres 🤔. That might be interesting to look at in another blog post.

- Electronic periods: Something else that stands out is that there are electronic music periods, like June, July and August of 2017 and January of 2018. However, both edm and electro house are present in essentially each month as high scorers, so I'm definitely a fan in general. But these peak months still stand out.

- Rise of Rap: The last thing that is interesting is probably the fact that rap and hip hop have almost exclusively been the top 2 from February 2018 to January 2019. This indicates a move away from the more electronic genres and towards hip hop. A possible reason for this might be the move towards more set-based plays for electronic music, which are generally not on Spotify, but on platforms like YouTube. Otherwise, it might just be an actual preference shift. However, I do still listen to a lot of these types of music, so I suspect the former. Looking at data from 2019 and 2020 might give some insight into this.

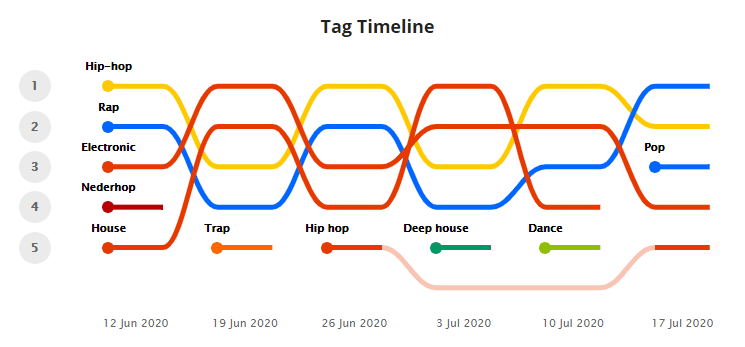

Top genres without percentages 🏆

So we've seen how the genres relate to each other in terms of percentages per month. We can also see what the top genres are per month, but it can definitely still be improved. I really just want a list with the top 5 genres per month, ideally easily readable and pretty close to the example we had from Last.fm.

As a reminder, that looked like this:

We can get a list of the top genres per month by grouping and then applying list on the Series.

top_genres_per_month = (top_genres_per_month_with_perc
    .groupby(['year', 'month'])
    .genre.apply(list)
    .reset_index()
)

top_genres_per_month[:2]

| | year | month | genre |
|---|---|---|---|
| 0 | 2016 | 9 | [rap, pop rap, hip hop, pop, indie pop rap] |
| 1 | 2016 | 10 | [edm, pop, rap, electro house, hip hop] |

We then create a numpy array from these values and apply them column by column to new dataframe columns.
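Roughly, that step could look like the sketch below. The frame `toy_lists` is made up (three genres per month instead of five), and the approach assumes every month has the same number of genres in its list, otherwise the array would not be rectangular.

```python
import numpy as np
import pandas as pd

# Hypothetical top lists for two months.
toy_lists = pd.DataFrame({
    'year': [2016, 2016],
    'month': [9, 10],
    'genre': [['rap', 'pop rap', 'hip hop'], ['edm', 'pop', 'rap']],
})

# Turn the column of equally long lists into a 2-D array:
# one row per month, one column per rank.
ranks = np.array(toy_lists['genre'].tolist())

# Assign each rank column to its own dataframe column (genre_1, genre_2, ...).
for i in range(ranks.shape[1]):
    toy_lists[f'genre_{i + 1}'] = ranks[:, i]

# Put the months in the columns and the ranks in the rows.
toy_table = (
    toy_lists.drop(columns='genre')
    .set_index(['year', 'month'])
    .T
)
print(toy_table)
```

Transposing at the end gives the layout with time running from left to right and the ranks from top to bottom.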

We finally arrive at the following dataframe. This is pretty much what I wanted, so I'm happy with the result.

| year | 2016 | 2016 | 2016 | 2016 | 2017 | 2017 | 2017 | 2017 | 2017 | 2017 | ... | 2018 | 2018 | 2018 | 2018 | 2018 | 2018 | 2018 | 2018 | 2018 | 2019 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| month | 9 | 10 | 11 | 12 | 1 | 2 | 3 | 4 | 5 | 6 | ... | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 1 |
| genre_1 | rap | edm | edm | adult standards | pop | electro house | pop rap | rap | rap | pop | ... | rap | edm | rap | rap | rap | rap | rap | rap | rap | rap |
| genre_2 | pop rap | pop | pop | easy listening | edm | filter house | rap | pop rap | pop rap | edm | ... | hip hop | rap | pop rap | hip hop | edm | hip hop | hip hop | hip hop | hip hop | hip hop |
| genre_3 | hip hop | rap | adult standards | christmas | rock | dance-punk | edm | hip hop | hip hop | electro house | ... | edm | electro house | hip hop | pop rap | hip hop | pop rap | edm | pop rap | pop rap | pop rap |
| genre_4 | pop | electro house | christmas | lounge | dance pop | electronic | hip hop | conscious hip hop | pop | brostep | ... | pop | hip hop | edm | edm | pop rap | edm | pop rap | pop | pop | edm |
| genre_5 | indie pop rap | hip hop | easy listening | dutch hip hop | tropical house | alternative dance | pop | west coast rap | conscious hip hop | electronic trap | ... | pop rap | pop | pop | electro house | electro house | pop | pop | edm | emo rap | electro house |

Now, in my original post I colored this table to make it easier to interpret, but Dev.to does not allow that :(. So please see the original post for the full version.

But, it looked like this!

Better get the 🚒 cause this table is 🔥.

This is really close to the Last.fm plot, apart from the lines between points, which require 10 years of D3.js experience. We see some patterns similar to those in the earlier plot, but we can also get some new insights. Here, we can focus some more on the anomalies that are present, like indie pop rap, dutch hip hop, filter house and conscious hip hop. These stand out more in this representation than in the previous one, which focused more on trends.

Insights

- More electronic peaks: We can see that February 2017 was actually also a peak in electronic music, but due to similar colors in the previous plot this was a bit hidden.

- Pure hip hop periods: Furthermore, we can also see there are some pure hip hop periods, like April and May of 2017, where EDM and electro house are not present at all, and we see more specific hip hop genres appear, like west coast rap and conscious hip hop.

In conclusion

In part 2 we took a closer look at what genres I listen to and how that has developed over time. There were some very interesting insights, like the effects of holidays, and the change of music preference towards rap. We also recreated the plot from Last.fm, as closely as possible at least. I'm quite happy with the outcome, but definitely have some newfound respect for Spotify analysts who have to do this for way more people, although generalization also brings some advantages of course. Doing these analyses is also improving my skills with Pandas: I had not previously worked that much with time data, so this is a great exercise. Having to look into the details of .groupby, how it operates on time series aggregates and which operations are possible, was great too. For instance, I learned you can do a groupby on a datetime index or column like so:

df.groupby(df['datetime-column'].dt.year)

and even multi-index this for month/year using:

df.groupby([
    df['datetime-column'].dt.year,
    df['datetime-column'].dt.month
])

Which is very cool and way cleaner than what I used! But I'm getting sidetracked.
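For completeness, a tiny runnable version of that idea on made-up timestamps. The frame `toy` and its column names are invented for this sketch; the rename calls only give the two index levels readable names.

```python
import pandas as pd

# Hypothetical plays with a datetime column.
toy = pd.DataFrame({
    'ts': pd.to_datetime(['2016-09-01', '2016-09-15', '2016-10-02', '2017-01-05']),
    'ms_played': [1000, 2000, 3000, 4000],
})

# Group directly on the year and month derived from the datetime column,
# without first creating separate 'year' and 'month' columns.
plays_per_month = (
    toy.groupby([toy['ts'].dt.year.rename('year'), toy['ts'].dt.month.rename('month')])
    .size()
)
print(plays_per_month)
```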

Rounding off; thank you for reading and sticking with me! I'm very curious what results Part 3 will bring.

Topics for part 3:

- An analysis of musical features, like energy, danceability and acousticness. Those are numeric values and thus allow for some different visualizations than all of the discrete values of this blog post.

- Which songs do I listen to that are emo rap. This is probably quite a small point of research, but still I'm quite curious.

If you liked this blog post, don't hesitate to reach out to me on LinkedIn or Twitter. 😊
