Organizations in the industrial space face similar data challenges, and those challenges grow the longer they go unaddressed. Industry 4.0 is moving forward at full speed: traditional manufacturing and industrial processes are increasingly automated with modern technology, which is super exciting! This happens through the Internet of Things (IoT), smart machines, and advanced monitoring and analysis techniques. Now that we have all these mechanisms in place to capture data, new questions arise. What should we do with that data? Where should we put it? What can we learn from it? And are we even capturing it as efficiently as possible in the first place? So much data is generated every second, from countless sources around the globe, that it’s hard to wrap your head around!

Too Much Data, Too Little Time

The problem is that many industrial organizations still rely on legacy systems to capture, process, and analyze that data, and they end up throwing more and more money at the problem without gaining real value or insight. When collected and used correctly, this data can provide unparalleled insights that help predict maintenance needs and prevent downtime, lost production, and system failures. In certain industries, the difference between near real-time and true real-time insights can be worth hundreds of millions of dollars. Many technologies, both legacy and modern, are so overloaded by the sheer speed and volume of incoming data that they cannot process it fast enough to deliver true real-time insights. It’s also important to separate the noise from the anomalies in these massive data volumes, so that teams on the shop floor and at the central office can focus on what really matters to the organization instead of wasting time on data that shows nothing of value.

The Solution: High Speed Data Monitoring

Two organizations that have seen this problem with industrial clients time and time again recently joined forces to tackle it with a highly effective solution. HarperDB and Casne Engineering have partnered to develop high-performance data acquisition and in-line analytics capabilities that let organizations capture sub-second data streams from industrial control systems such as PLCs and SCADA systems. The solution then filters out the large volume of irrelevant data at the edge and forwards only the pertinent or anomalous data to OT historians and machine learning applications. Filtering out the noise at the edge, on or next to the machinery itself, saves the organization significant time and money.

Traditional systems typically fall into one of two scenarios: send all of the data for later analysis, or capture only a fraction of it at low sampling rates and hope to catch anything important. In the first case, all of the data is forwarded to on-premises or cloud systems where data scientists decide what is important for analytics, an expensive approach that quickly overwhelms the underlying servers with noise. In the second case, low-frequency datasets can miss short-lived threshold excursions that only show up at high sampling rates, and those overlooked signals could be exactly the ones that predict failures and the downtime that follows.
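To make the low-frequency trade-off concrete, here is a minimal Python sketch. It is not HarperDB’s or Casne’s implementation; the simulated signal, the 100 Hz rate, the 80.0 limit, and the helper names (`simulate_signal`, `downsample`, `edge_filter`) are all hypothetical. It simulates one sensor tag with a 30 ms excursion and compares 1 Hz sampling against a simple limit filter applied to every reading at the edge.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    t_ms: int     # timestamp in milliseconds
    value: float  # sensor value (hypothetical units)


def simulate_signal(n=1000, period_ms=10, baseline=50.0,
                    spike_start=505, spike_len=3, spike_value=90.0):
    """Hypothetical tag sampled every 10 ms (100 Hz) with one 30 ms excursion."""
    return [
        Reading(t_ms=i * period_ms,
                value=spike_value if spike_start <= i < spike_start + spike_len else baseline)
        for i in range(n)
    ]


def downsample(readings, every):
    """Low-frequency collection: keep only every Nth reading."""
    return readings[::every]


def edge_filter(readings, high_limit):
    """Edge filtering: evaluate every reading, forward only those above the limit."""
    return [r for r in readings if r.value > high_limit]


signal = simulate_signal()

# 1 Hz collection: one reading out of every 100.
low_freq = downsample(signal, every=100)
print(len(low_freq), any(r.value > 80.0 for r in low_freq))  # 10 False

# Edge filter at full rate: forward only readings above the limit.
forwarded = edge_filter(signal, high_limit=80.0)
print(len(forwarded), [r.t_ms for r in forwarded])  # 3 [5050, 5060, 5070]
```

Sampling once per second keeps 10 readings and none of them catches the excursion, while the edge filter forwards just 3 of the 1,000 readings, all from the spike. A real deployment would likely use richer rules than a fixed limit, but the shape is the same: evaluate every sample locally and send only what matters.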

This high-frequency, in-line analytics approach lets operators gain true real-time insights and detect anomalies that traditional low-frequency data collection would normally miss. HarperDB’s data management solution enables organizations to efficiently collect, process, and analyze these data streams alongside other unstructured data, including machine vision, geospatial, and Internet data feeds. By implementing HarperDB with Casne’s industry experts, clients can build data flows that greatly improve visibility and reduce costs. Event triggering and anomaly detection can also help identify and avoid bottlenecks. The joint solution extracts only the data pertinent to each client’s operations and forwards it directly to existing plant historians, SCADA systems, reporting systems, and work management systems, reducing unnecessary data volume and streamlining overall operations.
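Event triggering and anomaly detection can take many forms. As one illustrative example (an assumption, not the method the joint solution uses), the Python sketch below flags a reading when it falls more than `k` standard deviations from the mean of a rolling window of recent readings. The class name `RollingAnomalyDetector`, `window=50`, `k=4.0`, and the test signal are hypothetical choices for the example.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags readings more than k standard deviations from a rolling mean.

    Hypothetical sketch: window size and k are illustrative, not tuned values.
    """

    def __init__(self, window: int = 50, k: float = 4.0) -> None:
        self.history = deque(maxlen=window)  # most recent readings only
        self.k = k

    def update(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the recent window."""
        is_anomaly = False
        # Only judge once the window is full, so the baseline is meaningful.
        if len(self.history) == self.history.maxlen:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0:
                is_anomaly = abs(value - mu) > self.k * sigma
            else:
                # Perfectly flat window: any change counts as an event.
                is_anomaly = value != mu
        self.history.append(value)
        return is_anomaly


# Hypothetical signal: steady around 50.0 with small repeating noise,
# plus one out-of-pattern reading at index 120.
values = [50.0 + 0.1 * ((i % 5) - 2) for i in range(200)]
values[120] = 55.0

detector = RollingAnomalyDetector(window=50, k=4.0)
flagged = [i for i, v in enumerate(values) if detector.update(v)]
print(flagged)  # [120]
```

Only the single 55.0 reading is flagged; the normal noise stays well inside the band. Because the detector keeps just a fixed-size window, it is cheap enough to run next to the machine. The trade-off is that a rolling baseline adapts to slow drift, so gradual degradation would need a separate check.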

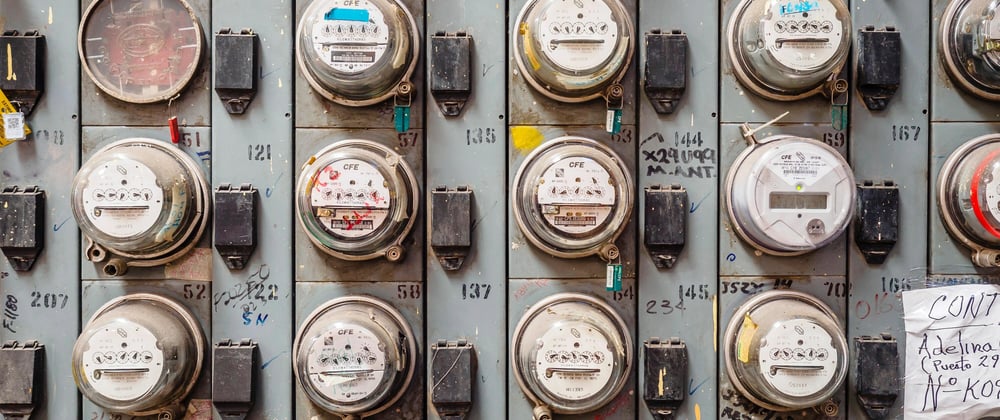

HarperDB and Casne recently completed a project for a client in the utilities/energy sector, capturing high-resolution data to predict and prevent equipment failures and downtime with a single data management solution from plant to cloud. You can access and download the case study here to learn more.
