In the previous post, we got Grafana up and running with a CloudWatch data source. While it gives us many insights into our AWS resources, it doesn't tell us how our applications are doing in our Kubernetes cluster. Knowing the resources our applications consume can help prevent disasters, such as an application consuming all the RAM on a node until the node stops functioning, leaving us with dead nodes and applications.

To view these metrics on our Grafana dashboard, we can add Prometheus as a data source and have Prometheus collect metrics from our nodes and applications. We will deploy Prometheus using Helm, and I will explain more along the way.

Requirements

- Kubernetes cluster, preferably AWS EKS

- Helm

The quick 5-minute install

What you get

- Prometheus Server

- Prometheus Node Exporter

- Prometheus Alert Manager

- Prometheus Push Gateway

- Kube State Metrics

Setup

Storage space

Before we begin, it is worth mentioning the storage requirements of Prometheus. The Prometheus server will run with a persistent volume (PV) attached, and its time-series database will use this volume to store the metrics it collects in the /data folder. Note that we have set our PV to 100Gi in the following line of values.yaml.

https://github.com/ryanoolala/recipes/blob/8a732de67f309a58a45dec2d29218dfb01383f9b/metrics/prometheus/5min/k8s/values.yaml#L765

## Prometheus server data Persistent Volume size

##

size: 100Gi

This will create a 100GiB EBS volume attached to our prometheus-server. So how big a disk do we need to provide? Several factors are involved, so it is generally difficult to calculate the right size without first knowing how many applications the cluster hosts, and you also need to account for growth in the number of nodes and applications.

Prometheus also has a default data retention period of 15 days. This prevents the amount of data from growing indefinitely and helps keep the data size in check, as metrics data older than 15 days is deleted.

The Prometheus docs suggest calculating the size using this formula, with bytes_per_sample being 1-2 bytes:

# https://prometheus.io/docs/prometheus/latest/storage/#operational-aspects

needed_disk_space = retention_time_seconds * ingested_samples_per_second * bytes_per_sample
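As a rough illustration of the formula above, here is a minimal Python sketch. The ingestion rate of 30,000 samples per second is a made-up number for illustration only, not a measurement from my cluster; on a running Prometheus, one way to estimate it is to query the rate of the prometheus_tsdb_head_samples_appended_total metric.

```python
def needed_disk_space_bytes(retention_days, samples_per_second, bytes_per_sample):
    """Apply the sizing formula from the Prometheus docs."""
    retention_time_seconds = retention_days * 24 * 60 * 60
    return retention_time_seconds * samples_per_second * bytes_per_sample


# Assumptions: 15-day retention (the Prometheus default), a hypothetical
# ingestion rate of 30,000 samples/second, and the upper bound of
# 2 bytes per sample.
estimate = needed_disk_space_bytes(
    retention_days=15, samples_per_second=30_000, bytes_per_sample=2
)
print(f"Estimated disk space: {estimate / 2**30:.1f} GiB")
```

The arithmetic is the easy part. The hard part is knowing the ingestion rate before the cluster is actually being scraped.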

This is difficult for me to calculate even with an existing setup, so I can imagine it being even harder if this is your first time setting up. As a guide, I'll share my current setup and disk usage, so you can gauge how much disk space you want to provision.

In my cluster, I am running

- 20 EC2 nodes

- ~700 pods

- Default scrape intervals

- 15 day retention

My current disk usage is ~70G.

In my region, 100GiB of storage costs about USD12/month. If that price is acceptable to you, I think it is a good starting point: you can skip the time and effort of calculating storage requirements and just start with this.

Note that I'm running Prometheus 2.x, which has an improved storage layer over Prometheus 1.x and has been shown to use less storage, hence the lower disk space requirement; see blog.

With this out of the way, let us get our Prometheus application started.

Installation

$ helm install prometheus stable/prometheus -f https://raw.githubusercontent.com/ryanoolala/recipes/master/metrics/prometheus/5min/k8s/values.yaml --create-namespace --namespace prometheus

Verify that all the Prometheus pods are running:

$ kubectl get pod -n prometheus

NAME READY STATUS RESTARTS AGE

prometheus-alertmanager-78b5c64fd5-ch7hb 2/2 Running 0 67m

prometheus-kube-state-metrics-685dccc6d8-h88dv 1/1 Running 0 67m

prometheus-node-exporter-8xw2r 1/1 Running 0 67m

prometheus-node-exporter-l5pck 1/1 Running 0 67m

prometheus-pushgateway-567987c9fd-5mbdn 1/1 Running 0 67m

prometheus-server-7cd7d486cb-c24lm 2/2 Running 0 67m

Connecting Grafana to Prometheus

To access the Grafana UI, run kubectl port-forward svc/grafana -n grafana 8080:80, go to http://localhost:8080 and log in with the admin user. If you need the credentials, see the previous post for instructions.

Go to the data source section under the settings wheel and click "Add data source".

If you've followed my steps, your Prometheus setup will have created a service named prometheus-server in the prometheus namespace. Since Grafana and Prometheus are hosted in the same cluster, we can simply use the service's internal DNS A record to let Grafana reach Prometheus.

In the URL textbox, enter http://prometheus-server.prometheus.svc.cluster.local:80. This is the DNS A record of the Prometheus service, which is resolvable from any pod in the cluster, including our Grafana pod. Your settings should look like this.

Click "Save & Test" and Grafana will tell you that the data source is working.
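The data source URL follows the usual in-cluster service DNS pattern of service name, namespace, then svc and the cluster domain. The small Python sketch below shows how the pieces fit together. The helper name service_url is hypothetical, and cluster.local is assumed as the cluster domain, which is the common default but can be configured differently.

```python
def service_url(service, namespace, port=80, cluster_domain="cluster.local"):
    """Build the in-cluster URL of a Kubernetes service (hypothetical helper)."""
    return f"http://{service}.{namespace}.svc.{cluster_domain}:{port}"


# The service name and namespace created by the Helm install in this guide.
print(service_url("prometheus-server", "prometheus"))
```

If you installed Prometheus under a different release name or namespace, swap those values in and use the resulting URL in Grafana.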

Adding Dashboards

Now that Prometheus is set up and has started collecting metrics, we can start visualizing the data. Here are a few dashboards to get you started.

https://grafana.com/grafana/dashboards/315

https://grafana.com/grafana/dashboards/1860

https://grafana.com/grafana/dashboards/11530

Mouse over the "+" icon and select "Import", then paste the dashboard ID into the textbox and click "Load".

Select the Prometheus data source we added in the previous step from the drop-down.

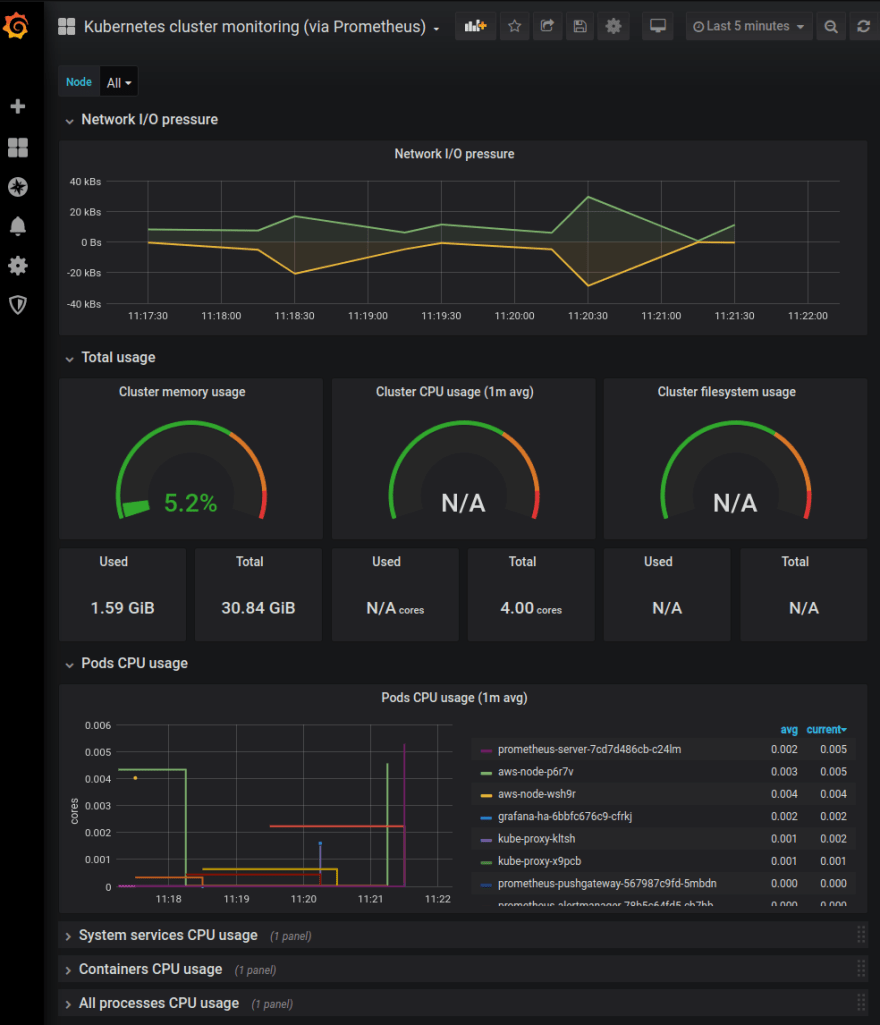

You will have a dashboard that looks like this

You may have noticed "N/A" in a few of the dashboard panels. This is a common problem in various dashboards, caused by incompatible versions of Prometheus or Kubernetes, changes in metric labels, and so on.

We will have to edit the panel and debug the queries to fix them. If there are too many errors, I suggest trying other dashboards until you find one that works and fits your needs.

How does it work?

You may have wondered how all these metrics became available even though you simply deployed Prometheus without configuring anything other than disk space. To understand the architecture of Prometheus, check out their documentation. I've attached an architecture diagram from the docs here for reference.

We will keep it simple by focusing only on how we retrieve node and application (running pod) metrics. Recall that at the start of the article, we listed the Prometheus components you get by following this guide.

The prometheus-server pod pulls metrics over HTTP from various sources, most typically from their /metrics endpoint.

Node Metrics

When we installed Prometheus, a prometheus-node-exporter DaemonSet was created. This ensures that every node in the cluster runs one node-exporter pod, which is responsible for collecting node metrics and exposing them on its /metrics endpoint.
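To give a feel for what a /metrics endpoint returns, here is a minimal Python sketch that parses a few lines of the Prometheus text exposition format. The sample values are made up for illustration, and the parser is deliberately simplified: it skips comment lines and assumes samples carry no trailing timestamp.

```python
# Illustrative sample only; the values are invented, not real node data.
SAMPLE = """\
# HELP node_memory_MemAvailable_bytes Memory information field MemAvailable_bytes.
# TYPE node_memory_MemAvailable_bytes gauge
node_memory_MemAvailable_bytes 2.5e+09
node_cpu_seconds_total{cpu="0",mode="idle"} 1234.5
"""


def parse_metrics(text):
    """Map each series (metric name plus labels) to its sample value."""
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        series, _, value = line.rpartition(" ")
        samples[series] = float(value)
    return samples


for series, value in parse_metrics(SAMPLE).items():
    print(series, value)
```

Prometheus does this parsing for us on every scrape. The sketch is only meant to show that the endpoint serves plain text with one sample per line.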

Application Metrics

prometheus-server discovers pods through the Kubernetes API and looks for pods with specific annotations. You will usually see the following annotations in the deployment configuration of various applications.

metadata:
  annotations:
    prometheus.io/path: /metrics
    prometheus.io/port: "4000"
    prometheus.io/scrape: "true"

These are what prometheus-server looks for when deciding which pods to scrape for metrics.

So how is this configured?

Prometheus loads its scrape configuration from a file called prometheus.yml, which is a ConfigMap mounted into the prometheus-server pod. When installing with the Helm chart, this file is configurable inside values.yaml; see the source code at values.yaml#L1167. The scrape targets are organized into jobs, and you will see several jobs configured by default, each describing how and when to scrape a specific kind of target.

An example for our application metrics is found at

# https://github.com/ryanoolala/recipes/blob/8a732de67f309a58a45dec2d29218dfb01383f9b/metrics/prometheus/5min/k8s/values.yaml#L1444

- job_name: 'kubernetes-pods'
  kubernetes_sd_configs:
    - role: pod
  relabel_configs:
    - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
      action: keep
      regex: true
    - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
      action: replace
      target_label: __metrics_path__
      regex: (.+)
    - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
      action: replace
      regex: ([^:]+)(?::\d+)?;(\d+)
      replacement: $1:$2
      target_label: __address__
    - action: labelmap
      regex: __meta_kubernetes_pod_label_(.+)
    - source_labels: [__meta_kubernetes_namespace]
      action: replace
      target_label: kubernetes_namespace
    - source_labels: [__meta_kubernetes_pod_name]
      action: replace
      target_label: kubernetes_pod_name

This is what configures prometheus-server to scrape pods with the annotations we talked about earlier.
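To make the first three relabel rules more concrete, here is a rough Python sketch of what they do to a single pod. It is a simplified simulation, not Prometheus code: the function name scrape_target and the example pod address are hypothetical, and it assumes the default http scheme and the default /metrics path.

```python
import re

# Same pattern as the __address__ rule. Prometheus joins the source labels
# with ";" and anchors the regex, hence fullmatch below.
ADDRESS_RE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def scrape_target(address, annotations):
    """Return the URL Prometheus would scrape for a pod, or None if dropped."""
    # Rule 1 (keep): drop pods whose scrape annotation is not exactly "true".
    if annotations.get("prometheus.io/scrape") != "true":
        return None
    # Rule 2 (replace): a non-empty path annotation overrides the default path.
    path = annotations.get("prometheus.io/path") or "/metrics"
    # Rule 3 (replace): swap in the annotated port if the joined value matches.
    port = annotations.get("prometheus.io/port", "")
    match = ADDRESS_RE.fullmatch(f"{address};{port}")
    if match:
        address = f"{match.group(1)}:{match.group(2)}"
    return f"http://{address}{path}"


annotations = {
    "prometheus.io/path": "/metrics",
    "prometheus.io/port": "4000",
    "prometheus.io/scrape": "true",
}
# 10.0.1.23:8080 is a made-up pod address for illustration.
print(scrape_target("10.0.1.23:8080", annotations))
```

The remaining rules only copy pod labels, the namespace and the pod name onto the scraped series, so they affect how the metrics are labelled rather than which pods are scraped.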

Wrapping up

With this, you will have a functioning metric collector and dashboards to help kick-start your observability journey in metrics. The Prometheus we've set up in this guide will provide a healthy setup for most systems.

However, there is one more limitation to take note of: this Prometheus is not set up for High Availability (HA).

As we explained in the previous post, using an Elastic Block Store (EBS) volume does not allow us to scale out prometheus-server to provide better service uptime. If the Prometheus pod restarts, possibly due to running out of memory (OOMKilled) or unhealthy nodes, you will lose metrics for that period and be blind to the current situation. This can be an annoying problem if you have alerts set up using those metrics.

The solution to this problem is something I have yet to deploy myself. When I do, I will write part 3 of this series, but if you are interested in having a go at it, check out Thanos.

I hope this has been simple and easy enough to follow. Even if your Kubernetes cluster is not on AWS, this Prometheus setup is still relevant and can be deployed on any system that has a StorageClass configured to automatically create persistent volumes in your infrastructure.
