This is a Plain English Papers summary of a research paper called AI Models Achieve Better Data Compression Through Code Generation Than Direct Compression, Study Shows. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

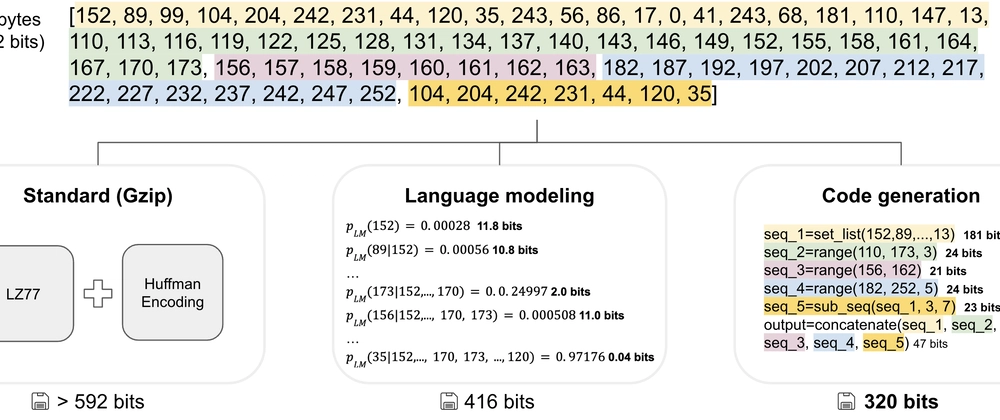

- The KoLMogorov Test measures a language model's understanding by its ability to compress data through code generation

- Compression provides a universal metric for AI capabilities across different domains

- LLMs can achieve strong compression by generating executable code

- Code generation outperforms direct compression in most tested scenarios

- Higher compression correlates with better problem-solving capabilities

- Claude 3 Opus demonstrates superior compression abilities compared to other models
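The core idea in the bullets above is that a model "compresses" data by writing a program whose output is that data, so the program's length stands in for the compressed size. The sketch below illustrates this under loudly stated assumptions: it is not the paper's evaluation code, the helper names `run_program`, `code_compression_ratio`, and `zlib_ratio` are hypothetical, the convention that a program stores its result in a variable named `output` is invented for the example, length is measured in bytes of source code, zlib stands in for "direct compression", and the "generated" program is hand-written rather than produced by a model.

```python
import zlib
from typing import Optional


def run_program(source: str) -> bytes:
    """Execute a candidate program and return the bytes it assigns to `output`.

    Hypothetical convention for this sketch: the program must store its
    result as bytes in a variable named `output`.
    """
    namespace: dict = {}
    exec(source, namespace)  # only safe for trusted code; a real harness would sandbox this
    return namespace["output"]


def code_compression_ratio(data: bytes, source: str) -> Optional[float]:
    """Program length divided by data length, or None if the program is wrong."""
    if run_program(source) != data:
        return None  # a program that does not reproduce the data exactly earns no credit
    return len(source.encode()) / len(data)


def zlib_ratio(data: bytes) -> float:
    """Baseline: size after a general-purpose compressor, divided by data length."""
    return len(zlib.compress(data, 9)) / len(data)


# A highly regular sequence: the squares of 0..199, one per line.
data = "\n".join(str(i * i) for i in range(200)).encode()

# A program a model might emit for this data (hand-written here for illustration).
program = 'output = "\\n".join(str(i * i) for i in range(200)).encode()'

print(f"code ratio: {code_compression_ratio(data, program):.3f}")
print(f"zlib ratio: {zlib_ratio(data):.3f}")
```

Because a program only counts if it reproduces the data exactly, the correctness check in `code_compression_ratio` matters as much as the length measurement. On regular data like this, a short program beats a general-purpose compressor by a wide margin; on noisy data with no compact generating rule, that advantage would be expected to shrink.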

Plain English Explanation

The researchers propose a new way to measure how well AI systems understand the world: by testing how efficiently they can compress data. This approach, called the KoLMogorov Test, asks an AI t...
