This is a Plain English Papers summary of a research paper called AI Vision Models Often Look at Wrong Image Areas When Answering Questions, Study Finds. If you like these kinds of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

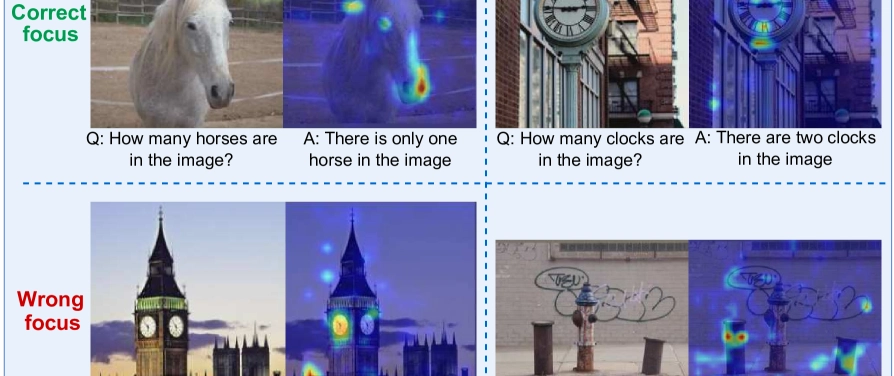

- Study examines what large vision-language models (VLMs) actually look at when answering questions

- Introduces a novel measure called Answer-Driven Attention to track attention patterns during response generation

- Analyzes multiple popular VLMs including LLaVA, InstructBLIP, and MiniGPT-4

- Finds VLMs often don't focus on the right image regions while answering questions

- Proposes answer-aware instruction tuning to improve model performance

Plain English Explanation

When we ask a question about an image to a vision-language model like those powering advanced AI assistants, we assume the model is looking at the relevant parts of the image to answer correctly. This paper challenges this assumption by investigating what these models actually ...

Top comments (0)