This is a Plain English Papers summary of a research paper called SkyLadder: 3x Faster AI Training by Gradually Increasing Text Length During Learning. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

- SkyLadder is a novel approach for more effective and efficient large language model pretraining

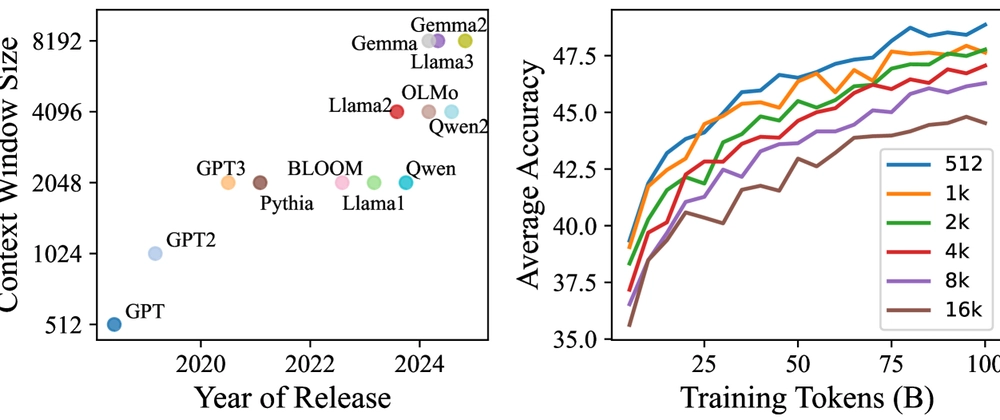

- Introduces context window scheduling that gradually increases sequence length during training

- Achieves 2-3x faster training than standard methods while maintaining or improving performance

- Scales effectively to 128k context window without position interpolation

- Demonstrates superior long-context understanding compared to traditional methods
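The context window scheduling described in the list above can be sketched roughly as follows. This is a minimal illustration, not the paper's implementation: the linear ramp, the function names `context_window_at` and `split_into_windows`, and every parameter value (`start_len`, `final_len`, `ramp_fraction`, `multiple`) are assumptions chosen for the example.

```python
def context_window_at(step, total_steps, start_len=32, final_len=8192,
                      ramp_fraction=0.5, multiple=32):
    """Return the context window (in tokens) to use at a given training step.

    Hypothetical linear schedule: grow from start_len to final_len over the
    first ramp_fraction of training, then hold at final_len.
    """
    ramp_steps = max(1, int(total_steps * ramp_fraction))
    if step >= ramp_steps:
        return final_len
    # Linear interpolation between the short window and the full window.
    length = start_len + (final_len - start_len) * step / ramp_steps
    # Round down to a multiple so window sizes stay regular.
    length = int(length) // multiple * multiple
    return max(start_len, min(final_len, length))


def split_into_windows(token_ids, window):
    """Cut a flat token stream into consecutive chunks of `window` tokens,
    dropping any incomplete tail."""
    usable = len(token_ids) - len(token_ids) % window
    return [token_ids[i:i + window] for i in range(0, usable, window)]


if __name__ == "__main__":
    total_steps = 1000
    for step in (0, 100, 250, 500, 999):
        print(step, context_window_at(step, total_steps))
```

In a training loop, the value returned by `context_window_at` would decide how the token stream is chunked for the current step, so early batches contain many short sequences and later batches contain fewer long ones.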

Plain English Explanation

Training large language models (LLMs) to handle long texts is expensive and time-consuming. The traditional approach trains a model at its maximum context length from the start, which wastes resources because most of the learning happens on shorter sequences anyway.
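To get a feel for where the waste comes from, here is a back-of-the-envelope sketch. It assumes standard dense self-attention, where attention work per token grows in proportion to the window length, and a fixed number of tokens per training step. It covers only the attention term, ignores the rest of the model, and is not a reproduction of the paper's measurements. The helper names and all numbers are hypothetical.

```python
def linear_window(step, ramp_steps, start_len, final_len):
    """Hypothetical schedule: ramp linearly to final_len, then stay there."""
    if step >= ramp_steps:
        return final_len
    return start_len + (final_len - start_len) * step // ramp_steps


def relative_attention_cost(total_steps, ramp_steps, start_len, final_len):
    """Attention cost of a scheduled run divided by that of a fixed-length run.

    Assumption: with a fixed token count per step, attention cost for a step
    is proportional to the context window used in that step.
    """
    scheduled = sum(linear_window(s, ramp_steps, start_len, final_len)
                    for s in range(total_steps))
    fixed = final_len * total_steps
    return scheduled / fixed


if __name__ == "__main__":
    ratio = relative_attention_cost(total_steps=1000, ramp_steps=500,
                                    start_len=32, final_len=8192)
    print(f"relative attention cost: {ratio:.2f}")
```

Under these assumptions, a run that ramps up over the first half of training spends roughly three quarters of the attention compute of a run that uses the full window throughout. The actual end-to-end saving depends on how much of the total cost attention accounts for.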

SkyLadder take...
