This is a Plain English Papers summary of a research paper called Small Language Models Learn Complex Reasoning Through RL: Study Shows What Training Methods Work Best.

Overview

- Study examines how reinforcement learning (RL) improves reasoning abilities in small language models

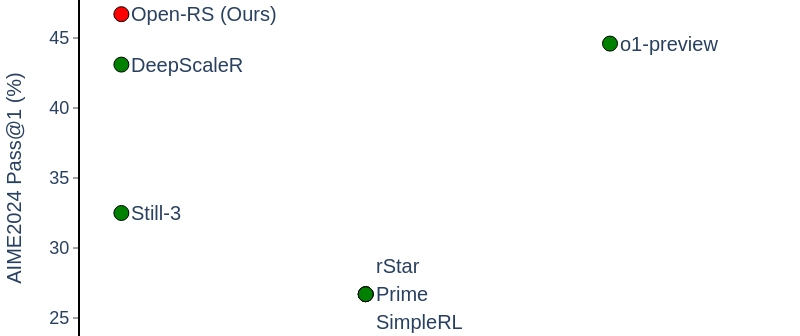

- Researchers tested 3B- and 7B-parameter models on complex reasoning tasks

- Direct Preference Optimization (DPO) and Proximal Policy Optimization (PPO) were applied to these models

- High-quality dataset curation proved essential for effective training

- RL training significantly improved performance on logical and mathematical reasoning tasks

- Combining PPO with DPO yielded the best results in mathematical reasoning

- Study provides practical guidance for training smaller models to reason effectively
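
DPO and PPO are standard training methods, but this summary does not state the exact loss settings the study used. The sketch below shows the textbook per-example objectives for each, assuming sequence-level log-probabilities are already available. The function names and the defaults `beta=0.1` and `epsilon=0.2` are illustrative assumptions, not values taken from the paper, and the code does not show how the study combined the two methods.

```python
import math


def log_sigmoid(x: float) -> float:
    # Numerically stable log(sigmoid(x)) that avoids overflow for large |x|.
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one preference pair (hypothetical helper).

    Each argument is the summed log-probability of a full response under
    either the trained policy or the frozen reference model. beta=0.1 is
    an illustrative default, not the study's setting.
    """
    # How much more the policy favors each response than the reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    # The loss shrinks as the preferred response gains relative probability.
    return -log_sigmoid(beta * (chosen_margin - rejected_margin))


def ppo_clip_objective(new_logp: float, old_logp: float,
                       advantage: float, epsilon: float = 0.2) -> float:
    """Standard PPO clipped surrogate for one action (to be maximized).

    epsilon=0.2 is a common default, assumed here for illustration.
    """
    # Probability ratio between the updated policy and the sampling policy.
    ratio = math.exp(new_logp - old_logp)
    # Keep the ratio inside [1 - epsilon, 1 + epsilon].
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    # Taking the minimum gives a pessimistic bound that discourages large updates.
    return min(ratio * advantage, clipped_ratio * advantage)


if __name__ == "__main__":
    # When the policy still matches the reference, the DPO loss is log(2).
    print(dpo_loss(-5.0, -7.0, -5.0, -7.0))
    # A doubled action probability with a positive advantage is capped at 1 + epsilon.
    print(ppo_clip_objective(math.log(2.0), 0.0, 1.0))
```

Both functions operate on single scalars to keep the idea visible. A real training loop would compute the same quantities over batches of token log-probabilities with a tensor library.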

Plain English Explanation

Making small language models better at logical thinking is possible through reinforcement learning. This study shows how we can teach these compact models to solve complex reasoning problems without needing massive computing resources.

The researchers focused on models with ju...

Top comments (0)