Imagine running LLMs and GenAI models with a single Docker command — locally, seamlessly, and without the GPU fuss. That future is here.

🚢 Docker Just Changed the AI Dev Game

Docker has officially launched Docker Model Runner, and it’s a game-changer for developers working with AI and machine learning. If you’ve ever dreamed of running language models, generating embeddings, or building AI apps right on your laptop — without setting up complex environments — Docker has your back.

Docker Model Runner enables local inference of AI models through a clean, simple CLI — no need for CUDA drivers, complicated APIs, or heavy ML stacks. It brings the power of containers to the world of AI like never before.

✅ TL;DR - What Can You Do With It?

- Pull prebuilt models like `llama3`, `smollm`, and `deepseek` directly from Docker Hub

- Run them locally via `docker model run`

- Use the OpenAI-compatible API from containers or the host

- Build full-fledged GenAI apps with Docker Compose

- All this — on your MacBook with Apple Silicon, with Windows support coming soon

🧪 Hands-on: How It Works

Docker’s approach is dead simple — just the way we like it.

🧰 Install the Latest Docker Desktop

Make sure you’re using a build that supports Model Runner.

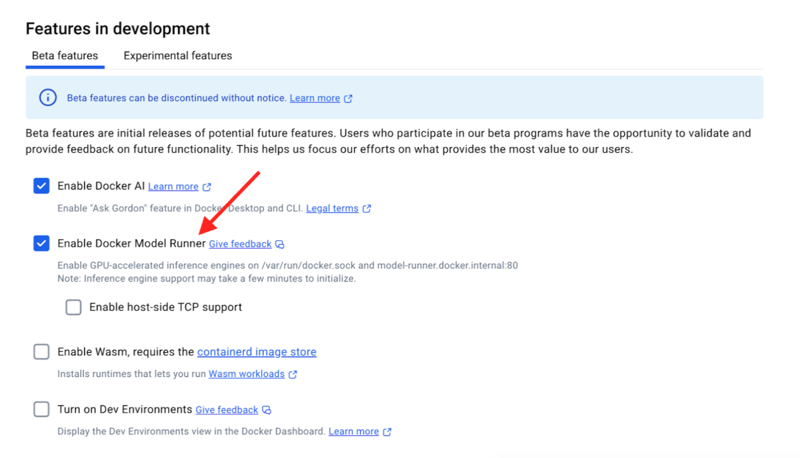

⚙️ Enable Model Runner

Install Docker Desktop 4.40 or later.

Navigate to Docker Desktop → Settings → Features in Development → Enable Model Runner → Apply & Restart.

🚀 Try It Out in 5 Steps

docker model status # Check it’s running

docker model list # List the models you have pulled locally

docker model pull ai/llama3.2:1B-Q8_0

docker model run ai/llama3.2:1B-Q8_0 "Hello"

#Instantly receive inference results:

#Hello! How can I assist you today?

docker model rm ai/llama3.2:1B-Q8_0

It feels almost magical. Once the model is loaded, responses come back quickly: there is no remote server to spin up and no network round trip to a hosted API. Just raw, local AI.
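If you'd rather script those five steps than type them, here is a minimal Python sketch that simply shells out to the same `docker model` subcommands shown above. The helper names (`model_cmd`, `run_prompt`) are made up for illustration, and it assumes Docker Desktop with Model Runner enabled is already installed.

```python
import subprocess

MODEL = "ai/llama3.2:1B-Q8_0"  # same tag used in the steps above


def model_cmd(*args: str) -> list[str]:
    """Build the argv for a `docker model ...` call (hypothetical helper)."""
    return ["docker", "model", *args]


def run_prompt(model: str, prompt: str) -> str:
    """Pull the model, run a one-shot prompt, and return whatever it prints."""
    # check=True raises CalledProcessError if the CLI exits non-zero,
    # e.g. when Model Runner is not enabled.
    subprocess.run(model_cmd("pull", model), check=True)
    result = subprocess.run(
        model_cmd("run", model, prompt),
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout.strip()
```

Calling `run_prompt(MODEL, "Hello")` should hand back the model's reply as a string. Keeping the argv builder separate from the subprocess call makes it easy to unit-test the commands without Docker present.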

🔌 OpenAI API Compatibility = Integration Bliss

Model Runner exposes OpenAI-compatible endpoints, so you can plug in your existing tools (LangChain, LlamaIndex, etc.), typically by changing only the base URL they point at.

Use it:

- Inside containers: `http://ml.docker.internal/`

- From host (via socket): `--unix-socket ~/.docker/run/docker.sock`

- From host (via TCP): reverse proxy to port 8080
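To make the "OpenAI-compatible" part concrete, here is a rough sketch of a chat request using only the Python standard library. It targets the container URL listed above. The `/engines/v1/chat/completions` path is an assumption on my part and may differ between Docker Desktop versions, and `build_chat_request` and `ask` are illustrative names, not part of any Docker SDK.

```python
import json
import urllib.request

# Base URL from the list above (inside a container). From the host, swap in
# whatever TCP address you proxied to, e.g. http://localhost:8080.
BASE_URL = "http://ml.docker.internal"
# ASSUMPTION: the OpenAI-style routes live under /engines/v1. Check the docs
# for your Docker Desktop version if you get a 404.
CHAT_PATH = "/engines/v1/chat/completions"


def build_chat_request(
    prompt: str,
    model: str = "ai/llama3.2:1B-Q8_0",
    base_url: str = BASE_URL,
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        base_url.rstrip("/") + CHAT_PATH,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, **kwargs) -> str:
    """Send the request and extract the reply text from the response."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs)) as resp:
        body = json.load(resp)
    # OpenAI-style responses put the text under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With a model pulled and Model Runner up, `ask("Hello")` should return the reply text. An OpenAI client library would do the same job once its base URL points at the same address.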

🤖 Supported Models (So Far)

Here are a few gems you can run today:

- `llama3.2:1b`

- `smollm135m`

- `mxbai-embed-large-v1`

- `deepseek-r1-distill`

- …and more public pre-trained models
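Since `mxbai-embed-large-v1` is an embedding model, here is a small sketch of how you might fetch vectors and compare them. Treat the details as assumptions: the URL reuses the container address from earlier with an assumed `/engines/v1/embeddings` path, the `ai/mxbai-embed-large` tag is my guess at the Docker Hub name, and `embed` and `cosine` are just illustrative helpers.

```python
import json
import math
import urllib.request

# ASSUMPTIONS: container base URL from the post plus an assumed /engines/v1
# prefix; the model tag is a guess, so confirm it on Docker Hub.
EMBED_URL = "http://ml.docker.internal/engines/v1/embeddings"
EMBED_MODEL = "ai/mxbai-embed-large"


def embed(texts: list[str]) -> list[list[float]]:
    """POST texts to the embeddings route and return one vector per text."""
    req = urllib.request.Request(
        EMBED_URL,
        data=json.dumps({"model": EMBED_MODEL, "input": texts}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    # OpenAI-style responses carry vectors under data[i].embedding.
    return [item["embedding"] for item in body["data"]]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0
```

A typical use would be `v1, v2 = embed(["docker", "containers"])` followed by `cosine(v1, v2)` to get a rough similarity score between the two texts.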

💬 Dev-Friendly, Community-Driven

What makes this release truly exciting is how Docker involved its community of Captains and early testers. From the Customer Zero Release to the final launch, feedback was the fuel behind the polish.

🔮 What’s Next?

- ✅ Windows support (coming soon)

- ✅ CI/CD integration

- ✅ GPU acceleration in future updates

- 🧠 More curated models on Docker Hub

🚨 Final Thoughts

Docker Model Runner is not just a feature — it’s a shift. It’s the bridge between AI and DevOps, between local dev and cloud inference.

No more juggling APIs. No more GPU headaches. Just type, pull, run.

AI, meet Dev Experience. Powered by Docker.

🚀 Try it today
